This repository provides the official PyTorch implementation for the following paper:

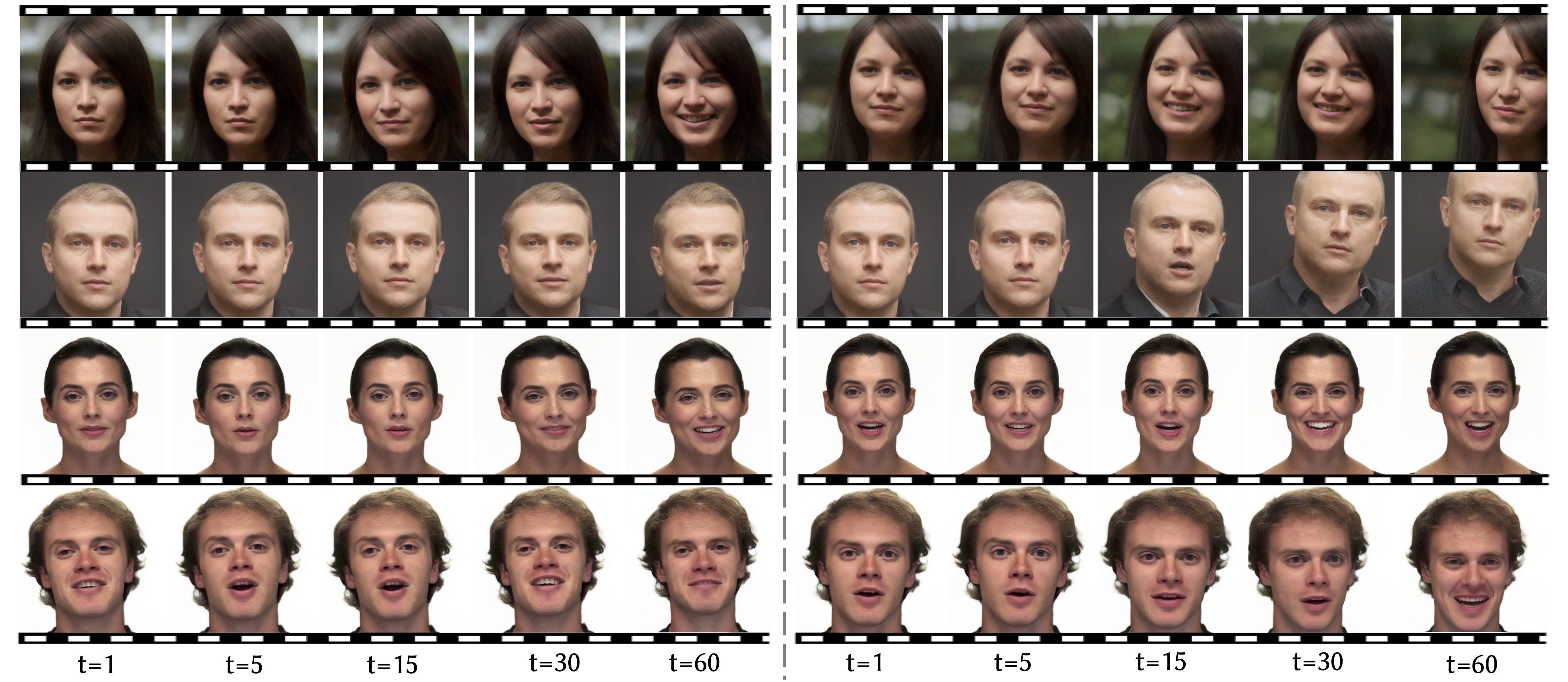

StyleFaceV: Face Video Generation via Decomposing and Recomposing Pretrained StyleGAN3

Haonan Qiu, Yuming Jiang, Hang Zhou, Wayne Wu, and Ziwei Liu

arXiv, 2022.

From MMLab@NTU, affiliated with S-Lab, Nanyang Technological University, and SenseTime Research.

[Project Page] | [Paper] | [Demo Video]

- [07/2022] Paper and demo video are released.

- [07/2022] Code is released.

Clone this repo:

git clone https://github.com/arthur-qiu/StyleFaceV.git

cd StyleFaceV

Dependencies:

All dependencies for defining the environment are provided in environment/stylefacev.yaml.

We recommend using Anaconda to manage the python environment:

conda env create -f ./environment/stylefacev.yaml

conda activate stylefacev

Image Data: Unaligned FFHQ

Video Data: RAVDESS

Download the processed video data via this Google Drive, or process the data yourself via this repo.

Put all the data at the path "../data".

Transform the video data into .png form:

python scripts vid2img.py

Pretrained models can be downloaded from this Google Drive. Unzip the file and put them under the dataset folder with the following structure:

pretrained_models

├── network-snapshot-005000.pkl # StyleGAN3 checkpoint finetuned on both RAVDESS and unaligned FFHQ.

├── wing.ckpt # Face Alignment model from https://github.com/protossw512/AdaptiveWingLoss.

├── motion_net.pth # trained motion sampler.

├── pre_net.pth

└── pre_pose_net.pth

checkpoints/stylefacev

├── latest_net_FE.pth # appearance extractor + recomposition

├── latest_net_FE_lm.pth # first half of pose extractor

└── latest_net_FE_pose.pth # second half of pose extractor
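Before running the commands below, it can help to confirm that the files are where the listing above says they should be. The following is a minimal sketch, not part of the repository: the helper name `find_missing_files` is hypothetical, and it assumes both folders sit under the directory you run the commands from.

```python
from pathlib import Path

# Expected files, copied from the directory listing above.
EXPECTED_FILES = {
    "pretrained_models": [
        "network-snapshot-005000.pkl",
        "wing.ckpt",
        "motion_net.pth",
        "pre_net.pth",
        "pre_pose_net.pth",
    ],
    "checkpoints/stylefacev": [
        "latest_net_FE.pth",
        "latest_net_FE_lm.pth",
        "latest_net_FE_pose.pth",
    ],
}


def find_missing_files(root="."):
    """Return the expected files that are absent under `root`, as relative paths.

    `root` is an assumption: the directory that contains both
    `pretrained_models` and `checkpoints`.
    """
    root = Path(root)
    missing = []
    for folder, names in EXPECTED_FILES.items():
        for name in names:
            if not (root / folder / name).is_file():
                missing.append(f"{folder}/{name}")
    return missing


if __name__ == "__main__":
    missing = find_missing_files(".")
    if missing:
        print("Missing files:")
        for path in missing:
            print("  " + path)
    else:
        print("All expected files are in place.")
```

Pass a different `root` if the folders live elsewhere on your machine.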

python test.py --dataroot ../data/actor_align_512_png --name stylefacev \
--network_pkl=pretrained_models/network-snapshot-005000.pkl --model sample \
--model_names FE,FE_pose,FE_lm --rnn_path pretrained_models/motion_net.pth \
--n_frames_G 60 --num_test=64 --results_dir './sample_results/'

This stage is trained purely on image data and helps convergence.

python train.py --dataroot ../data/actor_align_512_png --name stylepose \
--network_pkl=https://api.ngc.nvidia.com/v2/models/nvidia/research/stylegan3/versions/1/files/stylegan3-r-ffhqu-256x256.pkl \
--model stylevpose --n_epochs 5 --n_epochs_decay 5
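The `--n_epochs` and `--n_epochs_decay` flags share their names with options in the pytorch-CycleGAN-and-pix2pix training framework, where the learning rate is held constant for `n_epochs` epochs and then decays linearly toward zero over `n_epochs_decay` epochs. Whether this repository follows the same rule is an assumption, not something stated here. Under that assumption, the schedule for `--n_epochs 5 --n_epochs_decay 5` can be sketched as follows (the function name `linear_lr_factor` and the base learning rate are illustrative only):

```python
def linear_lr_factor(epoch, n_epochs, n_epochs_decay, epoch_count=1):
    """Multiplier applied to the base learning rate at a zero-based epoch index.

    Assumed rule, borrowed from pytorch-CycleGAN-and-pix2pix and not confirmed
    for this repository: the factor is 1.0 for the first `n_epochs` epochs,
    then falls linearly toward zero over the next `n_epochs_decay` epochs.
    """
    return 1.0 - max(0, epoch + epoch_count - n_epochs) / float(n_epochs_decay + 1)


if __name__ == "__main__":
    base_lr = 0.0002  # hypothetical base learning rate, for illustration only
    for epoch in range(10):
        factor = linear_lr_factor(epoch, n_epochs=5, n_epochs_decay=5)
        print(f"epoch {epoch + 1:2d}: lr = {base_lr * factor:.6f}")
```

If the repository uses a different scheduler, the shape of the curve will differ; check the training options before relying on these numbers.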

python train.py --dataroot ../data/actor_align_512_png --name stylefacev_pre \
--network_pkl=pretrained_models/network-snapshot-005000.pkl \
--model stylepre --pose_path checkpoints/stylevpose/latest_net_FE.pth

You can also use pre_net.pth and pre_pose_net.pth from the pretrained_models folder.

python train.py --dataroot ../data/actor_align_512_png --name stylefacev_pre \
--network_pkl=pretrained_models/network-snapshot-005000.pkl --model stylepre \
--pre_path pretrained_models/pre_net.pth --pose_path pretrained_models/pre_pose_net.pth

python train.py --dataroot ../data/actor_align_512_png --name stylefacev \
--network_pkl=pretrained_models/network-snapshot-005000.pkl --model stylefacevadv \
--pose_path pretrained_models/pre_pose_net.pth \
--pre_path checkpoints/stylefacev_pre/latest_net_FE.pth \
--n_epochs 50 --n_epochs_decay 50 --lr 0.0002

python train.py --dataroot ../data/actor_align_512_png --name motion \
--network_pkl=pretrained_models/network-snapshot-005000.pkl --model stylernn \
--pre_path checkpoints/stylefacev/latest_net_FE.pth \
--pose_path checkpoints/stylefacev/latest_net_FE_pose.pth \
--lm_path checkpoints/stylefacev/latest_net_FE_lm.pth \
--n_frames_G 30

If you find this work useful for your research, please consider citing our paper:

@misc{https://doi.org/10.48550/arxiv.2208.07862,
  doi = {10.48550/ARXIV.2208.07862},
  url = {https://arxiv.org/abs/2208.07862},
  author = {Qiu, Haonan and Jiang, Yuming and Zhou, Hang and Wu, Wayne and Liu, Ziwei},
  keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences},
  title = {StyleFaceV: Face Video Generation via Decomposing and Recomposing Pretrained StyleGAN3},
  publisher = {arXiv},
  year = {2022},
  copyright = {arXiv.org perpetual, non-exclusive license}
}