Sparkify wants to analyze the data they've been collecting on songs and user activity on their new music streaming app. The analytics team is particularly interested in understanding what songs users are listening to. Currently, they don't have an easy way to query their data, which resides in a directory of JSON logs on user activity on the app, as well as a directory with JSON metadata on the songs in their app.

They'd like a data engineer to create a Postgres database with tables designed to optimize queries on song play analysis, and bring you on the project. Your role is to create a database schema and ETL pipeline for this analysis. You'll be able to test your database and ETL pipeline by running queries given to you by the analytics team from Sparkify and compare your results with their expected results.

You will create a Postgre database which will host data collected on songs and user activity on Sparkify's new music streaming app. First you will start by creating the database schema during which process we will define fact and dimension tables for a star schema. Then, you will create ETL pipelines which will transfer data from files in local directories into the tables in Postgre using both Python and SQL. Finally, you will conclude by testing the database and ETL pipelines using provided tests from the analytics team.

- python

- PostgreSQL

- Jupyter notebook

You will combine data from two local directories to create the fact and dimension tables.

The first dataset contains metadata about a song and the artist of that song which is saved in a JSON format. These files are partitioned by the first three letters of each song's track ID and are located under ./data/song_data/. A single song file has the following information:

| Fields | Description |

|---|---|

| num_songs | number of songs by artist |

| artist_id | artist id |

| artist_latitude | latitude coordinate if provided which depends on the artist's location |

| artist_longitude | longitude coordinate if provided which depends on the artist's location |

| artist_location | address or location of artist |

| artist | full name of artist |

| song_id | id used to identify song |

| title | title of song |

| duration | duration of song |

| year | year when song was published |

The second dataset contains log files generated by an event simulator based on the songs in the first dataset. This event simulation, simulates activity logs from the Sparkify music streaming app. These log files are partitioned by year and month and are located under ./data/log_data/. These files contain the following information:

| Fields | Description |

|---|---|

| artist | name of artist |

| auth | tracks whether the user logged in or logged out |

| firstName | user first name |

| lastName | user last name |

| gender | user gender |

| length | length of session/event |

| itemInSession | number of items for a specific session |

| level | tracks whether the user paid for the session or if the session was free |

| location | user location |

| method | HTTP methods |

| page | tracks page name such as 'NextSong', 'Home', 'Logout', 'Settings', 'Downgrade', 'Login', 'Help', 'Error', 'Upgrade' |

| registration | registration timestamp |

| sessionId | session id |

| song | song name |

| status | tracks the status of the request such as status 200, 307, 404 |

| ts | timestamp in millisecond |

| userAgent | operating system user agent |

| userId | user id |

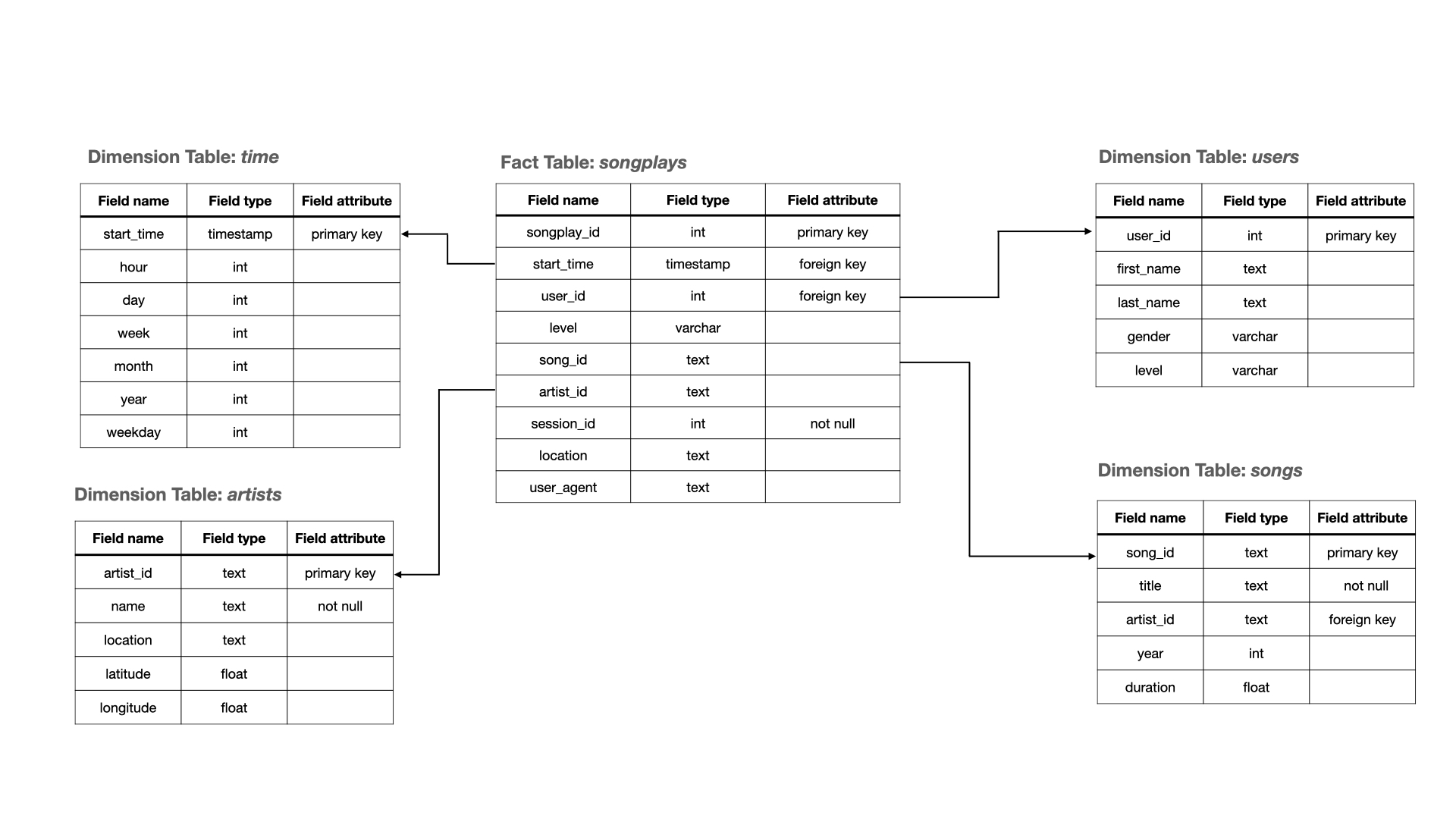

You will use the star database schema as data model for this ETL pipeline, which contains fact and dimension tables. An entity relationship diagram is shown below.

Clone this repository

git clone https://github.com/najuzilu/DM-PostgreSQL.git- conda

- python 3.8

- psycopg2

- pandas

- ipykernel

Create a virtual environment through Anaconda using

conda env create --file environment.ymlTo install the IPython kernel, run

python -m ipykernel install --user --name dm-postgre-sql- Run

create_tables.pyto create the tablespython create_tables.py

- Run

etl.pyto execute the ETL pipeline and load the data in PostgreSQLAlternatively, you can open and runpython etl.py

etl.ipynbfor an easy-to-use, interactive environment. - Open and run

test.ipynbto make sure the tables have been populated.

Yuna Luzi - @najuzilu

Distributed under the MIT License. See LICENSE for more information.