- This repository provides official PyTorch implementations for Latent-HSJA.

- This work was presented at the ECCV 2022 Workshop on Adversarial Robustness in the Real World.

- Dongbin Na, Sangwoo Ji, Jong Kim

- Pohang University of Science and Technology (POSTECH), Pohang, South Korea

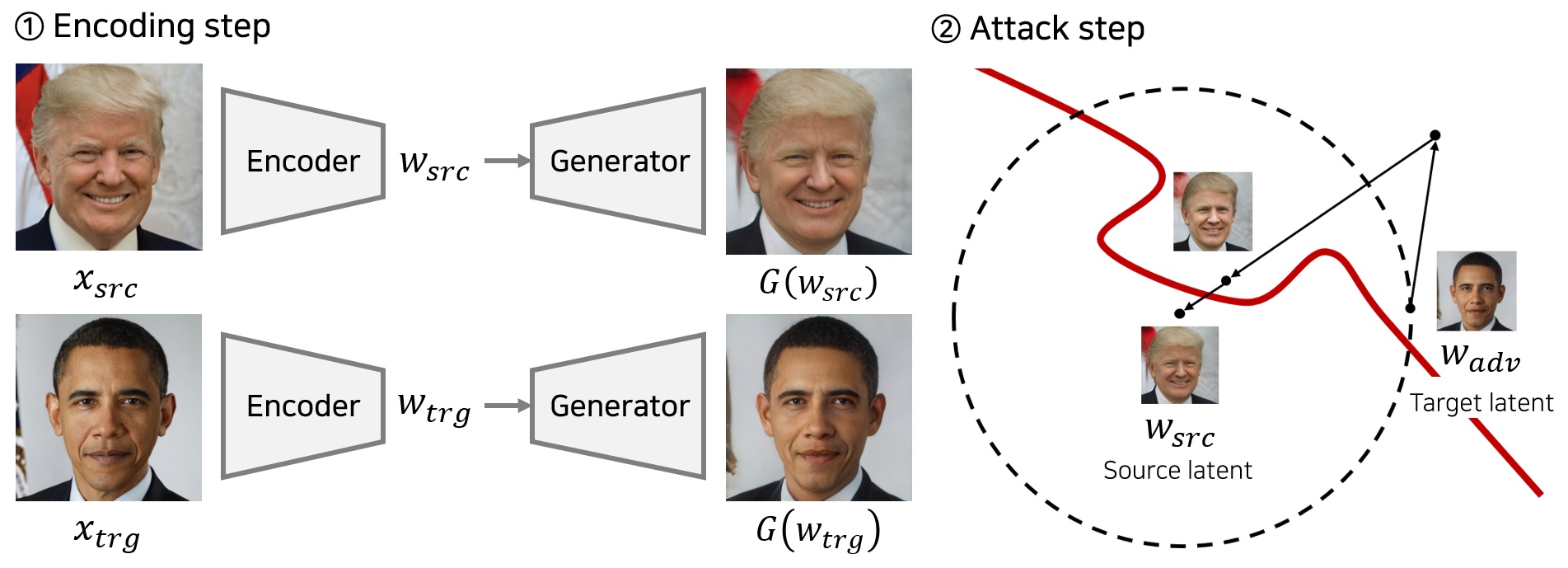

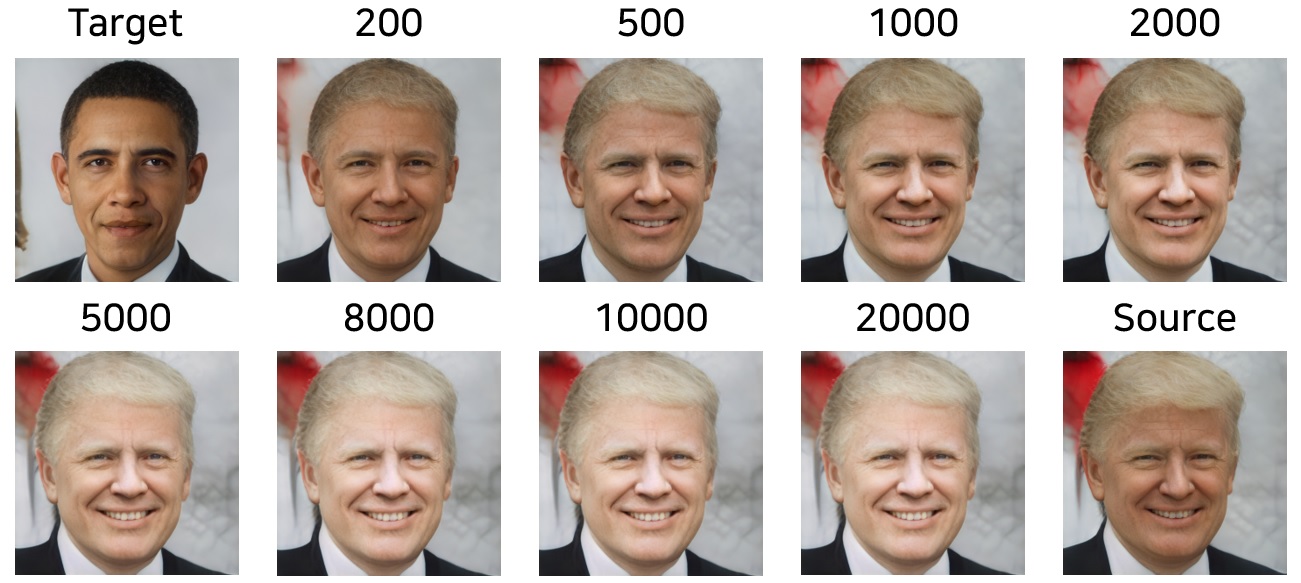

Adversarial examples are inputs intentionally crafted to fool a deep neural network. Recent studies have proposed unrestricted adversarial attacks that are not norm-constrained. However, previous unrestricted attack methods still struggle to fool real-world applications in a black-box setting. In this paper, we present a novel method for generating unrestricted adversarial examples using a GAN, where the attacker can access only the top-1 final decision of a classification model. Our method, Latent-HSJA, efficiently leverages the advantages of a decision-based attack in the latent space and successfully manipulates the latent vectors to fool the classification model.

- All datasets are based on the CelebAMask-HQ dataset.

- The original dataset contains 6,217 identities.

- The original dataset contains 30,000 face images.

- This dataset is curated for the facial identity classification task.

- There are 307 identities (celebrities).

- Each identity has 15 or more images.

- The dataset contains 5,478 images.

- There are 4,263 train images.

- There are 1,215 test images.

- Dataset download link (527MB)

- Dataset/
  - train/
    - identity 1/
    - identity 2/
    - ...
  - test/
    - identity 1/
    - identity 2/
    - ...
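As a quick sanity check after downloading, the per-class image counts of a split can be tallied from the directory layout above. The following is a minimal sketch using only the standard library; the function name `count_images_per_class` and the set of accepted file extensions are assumptions made for illustration and are not part of this repository.

```python
from pathlib import Path

# Assumption: images are stored as JPEG or PNG files; adjust if the dataset differs.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def count_images_per_class(split_dir):
    """Return {class_name: number_of_images} for one split folder (e.g. Dataset/train)."""
    split_dir = Path(split_dir)
    counts = {}
    # Each sub-folder of the split is treated as one class (one identity).
    for class_dir in sorted(p for p in split_dir.iterdir() if p.is_dir()):
        counts[class_dir.name] = sum(
            1
            for f in class_dir.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_EXTENSIONS
        )
    return counts
```

Calling `count_images_per_class("Dataset/train")` and summing the values should reproduce the number of train images listed above if the extension assumption holds; the same call on `Dataset/test` covers the test split.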

- This dataset is curated for the face gender classification task.

- The dataset contains 30,000 images.

- There are 23,999 train images.

- There are 6,001 test images.

- All face images are divided into two classes.

- There are 11,057 male images.

- There are 18,943 female images.

- Dataset download link (2.54GB)

- Dataset (1,000 test images version) download link (97MB)

- Dataset/
  - train/
    - male/
    - female/
  - test/
    - male/
    - female/

- Input images have a resolution of 256 × 256 (normalization 0.5).
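The note on normalization can be illustrated with a small sketch. It assumes that "normalization 0.5" means a per-channel mean of 0.5 and standard deviation of 0.5 applied to pixel values already scaled to [0, 1]; this reading is an assumption, and the helper names `normalize` and `denormalize` are hypothetical.

```python
def normalize(pixel, mean=0.5, std=0.5):
    """Map a pixel value in [0, 1] to [-1, 1] (assumed mean = std = 0.5)."""
    return (pixel - mean) / std


def denormalize(value, mean=0.5, std=0.5):
    """Invert normalize(): map a value in [-1, 1] back to [0, 1]."""
    return value * std + mean
```

Under this assumption, black (0.0) maps to -1.0 and white (1.0) maps to 1.0, and `denormalize` recovers the original pixel value for display.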

| | Identity recognition | Gender recognition |
|---|---|---|
| MNasNet1.0 | 78.35% (code / download) | 98.38% (code / download) |
| DenseNet121 | 86.42% (code / download) | 98.15% (code / download) |
| ResNet18 | 87.82% (code / download) | 98.55% (code / download) |
| ResNet101 | 87.98% (code / download) | 98.05% (code / download) |

- For this work, we utilize the pixel2style2pixel (pSp) encoder network.

- E(·) denotes the pSp encoding method that maps an image into a latent vector.

- G(·) is the StyleGAN2 model that generates an image given a latent vector.

- F(·) is a classification model that returns a predicted label.

- We can validate the performance of an encoding method given a dataset that contains (image x, label y) pairs.

- Consistency accuracy denotes the ratio of test images such that F(G(E(x))) = F(x) over all test images.

- Correctly consistency accuracy denotes the ratio of test images such that F(G(E(x))) = F(x) and F(x) = y over all test images.

- We can generate a dataset in which images are correctly consistent.
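The two metrics defined above can be sketched in a few lines. This is a minimal, framework-free illustration rather than the repository's evaluation code: `classify` stands in for F(·), `reconstruct` stands in for the composition x → G(E(x)), and both are hypothetical callables supplied by the caller.

```python
def consistency_metrics(samples, classify, reconstruct):
    """Return (consistency accuracy, correctly consistency accuracy).

    samples     : iterable of (x, y) pairs
    classify    : callable playing the role of F
    reconstruct : callable playing the role of x -> G(E(x))
    """
    total = consistent = correctly_consistent = 0
    for x, y in samples:
        total += 1
        pred = classify(x)
        if classify(reconstruct(x)) == pred:  # F(G(E(x))) = F(x)
            consistent += 1
            if pred == y:  # additionally F(x) = y
                correctly_consistent += 1
    if total == 0:
        raise ValueError("samples must not be empty")
    return consistent / total, correctly_consistent / total


def filter_correctly_consistent(samples, classify, reconstruct):
    """Keep only the (x, y) pairs with F(G(E(x))) = F(x) = y."""
    return [
        (x, y)
        for x, y in samples
        if classify(x) == y and classify(reconstruct(x)) == y
    ]
```

With real models, `classify` would wrap a trained classifier's top-1 prediction and `reconstruct` would wrap the pSp encoder followed by the StyleGAN2 generator; `filter_correctly_consistent` then yields the kind of correctly consistent subset described above.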

| | SIM | LPIPS | Consistency acc. | Correctly consistency acc. | |
|---|---|---|---|---|---|
| Identity Recognition | 0.6465 | 0.1504 | 70.78% | 67.00% | (code / dataset) |
| Gender Recognition | 0.6355 | 0.1625 | 98.30% | 96.80% | (code / dataset) |

If this work is useful for your research, please cite our paper:

@inproceedings{na2022unrestricted,

title={Unrestricted Black-Box Adversarial Attack Using GAN with Limited Queries},

author={Na, Dongbin and Ji, Sangwoo and Kim, Jong},

booktitle={European Conference on Computer Vision},

pages={467--482},

year={2022},

organization={Springer}

}