RFC: Consider retiring the PI1s ARMv6 (downgrading support to "experimental")

refack opened this issue · 63 comments

Is this worth putting on the Build WG agenda? Might be a good idea to lay out the case for retiring them in a sentence or two.

FYI I spent the day tinkering with the Pi1's. Ended up reprovisioning 4 of the Pi1's entirely and replacing two of the SD cards. So currently Jenkins sees them all as online (first time in a long while), but I'm dubious about a few of them. I've been keeping a log for the past 18 months of maintenance so I can better track which Pi's have repeating problems and might suggest more fundamental problems than just a dodgy OS or SD card. Over the next few days we should keep an eye on repeat failures on individual Pi's and take repeat offenders offline. If any of the ones I fixed up today show recurring problems, particularly ones that I replaced SD cards in, then it might be time to be retiring hardware.

I think we're in a better place with the whole complement online, and there's a ton of green, so there don't appear to be any problems (yet) with the machines I've brought back online.

This is not to nullify the original point though, it's certainly worth considering retirement, especially as the test suite grows and these are holding us back. Mean execution time for test-binary-arm seems to be ~42 minutes with the current test suite. That's up from under 30 minutes a couple of years ago.

If we drop the Pi1's, we're essentially dropping ARMv6 support unless we want to go an emulation route (that might be more pain than the Pi1's are though!). Maybe it's time though? There's very little ARMv6 hardware being shipped anymore, it's nearly 20 years old and the main consumers are users with old devices, like Pi1's.

Pretty much the only market data we have is the download numbers, see 2019 so far below. I honestly don't know how to frame this. ARMv6 is only 0.08%, but that's still more than ARM64 (whodathunk?) and double any of the IBM platforms. What do we do with that information?

(This is ordered by total recorded download count, not just for the time period I've selected, hence s390x being last even though it beats ppc64 which has been downloaded more times in its lifetime).

Since this has evolved (during today's Build WG meeting, anyway) into a discussion about dropping support for armv6/Pi 1 devices entirely: /ping @nodejs/hardware

We are considering dropping official ARMv6 support for Node 12 similar to x86 being dropped for Node 10. The cost of running our growing test suite and maintaining the hardware for it isn't small and each LTS release line locks us in for 3 years.

This would mean that Raspberry Pi 1 and 1+ would not have official binaries available for download from nodejs.org, but it would not prevent anyone from offering unofficial ones. Unfortunately building directly on ARMv6 hardware takes a long time and cross-compiling is extremely complicated.

We would like feedback from who this might impact so we can better understand the costs to users, we have very little insight.

Node 10 and below would still support ARMv6 and still ship binaries on nodejs.org for the duration of their support lifetime.

I think this would also affect Pi0 and Pi0w

The Pi 1 and 1+ have both been superseded by newer ARMv7 models.

The Pi Zero is ARMv6 and from its site:

End of life of the Raspberry Pi Zero is currently stated as being not before January 2022.

(edited to add link: see Specification tab on https://www.raspberrypi.org/products/raspberry-pi-zero/)

If that means this gen of the Pi Zero is here to stay for a while yet, there will be at least an 8-month window once Node 10 reaches EOL where there will be no official build of Node for the Pi Zero. Of course they might refresh the hardware before then.

We in the Node-RED project do have a user-base running on Pis of all shapes and sizes. The Pi Zero is pretty under powered, so I don't believe there are that many users on that particular device, but there will be some. We can try to gauge interest from our user community on this topic.

I use node on Pi0 boards. They’re definitely popular. not sure about node on them though—it’s awful slow.

still, if there’s a path forward for armv6, I’d like to see support retained.

Unfortunately this seems like one of those things that will be tough to gather much feedback on. I’ll try to put some feelers out in the nodebots community.

I use nodejs on A LOT of Pi0 boards. This would affect me for sure if I want to update the node version on those boards in the future :/

I'm a huge fan of Node with RPi and have written several guides (downloads exceeding 500,000) teaching people how to install and use Node on the RPi. While the RPi1 is obsolescing, the RPi0 (also based on the ARMv6 architecture) is alive and well and used in Node/IoT projects.

The PI Zero performance is quite acceptable for hobby IoT projects when using Node in conjunction with Raspbian Lite. It is the small form factor that makes the Pi Zero with Node so compelling and this helps make up for any deficits in the performance arena.

I hope support will continue for ARMv6 since the lack of support will alienate current and future users of Node.js until such time that the Pi Zero architecture is updated beyond ARMv6.

Am I correctly interpreting this table to indicate that Pi Zero devices have faster CPUs and more RAM than Pi 1 devices? And faster CPUs (but not more RAM) than Pi 2 devices?

And that a Pi Zero device was introduced/released as recently as last year?

I don't know how much effort/pain it will be or how effective it will be, but I wonder if swapping out the Pi 1 devices in CI for Pi Zero devices might be a way to reduce our ARMv6 pain? (Doesn't help with cross-compiling, though.)

Echoing what's been said above, the Pi Zero and Zero W are both still in production and are armv6. In my experience as the author of Raspi IO, which brings Raspberry Pi to the Johnny-Five Node.js robotics framework, a sizeable portion of my users use the Zero/Zero W. I don't have exact stats unfortunately as I don't gather that sort of data, but based on issues filed/people who reach out to me to ask questions/show off projects, I'd estimate that Zero/Zero W users make up anywhere from 25-40% of my userbase.

I would recommend waiting to drop armv6 support until the Zero and Zero W are EOLed which are stated as "being not before January 2022." I don't know when the Zero W is slated to be EOLed, but I'd imagine it's at most a year after the Zero.

I also just discovered that the original compute module, which has the same processor as the Pi 1, is still available for sale. I suspect it will likely be retired soon though (and admittedly I thought it already had been).

I also support @Trott's recommendation to replace the aging RPi1s with RPi Zero W's because they're still in production and, as mentioned, they have a faster CPU.

I can donate some lightly used hardware (Pi0-W, memory card, OTG dongle, etc) if you tell me where to ship it.

I'm happy to purchase + ship new Pi0/Pi0w as well if it would be helpful.

+1 to Tierney's suggestion. I'm sure we can get Microsoft to sponsor some hardware, if you're interested.

@thisdavej @boneskull In practice, do y'all use the official node binaries? @nebrius' raspi-io wiki pages point users to the Nodesource binaries.

My Beginner's Guide to Installing Node.js on a Raspberry PI also points people to use the NodeSource binaries. I direct people to articles like this one which instructs people to download binaries from https://nodejs.org/dist/ when they are seeking to run Node on the Pi Zero W or an RPi1.

Thanks for the feedback so far folks, it'd be great to hear more if others are reading this, we've had such a hard time connecting with the Node+ARM user community so we end up making guesses and assumptions.

To be honest, I hadn't even considered the Zero but that does seem like it might be a compelling reason to continue support if we can solve some speed problems we're facing.

I like the idea of ditching the Pi 1 B+'s with Zeros but the challenge is that we NFS-boot (edit: NFS-root is probably more accurate, we still load the initial bootcode via SD for full Pi compatibility) everything now and it's given us a lot more stability than relying on SD cards. NFS-boot without an ethernet port is going to be a bit of a challenge. Since the Zero can act as a device over USB and it's apparently possible to NFS-boot over USB, we might be able to come up with a novel setup for a cluster of Zeros.

I've ordered a Zero W to do some experimenting with. If practical, maybe we do another community-donor drive to get a cluster of them and aim for ~18 of them to future-proof ourselves a bit better. It'll depend on performance and the practicality of running a cluster with our infra. Procuring them might be a bit tricky since it seems that the Pi Foundation are enforcing a 1-per-customer limit on retailers at the moment.

I always use NodeSource binaries on Linux.

^ FWIW the NodeSource packages support ARMv7, so they'll only work on Pi 2 and upward, we're talking about ARMv6 binaries here which will only impact Pi 1, 1+ and Pi Zero. Plus other miscellaneous hardware, although it's such an old architecture.

Thanks for the update @rvagg, I apparently missed that this happened. I updated my install instructions accordingly to use the NodeSource PPAs for the 2/3, and the binaries from nodejs.org for the Zero/1.

Like the others above me:

- I have a bunch of Pi0's used in hobby projects

- I'm willing to donate a Pi0 or three to help the cause

- Official ARMv6 binaries are ideal, but could live with unofficial builds if need be.

I use a Pi Zero frequently with IoT and I am keen on using NodeJS with some machine learning problems as well as running later editions of Angular on the Raspberry Pi Zero. I hope that the support continues.

I use NodeJS on the Raspberry Pi Zero W for personal projects and I would be sad to see the build for ARM 6 disappear.

Now, I understand the headache of maintaining several architectures... Do what's best for NodeJS.

Installing NodeJS is literally the third thing I do on every Pi-based project, after setting up networking and installing vim. I'm not a Python person, and don't need to be thanks to the efforts of the community with NPM packages for supporting so much hardware. I've lost count of the number of Pi Zeros I've got in my possession; yeah, they're slow, but they're capable enough to do the tasks they're required for.

If I have to compile it myself, so be it. I'll be sad that there's an extra step for me to run and maintain, but my first 'go' with NodeJS was in 2010 and I had to compile that myself (there wasn't even Windows support back then) so I will do what I need to to get my JS environment!

@rvagg I think we could use the same approach as we do for the Pi1's; would we just need USB-to-ethernet dongles?

You'll need a micro USB to RJ45 adapter of some kind. It looks like many of the cheap ones require kernel patching to work with the Pi Zero/Pi Zero W as explained in the comments on this Stack Exchange answer. The two most promising options I'm seeing (but have not tested) are:

- Ethernet Hub and USB Hub w/ Micro USB OTG Connector - see review here. You'd also get three USB ports for free which you won't need.

- Ethernet Adapter for Chromecast - this is purported to work out of the box according to answers on this RPi forum.

Perhaps others have direct experience and even better options to recommend to achieve Pi Zero RJ45 connectivity.

armv6 covers many of the millions of RPis manufactured and in use. The Zero is also unlikely to be upgraded with an armv7 chip from what we know.

I would hate to see all those devices deprecated or "experimental" which could be almost the same thing.

What's the primary problem? Unreliable SD cards?

I wonder if there's a way you can boot with a read-only SD card and then use a USB primary partition on an external rotary HDD? There appears to be a way to netboot over USB -> https://dev.webonomic.nl/how-to-run-or-boot-raspbian-on-a-raspberry-pi-zero-without-an-sd-card

Alex

The primary problem as I understand it is: We do all our armv6 stuff on a cluster of Raspberry Pi 1 devices and it is the big bottleneck for a lot of things now. @rvagg is getting a Zero to play around with and maybe see if using those will appreciably speed stuff up.

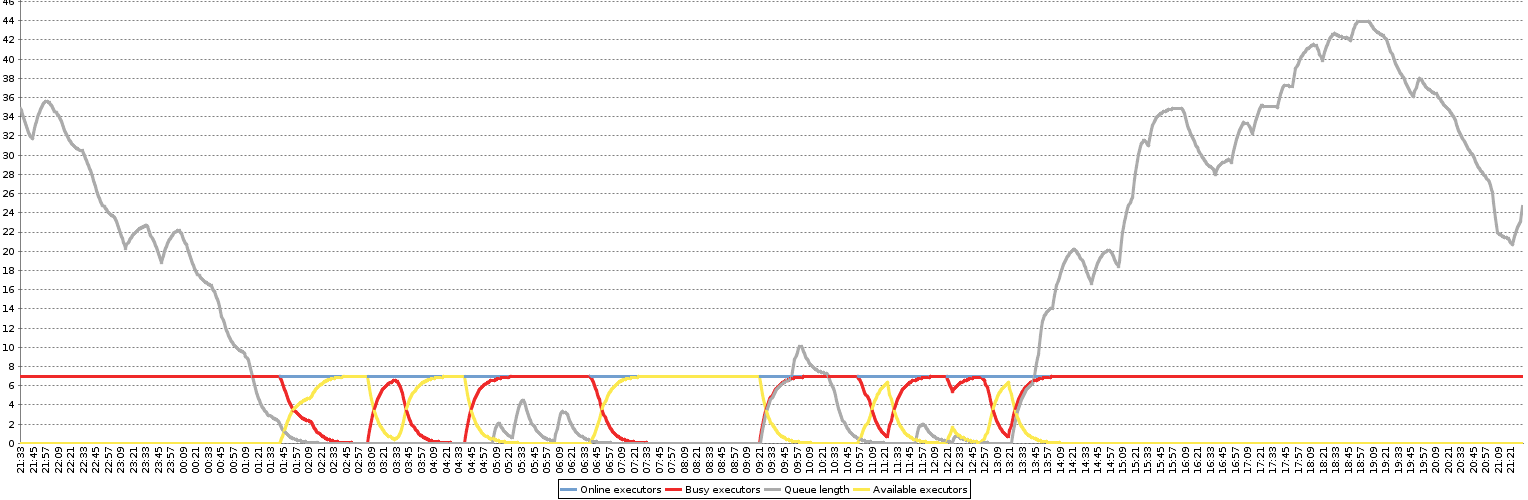

You can see the difference in bottleneck-iness in those first two graphs at the top. The Y axis goes to a much larger value in the Pi1 graph. The grey line that looks like a mountain is the job queue--stuff waiting for a Pi1 so it can run/test/build/whatever. The Pi2 devices, by comparison, seem to keep up with the queue.

Does anyone have experience with stuff like:

https://sano.shop/en/products/detail/350889

As I see it the most significant problem is that we need to self-host this hardware. The special requirements of these systems currently even preclude co-location.

I had some good conversations with @alexellis and also @vielmetti from @WorksOnArm. They suggested exploring QEMU as another option for emulating ARMv6. @vielmetti provided a link to Emulate Raspberry Pi with QEMU. I believe the QEMU option -cpu arm1176 corresponds to ARMv6 according to this forum discussion. Perhaps the whole CI farm could be virtualized.

If the cross compilation route is taken Chris Lea has an interesting blog post directly related to the topic at Cross Compiling Node.js for ARM on Ubuntu. The post deals with ARMv7 and ARMv8 so at the very least some tweaking would be needed for ARMv6.

Another idea might be to cross compile for the Pi Zero on a Pi 3. I'm not sure but perhaps this could be achieved by setting a few flags to different values.

@rvagg having done some more reading I see that your suggestion of boot over USB is probably better than adding dongles. I have a few Pi Zero's so I might try it out if I get a chance. Possible set of instructions https://dev.webonomic.nl/how-to-run-or-boot-raspbian-on-a-raspberry-pi-zero-without-an-sd-card.

The option of emulation if it provides a true representation of a Pi also sounds interesting.

Thanks for the suggestions!

@thisdavej we have considered QEMU. It will be a step outside of our standard operating procedure, which is to at least test on the actual platform.

FTR: QEMU is an emulator that allows running software compiled for different hardware than the host.

@fivdi, we have been using the recipe distilled by @rvagg and @chrislea (who is a friend of the WG) to cross compile the ARM binaries for several years (our CI config, should be visible after the embargo is lifted - #1699). The issue is we want to test the binaries on the actual platform.

@hashseed do you know how V8 tests ARM (e.g. https://ci.chromium.org/p/v8/builders/luci.v8.try/v8_android_arm_compile_rel/b8920698817726780720)?

FWIW, when we were still building our packages from source at NodeSource, we used sbuild to generate the ARMv7 binaries, which internally uses QEMU. There was never any reported instance of this causing any issues. The builds took roughly 10x as long as building for Intel using the emulation, but the resulting binaries worked perfectly.

OK, we have a lot of people in here with minimal context of what we do now, so I may as well do a brain dump (and I find this interesting so I'm guessing other ARMians will too). Here's our ARM story for testing and releasing for Node:

Testing ARM

- With Raspberry Pi's:

- We cross-compile everything for testing on Pi's, no native compiling because it's far too slow. We use a form of https://github.com/raspberrypi/tools to cross-compile for a wheezy-minimum (GCC 4.8) which is still tested for Node 8 and 6. We use a custom crosstools that I put together @ https://github.com/rvagg/rpi-newer-crosstools for a jessie-minimum (GCC 4.9) which we test across all active release lines for Node 10 onward. The crosstools provide the same magic that gives Raspbian ARMv6 support while its Debian parent is ARMv7 minimum.

- For ARMv6, our Raspberry Pi 1 B+ cluster tests Wheezy for Node < 10 and Jessie for Node >= 10. For ARMv7, our Pi 2 cluster tests the same and our Pi 3 cluster tests the ARMv7 binaries on Jessie for Node < 10 and Stretch for Node >= 10. It's nowhere near as comprehensive as our x64 test configurations but we're severely limited by execution time and resources (hardware, people) so we make a best base-case that we think gives us the best coverage.

- Our cross-compiled Pi binaries are done on fast x64 hardware and shipped on to 6 separate Pi devices of each type (1 B+, 2, 3) where the test suite is divided up evenly and run in parallel. We have approximately 12 of each type of Pi so in theory we can run 2 CI jobs in parallel, although flaky hardware means that we don't often have the full complement of Pi 1 B+'s online, which contributes to the graph that @refack posted above. But our test suite is also growing and keeps on taking longer and longer. Expanding the parallelism beyond 6 has diminishing returns because executing tests isn't the only overhead; simply setting up the filesystem for running tests is slow too.

- We NFS-root all our Pi's (onto a couple of SSDs) and it's given us a lot more stability than running direct from SD card (and we don't burn through nearly as many of them now), although speed hasn't improved as much as you'd think.

- We also have some non-Pi ARMv7 hardware donated by Scaleway.com. It's native compiled and tested on the same machine, within Docker to give us flexibility to switch base-OS version. Wheezy only on Node < 10, Jessie on everything, Stretch on Node >= 10.

- For ARM64 / ARMv8 we test on proper server hardware (Cavium ThunderX) donated by ARM & packet.net via @WorksOnArm on both Ubuntu 16.04 and CentOS 7. We used to have some Odroid C3's graciously donated and supported by miniNodes to test a non-server variant of ARM64 but they ended up having too many stability challenges. Aside: ARM64 is where ARM's interest in Node is focused since it's what their server platforms are all built on. They've been an amazing help so far: they shipped us some very early prototype servers that we used to get our ARM64 support up and going (IIRC that was even back in io.js days) and then hooked us up with packet.net hardware when @WorksOnArm became a thing.
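The trade-off described in the testing list above (an even split of the suite across six devices, with a fixed per-device setup cost that limits further gains) can be sketched with a toy model. This is only an illustration: `shard` and `wall_minutes` are hypothetical helper names, the 180 test-minutes and 10 setup-minutes are made-up values rather than measurements from the cluster, and 2441 is the test count reported later in this thread for the default suite.

```python
from typing import List


def shard(tests: List[str], workers: int) -> List[List[str]]:
    """Deal tests out round-robin so each worker gets an (almost) equal share."""
    return [tests[i::workers] for i in range(workers)]


def wall_minutes(total_test_minutes: float, setup_minutes: float, workers: int) -> float:
    """Toy model: every device pays the same setup cost, then runs its share."""
    return setup_minutes + total_test_minutes / workers


if __name__ == "__main__":
    tests = [f"test-{n}" for n in range(2441)]
    print([len(s) for s in shard(tests, 6)])

    # Hypothetical numbers: 180 minutes of tests in total, 10 minutes of setup.
    for n in (1, 3, 6, 12):
        print(n, "devices:", round(wall_minutes(180.0, 10.0, n), 1), "min")
```

Under these assumed numbers, going from 1 to 6 devices removes far more wall time than going from 6 to 12, because the setup term does not shrink as devices are added.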

ARM binaries for nodejs.org:

- We produce ARMv6 binaries natively on Raspberry Pi 1's for Node < 10 and cross-compile at Jessie-level for Node >= 10. Native on Pi 1 can be slow, depending on the state of the ccache for the binary being built and is why ARMv6 binaries are sometimes a day late for Node < 10.

- ARMv7 is built natively on our Scaleway hardware for Node < 10 and cross-compiled at Jessie-level for Node >= 10.

- For ARM64 we build them straight on the packet.net hardware on CentOS 7 with devtoolset-6 (modified GCC 6 for CentOS 7 level compatibility).

Compatibility

Regarding CPU compatibility:

- Our ARMv6 and ARMv7 binaries are labelled armv6l and armv7l, the 'l' being 'little-endian' and comes from the classic GCC way of naming things (IIRC). We could have gone with armhf for our ARMv7 binaries to match the Debian ecosystem but since they ignore ARMv6 they don't need a way to make a distinction (Raspbian still ships all binaries as ARMv6 so they don't need a distinction either .. yet).

- Our ARMv6 are compiled for Pi compatibility in the same way that all Raspbian binaries are, "ARMv6 ZK" architecture (armv6zk), tuned for the "ARM11 76JZ(F)-S" (arm1176jzf-s) (the Broadcom BCM2835 SoC that all the Pi 1's and Zero's have are of this variant). This is the latest of the ARM11 / ARMv6 variants/extensions (aside from "MP" - multi-core) and for that reason is the most common SoC type that we still see today in ARMv6 hardware I think. But this is important because it means our binaries probably don't support the older variants that don't have support for the newer ARM11 extensions that shipped up to 76JZ(F)-S. I haven't heard of any problems with our binaries not working on people's ARMv6 hardware but I suspect if you're running anything older than 76JZ(F)-S then you're used to pre-compiled stuff not being able to run! Thankfully things are simpler for ARMv7 and simpler-still for ARM64.

- (edit) Our ARMv7 binaries are compiled for armv7-a (until a year or so ago our armv7l binaries on nodejs.org were actually compiled for ARMv6 since we only had the Raspberry Pi cross compiler). You're better off grabbing these if you have anything that's at least ARMv7. Even though you could run the ARMv6 binaries on ARMv7 hardware (like all the Raspbian binaries), having them compiled specifically for ARMv7 gives you some optimisation benefits. So, Raspberry Pi 2 or 3? Grab the armv7l binaries. And as I said in an earlier comment, deb.nodesource.com is your friend for Debian-based operating systems (like Raspbian) for ARMv7, just don't use it for Pi 1 or Zero.
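For readers deciding which download applies to their board, the naming scheme above can be turned into a small lookup. This is a sketch, not an official tool: `ARCH_SUFFIX` and `tarball_name` are hypothetical names, the version string is only an example, and it assumes the machine string reported by the OS (as in `uname -m`) is armv6l on a Pi 1 or Zero and armv7l on a Pi 2 or 3 running a 32-bit OS.

```python
import platform

# Suffixes used in nodejs.org/dist tarball names, keyed by the machine
# string the OS reports (assumed to match `uname -m`).
ARCH_SUFFIX = {
    "armv6l": "armv6l",   # Pi 1, Pi Zero
    "armv7l": "armv7l",   # Pi 2, Pi 3 on a 32-bit OS
    "aarch64": "arm64",   # 64-bit ARM
}


def tarball_name(version: str, machine: str) -> str:
    """Build the Linux tarball file name for a Node.js version and machine type."""
    try:
        suffix = ARCH_SUFFIX[machine]
    except KeyError:
        raise ValueError(f"no ARM mapping for machine type {machine!r}")
    return f"node-{version}-linux-{suffix}.tar.xz"


if __name__ == "__main__":
    # Example version only; substitute the release you actually want.
    print(tarball_name("v10.15.3", "armv6l"))
    print("this host reports:", platform.machine())
```

The lookup deliberately refuses unknown machine strings instead of guessing, since an ARMv7 binary will not run on ARMv6 hardware.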

Regarding OS compatibility: our binaries' compatibility with other operating systems can be seen in this very handy table by looking up the OS & version we compile against and considering it the oldest libc that those binaries support. So our binaries should work on any recent Fedora, Stretch, Ubuntu 16.04+, etc.

We may be hitting some challenges with Node 12, however, since various pressures (the greatest of which is V8) are pushing us to require a newer GCC (or possibly clang) and we can't fake that on ARMv6 & 7 (probably not 7 but that's not as definitive as 6) with the devtoolset hack that we can pull off on CentOS. So even if we proceed with ARMv6 testing and releases on nodejs.org, they may require newer operating systems to even work.

Emulation?

Regarding QEMU: we've considered and done some basic experimentation with it in the past but the experience that @chrislea mentioned above when producing binaries for deb.nodesource.com put a bit of a halt to it. We've never attempted a cross-compile and then run tests on QEMU but that's a possibility and there's a good chance it'll yield better performance than our Pi cluster. We'd need to allocate additional x64 hardware to do this from amongst our sponsors and we're already pushing at the limits of many of those donations.

Along with evaluating the Pi Zero option, we should probably give some consideration to emulation as a possible solution.

Making the go/no-go decision

The considerations that we're balancing against community demand for official ARMv6 support are primarily:

- Speed of executing our test suite, which grows every week as new features are added and coverage is improved. ARMv6 is our slowest platform and holds everything up.

- Complexity as a maintenance concern, something we're currently fighting on multiple fronts since we've taken more of a "let's do it if we are able to" approach that ignores some of the costs of people-time, technical debt and simply the contextual knowledge (like what I've written in this post!) that is tucked in individuals' heads and therefore has a bus-factor problem.

- Cost, not in a strict dollar sense since almost everything we do with Node infrastructure costs the Foundation nothing. But we manage a lot of relationships with sponsors and make sure we're doing the best by them so they're happy to keep generously donating infrastructure and we have to balance our usage of all of those providers so that we're within the (mostly soft) boundaries for each of them.

ARMv6 is also unique for us since it's the only remaining architecture that we physically manage (specifically, in my garage, by me, who isn't going to be here forever) without redundancy. We have ARMv7 and a couple of other pieces that are also physically managed but we have forms of redundancy for them all. There's no ARMv6-as-a-Service, so unless we go full emulation, we're forced to manage hardware and that has bus-factor and redundancy problems. We've discussed splitting our cluster up across physical locations, but to be honest, the complexity and time investment it takes to manage mean that it has to involve someone on our trusted team who enjoys getting dirty with this stuff on a regular basis like I do.

We already stopped shipping x86 Linux binaries and it's fully "experimental" now, meaning we don't even test on x86 for Node >= 10. Yet our raw download numbers show that there are way more users downloading x86 than armv6 binaries from nodejs.org (see a chart above in this thread).

We also don't know how to classify our ARMv6 user-base and how to weigh it against everything else we've spread ourselves across. This thread is the most feedback we've got in a long time (maybe ever?) on ARMv6. Are we dealing mostly with hobbyists? Users shipping commercial IoT solutions? How much does it matter if binaries are shipped on nodejs.org or provided by some third-party or if cross-compile instructions are made available? Is this simply a matter of convenience? And if so, how do we weigh that against the costs we're incurring here continuing the support?

Thank you to everyone that continues to provide binaries for any version of ARM as I know how much extra effort this can be.

I provide prebuilt, cross-compiled ARM binaries for sharp/libvips and keep some basic download statistics to help make decisions about what to continue to support. Over the last 6 months the approximate percentage for each ARM version is:

- ARMv6: 43%

- ARMv7: 40%

- ARMv8: 17%

Sadly none of the ARMv6 cross-compiler toolchains I'm aware of provide a recent enough version of GCC with the C++14 support required by Node 12. Unless we can get the Raspberry Pi Foundation to provide/sponsor the work to update these (unlikely), compilation via QEMU is probably the best bet for continued ARMv6 support.

I've got a couple of Pi Zeros connected over USB with everything NFS-mounted (i.e. nothing except for the Pi Zero itself). Getting the interface up seems to be a bit flaky, but I can do it by bringing the interface on the host up/down manually. It might be because my host is a virtual machine but I don't think so.

I did a speed test and it seems like they can hit close to 40Mbit/sec downloads. The git checkout still took a long time but that seems to be due to limited CPU as git used most of the CPU for the period of the checkout.

Doing a compile of node, as @rvagg mentions, will take a long... time. I'm going to let the build run overnight as I don't have the cross-compile setup.

After that completes I'll time how long it takes to run a full test suite. We break up the suite across a number of Pi's now and the binaries are being built with a different compiler (6.x which came with the latest raspbian distro), but at least that might give us an idea of how much faster (or not) a Zero will be when running the tests.

I also tried to get QEMU going with the latest raspbian stretch but it failed to start. Has anybody on this thread managed to get it to work with the latest stretch?

Unfortunately the i/f of the machine does not seem to stay up long enough to get it compiled :( Not sure if it's the load or flakiness with the usb from a virtual machine.

Might have been because I did not specify -j ? Trying with -j 1 now.

EDIT: Maybe I was just not patient enough and the ssh timed out while the compilation was taking place. It seems to be stuck on compiling one file and taking forever.

I should probably just grab the latest nightly and run the tests with that instead of trying to compile

These are the times having run just the default tests (2441 tests) with -j 1 and -j 2

-j 1

real 134m45.212s

user 99m43.689s

sys 9m29.116s

-j 2

real 126m20.501s

user 101m26.707s

sys 10m4.198s

@mhdawson do those times include compilation? As a point of comparison, ATM we only actually test the cross-compiled binary on the PI1, with a multiplicity of 6.

Our typical test jobs take ~40m, so 40 * 6 = ~240m which is double what you got.

@refack, unfortunately, I could not even get them to compile on the PI zero. They would take a very long time and then the compile was killed. Most likely I think by the OOM killer.

I'll have to get the specific command line when I'm back home but what I did instead was:

1. do git checkout in advance (took long time)

2. pull down and unzip the node.js ARM6 binary from nodejs.org (In advance)

3. use tools/test.py to run the tests. I ran the "default" target which is a subset of what we normally test.

The times shown were only for step 3 above.

So I think we need to understand what is not covered by default (which I know includes addon tests, and more) as well as the time taken to clone etc. to be able to compare.

That's good news. AFAIK make test is a superset of make test-ci. So what you are describing is encouraging preliminary results.

IMHO with the support of the community we could make progress with such a migration.

@refack to clarify I did not run make test. I ran tools/test.py specifying default as the tests to run. I'm pretty sure that is a subset of test-ci so I still think we need more info to have a good comparison.

At the current time, the tests that are run by test-ci that are not run by tools/test.py default are:

- addons

- doctool

- js-native-api

- node-api

Ok. Less clear indication, but still in the ballpark IMO...

Later tonight I'll disable test-requireio--mhdawson-debian9-armv6l--pi1p-1 from the farm, then log into it and run the same set of tests as I did on the Pi Zero. That should give us a closer comparison.

Command line for reference:

time tools/test.py -j 1 -p tap --logfile test.tap --mode=release --flaky-tests=dontcare default >withj1

Have had some trouble getting the output of time. The ssh connection seems to drop at some point during the run. Had tried running in the background but the command I used still did not pipe the time output to a file. Running again and hope to get it this time.
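One way to avoid losing the timing when the ssh session drops is to have a small wrapper write the real/user/sys figures to a file itself. The following is a minimal sketch under stated assumptions: Python 3 on a Unix-like host (the `resource` module is not available on Windows), and `timed_run` is a hypothetical helper name, not something from the Node.js tooling.

```python
import resource
import subprocess
import time


def timed_run(cmd, log_path, report_path):
    """Run cmd, send its output to log_path, and write timings to report_path."""
    start = time.monotonic()
    before = resource.getrusage(resource.RUSAGE_CHILDREN)
    with open(log_path, "w") as log:
        proc = subprocess.run(cmd, stdout=log, stderr=subprocess.STDOUT)
    after = resource.getrusage(resource.RUSAGE_CHILDREN)

    timings = {
        "real": time.monotonic() - start,          # wall-clock seconds
        "user": after.ru_utime - before.ru_utime,  # CPU seconds in user mode
        "sys": after.ru_stime - before.ru_stime,   # CPU seconds in kernel mode
    }
    with open(report_path, "w") as report:
        for name, seconds in timings.items():
            report.write(f"{name} {seconds:.3f}s\n")
        report.write(f"exit {proc.returncode}\n")
    return proc.returncode, timings


# Example call, reusing the command line quoted above (file names are arbitrary):
# timed_run(
#     ["tools/test.py", "-j", "1", "-p", "tap", "--logfile", "test.tap",
#      "--mode=release", "--flaky-tests=dontcare", "default"],
#     "withj1", "withj1.time")
```

The wrapper itself still has to outlive the ssh session, so it would need to be started under something like nohup, screen or tmux.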

ok equivalent run on the Pi1

real 184m8.136s

user 152m9.797s

sys 13m8.520s

Which means the Pi Zero does show to be about 37% faster, which makes sense given the increased clock frequency. Given that there are a few mins for the git work, I guess we'd expect 6 PI zeros to reduce the time down to ~32 mins instead of the current ~40 mins. That excludes the time for the cross-compile as well.
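The arithmetic behind that estimate can be checked directly from the two "real" times posted in this thread (Pi Zero with -j 1, and the Pi 1 run). This is a sketch for checking the figures only; the variable names are arbitrary, and the 40-minute job time is the approximate figure quoted earlier.

```python
def seconds(minutes: int, secs: float) -> float:
    """Convert the 'XmY.YYYs' form printed by time into seconds."""
    return minutes * 60 + secs


pi1 = seconds(184, 8.136)     # "real" for the Pi 1 run
zero = seconds(134, 45.212)   # "real" for the Pi Zero run with -j 1

speedup = pi1 / zero          # how much more work per unit time the Zero does
time_saved = 1 - zero / pi1   # fraction of wall time removed

print(f"Pi Zero is about {(speedup - 1) * 100:.0f}% faster")
print(f"which is about {time_saved * 100:.0f}% less wall time")

# Scale the current ~40 minute job by the same ratio (test portion only;
# the git work and cross-compile are not included in this figure).
print(f"estimated test portion: about {40 / speedup:.0f} min")
```

Note that "37% faster" is a throughput ratio; expressed as a reduction in wall time it is closer to a quarter, which is why the estimate lands around 30 minutes rather than 25 once a few minutes of git work are added back.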

I got mine but have only had a brief play. I'm currently stuck on how I'd get a cluster to boot via NFS at will (including reboot), it's not very straightforward and quite hacky at the moment.

Here's a thought I've been toying with, and it came up with x86 Linux support that we dropped but apparently still ship Docker images for (!). We could set up a parallel project, "unofficial builds", maybe as part of nodejs/build, but it might work better as an independent project that outsiders can contribute to and "own" in a sense. We could get unofficial.nodejs.org (or similar) to point to a place where binaries are put that are part of this grey area of builds that are wanted, but don't meet our threshold for support in our stretched resources here at nodejs/build. I could imagine communities owning their bit, x86, armv6, and could even expand to more obscure binary types, like x64-musl for Alpine so the docker-node folks don't need to compile in-container for each release or x64-libressl for some of the *BSD folks.

Such a project would not over-burden the nodejs/build team because, being "unofficial", if it's broken then it's up to users to fix it, and it certainly won't stop Node.js releases from moving forward.

So my question here is: is it just the binaries you care about? If you could continue to get binaries for each release from some source then do you care much if we don't test every commit against armv6 and don't have armv6l binaries on nodejs.org/dist?

@rvagg do you have historical data on test failures on armv6? I'd be curious to know if those failures closely tracked failures on armv7+ or not. If they do (which seems likely to me), then I think moving it to a new "unofficial builds" project would be fine.

The binaries are the big thing I care about, yes. I would be fine getting them from another source if that source is reliable (i.e. not having to wait days/weeks for the latest release after the official builds are released).

I agree with @rvagg on the boot front. I think it would likely require some scripting as well as programmatic control over the power to the USB port powering the Pi Zero. Not impossible (I've already bought a USB hub that switches could be wired into) but it would definitely require some work.

@nebrius no data unfortunately but I can't remember the last time we had something serious that was isolated to ARMv6 aside from resource constraint problems that we regularly have (some tests need skipping because they test allocation of lots of memory, for example). My subjective impression is that there's a tight coupling between ARMv6 and ARMv7 for any bugs we've had in the past and I'd be confident that in the near future at least this would continue. It starts to break down if V8 de-prioritises ARMv6 (and I don't know the status of their testing), same goes for OpenSSL although they have more natural pressure to retain good support.

So we had a discussion about this in our Build WG meeting today and the approach we'd like to propose goes something like this for Node.js 12+ (everything remains as-is for <=11).

- Move ARMv6 to "Experimental", which means that we don't test every commit in our CI infrastructure, and therefore don't ship official binaries.

- "Experimental" comes with a caveat that we could, at any time, turn it back into a Tier 2 supported platform if we come up with solutions that ease the burden on this team. So perhaps someone invests time and comes up with a magical qemu solution that is easy and efficient so we opt to take it back on again.

- We try to spin up an "unofficial builds" project like I mentioned a couple of comments above ^. This would be an arms-length project such that breakages and failure to deliver don't fall back on either the Build WG or the TSC. Rather, it's a community-driven project, where the community is made up of people like those in this thread and people focused on other compilation targets. Build can lend some minimal resources; a single server would get it off the ground, I think. But it would need to stand alone. The docker-node project is an example: there's almost no overlap between the people who push that forward and Build, or even much of the nodejs/node collaborator base. Plus docker-node have developed all of the valuable relationships they need to make it official and well supported. Docker releases of Node are part of our normal release schedule, but it's a throw-it-over-the-wall approach where releasers just give a trigger and the docker-node folks take over from there.

I'll outline the "unofficial builds" idea a bit more in an issue or PR to this repo in the near future. For now though, know that we want to continue shipping binaries but we'd like to reduce the support burden on this team and the way to do that is to (1) decouple ARMv6 from our test-all-commits infrastructure and (2) decouple it from the critical release infrastructure (where breakage can mean lost sleep).

I don't think Build really has the last say on this, it's ultimately up to the TSC to decide what burden the project wishes to take on. But it'll probably end up depending entirely on what Build says it can handle.

This is an interesting post from a Microsoft employee on the challenges of cross-build of Arm images on Arm hardware, noting in particular ARMv6 issues.

The most interesting part of that post for me is that they don't seem to even bother testing on real ARMv{6,7} hardware, they just run the binaries on an ARM64 host in a Raspbian chroot. The problem being addressed comes from missing instructions that have to be trapped and emulated by the kernel, causing delay. The "solution" is simply to emulate ARM64 so it can run on a single core; I guess this has something to do with core affinity and the cache advantage, or something like that? But it's still ARM64. That's not an approach we've even considered as an option, but I guess it is one? I have some doubts about the utility of such testing: does it get you close enough to be even worth doing? Something to consider at least.

OK folks, so this has panned out in the following way:

- ARMv6 was unfortunately demoted to "Experimental" for Node 12: https://github.com/nodejs/node/blob/master/BUILDING.md#platform-list

- With Experimental status, binaries are no longer being released for ARMv6 to nodejs.org for Node 12 and later.

- Our Raspberry Pi 1 B+ set has been pulled from CI for Node 12+ code (still active for prior versions), which has helped with our test suite speed problems. Unfortunately there is no longer any ARMv6 testing occurring on Node commits for Node 12+ (there are still two different ARMv7 variants being tested).

But it's not all bad news. I'm attempting to start an "unofficial-builds" project as I mentioned earlier in this thread. It's producing ARMv6 binaries automatically following every release. The catch is that it's automatic, so may break and may be delayed. The intention is also not for the Build Working Group to be the owner of it, it shouldn't stretch Build resources at all because they're already stretched.

The project is housed at https://github.com/nodejs/unofficial-builds and it's looking for contributors and people to help maintain it. It has a single server that's (so far) pumping out 3 types of binaries that folks have been asking for but the core project (via Build) hasn't been able to accommodate: linux-x86, linux-x64-musl and linux-armv6. Those binaries are published to https://unofficial-builds.nodejs.org/ where you'll find a /download/ directory that's very similar to nodejs.org/download, complete with index.tab and index.json (perhaps someone could talk Jordan into hooking nvm up to it one day).
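For anyone wanting to script against that directory, here is a minimal sketch of picking the newest release that ships a given binary type. It assumes index.json has the same shape as the one on nodejs.org (a JSON array of objects with "version" and "files" fields) and that the ARMv6 file identifier is "linux-armv6l". The exact URL path, the sample entries, and the function names are illustrative assumptions, not something stated in this thread.

```python
import json
import urllib.request

# Assumed location, mirroring the nodejs.org/download layout.
INDEX_URL = "https://unofficial-builds.nodejs.org/download/release/index.json"


def fetch_index(url=INDEX_URL):
    """Download and parse the release index (needs network access)."""
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)


def version_key(version):
    """Turn 'v12.4.0' into (12, 4, 0) so versions sort numerically."""
    return tuple(int(part) for part in version.lstrip("v").split("."))


def latest_with_file(index, file_id):
    """Return the newest version string whose 'files' list has file_id."""
    matches = [e["version"] for e in index if file_id in e.get("files", [])]
    return max(matches, key=version_key) if matches else None


# Made-up sample entries in the assumed format, so no network is needed.
sample = [
    {"version": "v12.9.0", "files": ["linux-x64-musl", "linux-x86"]},
    {"version": "v12.10.0", "files": ["linux-armv6l", "linux-x64-musl"]},
    {"version": "v12.4.0", "files": ["linux-armv6l", "linux-x86"]},
]
print(latest_with_file(sample, "linux-armv6l"))  # v12.10.0
```

Against the live server the call would be latest_with_file(fetch_index(), "linux-armv6l"). Versions are compared as integer tuples because a plain string comparison would rank v12.4.0 above v12.10.0.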

So this issue is considered closed as far as Build is concerned, but I'd encourage you to consider whether there are ways you might be able to contribute to making unofficial-builds sustainable, even if that's just clicking 'Watch' and helping deal with easy issues as they come in. With no ARMv6 testing of new commits, the users of these binaries are going to have to be the test platform and will have to help report and fix problems.