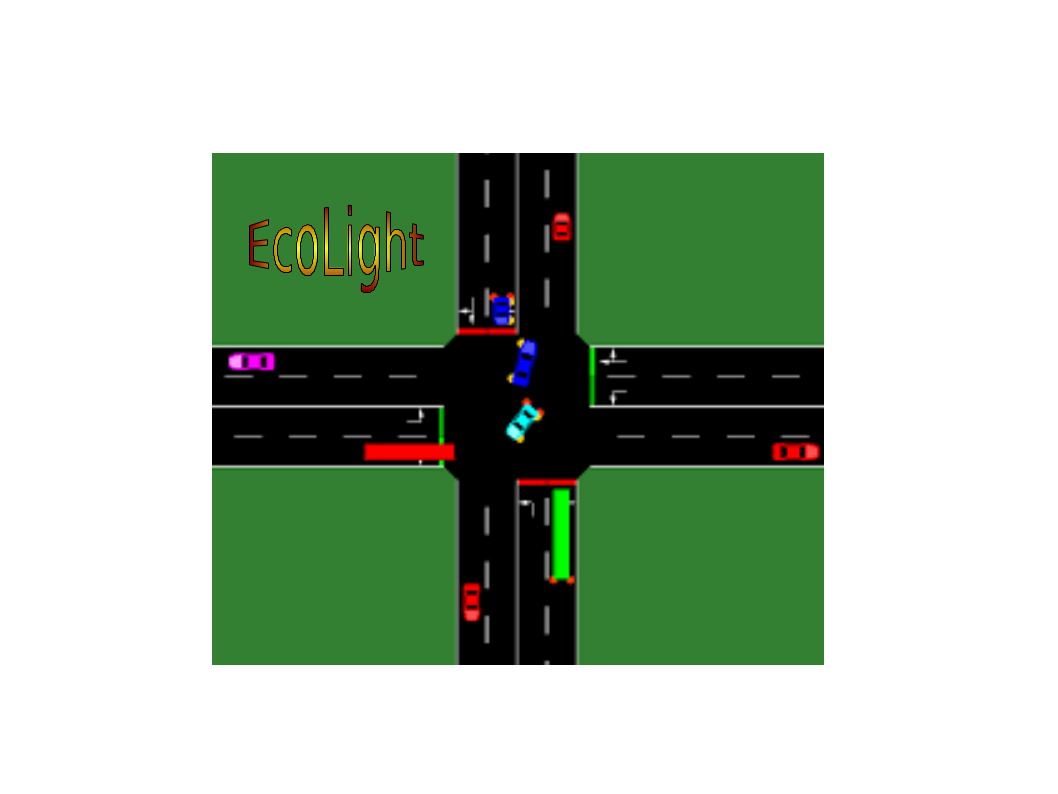

EcoLight is an ecosystem-friendly deep reinforcement learning (DRL) approach to traffic signal control. The code is based on SUMO-RL, which provides a simple interface to instantiate Reinforcement Learning environments with SUMO for Traffic Signal Control.

The main class SumoEnvironment inherits MultiAgentEnv from RLlib.

If instantiated with the parameter 'single_agent=True', it behaves like a regular Gym Env from OpenAI.

TrafficSignal is responsible for retrieving information from traffic lights and actuating them through the TraCI API.
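A minimal sketch of how a single-agent environment might be set up is given here. The helper names build_env_kwargs and make_env are hypothetical and not part of SUMO-RL, and the keyword names are assumed to match the SumoEnvironment constructor of the installed version, so check them against your copy. The import is deferred so that the helpers can be loaded on a machine without SUMO.

```python
from typing import Any, Dict


def build_env_kwargs(net_file: str, route_file: str,
                     num_seconds: int = 3600,
                     use_gui: bool = False) -> Dict[str, Any]:
    """Collect keyword arguments for a single-agent SumoEnvironment.

    Hypothetical helper: the keys are assumed to match the constructor
    arguments of the installed SUMO-RL version.
    """
    return {
        "net_file": net_file,        # SUMO network (.net.xml)
        "route_file": route_file,    # SUMO routes (.rou.xml)
        "num_seconds": num_seconds,  # simulated seconds per episode
        "use_gui": use_gui,          # True launches sumo-gui instead of sumo
        "single_agent": True,        # behave like a regular Gym Env
    }


def make_env(**kwargs: Any):
    """Instantiate the environment; requires SUMO and sumo-rl to be installed."""
    # Deferred import so this module loads even where SUMO is missing.
    from sumo_rl import SumoEnvironment
    return SumoEnvironment(**kwargs)
```

With SUMO installed, calling make_env(**build_env_kwargs(net, routes)) with the paths of your own network and route files should return an environment that can be passed to a Gym-compatible algorithm.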

Goals of this repository:

- Provide a simple interface to work with Reinforcement Learning for Traffic Signal Control using SUMO

- Support Multiagent RL

- Compatibility with gym.Env and popular RL libraries such as stable-baselines3 and RLlib

- Easy customisation: state and reward definitions are easily modifiable

- Prioritize different road users based on their CO2 emission class

- Reward shaping scheme with hyper-parameter tuning
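The last two goals can be illustrated with a small sketch of a class-weighted waiting-time reward. This is not the reward used in EcoLight: the class names, the weight values, and the function names are all hypothetical, chosen only to show how prioritising road users by emission class can enter the reward.

```python
from typing import Dict, List, Tuple

# Hypothetical priority weights per vehicle class (illustrative values only).
EMISSION_WEIGHTS: Dict[str, float] = {
    "bus": 3.0,
    "truck": 2.0,
    "passenger": 1.0,
}


def weighted_waiting_time(vehicles: List[Tuple[str, float]],
                          weights: Dict[str, float] = EMISSION_WEIGHTS,
                          default_weight: float = 1.0) -> float:
    """Sum waiting times (seconds), each scaled by the weight of its class.

    `vehicles` is a list of (class_name, waiting_time) pairs; classes that
    are missing from `weights` fall back to `default_weight`.
    """
    return sum(weights.get(cls, default_weight) * wait for cls, wait in vehicles)


def shaped_reward(prev_weighted_wait: float, curr_weighted_wait: float) -> float:
    """Reward the reduction in weighted waiting time between two steps."""
    return prev_weighted_wait - curr_weighted_wait
```

Under these assumptions, a waiting bus contributes three times as much to the penalty as a passenger car, so an agent trained on this signal would tend to clear lanes holding high-weight vehicles first. The weights themselves are the kind of hyper-parameter the tuning goal refers to.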

sudo add-apt-repository ppa:sumo/stable

sudo apt-get update

sudo apt-get install sumo sumo-tools sumo-doc

Don't forget to set the SUMO_HOME variable (the default SUMO installation path is /usr/share/sumo):

echo 'export SUMO_HOME="/usr/share/sumo"' >> ~/.bashrc

source ~/.bashrc
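Python scripts that drive SUMO typically need the tools directory under SUMO_HOME on the import path. The following sketch checks for the variable before a script goes any further; the function name sumo_tools_path is hypothetical and not part of SUMO or SUMO-RL.

```python
import os
from typing import Mapping


def sumo_tools_path(environ: Mapping[str, str] = os.environ) -> str:
    """Return the SUMO tools directory, or fail early if SUMO_HOME is unset."""
    sumo_home = environ.get("SUMO_HOME")
    if not sumo_home:
        raise EnvironmentError(
            "Please declare the environment variable 'SUMO_HOME'"
        )
    return os.path.join(sumo_home, "tools")
```

A script could then call sys.path.append(sumo_tools_path()) before importing traci, so that a missing variable fails with a clear message instead of an import error.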

The stable release of SUMO-RL is available through pip:

pip install sumo-rl

Alternatively, you can install the latest (unreleased) version:

git clone https://github.com/LucasAlegre/sumo-rl

cd sumo-rl

pip install -e .

Alternatively, you can install the version that is compatible with Eco-Light (in that case, skip the SUMO-RL installation above):

git clone https://github.com/pagand/eco-light

cd eco-light

pip install -e .

| SUMO simulator | Eco-Light white paper | Eco-Light presentation | Eco-Light video |
|---|---|---|---|
| Link | Link | Link | Link |

Check experiments to see how to instantiate a SumoEnvironment and use it with your RL algorithm.

Q-learning in a one-way single intersection:

python3 experiments/ql_single-intersection.py

RLlib A3C multiagent in a 4x4 grid:

python3 experiments/a3c_4x4grid.py

stable-baselines3 DQN in a 2-way single intersection:

python3 experiments/dqn_2way-single-intersection.py

Q-learning in a one-way single intersection:

python3 run1_single-intersection.py

DQN in a two-way single intersection:

python3 run2_dqn_2way-single-intersection.py

Q-learning in a two-way single intersection:

python3 run3_ql_2way-single-intersection.py

A2C in a two-way single intersection:

python3 run4_a2c_2way-single-intersection.py

SARSA in a two-way single intersection:

python3 run5_sarsa_2way-single-intersection.py
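Two of the run scripts above use tabular methods, Q-learning and SARSA. The following sketch shows the textbook update rules for both, to make the difference between them concrete. It is not the repository's implementation; the function names, the dictionary-based Q-table, and the default hyper-parameters are assumptions made for illustration.

```python
from collections import defaultdict
from typing import DefaultDict, Hashable, Sequence, Tuple

QTable = DefaultDict[Tuple[Hashable, int], float]


def q_learning_update(q: QTable, s: Hashable, a: int, r: float,
                      s_next: Hashable, actions: Sequence[int],
                      alpha: float = 0.1, gamma: float = 0.99) -> None:
    """Off-policy update: bootstrap from the best action in the next state."""
    best_next = max(q[(s_next, b)] for b in actions)
    q[(s, a)] += alpha * (r + gamma * best_next - q[(s, a)])


def sarsa_update(q: QTable, s: Hashable, a: int, r: float,
                 s_next: Hashable, a_next: int,
                 alpha: float = 0.1, gamma: float = 0.99) -> None:
    """On-policy update: bootstrap from the action actually taken next."""
    q[(s, a)] += alpha * (r + gamma * q[(s_next, a_next)] - q[(s, a)])


def new_q_table() -> QTable:
    """Q-table whose unseen (state, action) pairs default to 0.0."""
    return defaultdict(float)
```

The only difference is the bootstrap target: Q-learning uses the maximum over next actions, while SARSA uses the value of the action the behaviour policy selected, which makes it sensitive to the exploration strategy.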

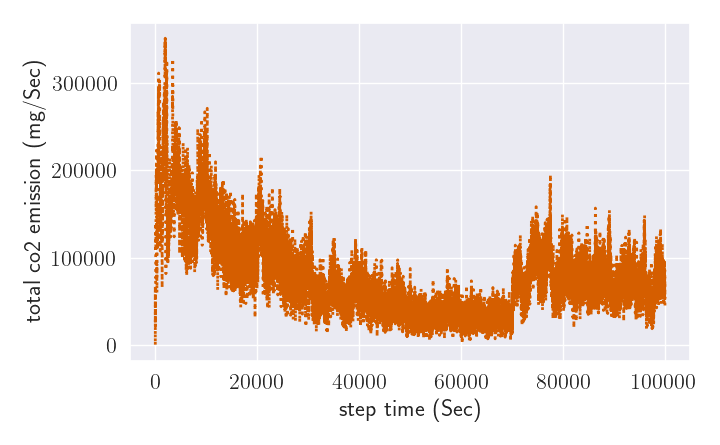

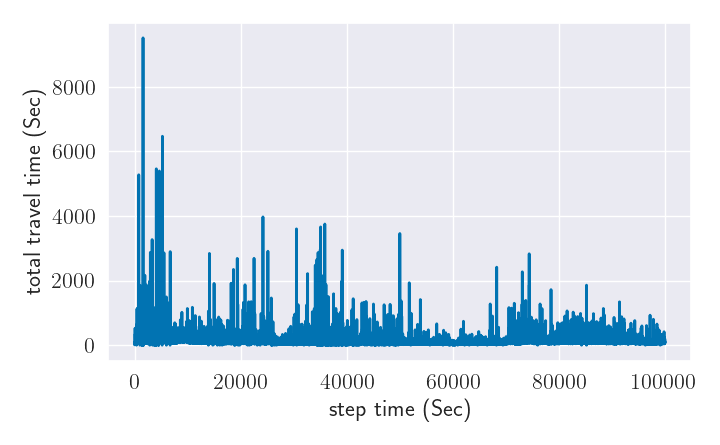

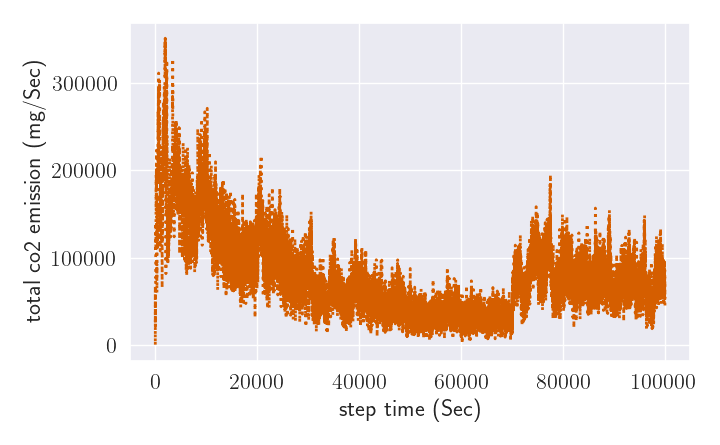

| Total CO2 emission | Total travel time |

|---|---|

|

|

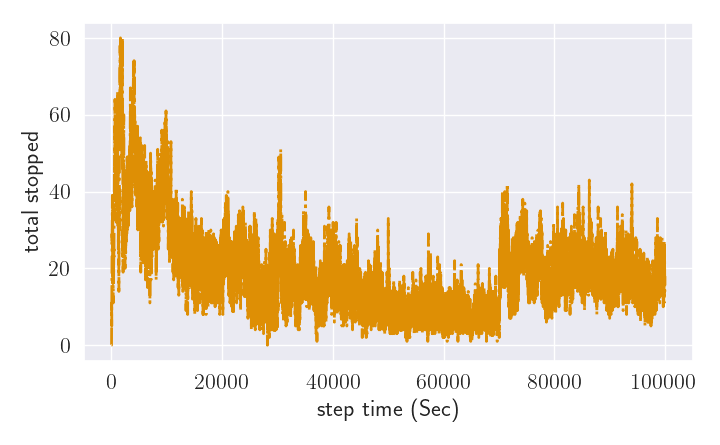

| Total stopped time | Total waiting time |

|---|---|

|

|

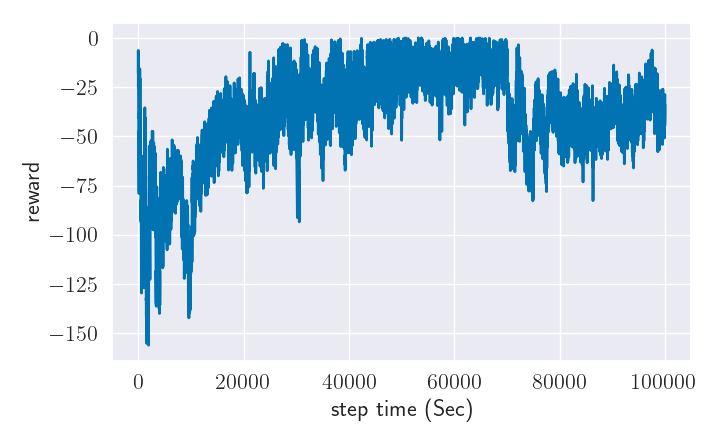

| Reward profile |

|---|

|

|

|

| Waiting time | Weighted waiting time |

|

|

| Queue length | weighted Queue length |

@inproceedings{aganddeep,
title={Deep Reinforcement Learning-based Intelligent Traffic Signal Controls with Optimized CO2 emissions},
author={Agand, Pedram and Iskrov, Alexey and Chen, Mo},
booktitle={2023 IEEE/RSJ International Conference on Intelligent Robots and Systems},
pages={},
year={2023},
organization={IEEE}
}

@inproceedings{agandecolight,
title={EcoLight: Reward Shaping in Deep Reinforcement Learning for Ergonomic Traffic Signal Control},
author={Agand, Pedram and Iskrov, Alexey},
booktitle={NeurIPS 2021 Workshop on Tackling Climate Change with Machine Learning},
year={2021}
}