- Based on MADDness/Bolt.

- More information about the base project is here

- arXiv paper link

This repo is used for algorithmic exploration. I will try to update it with as much hardware information as I am allowed to publish.

```bash
# install conda environment & activate
conda env create -f environment_gpu.yml
conda activate halutmatmul

# IIS prefixed env
conda env create -f environment_gpu.yml --prefix /scratch/janniss/conda/halutmatmul_gpu

# install CLI
./scripts/install-cli.sh
# now use CLI with
halut --help
# or without install
./halut --help
```

| All Designs | ASAP7 | NanGate45 |
|---|---|---|
| All Report | All | All |
| History | History | History |

| halut_matmul | ASAP7 | NanGate45 |
|---|---|---|
| Area [μm^2] | 9643.6787 | 140647.7656 |
| Freq [MHz] | 666.7 | 333.3 |
| GE | 110.238 kGE | 176.25 kGE |
| Std Cell [#] | 68186 | 68994 |
| Voltage [V] | 0.77 | 1.1 |
| Util [%] | 45.0 | 59.2 |
| TNS | -1086.59 | -0.31 |
| Clock Net | | |
| Gallery | Gallery Viewer | Gallery Viewer |
| Metrics | Metrics Viewer | Metrics Viewer |
| Report | Report Viewer | Report Viewer |

| halut_encoder_4 | ASAP7 | NanGate45 |
|---|---|---|
| Area [μm^2] | 4844.5405 | 69711.9531 |
| Freq [MHz] | 666.7 | 333.3 |
| GE | 55.378 kGE | 87.358 kGE |
| Std Cell [#] | 34334 | 33746 |
| Voltage [V] | 0.77 | 1.1 |
| Util [%] | 45.0 | 58.7 |
| TNS | 0.0 | 0.0 |
| Clock Net | | |
| Gallery | Gallery Viewer | Gallery Viewer |
| Metrics | Metrics Viewer | Metrics Viewer |
| Report | Report Viewer | Report Viewer |

| halut_decoder | ASAP7 | NanGate45 |
|---|---|---|
| Area [μm^2] | 4749.8286 | 68923.7891 |
| Freq [MHz] | 666.7 | 333.3 |
| GE | 54.296 kGE | 86.37 kGE |
| Std Cell [#] | 33709 | 34395 |
| Voltage [V] | 0.77 | 1.1 |
| Util [%] | 44.4 | 58.9 |
| TNS | -11340.5098 | -0.66 |
| Clock Net | | |
| Gallery | Gallery Viewer | Gallery Viewer |
| Metrics | Metrics Viewer | Metrics Viewer |
| Report | Report Viewer | Report Viewer |
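
To make the GE and Freq rows easier to compare across the two PDKs, here is a small sketch that back-computes the area of one gate equivalent from the reported numbers and converts the target frequency into a clock period. It assumes that GE is the cell area divided by the area of a PDK-specific reference gate; the tables do not state which reference cell was used, so the helper names and that interpretation are assumptions for illustration. Under that assumption the reported pairs imply roughly 0.0875 μm² per GE for ASAP7 and 0.798 μm² per GE for NanGate45.

```python
# Area [um^2] and GE [kGE] values copied from the tables above.
REPORTED = {
    "halut_matmul": {"ASAP7": (9643.6787, 110.238), "NanGate45": (140647.7656, 176.25)},
    "halut_encoder_4": {"ASAP7": (4844.5405, 55.378), "NanGate45": (69711.9531, 87.358)},
    "halut_decoder": {"ASAP7": (4749.8286, 54.296), "NanGate45": (68923.7891, 86.37)},
}


def implied_ge_area(area_um2, kilo_ge):
    """Area of one gate equivalent in um^2 implied by an (area, kGE) pair.

    Assumption: GE = area / reference gate area (not confirmed by the tables).
    """
    return area_um2 / (kilo_ge * 1000.0)


def clock_period_ns(freq_mhz):
    """Clock period in nanoseconds for a frequency given in MHz."""
    return 1000.0 / freq_mhz


# Implied per-GE area for every design, grouped by PDK.
implied = {
    pdk: [implied_ge_area(*REPORTED[design][pdk]) for design in REPORTED]
    for pdk in ("ASAP7", "NanGate45")
}

for pdk, values in implied.items():
    print(pdk, [round(v, 5) for v in values])

print("ASAP7 period [ns]:", round(clock_period_ns(666.7), 3))
print("NanGate45 period [ns]:", round(clock_period_ns(333.3), 3))
```

The three designs give nearly the same per-GE area within each PDK, which is consistent with a single reference gate per PDK. It also shows that the large area gap between ASAP7 and NanGate45 comes mostly from the cell library rather than from the gate count.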

I am aware that there is still a lot that could be optimized here (warp etc.), but it was only developed for fast analysis.

Caveat: no retraining or fine-tuning has been done yet!

LeViT (Source)

SOTA Vision Transformer on ImageNet 1K

Legacy Classifier on ImageNet 1K

- Data visualizer

Be sure to select the ResNet-50 layers, which are named `layerX.X.convX`.

The goal was to find out how much offline training data is needed to get the maximum accuracy.

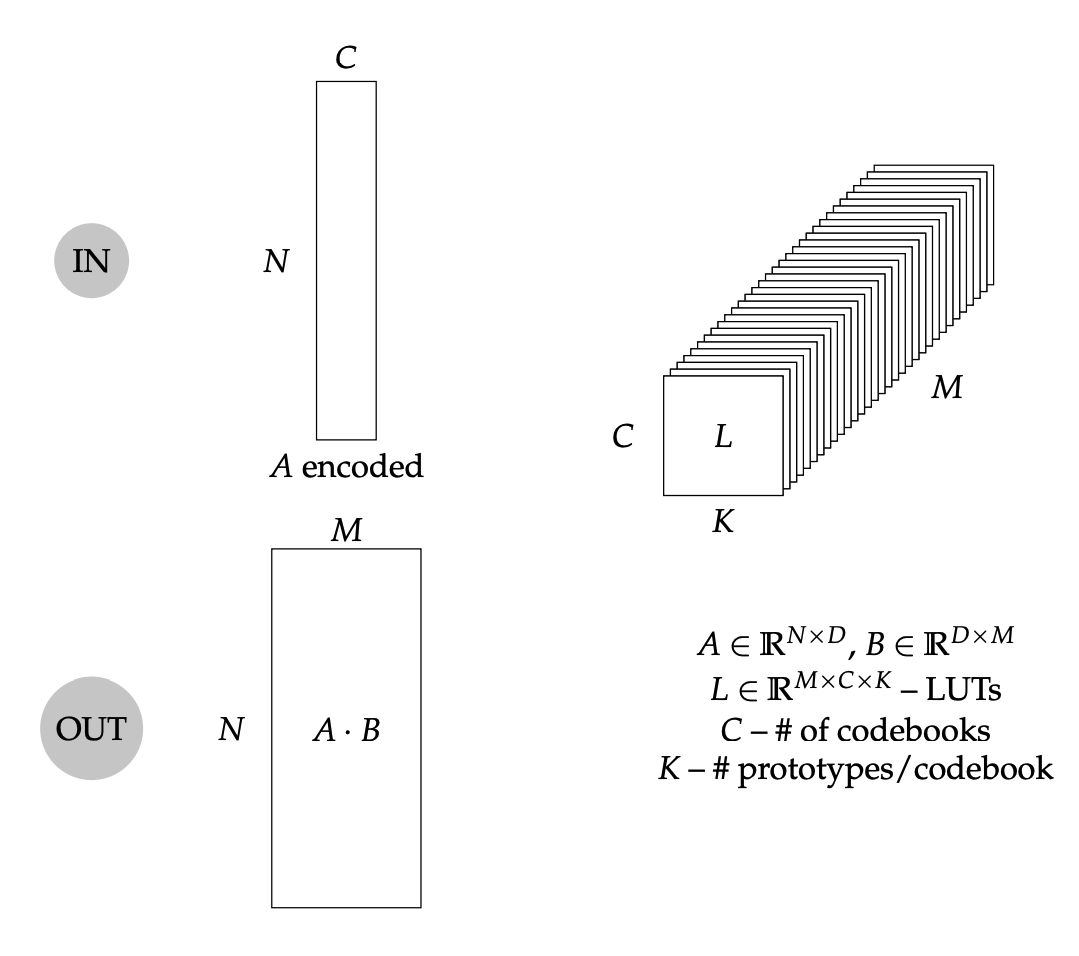

Some definitions about the forward path.