Body-Headline Latent Dirichlet Allocation

This is the course project for STATS2014: Foundations of Graphical Models, undertaken by Francois and me. The C++ code in GibbsSampler was written by Francois.

Motivation

The project aims to measure how relevant an article's headline is to its body, using an extension of Latent Dirichlet Allocation (LDA).

The internet is full of clickbait. This research aims to find articles whose catchy headlines have little relevance to their content. The idea is to infer topic distributions for the body and the headline separately and then measure how far apart they are using the Kullback-Leibler (KL) divergence.
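The comparison step can be sketched as follows. This is a minimal illustration, not code from GibbsSampler: it assumes the body and headline topic proportions of an article are available as vectors over the same K topics (if the model learns separate body and headline topics, this presumes they have been matched one-to-one). The name `kl_divergence` and the smoothing constant `eps` are hypothetical. Because KL divergence is asymmetric, the direction of the comparison (headline relative to body, or the reverse) is a modelling choice.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: D_KL(p || q) = sum_k p_k * log(p_k / q_k), in nats.
// Assumes p and q are topic distributions indexed over the same K topics.
// eps is added to every entry (then each vector is renormalized) so that
// empty topics do not produce log(0) or a division by zero.
double kl_divergence(const std::vector<double>& p,
                     const std::vector<double>& q,
                     double eps = 1e-10) {
    assert(!p.empty() && p.size() == q.size());
    double p_sum = 0.0, q_sum = 0.0;
    for (std::size_t k = 0; k < p.size(); ++k) {
        p_sum += p[k] + eps;
        q_sum += q[k] + eps;
    }
    double kl = 0.0;
    for (std::size_t k = 0; k < p.size(); ++k) {
        const double pk = (p[k] + eps) / p_sum;
        const double qk = (q[k] + eps) / q_sum;
        kl += pk * std::log(pk / qk);
    }
    return kl;
}
```

For example, with made-up proportions, `kl_divergence({0.5, 0.5}, {0.25, 0.75})` is about 0.144 nats, while swapping the arguments gives about 0.131, so the chosen direction should be fixed and reported alongside any results.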

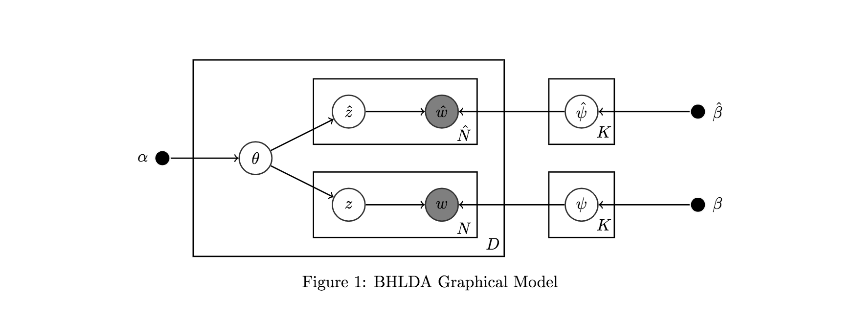

Graphical Model

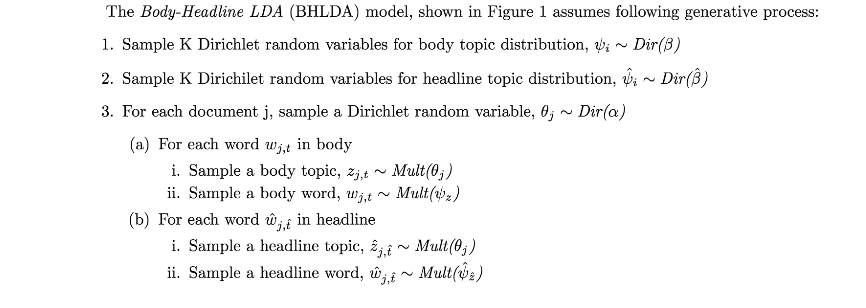

Generative Model

More Description

The model, results, and applications are discussed in more detail in the final paper.

Inference was performed with Gibbs sampling; the C++ implementation is in GibbsSampler.
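To illustrate the technique, here is the core update of a standard collapsed Gibbs sampler for plain LDA. This is a textbook sketch under stated assumptions, not the body-headline sampler in GibbsSampler: the extended model's conditionals differ, and the names `LdaCounts` and `resample_token`, along with the symmetric hyperparameters `alpha` and `beta`, are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Count tables for a standard collapsed Gibbs sampler for plain LDA.
// Hypothetical sketch: NOT the body-headline extension used in GibbsSampler.
struct LdaCounts {
    std::size_t K, V;                    // number of topics, vocabulary size
    std::vector<std::vector<int>> n_dk;  // n_dk[d][k]: tokens in doc d assigned to topic k
    std::vector<std::vector<int>> n_kw;  // n_kw[k][w]: times word w is assigned to topic k
    std::vector<int> n_k;                // n_k[k]: total tokens assigned to topic k
};

// Resample the topic of one token (word w in document d, currently topic z_old)
// from its full conditional, which is proportional to
//   (n_dk[d][k] + alpha) * (n_kw[k][w] + beta) / (n_k[k] + V * beta),
// where all counts exclude the token being resampled. Returns the new topic.
int resample_token(LdaCounts& c, std::size_t d, std::size_t w, int z_old,
                   double alpha, double beta, std::mt19937& rng) {
    assert(z_old >= 0 && static_cast<std::size_t>(z_old) < c.K);
    // Remove the token's current assignment from the counts.
    --c.n_dk[d][z_old];
    --c.n_kw[z_old][w];
    --c.n_k[z_old];

    // Unnormalized conditional probability for each topic.
    std::vector<double> weights(c.K);
    for (std::size_t k = 0; k < c.K; ++k) {
        weights[k] = (c.n_dk[d][k] + alpha) * (c.n_kw[k][w] + beta) /
                     (c.n_k[k] + c.V * beta);
    }
    // discrete_distribution normalizes the weights internally.
    std::discrete_distribution<int> pick(weights.begin(), weights.end());
    const int z_new = pick(rng);

    // Add the token back under its newly sampled topic.
    ++c.n_dk[d][z_new];
    ++c.n_kw[z_new][w];
    ++c.n_k[z_new];
    return z_new;
}
```

A full sweep calls `resample_token` once for every token in the corpus. After burn-in, the document-topic proportions for plain LDA can be estimated as (n_dk + alpha) / (n_d + K * alpha), where n_d is the number of tokens in document d.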

Dataset

The New York Times Annotated Corpus was used for the project.

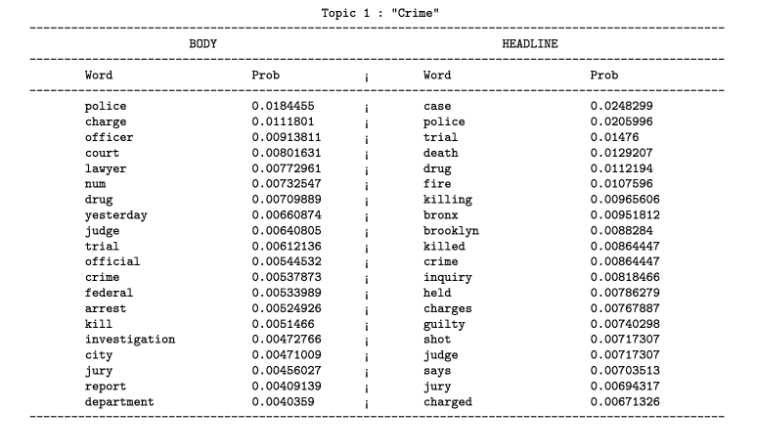

Result Snapshot

The snapshot below shows the word distributions for a body topic and a headline topic. These distributions were matched manually and labeled with a suitable category.