Implementation of the paper: https://arxiv.org/abs/1702.02285

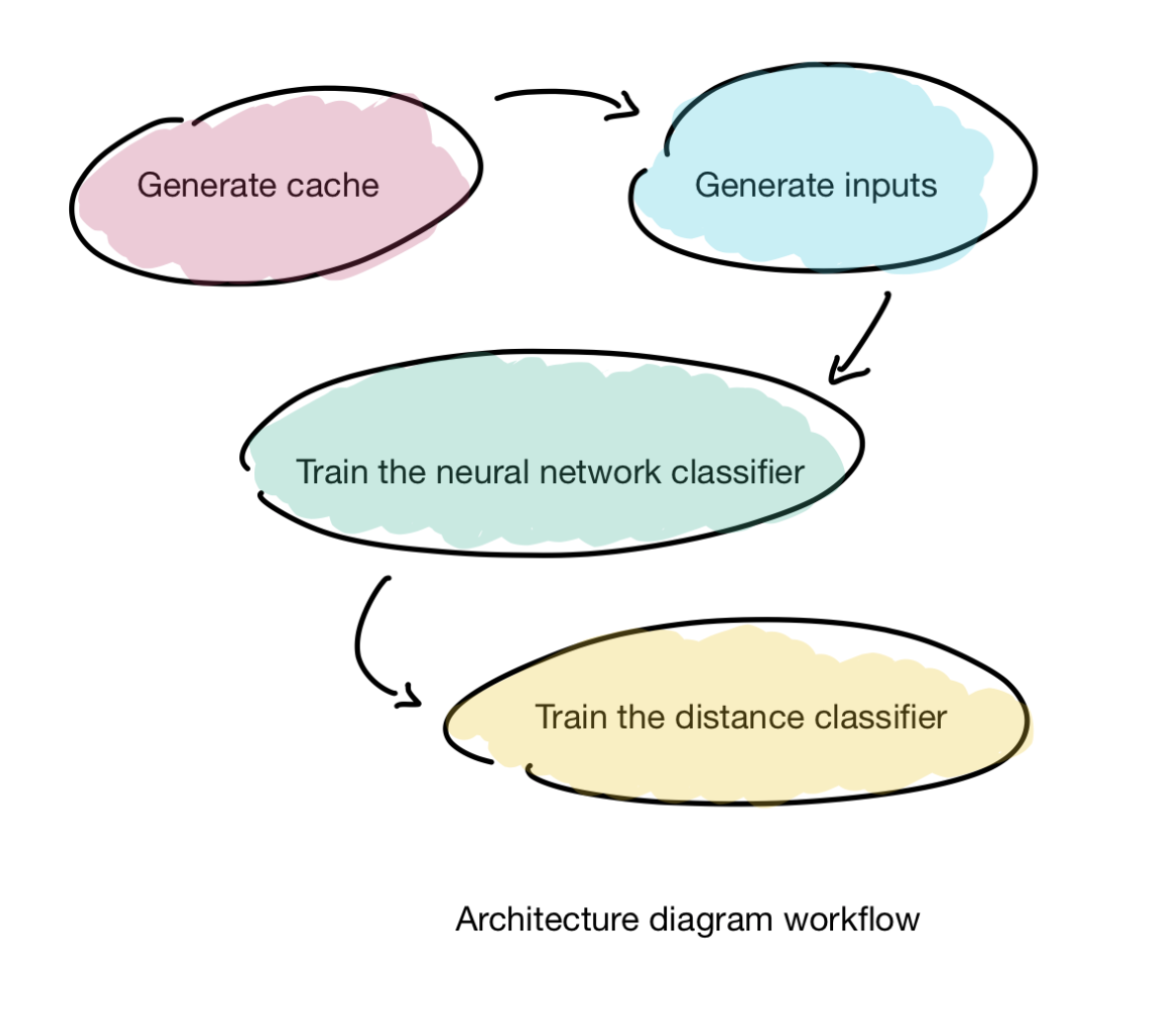

The mechanism proposed here is for real-time speaker change detection in conversations. It first trains a text-independent neural network speaker classifier using in-domain speaker data.
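The detection step can be illustrated with a minimal sketch. It assumes the trained speaker classifier has already turned each short audio window into a score vector (one score per in-domain speaker), and it flags a change whenever the cosine distance between two consecutive windows exceeds a fixed threshold. The function names, the input format, the cosine distance and the fixed threshold are illustrative assumptions, not this repository's code. Judging by its name, `3_train_distance_classifier.py` trains a dedicated distance classifier instead.

```python
import math
from typing import List, Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity; returns 1.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)


def detect_speaker_changes(window_scores: Sequence[Sequence[float]], threshold: float) -> List[int]:
    """Return every index i where window i looks like a different speaker than window i - 1.

    window_scores: one classifier output vector per audio window (hypothetical input format).
    threshold: distance above which a change is declared (illustrative value, must be tuned).
    """
    changes = []
    for i in range(1, len(window_scores)):
        if cosine_distance(window_scores[i - 1], window_scores[i]) > threshold:
            changes.append(i)
    return changes


if __name__ == "__main__":
    # Toy scores over 3 in-domain speakers: windows 0-1 favour speaker 0, windows 2-3 favour speaker 2.
    scores = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.0], [0.1, 0.1, 0.8], [0.0, 0.2, 0.8]]
    print(detect_speaker_changes(scores, threshold=0.5))  # [2]
```

Because only the previous window is needed, this kind of check can run online as new windows arrive.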

The accuracy is very high and close to 100%, as reported in the paper.

Because generating the cache and the inputs takes a very long time, I packaged them and uploaded them here:

- Cache uploaded at cache-speaker-change-detection.zip (unzip it in `/tmp/`)
- speaker-change-detection-data.pkl (place it in `/tmp/`)
- speaker-change-detection-norm.pkl (place it in `/tmp/`)

You should then have:

- `/tmp/speaker-change-detection-data.pkl`
- `/tmp/speaker-change-detection-norm.pkl`
- `/tmp/speaker-change-detection/*.pkl`
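Before running the scripts, you can check that the downloaded files are where the code expects them. This is a minimal sketch that checks only the paths listed above; the helper name `missing_inputs` is hypothetical and not part of the repository.

```python
import glob
import os
from typing import List


def missing_inputs(root: str = "/tmp") -> List[str]:
    """Return the expected input/cache paths that are missing under `root`."""
    missing = []
    # The two pickled files must exist directly under root.
    for name in ("speaker-change-detection-data.pkl", "speaker-change-detection-norm.pkl"):
        path = os.path.join(root, name)
        if not os.path.isfile(path):
            missing.append(path)
    # The unzipped cache directory must contain at least one .pkl file.
    cache_pattern = os.path.join(root, "speaker-change-detection", "*.pkl")
    if not glob.glob(cache_pattern):
        missing.append(cache_pattern)
    return missing


if __name__ == "__main__":
    problems = missing_inputs()
    if problems:
        print("Missing:", *problems, sep="\n  ")
    else:
        print("All inputs found.")
```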

The final plots are saved as `/tmp/distance_test_ID.png`, where `ID` is the ID of the plot.

Make sure you have enough free space in `/tmp/`, as you might run out of disk space there. If that happens, you can replace all the `/tmp/` references in the codebase with any folder of your choice.
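Replacing the `/tmp/` references by hand is error-prone, so a small script can do it. This is a minimal sketch, assuming the references appear as the literal string `/tmp/` inside `.py` files; paths built differently (for example `os.path.join('/tmp', ...)`) are not caught, so review the result. The function name `replace_tmp_references` and the target folder are hypothetical.

```python
import os
from typing import List


def replace_tmp_references(repo_root: str, new_dir: str, dry_run: bool = True) -> List[str]:
    """List (and, unless dry_run, rewrite) .py files under repo_root that contain '/tmp/'."""
    new_prefix = new_dir.rstrip("/") + "/"
    changed = []
    for dirpath, dirnames, filenames in os.walk(repo_root):
        # Do not descend into the virtualenv or the git metadata.
        dirnames[:] = [d for d in dirnames if d not in ("venv", ".git")]
        for filename in filenames:
            if not filename.endswith(".py"):
                continue
            path = os.path.join(dirpath, filename)
            with open(path, encoding="utf-8") as f:
                source = f.read()
            if "/tmp/" not in source:
                continue
            changed.append(path)
            if not dry_run:
                with open(path, "w", encoding="utf-8") as f:
                    f.write(source.replace("/tmp/", new_prefix))
    return sorted(changed)


if __name__ == "__main__":
    # Dry run first: only prints the files that would be modified.
    for p in replace_tmp_references(".", "/data/speaker-change-detection-tmp"):
        print(p)
```

Run it with the default `dry_run=True` first, then again with `dry_run=False` once the list of files looks right.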

Now run these commands to reproduce the results:

    git clone git@github.com:philipperemy/speaker-change-detection.git
    cd speaker-change-detection
    virtualenv -p python3.6 venv  # probably works with any Python 3 implementation
    source venv/bin/activate
    pip install -r requirements.txt
    # download the cache and all the files listed above (or regenerate them yourself if you wish)
    cd ml/
    export PYTHONPATH=..:$PYTHONPATH; python 1_generate_inputs.py
    export PYTHONPATH=..:$PYTHONPATH; python 2_train_classifier.py
    export PYTHONPATH=..:$PYTHONPATH; python 3_train_distance_classifier.py

To regenerate only the VCTK cache, run (from the repository root):

    cd audio/
    export PYTHONPATH=..:$PYTHONPATH; python generate_all_cache.py

Contributions are welcome! Some ways to improve this project:

- Given any audio file, is it possible to test it and detect any speaker change?

Frequently asked questions:

- Given any audio file, is it possible to test it and detect any speaker change? Yes, as long as it follows the same structure as the VCTK Corpus dataset.
- Is there any way to use the trained model to detect speaker changes in our own audio files? Yes, it's possible, but it's going to be a bit difficult. You would probably have to choose a dataset and convert it to the VCTK format.
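Converting your own recordings to the VCTK format is mostly a matter of directory layout. This is a minimal sketch, assuming the code only needs the VCTK-style `wav48/<speaker>/<speaker>_<NNN>.wav` layout; check what the scripts in `audio/` actually read (for example whether they also expect the `txt/` transcripts) before relying on it. The function name `to_vctk_layout` and the speaker IDs are hypothetical. It copies files as-is and does not resample: in the original corpus `wav48` holds 48 kHz audio, so you may need to convert your files to a matching sample rate first.

```python
import os
import shutil
from typing import Dict, List


def to_vctk_layout(speaker_files: Dict[str, List[str]], out_root: str) -> List[str]:
    """Copy each speaker's .wav files to out_root/wav48/<speaker>/<speaker>_<NNN>.wav."""
    written = []
    for speaker, files in sorted(speaker_files.items()):
        speaker_dir = os.path.join(out_root, "wav48", speaker)
        os.makedirs(speaker_dir, exist_ok=True)
        # Number utterances 001, 002, ... in sorted path order.
        for i, src in enumerate(sorted(files), start=1):
            dst = os.path.join(speaker_dir, "{}_{:03d}.wav".format(speaker, i))
            shutil.copyfile(src, dst)
            written.append(dst)
    return written


if __name__ == "__main__":
    # Hypothetical input: two speakers with their recordings.
    mapping = {"p900": ["rec/alice_1.wav", "rec/alice_2.wav"], "p901": ["rec/bob_1.wav"]}
    for path in to_vctk_layout(mapping, "my-vctk-corpus"):
        print(path)
```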