Bilinear interpolation behavior inconsistent with TF, CoreML and Caffe

libfun opened this issue · 7 comments

Issue description

While comparing and transferring models between Caffe, TF and PyTorch, I found differences in the output of bilinear interpolation across all three. Caffe uses depthwise transposed convolutions instead of a straightforward resize, so its behavior is easy to reimplement in both TF and PyTorch.
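For reference, here is a minimal 1-D sketch of that transposed-convolution approach. It assumes the commonly documented Caffe setup (a "bilinear" weight filler with kernel size `2 * factor - factor % 2`, stride `factor` and padding `ceil((factor - 1) / 2)`); the function names are hypothetical and the code is plain Python rather than any framework's API.

```python
import math


def bilinear_kernel_1d(factor):
    """1-D weights in the style of Caffe's "bilinear" weight filler (assumed formula)."""
    k = 2 * factor - factor % 2            # kernel size for this upsampling factor
    f = math.ceil(k / 2.0)
    c = (2 * f - 1 - f % 2) / (2.0 * f)
    return [1.0 - abs(x / f - c) for x in range(k)]


def upsample_1d(signal, factor):
    """Transposed convolution with stride=factor, then crop the padding."""
    kernel = bilinear_kernel_1d(factor)
    pad = math.ceil((factor - 1) / 2.0)
    full = [0.0] * ((len(signal) - 1) * factor + len(kernel))
    for i, v in enumerate(signal):
        for j, w in enumerate(kernel):
            full[i * factor + j] += v * w   # scatter each input sample
    return full[pad:len(full) - pad]


print(bilinear_kernel_1d(2))               # [0.25, 0.75, 0.75, 0.25]
print(upsample_1d([0.0, 1.0, 2.0], 2))     # [0.0, 0.25, 0.75, 1.25, 1.75, 1.5]
```

In this sketch the interior samples land on quarter-pixel positions, while the last border sample is attenuated (1.5 instead of 2.0) because the transposed convolution implicitly pads with zeros.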

However, the outputs of TF and PyTorch also differ with align_corners=False, which is the default for both.

Code example

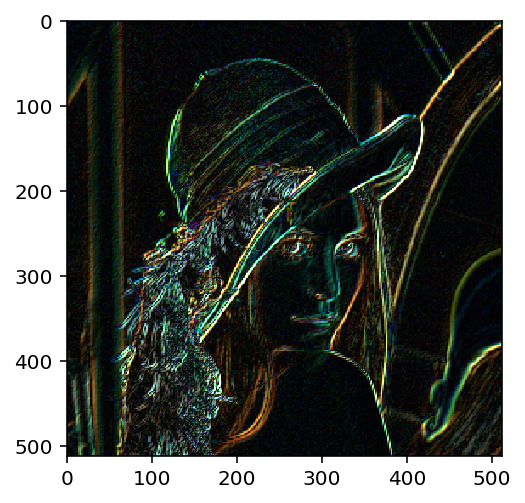

    import cv2
    import numpy as np
    import tensorflow as tf
    import torch
    from torch import nn

    # Load as RGB, resize to 256x256 and scale to [0, 1] (NHWC layout for TF).
    nimg = cv2.resize(cv2.imread('./lenna.png')[:, :, ::-1], (256, 256))
    nimg = nimg.reshape(1, 256, 256, 3).astype('float32') / 255.

    img = tf.convert_to_tensor(nimg)
    output_size = [512, 512]
    output = tf.image.resize_bilinear(img, output_size, align_corners=True)
    with tf.Session() as sess:
        values = sess.run([output])
    out_tf = values[0].astype('float32')[0]

    # PyTorch expects NCHW; reuse the already-normalized NumPy array.
    nimg_pt = np.ascontiguousarray(nimg.transpose(0, 3, 1, 2))
    out_pt = nn.functional.interpolate(torch.from_numpy(nimg_pt),
                                       scale_factor=2,
                                       mode='bilinear',
                                       align_corners=True)
    out_pt = out_pt.data.numpy().transpose(0, 2, 3, 1)[0]

    print(np.max(np.abs(out_pt - out_tf)))
    # output 5.6624413e-06

But

    # Same comparison with align_corners=False.
    nimg = cv2.resize(cv2.imread('./lenna.png')[:, :, ::-1], (256, 256))
    nimg = nimg.reshape(1, 256, 256, 3).astype('float32') / 255.

    img = tf.convert_to_tensor(nimg)
    output_size = [512, 512]
    output = tf.image.resize_bilinear(img, output_size, align_corners=False)
    with tf.Session() as sess:
        values = sess.run([output])
    out_tf = values[0].astype('float32')[0]

    # PyTorch expects NCHW; reuse the already-normalized NumPy array.
    nimg_pt = np.ascontiguousarray(nimg.transpose(0, 3, 1, 2))
    out_pt = nn.functional.interpolate(torch.from_numpy(nimg_pt),
                                       scale_factor=2,
                                       mode='bilinear',
                                       align_corners=False)
    out_pt = out_pt.data.numpy().transpose(0, 2, 3, 1)[0]

    print(np.max(np.abs(out_pt - out_tf)))
    # output 0.22745097

The output of CoreML is consistent with TF, so it seems that there is a bug in PyTorch's implementation of bilinear interpolation with align_corners=False.

The difference is reproducible on both CPU and CUDA (cuDNN 7.1, CUDA 9.1).

Thanks for the report. We will look into this!

I've run a few experiments and it seems that PyTorch with align_corners=False is consistent with python-opencv, PIL and skimage with mode='edge'. I'll look into CoreML more closely today.

For the PyTorch implementation, here's the logic that computes the src_idx <-> dst_idx mapping:

https://github.com/pytorch/pytorch/blob/master/aten/src/THNN/generic/linear_upsampling.h#L22

For the TF implementation, the scale is calculated in the same way: https://github.com/tensorflow/tensorflow/blob/f66daa493e7383052b2b44def2933f61faf196e0/tensorflow/core/kernels/image_resizer_state.h#L41

But the src_idx <-> dst_idx mapping is different: https://github.com/tensorflow/tensorflow/blob/6795a8c3a3678fb805b6a8ba806af77ddfe61628/tensorflow/core/kernels/resize_bilinear_op.cc#L85

I think PyTorch takes the pixel center into account when scaling, while TF doesn't.
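To make the difference concrete, the two mappings can be written side by side. This is a minimal sketch based on my reading of the linked kernels for align_corners=False; the function names are hypothetical, and the clamp at zero reflects what the PyTorch code appears to do for bilinear mode.

```python
def torch_src_index(dst, in_size, out_size):
    """Source coordinate using pixel centers (PyTorch, align_corners=False)."""
    scale = in_size / out_size
    return max((dst + 0.5) * scale - 0.5, 0.0)


def tf_legacy_src_index(dst, in_size, out_size):
    """Source coordinate without the half-pixel shift (linked TF kernel)."""
    scale = in_size / out_size
    return dst * scale


# Upsampling 2 -> 4 pixels: the scale is 0.5 in both cases, the offsets differ.
for dst in range(4):
    print(dst, torch_src_index(dst, 2, 4), tf_legacy_src_index(dst, 2, 4))
# 0 0.0 0.0
# 1 0.25 0.5
# 2 0.75 1.0
# 3 1.25 1.5
```

Coordinates past the last source pixel are handled later, when the neighbor indices are clamped during interpolation.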

So do you think there is any workaround in PyTorch to make its interpolation consistent with TensorFlow's?

I thought it might be possible to use torch.nn.functional.grid_sample().

Using half_pixel_centers=True together with align_corners=False in tf.image.resize_bilinear gives the same result as torch.nn.functional.interpolate with align_corners=False.
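A small 1-D reference makes the effect of that flag visible without installing either framework. This is only a sketch in plain Python under the assumption that both libraries follow the coordinate conventions discussed in this thread; `resize_linear_1d` is a hypothetical helper, not an API of TF or PyTorch.

```python
import math


def resize_linear_1d(src, out_size, half_pixel_centers):
    """Linear resize with align_corners=False under either convention."""
    in_size = len(src)
    scale = in_size / out_size
    out = []
    for dst in range(out_size):
        if half_pixel_centers:
            x = max((dst + 0.5) * scale - 0.5, 0.0)   # pixel-center mapping
        else:
            x = dst * scale                           # legacy mapping
        lo = min(int(math.floor(x)), in_size - 1)
        hi = min(lo + 1, in_size - 1)                 # clamp at the last pixel
        frac = x - lo
        out.append(src[lo] * (1.0 - frac) + src[hi] * frac)
    return out


row = [0.0, 1.0]
print(resize_linear_1d(row, 4, half_pixel_centers=True))    # [0.0, 0.25, 0.75, 1.0]
print(resize_linear_1d(row, 4, half_pixel_centers=False))   # [0.0, 0.5, 1.0, 1.0]
```

The first row is what the pixel-center convention produces for a 2x upsample of `[0, 1]`; the second is the legacy behavior, where the last samples collapse onto the final source pixel.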

This is a PyTorch implementation of the tf.compat.v1.image.resize_bilinear function.

It is consistent with tf.compat.v1.image.resize_bilinear when half_pixel_centers=False and align_corners=False.

The default implementation in PyTorch is consistent with the TensorFlow implementation when half_pixel_centers=True.

    import torch

    def tf_consistent_bilinear_upsample(imgs, scale_factor=1.0):
        b, c, h, w = imgs.shape
        assert h == w
        N = int(h * scale_factor)
        delta = 1.0 / h
        p = int(scale_factor) - 1
        # Normalized coordinates of source pixels 0 .. h-1, sampled every 1/scale_factor.
        xs = torch.linspace(-1.0 + delta, 1.0 - delta, N - p)
        ys = torch.linspace(-1.0 + delta, 1.0 - delta, N - p)
        # 'ij' is the historical default; the keyword needs a recent PyTorch release.
        grid = torch.meshgrid(xs, ys, indexing='ij')
        gridy = grid[1]
        gridx = grid[0]
        # Repeat the last coordinate p times, where TF clamps to the last pixel.
        # A 4-D input keeps replicate padding working across PyTorch versions.
        gridx = torch.nn.functional.pad(gridx[None, None], (0, p, 0, p), mode='replicate')[0, 0]
        gridy = torch.nn.functional.pad(gridy[None, None], (0, p, 0, p), mode='replicate')[0, 0]
        grid = torch.stack([gridy, gridx], dim=-1).unsqueeze(0).repeat(b, 1, 1, 1)
        # The grid above assumes the align_corners=False coordinate convention.
        output = torch.nn.functional.grid_sample(imgs, grid.to(imgs), mode='bilinear',
                                                 padding_mode='zeros', align_corners=False)
        return output

The code is tested and the results are consistent with TensorFlow when the scale factor is an integer value.

This is the correct solution. I tested the result in one dimension, and the error is less than 0.01.
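The 1-D case is also a convenient way to see why the grid construction works for integer scale factors. The sketch below is plain Python with hypothetical helper names, and it assumes the align_corners=False convention of grid_sample (a pixel coordinate x maps to the normalized value `(2 * x + 1) / size - 1`). It compares the evenly spaced grid plus replicated tail against the legacy mapping `dst / scale_factor` clamped to the last pixel.

```python
def legacy_src_coords(in_size, scale_factor):
    """Legacy-TF source coordinates, clamped to the last source pixel."""
    out_size = in_size * scale_factor
    return [min(dst / scale_factor, in_size - 1) for dst in range(out_size)]


def grid_src_coords(in_size, scale_factor):
    """N - p evenly spaced coordinates from 0 to in_size - 1, plus p replicated ones."""
    out_size = in_size * scale_factor
    p = scale_factor - 1
    n = out_size - p
    step = (in_size - 1) / (n - 1)
    coords = [i * step for i in range(n)]
    return coords + [coords[-1]] * p


def to_normalized(x, size):
    """Pixel coordinate -> normalized coordinate (align_corners=False)."""
    return (2.0 * x + 1.0) / size - 1.0


print(legacy_src_coords(4, 2))                   # [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3]
print(grid_src_coords(4, 2))                     # [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.0]
print(to_normalized(0, 4), to_normalized(3, 4))  # -0.75 0.75
```

The endpoints -0.75 and 0.75 are `-1 + delta` and `1 - delta` for `delta = 1 / 4`, matching the linspace bounds used in the function above.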