Official implementation of the Efficient Spiking Variational Autoencoder (ESVAE).

arXiv: https://arxiv.org/abs/2310.14839

- Install dependencies

pip install -r requirements.txt

- Initialize the FID stats

python init_fid_stats.py

The following command calculates the Inception Score and FID of an ESVAE model trained on CelebA, then writes demo_input_esvae.png, demo_recons_esvae.png, and demo_sample_esvae.png.

python demo_esvae.py

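To train ESVAE, run the following command, replacing exp_name and dataset_name with your own values:

python main_esvae.py exp_name -config NetworkConfigs/esvae/dataset_name.yaml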

Training settings are defined in NetworkConfigs/esvae/*.yaml.

args:

- name: [required] experiment name

- config: [required] config file path

- checkpoint: checkpoint path (only needed when starting from a pretrained model)

- device: GPU device ID (default: 0)
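
The arguments above map naturally onto an `argparse` parser. The following is a minimal sketch under that assumption, not the repository's actual code: the function name `build_parser` is hypothetical, and the argument types (string for `-checkpoint`, integer for `-device`) are guesses based on the descriptions.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the documented training arguments."""
    parser = argparse.ArgumentParser(description="Train ESVAE (illustrative sketch)")
    # Positional experiment name (required).
    parser.add_argument("name", type=str, help="experiment name")
    # Single-dash long options, matching the `-config` spelling used in the command.
    parser.add_argument("-config", type=str, required=True, help="config file path")
    # Assumed types: a path string for the checkpoint, an integer for the GPU id.
    parser.add_argument("-checkpoint", type=str, default=None,
                        help="checkpoint path (only for a pretrained model)")
    parser.add_argument("-device", type=int, default=0, help="GPU device id")
    return parser


# Parse an explicit argument list instead of sys.argv so the sketch runs anywhere.
args = build_parser().parse_args(
    ["exp_name", "-config", "NetworkConfigs/esvae/dataset_name.yaml"]
)
print(args.name, args.config, args.checkpoint, args.device)
```

Omitting `-checkpoint` and `-device` falls back to the documented defaults (no checkpoint, GPU 0).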

You can monitor the training logs with the following command and then open http://localhost:8009/ in a browser.

tensorboard --logdir checkpoint --bind_all --port 8009

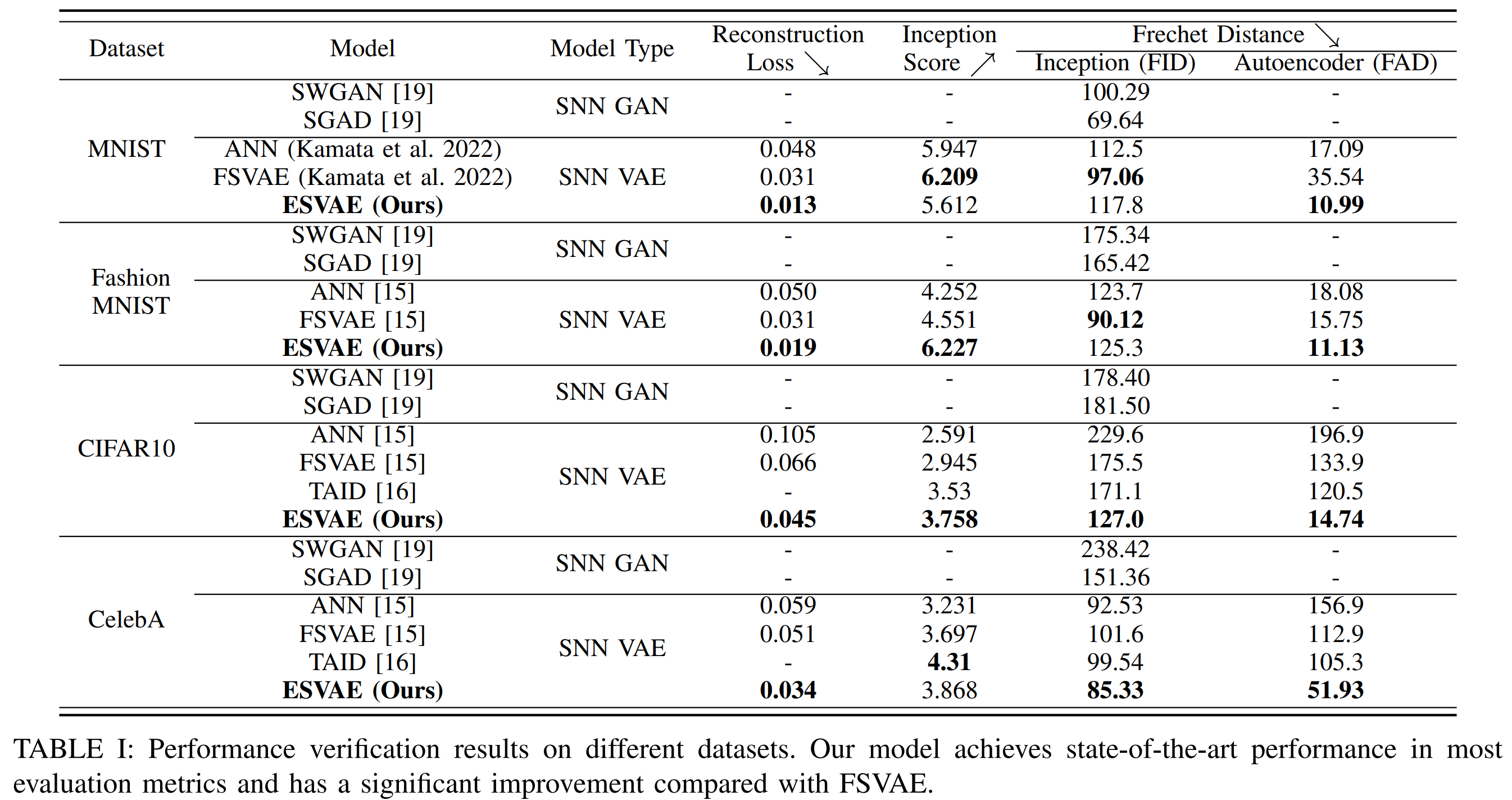

For comparison, we also provide vanilla VAEs with the same network architecture, built as conventional ANNs and trained with the same settings.

python main_ann_vae.py exp_name -dataset dataset_name

args:

- name: [required] experiment name

- dataset: [required] dataset name, one of mnist, fashion, celeba, cifar10

- batch_size: default 250

- latent_dim: default 128

- checkpoint: checkpoint path (only needed when starting from a pretrained model)

- device: GPU device ID (default: 0)
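
To train the baseline on every supported dataset, the commands can be generated in a loop. This is a minimal sketch with several assumptions: the script is invoked as `main_ann_vae.py`, the optional arguments use the same single-dash style as `-dataset`, and the helper `ann_vae_command` and its experiment-name pattern are hypothetical.

```python
import shlex

# Dataset names listed in the arguments above.
DATASETS = ["mnist", "fashion", "celeba", "cifar10"]


def ann_vae_command(dataset, batch_size=250, latent_dim=128, device=0,
                    script="main_ann_vae.py"):
    """Build the argv list for one baseline run (hypothetical helper)."""
    if dataset not in DATASETS:
        raise ValueError(f"unknown dataset: {dataset!r}")
    return [
        "python", script,
        f"ann_vae_{dataset}",            # experiment name; the pattern is an assumption
        "-dataset", dataset,
        "-batch_size", str(batch_size),  # flag spelling assumed from the argument list
        "-latent_dim", str(latent_dim),
        "-device", str(device),
    ]


for dataset in DATASETS:
    # Only prints the command; pass the list to subprocess.run(...) to launch it.
    print(shlex.join(ann_vae_command(dataset)))
```

Building the command as a list keeps each argument separate, so it can be handed to `subprocess.run` without shell quoting concerns.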