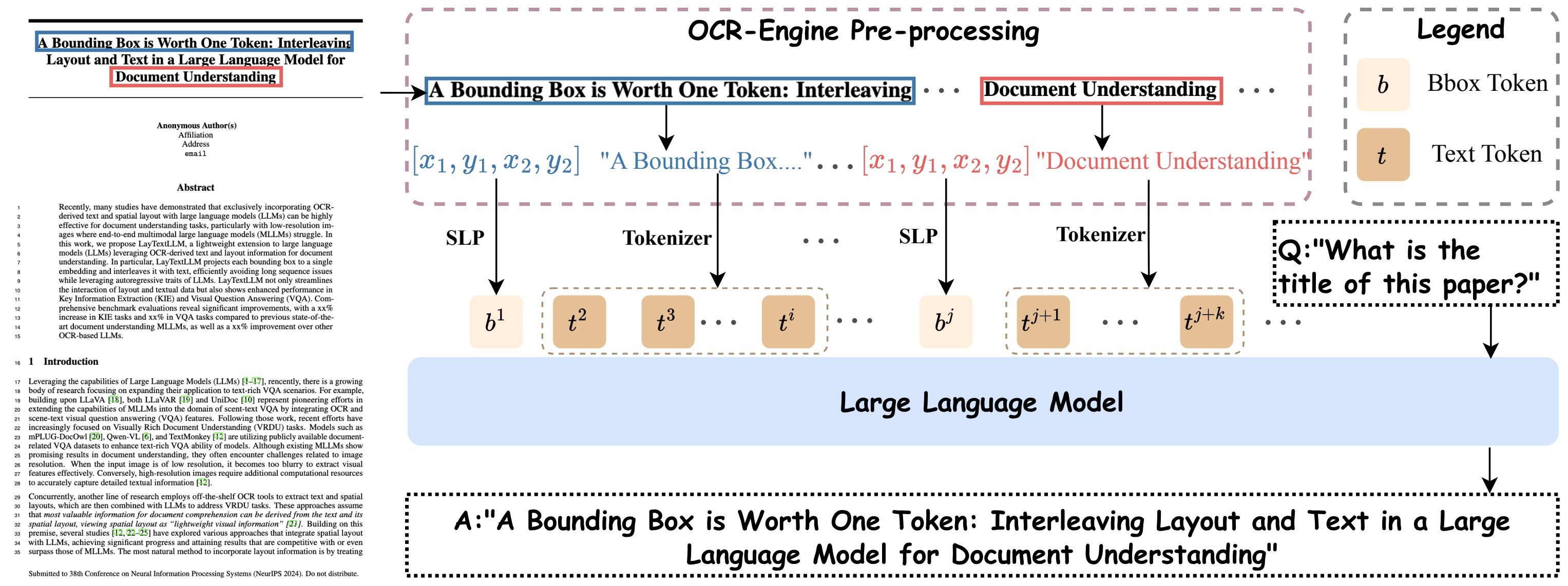

A Bounding Box is Worth One Token: Interleaving Layout and Text in a Large Language Model for Document Understanding

LayTextLLM projects each bounding box to a single embedding and interleaves it with text, efficiently avoiding long-sequence issues while leveraging the autoregressive traits of LLMs. LayTextLLM not only streamlines the interaction between layout and textual data but also improves performance on Key Information Extraction (KIE) and Visual Question Answering (VQA).
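To make the "one bounding box, one token" idea concrete, here is a minimal pure-Python sketch of the interleaving step. It is not the repository's implementation: the linear projection, the normalized `[x1, y1, x2, y2]` box format, the choice to place the box embedding before its text segment, and the names `make_projector`, `project_box`, and `interleave` are all assumptions made for illustration.

```python
import random


def make_projector(hidden_size, seed=0):
    """Hypothetical linear layer: 4 box coordinates -> hidden_size values.

    Weights are random here; in a real model they would be learned.
    """
    rng = random.Random(seed)
    weight = [[rng.uniform(-0.1, 0.1) for _ in range(4)] for _ in range(hidden_size)]
    bias = [0.0] * hidden_size
    return weight, bias


def project_box(box, projector):
    """Map one box [x1, y1, x2, y2] to a single embedding vector."""
    weight, bias = projector
    return [sum(w * c for w, c in zip(row, box)) + b for row, b in zip(weight, bias)]


def interleave(segments, projector):
    """Build the input sequence: one box embedding, then that segment's text embeddings."""
    sequence = []
    for box, token_embeddings in segments:
        sequence.append(project_box(box, projector))  # layout costs exactly one position
        sequence.extend(token_embeddings)             # text tokens follow their box
    return sequence


hidden_size = 8
projector = make_projector(hidden_size)

# Two OCR segments with placeholder text-token embeddings (3 and 2 tokens).
segments = [
    ([0.10, 0.05, 0.40, 0.08], [[0.0] * hidden_size for _ in range(3)]),
    ([0.10, 0.12, 0.30, 0.15], [[0.0] * hidden_size for _ in range(2)]),
]

sequence = interleave(segments, projector)
print(len(sequence))  # 2 box embeddings + 5 text-token embeddings = 7
```

Under these assumptions each text segment adds exactly one extra position for its layout, whereas writing the four coordinates out as text would add several tokens per box.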

| Model | DocVQA | VisualMRC | VQA Avg | FUNSD | CORD | SROIE | KIE Avg |
|---|---|---|---|---|---|---|---|
| **Text** | | | | | | | |
| Llama2-7B-base | 34.0 | 182.7 | 108.3 | 25.6 | 51.9 | 43.4 | 40.3 |
| Llama2-7B-chat | 20.5 | 6.3 | 13.4 | 23.4 | 51.8 | 58.6 | 44.6 |
| **Text + Polys** | | | | | | | |
| Llama2-7B-base<sub>coor</sub> | 8.4 | 3.8 | 6.1 | 6.0 | 46.4 | 34.7 | 29.0 |
| Llama2-7B-chat<sub>coor</sub> | 12.3 | 28.0 | 20.1 | 14.4 | 38.1 | 50.6 | 34.3 |
| Davinci-003-175B<sub>coor</sub> | - | - | - | - | 92.6 | 95.8 | - |
| DocLLM [2] | 69.5* | 264.1* | 166.8 | 51.8* | 67.6* | 91.9* | 70.3 |
| LayTextLLM<sub>zero</sub> (Ours) | 65.5 | 200.2 | 132.9 | 47.2 | 77.2 | 83.7 | 69.4 |
| LayTextLLM<sub>vqa</sub> (Ours) | 75.6* | 179.5 | 127.6 | 52.6 | 70.7 | 79.3 | 67.5 |
| LayTextLLM<sub>all</sub> (Ours) | 77.2* | 277.8* | 177.6 | 64.0* | 96.5* | 95.8* | 85.4 |

Comparison with other OCR-based methods. * indicates the training set used. The Document-Oriented VQA columns (DocVQA, VisualMRC, VQA Avg) report ANLS % / CIDEr; the KIE columns (FUNSD, CORD, SROIE, KIE Avg) report F-score %.

Run the bash script that corresponds to the target dataset to perform inference:

bash /run/run_*.sh

More dataset files can be accessed at Google Drive