- Training on the CIFAR-100 dataset:

$ sh sv.sh

- We recommend Python 3.6 as the base Python environment. The other dependencies are listed in requirements.txt and can be installed with:

$ pip install -r requirements.txt

- Please download the MAE buffer used for training and, once downloaded, place it in the root folder of the repository.

- We build on the DyTox codebase so that some of our data processing and evaluation metrics are aligned with theirs. Thanks for their wonderful work!
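Because the setup notes above recommend Python 3.6, it can help to confirm the interpreter version before running pip. The following is a minimal POSIX shell sketch, not part of this repository; `version_matches` and `python_version` are hypothetical helper names introduced only for illustration.

```shell
#!/bin/sh
# Hypothetical helpers (not shipped with this repository) for checking that
# an interpreter matches the recommended Python 3.6 before installing.

# version_matches FULL PREFIX
# Succeeds when FULL equals PREFIX or starts with "PREFIX.", so "3.6.15"
# matches "3.6" while "3.60.1" and "3.7.0" do not.
version_matches() {
  case "$1" in
    "$2" | "$2".*) return 0 ;;
    *) return 1 ;;
  esac
}

# python_version CMD
# Prints the major.minor.micro version reported by interpreter CMD, e.g. "3.6.15".
python_version() {
  "$1" -c 'import sys; print("%d.%d.%d" % sys.version_info[:3])'
}
```

For example, `version_matches "$(python_version python3.6)" 3.6 && pip install -r requirements.txt` installs the dependencies only when `python3.6` reports a 3.6.x version. The interpreter name `python3.6` is an assumption about your system. Matching on the "PREFIX." boundary rather than a plain string prefix avoids accepting a hypothetical 3.60 release as 3.6.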

If you use this code for your research, please consider citing:

@inproceedings{zhai2023masked,

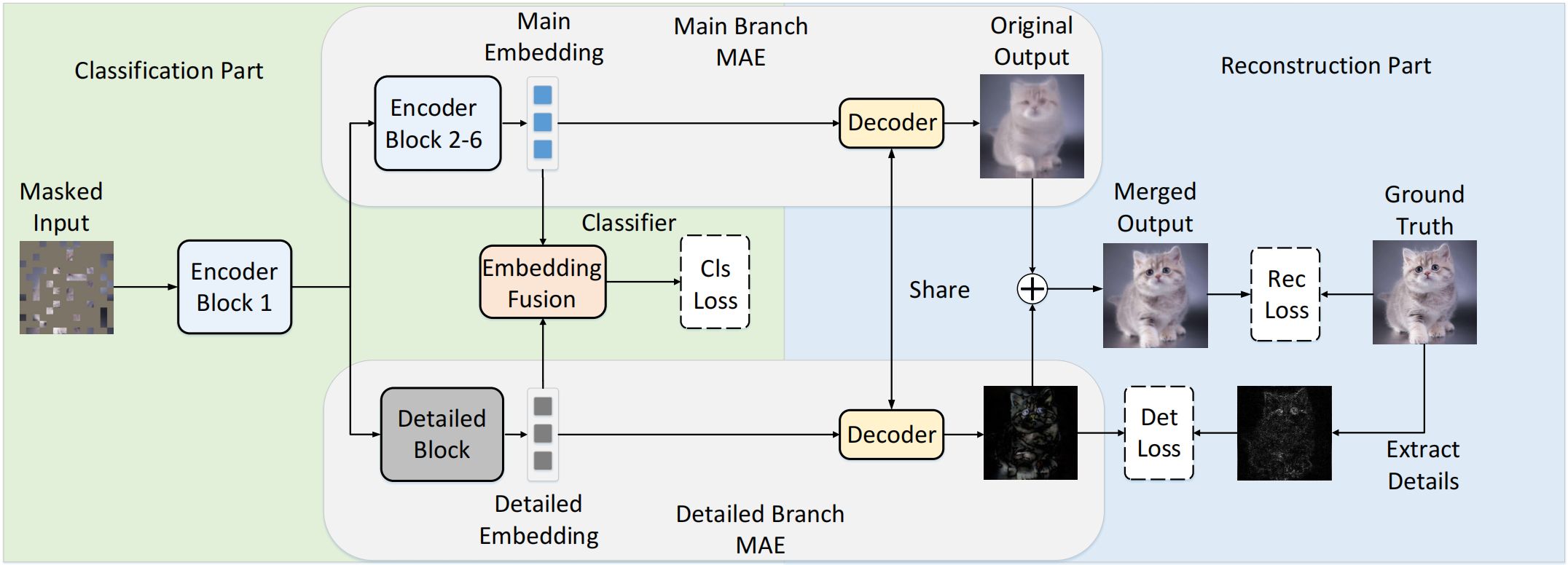

title={Masked autoencoders are efficient class incremental learners},

author={Zhai, Jiang-Tian and Liu, Xialei and Bagdanov, Andrew D and Li, Ke and Cheng, Ming-Ming},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

pages={19104--19113},

year={2023}

}