A Chinese character recognition repository with TensorRT engine support, based on CRNN_Chinese_Characters_Rec and TensorRTx.

| Item | Performance |
|---|---|
| Time | -65% |
| GPU memory | -25% |

- Ubuntu 18.04

- Python 3.7

- PyTorch 1.7.1

- CUDA 10.0, cuDNN 7.6.5

- yaml, easydict, tensorboardX...

Options:

- OpenCV 3.4.1

- TensorRT 7.0.0

- pycuda>=2019.1.1

- To improve inference speed and save GPU memory, install TensorRT by following the official documentation. The TensorRT version must match your Ubuntu, CUDA, and cuDNN versions. CUDA, cuDNN, and OpenCV must be installed in the system environment so that the TensorRT code can be compiled.
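Before running the TensorRT path, it can help to confirm that the optional Python packages are visible to your interpreter. The snippet below is a minimal sketch: the helper `find_missing` is hypothetical (not part of this repository), and the module names `tensorrt` and `pycuda` are assumed from the package names listed above. It only checks that the modules can be located, not that their versions match CUDA and cuDNN.

```python
import importlib.util

# Assumed import names for the optional packages listed above.
OPTIONAL_MODULES = ("tensorrt", "pycuda")


def find_missing(modules=OPTIONAL_MODULES):
    """Return the names of modules that cannot be located in this environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing()
    if missing:
        print("Missing optional packages:", ", ".join(missing))
    else:
        print("All optional packages can be imported.")
```

If a package is reported missing, `inference.py` with the .pth weights should still be usable, since only the TensorRT path needs these options.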

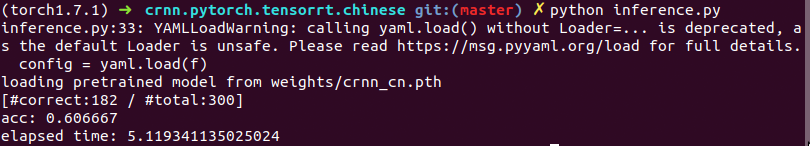

PyTorch .pth weights inference:

$ python inference.py

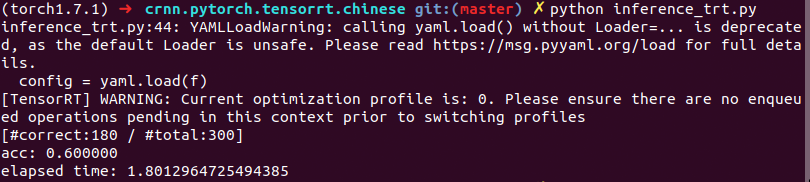

TensorRT .engine weights inference:

$ python inference_trt.py

$ python train.py --cfg lib/config/cn_config.yaml

$ cd output/W_PAD/crnn_cn/xxxx-xx-xx-xx-xx/

$ tensorboard --logdir log

- You need to rebuild crnn torch2trt if you change the input size or the number of classes. If not, you can start from step 4.

$ mkdir -p crnn_trt/build

$ cd crnn_trt/build

$ rm -rf *

- Change the parameters in crnn.cpp to match your model. You may need to change INPUT_H, INPUT_W, and NUM_CLASS.
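As a rough aid for choosing NUM_CLASS, the sketch below counts the classes for a given character set. It assumes the common CRNN convention of one class per distinct character plus one CTC blank; this is an assumption, so check it against your training configuration. The function `num_classes` and the example alphabet are hypothetical and only for illustration.

```python
def num_classes(alphabet: str) -> int:
    """One class per distinct character, plus one for the CTC blank (assumed convention)."""
    return len(set(alphabet)) + 1


# Hypothetical alphabet; use the character set your model was trained on.
example_alphabet = "0123456789abcdefghijklmnopqrstuvwxyz"
print(num_classes(example_alphabet))  # 37
```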

- Rebuild crnn torch2trt

$ cmake ..

$ make

- Go to the repository root and convert the PyTorch .pth weights file to .wts. This produces crnn.wts in crnn_trt/build.

$ cd ../../

$ python gen_wts.py
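For orientation, the sketch below writes weights in the plain-text .wts layout commonly used by TensorRTx: a first line with the number of tensors, then one line per tensor with its name, element count, and each value as a hex-encoded big-endian float32. It assumes gen_wts.py follows that convention. The helper `write_wts` and the tensor name are hypothetical, and plain Python lists stand in for the model's state dict so the example runs without PyTorch.

```python
import io
import struct


def write_wts(weights, fh):
    """Write a {name: list of floats} mapping to fh in the .wts text layout."""
    fh.write(f"{len(weights)}\n")  # first line: number of tensors
    for name, values in weights.items():
        fh.write(f"{name} {len(values)}")  # tensor name and element count
        for v in values:
            # each value as big-endian float32, hex-encoded
            fh.write(" " + struct.pack(">f", float(v)).hex())
        fh.write("\n")


# Hypothetical tensor name and values, for illustration only.
buf = io.StringIO()
write_wts({"cnn.conv0.weight": [1.0, -2.0]}, buf)
print(buf.getvalue())
```

Opening crnn.wts in a text editor and comparing it against this layout is a quick way to check that the conversion produced what the C++ loader expects.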

- Transform .wts to .engine

$ cd crnn_trt/build

$ sudo ./crnn -s

- Move crnn.engine from crnn_trt/build to weights/

- Run inference.py and inference_trt.py to compare inference time

$ cd ../../

$ python inference.py

$ python inference_trt.py
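If you want to measure the difference yourself rather than read it off the script output, a small timing helper along these lines may be useful. This is a sketch, not the method used by inference.py or inference_trt.py: `mean_runtime` and `percent_change` are hypothetical helpers, and the two lambdas are stand-in workloads that you would replace with your own calls to the PyTorch and TensorRT models.

```python
import time


def mean_runtime(fn, runs=20, warmup=3):
    """Average wall-clock seconds per call of fn, after a few warm-up calls."""
    for _ in range(warmup):
        fn()
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    return (time.perf_counter() - start) / runs


def percent_change(baseline, candidate):
    """Relative change of candidate versus baseline, in percent (negative means faster)."""
    return (candidate - baseline) * 100.0 / baseline


# Stand-in workloads; replace with calls into the .pth and .engine inference paths.
baseline_s = mean_runtime(lambda: sum(range(20000)))
candidate_s = mean_runtime(lambda: sum(range(5000)))
print(f"baseline {baseline_s:.6f}s, candidate {candidate_s:.6f}s")

print(percent_change(100.0, 35.0))  # -65.0
```

GPU work is often launched asynchronously, so the function you pass to `mean_runtime` should wait for the result (for example by copying the output back to the host) before returning. Otherwise the measured time may only cover the launch.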