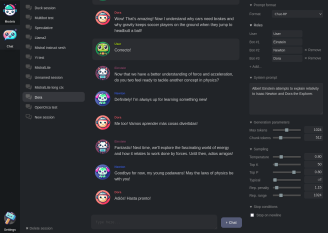

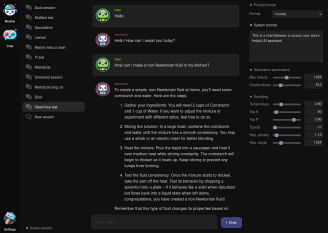

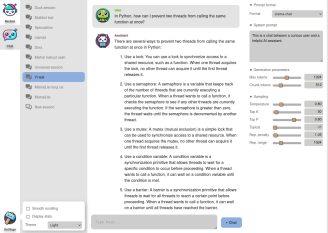

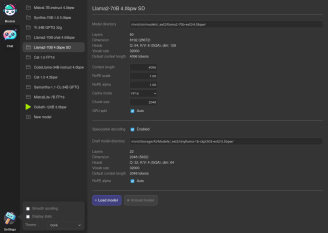

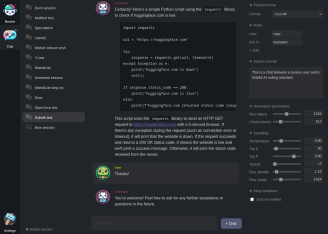

This is a simple, lightweight browser-based UI for running local inference using ExLlamaV2.

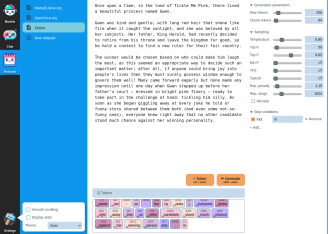

- Friendly, responsive and minimalistic UI

- Persistent sessions

- Multiple instruct formats

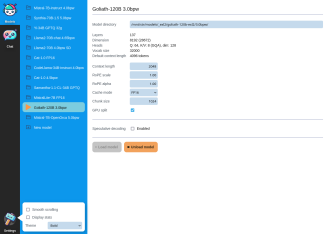

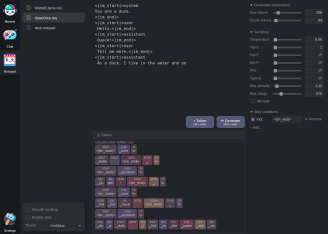

- Speculative decoding

- Supports EXL2, GPTQ and FP16 models

- Notepad mode

First, clone this repository and install requirements:

    git clone https://github.com/turboderp/exui
    cd exui
    pip install -r requirements.txt

Then run the web server with the included server.py:

    python server.py

Your browser should automatically open on the default IP/port. Config and sessions are stored in ~/exui by default.
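To see what the server has written so far, you can list the contents of the data directory. The sketch below only assumes the default location `~/exui` mentioned above. The helper name `list_data_files` is hypothetical and not part of this repository, and the script makes no assumptions about the file layout inside the directory.

```python
from pathlib import Path


def list_data_files(base: Path) -> list[str]:
    """Return the relative paths of all files under `base`, sorted.

    Returns an empty list if the directory does not exist yet,
    e.g. before the server has been started for the first time.
    """
    if not base.is_dir():
        return []
    return sorted(
        p.relative_to(base).as_posix()
        for p in base.rglob("*")
        if p.is_file()
    )


if __name__ == "__main__":
    # Default data directory per the note above; adjust if you changed it.
    data_dir = Path.home() / "exui"
    for name in list_data_files(data_dir):
        print(name)
```

An empty result just means nothing has been saved yet or the directory is elsewhere.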

Prebuilt wheels for ExLlamaV2 are available on the ExLlamaV2 project's GitHub releases page. Installing the latest version of Flash Attention is recommended.
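A quick way to check whether both packages are visible to the Python environment you plan to run the server from is sketched below. It assumes the importable module names are `exllamav2` and `flash_attn`. The helper `is_installed` is a hypothetical name introduced for illustration. It only locates the modules without importing them, so it does not verify that they work with your GPU setup.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module can be located without importing it."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # Module names are assumptions; Flash Attention is optional but recommended.
    for name in ("exllamav2", "flash_attn"):
        status = "found" if is_installed(name) else "missing"
        print(f"{name}: {status}")
```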

Stay tuned.