Memory leak issue

trvinh99 opened this issue · 8 comments

I have about 30 cameras, and each of them saves 5 frames per second into a sled database. Each frame is 25 KB.

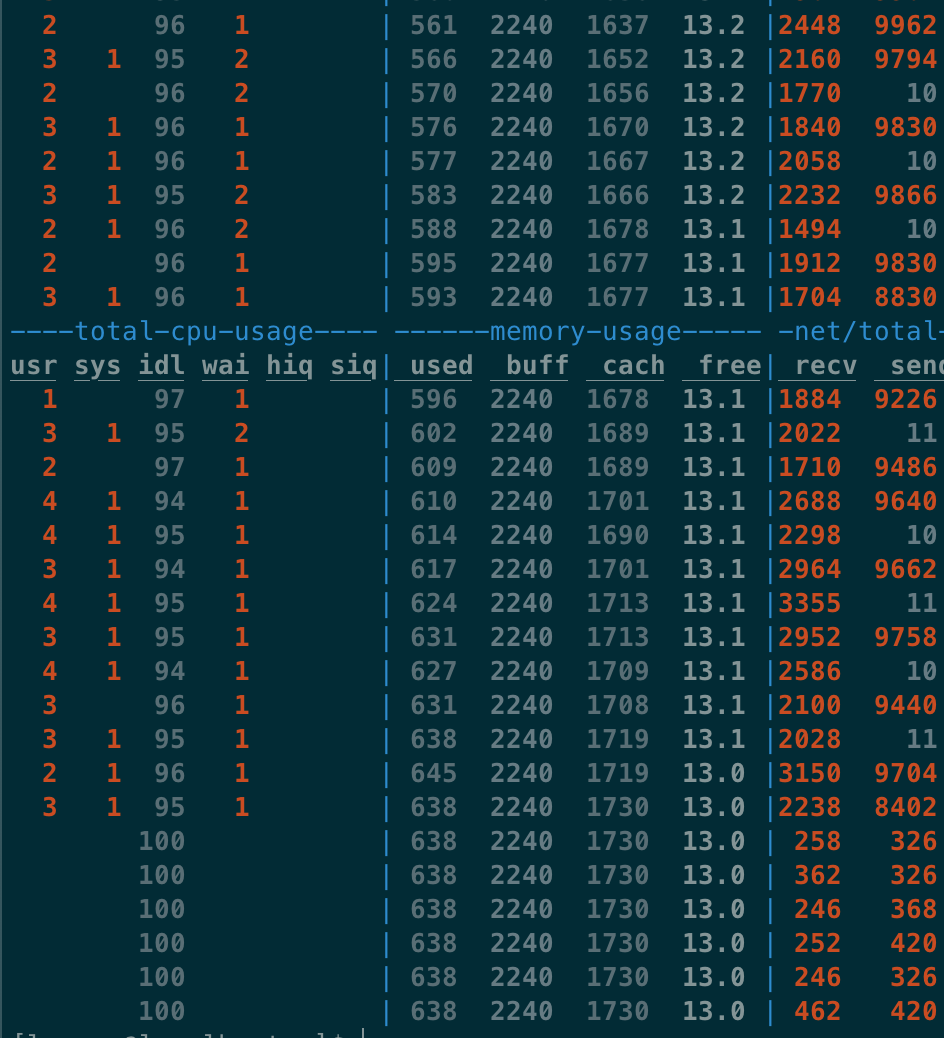

When I ran these cameras concurrently, saving frames for 40 seconds, I saw what looks like a memory leak. Memory usage keeps increasing, and it is not released after the loop ends.

Am I missing something in the config?
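For scale, here is a rough sketch of how much raw frame data this workload produces. It assumes "25kb" means 25 KiB (25,600 bytes) per frame; the function name `ingest_bytes_per_sec` is hypothetical and only serves the illustration.

```rust
/// Raw bytes written per second by `cameras` cameras,
/// each saving `fps` frames of `frame_bytes` bytes.
fn ingest_bytes_per_sec(cameras: u64, fps: u64, frame_bytes: u64) -> u64 {
    cameras * fps * frame_bytes
}

fn main() {
    // Assumption: one frame is 25 KiB.
    let frame_bytes = 25 * 1024;
    let per_sec = ingest_bytes_per_sec(30, 5, frame_bytes);
    let total = per_sec * 40; // the 40-second run described above

    // Prints: 3840000 bytes/s, 153600000 bytes over 40 s
    println!("{} bytes/s, {} bytes over 40 s", per_sec, total);
}
```

Under that assumption the run writes about 154 MB of frame data, which gives a baseline for judging how much of the observed memory growth is payload and how much is overhead.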

Context:

- Sled version: 0.34.7

- Rustc version: 1.53.0

- Operating system: CentOS

Maybe you have hit the same issue I did: #1380

Try to make sure that you have not inserted the exact same key-value pair into a tree more than 1024 times while also using a Subscriber on that tree.

Thanks for the suggestion, but I hit this issue immediately when running an example.

Here's my code sample: https://github.com/trvinh99/Sled-issue

I tested your sample code, and memory usage dropped to 78 MB after all the insertions were done.

It seems this is not a sled issue.

That's surprising! I have just tested this code again, but the memory was still not released.

Which OS did you test on?

@trvinh99 I tested on macOS 12.0.1.

Actually, I have already tested on macOS too: there the memory was released after the process closed, while on CentOS it was not.

But does the sled db not release memory while inserting? That’s a big problem I need to resolve.
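One way to check this from inside the process is to log its resident set size (RSS) around the workload. The sketch below assumes Linux, where `/proc/self/status` exposes a `VmRSS` line. The helper names are hypothetical, and the loop only stands in for the real inserts (it allocates plain buffers, not sled data), so it shows how to measure rather than what sled does.

```rust
use std::fs;

/// Parses the resident set size in kB from /proc/<pid>/status-style text.
fn vm_rss_kb(status: &str) -> Option<u64> {
    status.lines().find_map(|line| {
        line.strip_prefix("VmRSS:")?
            .split_whitespace()
            .next()?
            .parse::<u64>()
            .ok()
    })
}

/// Reads this process's current RSS; None where /proc is unavailable (e.g. macOS).
fn current_rss_kb() -> Option<u64> {
    vm_rss_kb(&fs::read_to_string("/proc/self/status").ok()?)
}

fn main() {
    println!("start:       {:?} kB", current_rss_kb());

    // Stand-in workload: 6000 frames of 25 KiB (30 cameras x 5 fps x 40 s).
    // Filled with 1s so the pages are actually touched.
    let frames: Vec<Vec<u8>> = (0..6000).map(|_| vec![1u8; 25 * 1024]).collect();
    println!("frames held: {:?} kB", current_rss_kb());

    drop(frames);
    println!("after drop:  {:?} kB", current_rss_kb());
}
```

The numbers depend on the allocator: freed memory may stay mapped in the process for reuse, so an RSS that does not fall right after a drop is not by itself proof of a leak. An RSS that keeps climbing across repeated runs of the same workload is a stronger signal.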

You can give jemalloc or valgrind a try :)

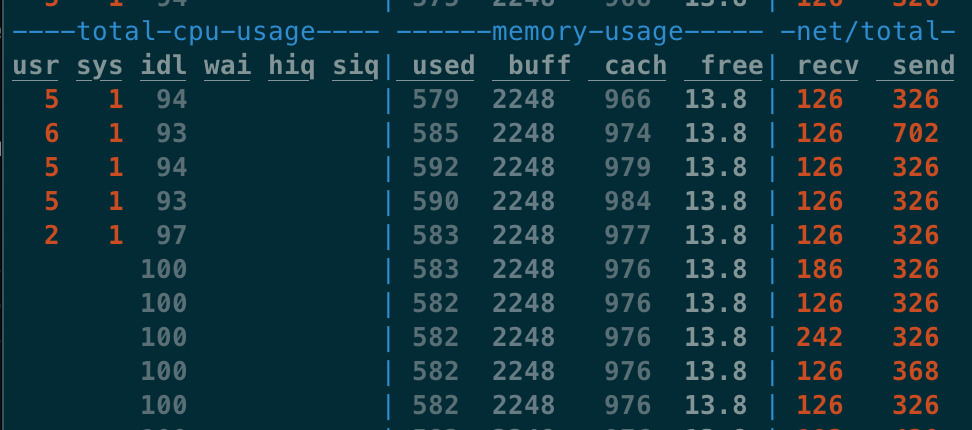

@trvinh99 How did you determine that CentOS didn’t free the memory? On Linux, otherwise unused RAM is used to cache I/O, so it won’t show as free, but it is still “free” to be used by applications if no other memory is available. So Linux always looks like it has less free memory than it really does.

https://serverfault.com/questions/85470/meaning-of-the-buffers-cache-line-in-the-output-of-free
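To see the distinction on the machine itself, a minimal sketch that reads `/proc/meminfo` (Linux only) and prints `MemFree` next to `MemAvailable` and `Cached`. The helper name `meminfo_kb` is hypothetical. `MemAvailable` is the kernel's estimate of memory that applications can still obtain, including reclaimable cache, so it is usually the more useful number.

```rust
use std::fs;

/// Extracts a field such as "MemAvailable" from /proc/meminfo-style text, in kB.
fn meminfo_kb(text: &str, field: &str) -> Option<u64> {
    text.lines().find_map(|line| {
        let mut parts = line.split_whitespace();
        // First token looks like "MemFree:"; compare without the colon.
        let name = parts.next()?.strip_suffix(':')?;
        if name == field {
            parts.next()?.parse::<u64>().ok()
        } else {
            None
        }
    })
}

fn main() {
    match fs::read_to_string("/proc/meminfo") {
        Ok(text) => {
            for field in ["MemTotal", "MemFree", "MemAvailable", "Cached"].iter() {
                println!("{:<13} {:?} kB", field, meminfo_kb(&text, field));
            }
        }
        // /proc/meminfo does not exist on macOS and other non-Linux systems.
        Err(err) => println!("could not read /proc/meminfo: {}", err),
    }
}
```

If `MemFree` is low while `MemAvailable` stays high, the "missing" memory is mostly page cache rather than memory held by the process.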