The project consists of three parts.

- Scrapers (Scrapes data from Yelp.com)

- NER model (Model training and inference to get menu items -

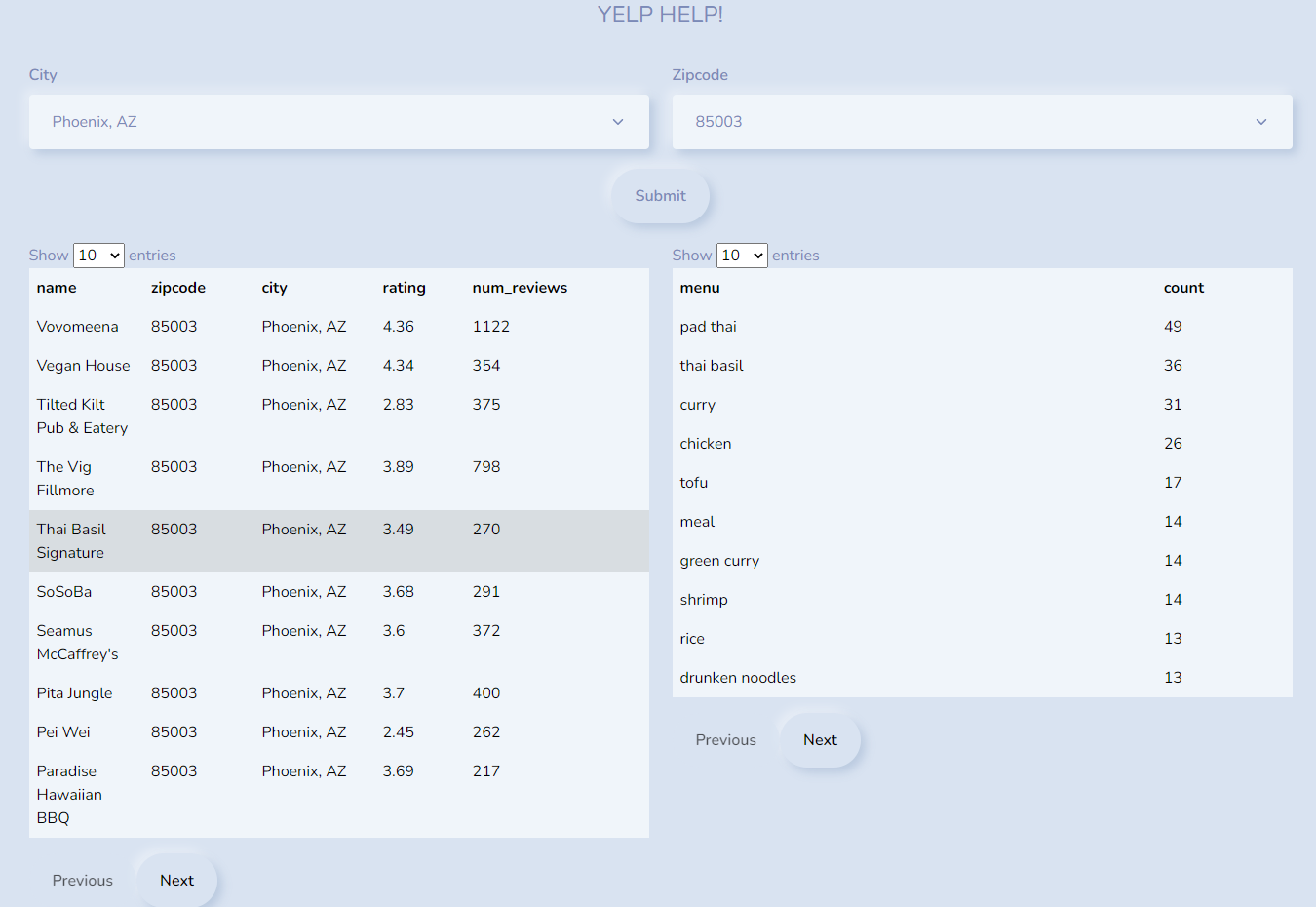

Pseudo-menus) - Web app (Can be used by consumers to look at the generated menus by entering city and zipcode)

Pseudo-menu: Unlike a typical restaurant menu, where a restaurant may give common food items its own obscure names, a pseudo-menu lists items by their generic names. For example, "Eggs can’t be cheese" is a burger, so our pseudo-menu simply has one item named "burger".
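To make the pseudo-menu idea concrete, here is a minimal sketch that assumes the NER model has already produced, for each review, a list of food-item strings. It normalizes case and whitespace and keeps items mentioned in enough reviews. The function `build_pseudo_menu` and its `min_mentions` threshold are hypothetical illustrations, not the project's code; recognizing the food items in review text is the NER model's job and is not shown here.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def build_pseudo_menu(
    extracted_items: Iterable[List[str]], min_mentions: int = 2
) -> List[Tuple[str, int]]:
    """Aggregate per-review food entities into a pseudo-menu.

    `extracted_items` is assumed (hypothetically) to hold one list of
    item strings per review, e.g. the entities returned by an NER model.
    """
    counts = Counter()
    for review_items in extracted_items:
        # Normalize case/whitespace and count each item at most once per review.
        normalized = {" ".join(item.lower().split()) for item in review_items}
        counts.update(normalized)
    # Keep items mentioned in at least `min_mentions` reviews, most frequent first.
    return [(item, n) for item, n in counts.most_common() if n >= min_mentions]


if __name__ == "__main__":
    reviews = [["Burger", "fries"], ["burger"], ["Fries", "milkshake"], ["BURGER"]]
    print(build_pseudo_menu(reviews))  # [('burger', 3), ('fries', 2)]
```

Counting an item once per review keeps a single enthusiastic review from dominating the menu; the threshold filters out one-off mentions.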

Scrape business information, reviews, menus (if available), and top food items for all restaurants in a particular city/location.

Currently, the code is tested on Python 3.6.5.

$ git clone https://github.com/Sapphirine/202112-51-YelpHelp.git

$ cd scrapers

Then, create a virtual environment:

$ conda create --name yelp_scrapers python=3.6.5

Activate the environment and install the required dependencies from requirements.txt:

$ conda activate yelp_scrapers

$ pip install -r requirements.txt

Then, set up the database: create a .env file based on the provided .env.example file, and run the following command:

$ alembic upgrade head

After installation, run the scrapers with the following command:

$ python run_scraper.py --locations-file-path "locations.csv"

For information about the available arguments, run:

$ python run_scraper.py --help

usage: run_scraper.py [-h] [--locations-file-path LOCATION_FILE_PATH]
                      [--scrape-reviews SCRAPE_REVIEWS]
                      [--business-pages BUSINESS_PAGES]
                      [--reviews-pages REVIEWS_PAGES]

optional arguments:
  -h, --help            show this help message and exit
  --locations-file-path File path containing locations
  --scrape-reviews      Scrape reviews? (yes/no)
  --business-pages      Number of business listing pages to scrape (> 0)
  --reviews-pages       number of pages of reviews to scrape for each business (> 0)

--locations-file-path: CSV file containing location names. The column name should be "location".

--scrape-reviews: specifies whether or not to scrape reviews along with menu URLs and top food items (used in yelp_reviews_spider.py).

--business-pages: number of business listing pages to scrape. For example, if there are 50 pages of business listings for Chicago, IL and this argument is set to 5, only the first 5 of those 50 pages will be scraped (used in yelp_businesses_spider.py).

--reviews-pages: similar to --business-pages, but for reviews (used in yelp_reviews_spider.py).
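The arguments above can be sketched with the standard `argparse` and `csv` modules: a parser that enforces the documented `yes/no` and `> 0` constraints, a reader for the `location` column, and the "first N of the available pages" rule behind `--business-pages`. This is a minimal sketch under assumptions, not the real `run_scraper.py`: the default values and the helper names (`positive_int`, `yes_no`, `read_locations`, `pages_to_scrape`) are hypothetical.

```python
import argparse
import csv
import io
from typing import List, TextIO


def positive_int(value: str) -> int:
    """argparse type: an integer that must be > 0."""
    number = int(value)
    if number <= 0:
        raise argparse.ArgumentTypeError("must be > 0, got {}".format(value))
    return number


def yes_no(value: str) -> bool:
    """argparse type: map 'yes'/'no' (case-insensitive) to a boolean."""
    lowered = value.strip().lower()
    if lowered not in ("yes", "no"):
        raise argparse.ArgumentTypeError("expected yes or no, got {!r}".format(value))
    return lowered == "yes"


def build_parser() -> argparse.ArgumentParser:
    # The defaults below are assumptions; the real script may use different ones.
    parser = argparse.ArgumentParser(prog="run_scraper.py")
    parser.add_argument("--locations-file-path", dest="location_file_path",
                        metavar="LOCATION_FILE_PATH", default="locations.csv",
                        help="File path containing locations")
    parser.add_argument("--scrape-reviews", type=yes_no, default=True,
                        help="Scrape reviews? (yes/no)")
    parser.add_argument("--business-pages", type=positive_int, default=1,
                        help="Number of business listing pages to scrape (> 0)")
    parser.add_argument("--reviews-pages", type=positive_int, default=1,
                        help="Number of pages of reviews to scrape for each business (> 0)")
    return parser


def read_locations(handle: TextIO) -> List[str]:
    """Return the non-empty values of the "location" column."""
    reader = csv.DictReader(handle)
    if reader.fieldnames is None or "location" not in reader.fieldnames:
        raise ValueError('locations file must have a "location" column')
    values = ((row["location"] or "").strip() for row in reader)
    return [value for value in values if value]


def pages_to_scrape(available_pages: int, page_limit: int) -> range:
    """1-based page numbers: the first `page_limit` of `available_pages` pages."""
    return range(1, min(available_pages, page_limit) + 1)


if __name__ == "__main__":
    args = build_parser().parse_args(["--scrape-reviews", "no", "--business-pages", "5"])
    locations = read_locations(io.StringIO('location\n"Chicago, IL"\n"New York, NY"\n'))
    print(args.scrape_reviews, locations)  # False ['Chicago, IL', 'New York, NY']
    print(list(pages_to_scrape(50, args.business_pages)))  # [1, 2, 3, 4, 5]
```

With a real file, open it with `open("locations.csv", newline="")` and pass the handle to `read_locations`. Location values such as `Chicago, IL` contain a comma, so they need to be quoted in the CSV.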

When the code is executed as described above, three crawlers scrape data sequentially. The order of the crawl matters, since data scraped by one crawler is used by the next:

yelp_businesses_spider → yelp_reviews_spider → yelp_menu_items_spider

Note: The reviews crawler and the menu items crawler cannot run simultaneously, because menu URLs are only available after the reviews crawler has run.
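The dependency between the crawlers can be pictured as a chain in which each stage reads what the previous one wrote. The sketch below is a plain-Python stand-in, not Scrapy: the stage functions are hypothetical and an in-memory dict replaces the restaurants_info table. It only illustrates why the menu items crawler must wait for the reviews crawler, which supplies the menu URLs.

```python
from typing import Callable, Dict, List

# business_id -> row; an in-memory stand-in for the restaurants_info table.
Store = Dict[str, dict]
Stage = Callable[[Store], None]


def businesses_stage(store: Store) -> None:
    # Hypothetical: the business crawler creates one row per restaurant.
    store["b1"] = {"business_name": "Example Diner", "menu_url": None}


def reviews_stage(store: Store) -> None:
    # Hypothetical: the reviews crawler fills in the menu URL of each known business.
    for business_id, row in store.items():
        row["menu_url"] = "https://example.com/menu/" + business_id


def menu_items_stage(store: Store) -> None:
    # The menu crawler can only process rows whose menu URL is already known.
    for business_id, row in store.items():
        if row.get("menu_url") is None:
            raise RuntimeError(
                "menu URL missing for {}; run the reviews crawler first".format(business_id))
        row["menu_items_scraped_flag"] = True


CRAWL_ORDER: List[Stage] = [businesses_stage, reviews_stage, menu_items_stage]


def run_sequentially(stages: List[Stage]) -> Store:
    store: Store = {}
    for stage in stages:
        stage(store)  # each stage completes before the next one starts
    return store


if __name__ == "__main__":
    print(run_sequentially(CRAWL_ORDER))
```

Skipping `reviews_stage` makes `menu_items_stage` fail, which mirrors the note above.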

The business crawler scrapes the following information for each restaurant:

- business_id
- business_name
- business_url
- overall_rating
- num_reviews
- location
- categories
- phone_number

All scraped data is passed to the pipelines, where it is persisted in the restaurants_info table in PostgreSQL.

Note: The restaurants_info table also has columns for the reviews crawler state, the menu URL, the menu-scraped flag, and top food items; these are populated by the subsequent crawlers.
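For orientation, here is a sketch of the restaurants_info table using the column names mentioned in this section. It uses the standard-library `sqlite3` module as a stand-in for PostgreSQL; the column types, the primary key, and storing `categories` and `top_food_items` as JSON text are assumptions, since the real schema is defined by the project's Alembic migrations. The helper `insert_business` is hypothetical.

```python
import json
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS restaurants_info (
    business_id             TEXT PRIMARY KEY,
    business_name           TEXT NOT NULL,
    business_url            TEXT,
    overall_rating          REAL,
    num_reviews             INTEGER,
    location                TEXT,
    categories              TEXT,     -- JSON-encoded list (assumption)
    phone_number            TEXT,
    -- columns populated later by the reviews and menu items crawlers
    last_reviews_count      INTEGER,
    errors_at               TEXT,
    menu_url                TEXT,
    top_food_items          TEXT,     -- JSON-encoded list (assumption)
    menu_items_scraped_flag INTEGER DEFAULT 0
)
"""


def insert_business(conn: sqlite3.Connection, item: dict) -> None:
    """Insert one business-crawler item; the later-crawler columns keep their defaults."""
    with conn:  # commit on success, roll back on error
        conn.execute(
            "INSERT OR REPLACE INTO restaurants_info "
            "(business_id, business_name, business_url, overall_rating, num_reviews, "
            "location, categories, phone_number) VALUES (?, ?, ?, ?, ?, ?, ?, ?)",
            (item["business_id"], item["business_name"], item["business_url"],
             item["overall_rating"], item["num_reviews"], item["location"],
             json.dumps(item["categories"]), item["phone_number"]),
        )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    insert_business(conn, {
        "business_id": "b1", "business_name": "Example Diner",
        "business_url": "https://example.com/biz/b1", "overall_rating": 4.5,
        "num_reviews": 120, "location": "Chicago, IL",
        "categories": ["Burgers", "Diners"], "phone_number": "(555) 555-0100",
    })
    print(conn.execute("SELECT business_name, menu_url FROM restaurants_info").fetchone())
```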

The reviews crawler scrapes the following information for each review:

- review_id
- review
- date
- rating
- business_name
- business_id
- business_alias
- business_location: same as the location provided as CLI input
- sentiment: 1 if rating >= 4, else 0
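A minimal sketch of a review record with the documented sentiment rule (1 if `rating >= 4`, else 0). `ReviewItem`, `sentiment_from_rating`, and `make_review` are hypothetical names; the project's actual item class may differ. `typing.NamedTuple` is used instead of a dataclass because dataclasses require Python 3.7, while the project targets 3.6.5.

```python
from typing import NamedTuple


class ReviewItem(NamedTuple):
    review_id: str
    review: str
    date: str
    rating: int
    business_name: str
    business_id: str
    business_alias: str
    business_location: str  # the location passed on the command line
    sentiment: int          # 1 if rating >= 4 else 0


def sentiment_from_rating(rating: float) -> int:
    """Binary sentiment label derived from the star rating."""
    return 1 if rating >= 4 else 0


def make_review(location: str, **fields) -> ReviewItem:
    """Build a review record, deriving sentiment from the scraped rating."""
    return ReviewItem(business_location=location,
                      sentiment=sentiment_from_rating(fields["rating"]),
                      **fields)


if __name__ == "__main__":
    item = make_review("Chicago, IL", review_id="r1", review="Great burger",
                       date="2021-12-01", rating=5, business_name="Example Diner",
                       business_id="b1", business_alias="example-diner")
    print(item.sentiment)  # 1
```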

All scraped reviews are passed to the pipelines, where they are persisted in a GCP bucket, partitioned by location and chunked by time.

As mentioned above, the crawler also persists the state of the reviews scraper in the last_reviews_count and errors_at columns of the restaurants_info table.

In addition, the crawler scrapes the menu URL and top food items and persists them in the menu_url and top_food_items columns, respectively, of the restaurants_info table.

Note: All scraped reviews are stored as gzipped JSON Lines in chunks of 1000, which is handled by the pipelines, while all other data is written to the database directly in the crawler code.
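The storage format described above can be sketched as follows: reviews are buffered and written as gzip-compressed JSON Lines files of at most 1000 records, under a per-location directory, with a timestamp in the file name. This sketch writes to a local directory instead of a GCP bucket, and the function name `write_review_chunks` and the path layout (`<root>/<location>/reviews_<timestamp>_<n>.jsonl.gz`) are assumptions, not the project's actual naming scheme.

```python
import gzip
import json
import os
import tempfile
import time
from typing import Iterable, List

CHUNK_SIZE = 1000


def write_review_chunks(reviews: Iterable[dict], root: str, location: str,
                        chunk_size: int = CHUNK_SIZE) -> List[str]:
    """Write reviews as gzipped JSON Lines chunks and return the paths written."""
    # Partition by location ("Chicago, IL" -> "Chicago_IL").
    safe_location = location.replace(",", "").replace(" ", "_")
    directory = os.path.join(root, safe_location)
    os.makedirs(directory, exist_ok=True)
    # Chunk by time: one timestamp per call, plus a chunk index for uniqueness.
    stamp = time.strftime("%Y%m%dT%H%M%S")
    paths: List[str] = []
    buffer: List[dict] = []

    def flush() -> None:
        path = os.path.join(directory, "reviews_{}_{}.jsonl.gz".format(stamp, len(paths)))
        with gzip.open(path, "wt", encoding="utf-8") as handle:
            for record in buffer:
                handle.write(json.dumps(record) + "\n")  # one JSON object per line
        paths.append(path)
        buffer.clear()

    for review in reviews:
        buffer.append(review)
        if len(buffer) >= chunk_size:
            flush()
    if buffer:  # write the final, partially filled chunk
        flush()
    return paths


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        written = write_review_chunks(({"review_id": str(i)} for i in range(2500)),
                                      tmp, "Chicago, IL")
        print(len(written))  # 3
```

Buffering keeps memory bounded to one chunk, and small compressed files are convenient to upload to object storage.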

The menu items crawler scrapes the following information for each restaurant (if available):

- url
- menu (stored in Postgres JSON format)

All scraped menu data is passed to the pipelines, where it is persisted in the restaurants_menus table in PostgreSQL.

The crawler also sets menu_items_scraped_flag in the restaurants_info table; this is done directly in the crawler script rather than in a pipeline.
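The two writes described above can be sketched separately, matching the split between pipeline and crawler: `persist_menu` (the pipeline's role) stores the menu as JSON in restaurants_menus, and `mark_menu_scraped` (the crawler's role) sets menu_items_scraped_flag for the restaurant with that menu_url. `sqlite3` stands in for PostgreSQL (where the menu column would use a JSON type); the function names, the minimal table layouts, and the example menu structure are hypothetical.

```python
import json
import sqlite3


def create_tables(conn: sqlite3.Connection) -> None:
    # Minimal stand-ins for the two tables; only the columns used here are included.
    conn.execute("CREATE TABLE IF NOT EXISTS restaurants_info ("
                 "business_id TEXT PRIMARY KEY, menu_url TEXT, "
                 "menu_items_scraped_flag INTEGER DEFAULT 0)")
    conn.execute("CREATE TABLE IF NOT EXISTS restaurants_menus (url TEXT, menu TEXT)")


def persist_menu(conn: sqlite3.Connection, url: str, menu: dict) -> None:
    """Pipeline role: store the scraped menu as JSON text."""
    with conn:  # commit on success, roll back on error
        conn.execute("INSERT INTO restaurants_menus (url, menu) VALUES (?, ?)",
                     (url, json.dumps(menu)))


def mark_menu_scraped(conn: sqlite3.Connection, url: str) -> int:
    """Crawler role: flag the restaurant whose menu_url was scraped; return rows updated."""
    with conn:
        cursor = conn.execute("UPDATE restaurants_info SET menu_items_scraped_flag = 1 "
                              "WHERE menu_url = ?", (url,))
    return cursor.rowcount


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_tables(conn)
    conn.execute("INSERT INTO restaurants_info (business_id, menu_url) VALUES (?, ?)",
                 ("b1", "https://example.com/menu/b1"))
    persist_menu(conn, "https://example.com/menu/b1", {"Burgers": ["burger"]})
    print(mark_menu_scraped(conn, "https://example.com/menu/b1"))  # 1
```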

To run the web server, open a terminal and change to the webapp/backend directory.

Create a virtual environment:

$ conda create --name yelp_api python=3.6.5

Activate the environment and install the required dependencies from requirements.txt:

$ conda activate yelp_api

$ pip install -r requirements.txt

After installation, start the server, which serves results on localhost at port 7777:

$ python yelp_help_api.py

Then, open the index.html file in the frontend folder.