The goal of this project is to train a neural network that encodes a speaker's identity into an embedding vector, which can then be used for speaker verification. Several loss functions are suitable for training such a network, e.g. triplet loss (`torch.nn.TripletMarginLoss`) or Angular Softmax loss.
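To make the triplet loss idea concrete, here is a minimal plain-Python sketch of the quantity computed for a single (anchor, positive, negative) triplet of embeddings: `max(d(a, p) - d(a, n) + margin, 0)` with Euclidean distance. The function names are hypothetical and are not taken from this repository. The small `eps` term that `torch.nn.TripletMarginLoss` adds for numerical stability is omitted, so values may differ slightly from PyTorch.

```python
import math


def euclidean(u, v):
    """Euclidean (L2) distance between two equally long vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_margin_loss(anchor, positive, negative, margin=1.0):
    """Triplet loss for one triplet of embeddings (illustrative sketch).

    The loss is zero once the negative is at least `margin` farther
    from the anchor than the positive is.
    """
    d_pos = euclidean(anchor, positive)
    d_neg = euclidean(anchor, negative)
    return max(d_pos - d_neg + margin, 0.0)


# Same-speaker embedding is close, different-speaker embedding is far: no loss.
well_separated = triplet_margin_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0])
# Roles swapped: the "positive" is farther than the "negative", so the loss is large.
badly_separated = triplet_margin_loss([0.0, 0.0], [3.0, 4.0], [0.0, 1.0])
```

Minimizing this value pulls embeddings of the same speaker together and pushes embeddings of different speakers apart, which is the property a verification system relies on.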

To create a virtual environment with all the necessary dependencies, run `make venv`. To use it, run `source venv/bin/activate`.

For other helpful targets (such as formatting or launching scripts), see the `Makefile`.

For training and evaluation, we use the VoxCeleb dataset. The dataset takes around 30 GB and can be downloaded using the `make download-voxceleb` target. This saves the dataset into the `voxceleb1` directory relative to the project root (the path can be changed by setting the `KNN_DATASET_DIR` environment variable).

On Metacentrum, I have pre-downloaded this dataset into my home directory at `/storage/brno12-cerit/home/tichavskym/voxceleb1`.

To train the custom version of ECAPA-TDNN, execute `make train`. You can use environment variables to parametrize the job run, namely:

- `KNN_DATASET_DIR`: path pointing to the VoxCeleb1 dataset,
- `KNN_MODEL`: which model to train (either `"ECAPA"`, `"WAVLM_ECAPA"`, `"WAVLM_ECAPA_WEIGHTED"`, or `"WAVLM_ECAPA_WEIGHTED_UNFIXED"`),
- `MODEL_IN_DIR`: set only if you want to start training from a saved checkpoint; the `ecapa_tdnn.state_dict`, `classifier.state_dict` and `optimizer.state_dict` files are expected to be present in the given directory,
- `KNN_MODEL_OUT_DIR`: where the model, classifier and optimizer checkpoints are stored after each iteration,
- `KNN_DEBUG`: marks that you are executing in debug mode,
- `NOF_EPOCHS`: number of epochs to train the model for,
- `KNN_BATCH_SIZE`: mini-batch size,
- `KNN_VIEW_STEP`: number of iterations after which the stats are printed.
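As a rough illustration of how the variables above could be collected in one place, here is a minimal sketch using only the Python standard library. It is not the repository's actual code: the function name `load_train_config`, the numeric defaults, the default model, and the way `KNN_DEBUG` is parsed are all assumptions made for the example.

```python
import os

# Model names accepted by KNN_MODEL, as listed above.
ALLOWED_MODELS = {
    "ECAPA",
    "WAVLM_ECAPA",
    "WAVLM_ECAPA_WEIGHTED",
    "WAVLM_ECAPA_WEIGHTED_UNFIXED",
}


def load_train_config(env=os.environ):
    """Read the training options from a mapping of environment variables.

    Hypothetical helper: every default below is an assumption,
    not a value confirmed by the project.
    """
    model = env.get("KNN_MODEL", "ECAPA")  # assumed default
    if model not in ALLOWED_MODELS:
        raise ValueError(f"unsupported KNN_MODEL: {model!r}")
    return {
        "dataset_dir": env.get("KNN_DATASET_DIR", "voxceleb1"),
        "model": model,
        # None means: do not resume from a checkpoint.
        "model_in_dir": env.get("MODEL_IN_DIR"),
        "model_out_dir": env.get("KNN_MODEL_OUT_DIR", "experiments"),
        # Assumed parsing: "1", "true" or "yes" enable debug mode.
        "debug": env.get("KNN_DEBUG", "").lower() in ("1", "true", "yes"),
        "nof_epochs": int(env.get("NOF_EPOCHS", "10")),        # assumed default
        "batch_size": int(env.get("KNN_BATCH_SIZE", "32")),    # assumed default
        "view_step": int(env.get("KNN_VIEW_STEP", "50")),      # assumed default
    }


# Passing a plain dict instead of os.environ keeps the example reproducible.
config = load_train_config(
    {"KNN_MODEL": "WAVLM_ECAPA", "NOF_EPOCHS": "3", "KNN_DEBUG": "1"}
)
```

Validating `KNN_MODEL` up front means a misspelled model name fails immediately instead of after the dataset has been loaded.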

The training output is stored in the `experiments/` directory by default.

Model evaluation can be executed using the `make evaluate` target. This launches the `evaluate.py` script, which evaluates the model on the VoxCeleb test set. The script can be parametrized using environment variables, namely:

- `KNN_MODEL`: which model to evaluate, either `microsoft/wavlm-base-sv`, `speechbrain/spkrec-ecapa-voxceleb`, or `ecapa-tdnn`,
- `KNN_MODEL_FILENAME`: if `KNN_MODEL` is set to `ecapa-tdnn`, the path to the model file with weights and biases; this file should be in `state_dict` format,
- `KNN_DATASET_DIR`: path pointing to the VoxCeleb1 dataset,
- `EVAL_FIRST`: when debugging, the number of samples to evaluate (when unset, the whole verification set is evaluated).

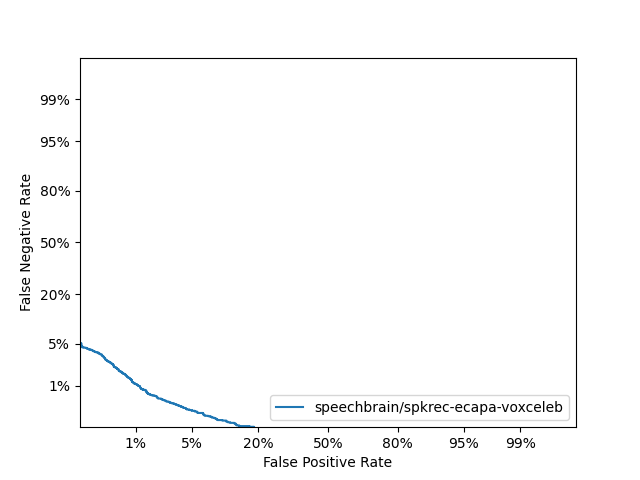

The script creates two files in `experiments/{scores, det}`, containing the scores for each pair of recordings and the DET curve for the model. It also prints the EER (Equal Error Rate) value to stdout.
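For readers unfamiliar with the metric, the EER is the operating point at which the false acceptance rate (different speakers accepted as the same) equals the false rejection rate (same speaker rejected). The following is a minimal sketch of one way to estimate it from per-pair scores. It is not the code used by `evaluate.py`: the function name `compute_eer`, the label convention (1 for same speaker, 0 for different) and the simple threshold sweep are assumptions chosen for readability rather than speed.

```python
def compute_eer(scores, labels):
    """Estimate the Equal Error Rate from similarity scores.

    scores: higher means "more likely the same speaker".
    labels: 1 for same-speaker pairs, 0 for different-speaker pairs.
    Returns (eer, threshold) at the threshold where the false acceptance
    and false rejection rates are closest to each other.
    """
    n_target = sum(1 for label in labels if label == 1)
    n_nontarget = len(labels) - n_target
    if n_target == 0 or n_nontarget == 0:
        raise ValueError("need at least one pair of each kind")

    best_gap, eer, best_threshold = None, None, None
    for threshold in sorted(set(scores)):
        # A pair is accepted when its score reaches the threshold.
        false_accepts = sum(
            1 for s, label in zip(scores, labels) if label == 0 and s >= threshold
        )
        false_rejects = sum(
            1 for s, label in zip(scores, labels) if label == 1 and s < threshold
        )
        far = false_accepts / n_nontarget
        frr = false_rejects / n_target
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            best_gap, eer, best_threshold = gap, (far + frr) / 2, threshold
    return eer, best_threshold


# Toy example: one different-speaker pair (0.6) outscores one same-speaker pair (0.4).
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
eer, threshold = compute_eer(scores, labels)  # eer is 0.25 at threshold 0.6
```

The sweep is quadratic in the number of pairs, which is fine for illustration. For a full verification list, a sorted single-pass implementation or a library ROC routine would be the more practical choice.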

If you want to evaluate (or perform any other computation) on the Metacentrum infrastructure, check out the scripts in the `metacentrum/` directory.

| Model | EER | minDCF |
|---|---|---|
| speechbrain/spkrec-ecapa-voxceleb | 1.04 % | 0.0036 * |

*: this might be wrong, as I'd expect numbers around 0.06

Computational resources were provided by the e-INFRA CZ project (ID:90254), supported by the Ministry of Education, Youth and Sports of the Czech Republic.