A lightweight, modular, and terminal-first Python web scraper for extracting links, phone numbers, emails (with optional MX verification), and addresses from web pages. It features a clean command-line interface, a startup ASCII banner, and quiet, graceful exits on Ctrl+C.

- Modular Design: Extractors for links, phone numbers, emails, and addresses are organized in separate modules under `extractors/`.

- CLI-Friendly: Supports both interactive and non-interactive modes for flexible usage.

- Graceful Exit: Exits cleanly with code 0 on Ctrl+C.

- Optional MX Verification: Email extraction includes optional domain MX record checks (requires `dnspython`).

- Responsive: Uses `requests` with timeouts to handle network issues gracefully.

- Customizable: Easily extendable with new extractors and configurable settings.

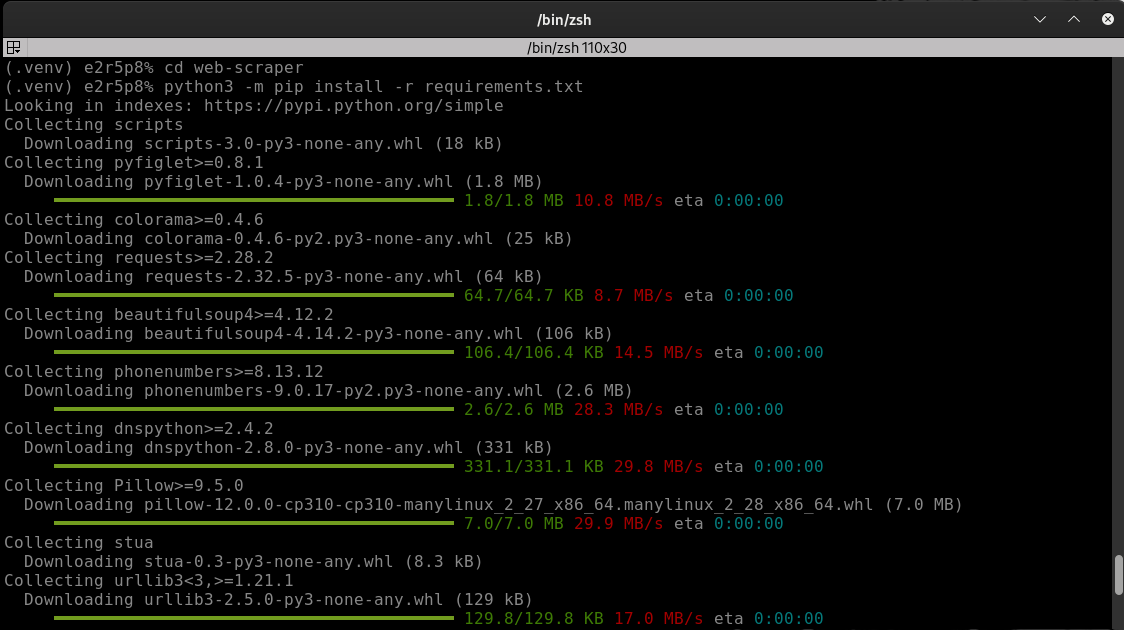

The project includes a requirements.txt file with all necessary dependencies:

- `pyfiglet>=0.8.1`: For ASCII banner generation.

- `colorama>=0.4.6`: For colored terminal output.

- `requests>=2.28.2`: For HTTP requests.

- `beautifulsoup4>=4.12.2`: For HTML parsing.

- `phonenumbers>=8.13.12`: For phone number extraction and validation.

- `dnspython>=2.4.2` (optional): For MX record verification during email extraction.

- `Pillow>=9.5.0` (optional): For image processing features (if implemented).

Notes:

- `dnspython` is only required for email MX verification.

- `Pillow` is only needed for image-related features (not used in core scraping).
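Because `dnspython` is optional, email extraction has to cope with the package being absent. A minimal sketch of that pattern follows. The names `extract_emails`, `has_mx`, and `HAVE_DNS` are hypothetical and not taken from the project, and the regular expression is a deliberately simple approximation rather than a complete address matcher.

```python
import re

# dnspython is optional: fall back cleanly when it is not installed.
try:
    import dns.exception
    import dns.resolver
    HAVE_DNS = True
except ImportError:
    HAVE_DNS = False

# Simplified pattern (assumption); it will miss some valid addresses.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def extract_emails(text):
    """Return the unique, lowercased email addresses in text, sorted."""
    return sorted({match.lower() for match in EMAIL_RE.findall(text)})


def has_mx(domain, timeout=5.0):
    """Return True/False for MX presence, or None if dnspython is missing."""
    if not HAVE_DNS:
        return None  # verification unavailable
    try:
        answers = dns.resolver.resolve(domain, "MX", lifetime=timeout)
        return len(answers) > 0
    except dns.exception.DNSException:
        # Covers NXDOMAIN, empty answers, timeouts, and similar failures.
        return False
```

Returning `None` rather than `False` when the package is missing lets the caller distinguish "no MX record" from "could not check".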

Ensure Python 3 and pip are installed (administrator privileges may be required):

sudo apt update

sudo apt install -y python3 python3-pip

For other operating systems (e.g., macOS, Windows, or other Linux distributions), install the equivalent Python 3 and pip packages using your package manager or the official Python website.

To avoid conflicts with system Python packages, use a virtual environment:

python3 -m venv .venv

source .venv/bin/activate # On Windows: .venv\Scripts\activate

python3 -m pip install -r requirements.txt

From the project root, install the required packages:

python3 -m pip install -r requirements.txt

To update or regenerate requirements.txt after adding new dependencies:

- Install new packages in your virtual environment and run `python3 -m pip freeze > requirements.txt`. This pins exact versions for reproducibility.

- Alternatively, use `pipreqs` to generate a requirements file based on imports: run `python3 -m pip install pipreqs`, then `pipreqs --force .`.

Run the scraper in either interactive or non-interactive mode.

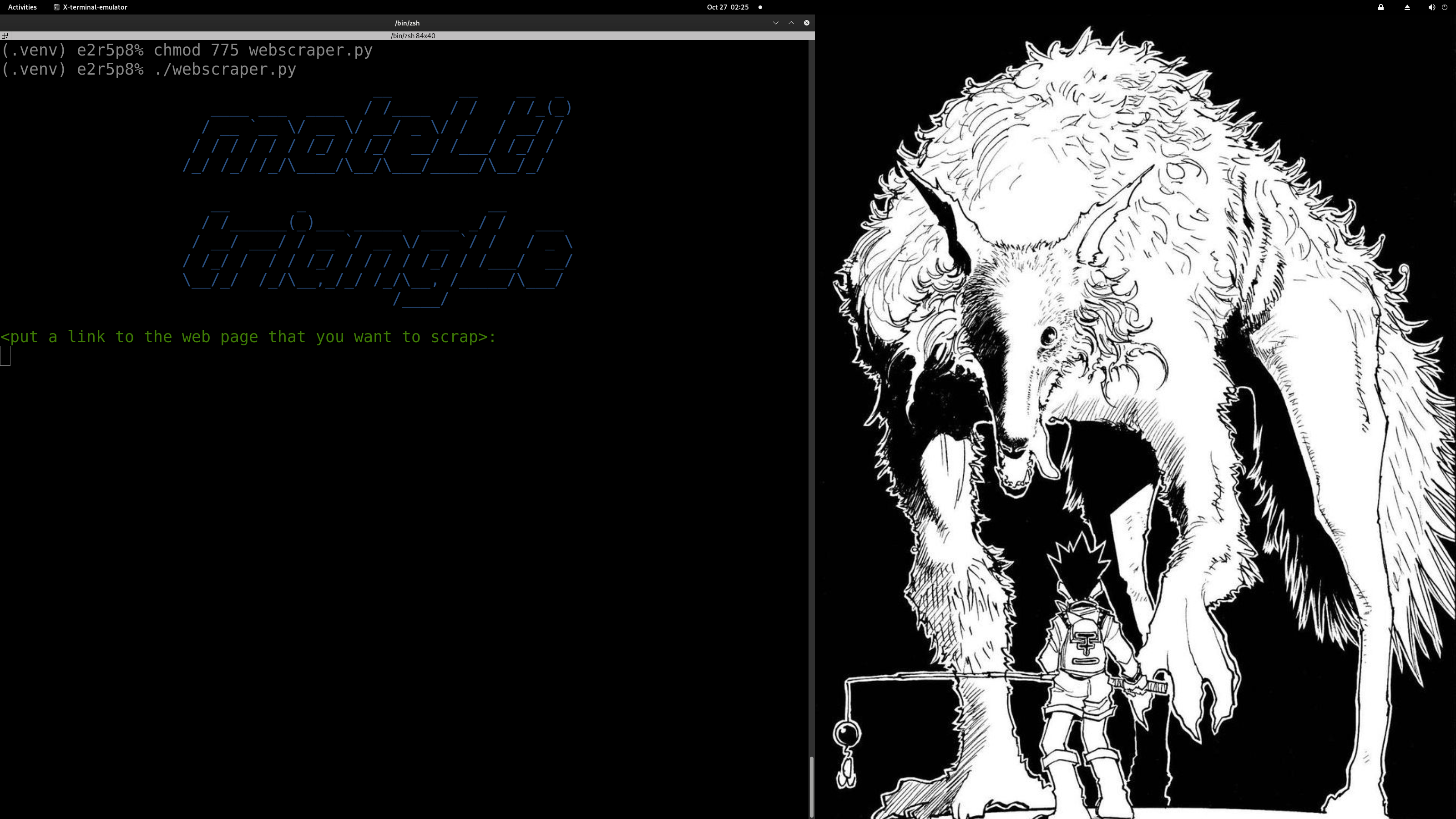

Launch the script and follow the prompts to enter a URL and select an extraction option:

python3 webscraper.py

Provide the URL via stdin and specify an extraction option (1–4):

echo "https://example.com" | python3 webscraper.py --choice 1

Extraction Options:

1. Links: Extracts all hyperlinks (optionally checks reachability).

2. Phone Numbers: Extracts and validates phone numbers using `phonenumbers`.

3. Emails: Extracts email addresses with optional MX record verification (if `dnspython` is installed).

4. Locations: Extracts addresses using the OpenStreetMap Nominatim API.
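One way the non-interactive mode could be wired up is with `argparse` for `--choice` and a single line read from stdin for the URL. This is a sketch under assumptions: only the `--choice` flag and the option list come from this README. The function names and the `OPTIONS` table are hypothetical, and the numbers for phone numbers and locations are inferred from the list order.

```python
import argparse
import sys

# Option numbers follow the list order above (2 and 4 are inferred).
OPTIONS = {1: "links", 2: "phone numbers", 3: "emails", 4: "locations"}


def parse_args(argv=None):
    """Parse the command line; --choice is optional so prompts can still run."""
    parser = argparse.ArgumentParser(description="Web scraper (sketch)")
    parser.add_argument("--choice", type=int, choices=sorted(OPTIONS),
                        help="extraction option, 1-4")
    return parser.parse_args(argv)


def read_url(lines=None):
    """Return the first non-empty line (from stdin by default), or None."""
    lines = sys.stdin if lines is None else lines
    for line in lines:
        line = line.strip()
        if line:
            return line
    return None


def main(argv=None, lines=None):
    """Decide between non-interactive and interactive mode."""
    args = parse_args(argv)
    if args.choice is None:
        return "interactive mode: prompt for URL and option"
    url = read_url(lines)
    if url is None:
        return "no URL received on stdin"
    return f"extract {OPTIONS[args.choice]} from {url}"
```

Passing `argv` and `lines` explicitly keeps the functions easy to exercise without touching the real command line or stdin.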

Press Ctrl+C at any time to exit the program cleanly (exit code 0).
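A common way to get this behaviour is to catch `KeyboardInterrupt` at the top level, since an unhandled Ctrl+C otherwise ends with a traceback and a non-zero exit status. The sketch below assumes a hypothetical `run()` function standing in for the program's real work. It is not the project's actual entry point.

```python
def run():
    """Placeholder for the scraper's main loop (hypothetical)."""
    input("Enter URL: ")


def main(work=run):
    """Run the program and treat Ctrl+C as a normal, quiet exit."""
    try:
        work()
    except KeyboardInterrupt:
        print()  # move past the ^C echoed by the terminal
        return 0
    return 0


# A script entry point would then end with:
#     import sys
#     sys.exit(main())
```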

- Extract links non-interactively: `echo "https://example.com" | python3 webscraper.py --choice 1`

- Extract emails with MX verification: `echo "https://example.com" | python3 webscraper.py --choice 3`

- Run interactively and follow prompts: `python3 webscraper.py`

- Missing Dependencies: If you encounter an `ImportError`, ensure all packages in `requirements.txt` are installed using the same Python interpreter that runs the script.

- Network Issues: The scraper uses timeouts in `requests` to prevent hanging. Network errors are logged but won't crash the program.

- MX Verification: If `dnspython` is not installed, email extraction will skip MX checks and note that verification is unavailable.

- Disable ASCII Banner: To remove the startup banner, comment out the `pyfiglet` import and call in `webscraper.py` or edit `triangle.py`.

- Logs: Check terminal output for detailed error messages or debugging information.
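The timeout-and-log behaviour described under Network Issues can be illustrated as follows. To keep the sketch runnable without extra packages, it uses `urllib.request` from the standard library rather than `requests`. With `requests`, the corresponding call would be `requests.get(url, timeout=...)`, catching `requests.RequestException` instead. The function name `fetch_html` and the 10-second default are assumptions.

```python
import logging
import urllib.request

log = logging.getLogger("scraper")


def fetch_html(url, timeout=10.0):
    """Return the page body as text, or None if the request fails."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            charset = response.headers.get_content_charset() or "utf-8"
            return response.read().decode(charset, errors="replace")
    except (OSError, ValueError) as exc:
        # OSError covers URLError, refused connections, and timeouts;
        # ValueError covers malformed URLs. Log and carry on.
        log.warning("Could not fetch %s: %s", url, exc)
        return None
```

Returning `None` on failure lets the caller skip the page and continue instead of crashing.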

Contributions are welcome! To contribute:

- Add New Extractors: Place new extractor modules under `extractors/` and update `requirements.txt` with any new dependencies.

- Update Dependencies: Use pinned versions (`pip freeze > requirements.txt`) for reproducible CI builds or `pipreqs` for minimal requirements.

- Submit Pull Requests: Fork the repository, create a feature branch, and submit a pull request with clear descriptions of your changes.

- Report Issues: Use the issue tracker to report bugs or suggest improvements.
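As a starting point for a new extractor, the sketch below shows what a small module under `extractors/` might look like. The file name `extractors/images.py`, the `extract(html, base_url)` signature, and the choice of image URLs as the target are all hypothetical, and the project's real extractor interface may differ. It uses the standard library's `html.parser` so it runs on its own, whereas the project lists `beautifulsoup4` for HTML parsing.

```python
# extractors/images.py (hypothetical module name)
from html.parser import HTMLParser
from urllib.parse import urljoin


class _ImageCollector(HTMLParser):
    """Collect the src attribute of every <img> tag."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def extract(html, base_url=""):
    """Return absolute image URLs in document order, without duplicates."""
    collector = _ImageCollector()
    collector.feed(html)
    seen = []
    for src in collector.sources:
        absolute = urljoin(base_url, src)
        if absolute not in seen:
            seen.append(absolute)
    return seen
```

Keeping each extractor to a single pure function that takes HTML text and returns a list makes it straightforward to test without network access.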

Happy scraping with Triangle Web Scraper!