This is the official repository for the paper "Ambigram Generation by a Diffusion Model". The paper was accepted at ICDAR 2023.

- paper link: arXiv

【 UPDATE 】

2023/07/20: ambifusion2 has been released. This method can generate ambigrams for any image pair by specifying a pair of prompts.

tl;dr

- We propose an ambigram generation method by a diffusion model.

- You can generate various ambigram images by specifying a pair of letter classes.

- We propose ambigramability, an objective measure of how easy it is to generate ambigrams for each letter pair.

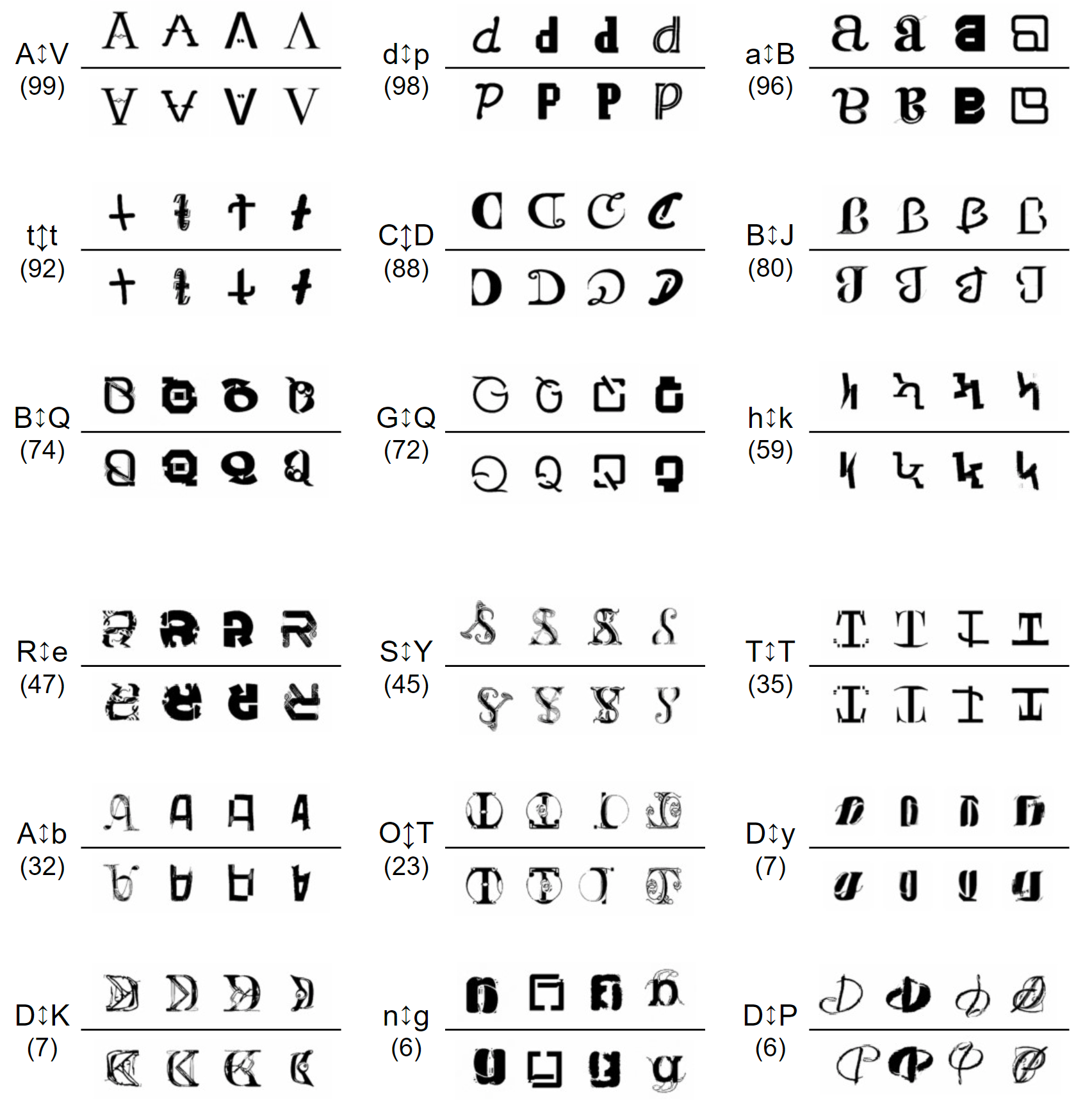

Ambigrams are graphical letter designs that can be read not only from the original direction but also from a rotated direction (especially with 180 degrees). Designing ambigrams is difficult even for human experts because keeping their dual readability from both directions is often difficult. This paper proposes an ambigram generation model. As its generation module, we use a diffusion model, which has recently been used to generate high-quality photographic images. By specifying a pair of letter classes, such as 'A' and 'B', the proposed model generates various ambigram images which can be read as 'A' from the original direction and 'B' from a direction rotated 180 degrees. Quantitative and qualitative analyses of experimental results show that the proposed model can generate high-quality and diverse ambigrams. In addition, we define ambigramability, an objective measure of how easy it is to generate ambigrams for each letter pair. For example, the pair of 'A' and 'V' shows a high ambigramability (that is, it is easy to generate their ambigrams), and the pair of 'D' and 'K' shows a lower ambigramability. The ambigramability gives various hints of the ambigram generation not only for computers but also for human experts.
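The 180-degree reading described above can be made concrete with a small sketch. The grid below is a toy stand-in for a glyph image, not an output of the model, and `rotate_180` is a hypothetical helper name. It shows only the transform under which an ambigram has to stay readable.

```python
from typing import List

Image = List[List[int]]


def rotate_180(image: Image) -> Image:
    """Rotate a 2D grid by 180 degrees: reverse the row order, then each row."""
    return [row[::-1] for row in image[::-1]]


# Toy 3x3 "glyph" used only for illustration.
glyph = [
    [1, 1, 0],
    [0, 1, 0],
    [0, 0, 0],
]

for row in rotate_180(glyph):
    print(row)
```

Rotating twice returns the original grid, which is why an ambigram for the pair ('A', 'B') is also an ambigram for ('B', 'A') when viewed upside down.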

Our proposed method generates ambigrams like the following examples.

The parenthesized number is the ambigramability score (↑) of the letter pair. The upper three rows are rather easy class pairs (with higher ambigramability scores), and the lower three are not.
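This README does not spell out how the score is computed, so the sketch below rests on an assumption: that a score of this kind can be read as the fraction of generated samples that a letter classifier recognizes correctly in both orientations. The function name, the toy classifier, and the sample grids are all hypothetical. Refer to the paper and `calc_ambigramability.py` for the actual definition.

```python
from typing import Callable, List, Sequence

Image = List[List[int]]


def rotate_180(image: Image) -> Image:
    """Rotate a 2D grid by 180 degrees."""
    return [row[::-1] for row in image[::-1]]


def ambigramability_sketch(
    samples: Sequence[Image],
    classify: Callable[[Image], str],
    upright_label: str,
    rotated_label: str,
) -> float:
    """Assumed score: share of samples read correctly in both directions."""
    if not samples:
        raise ValueError("at least one sample is required")
    hits = 0
    for image in samples:
        upright_ok = classify(image) == upright_label
        rotated_ok = classify(rotate_180(image)) == rotated_label
        if upright_ok and rotated_ok:
            hits += 1
    return hits / len(samples)


def toy_classifier(image: Image) -> str:
    """Stand-in classifier: 'A' if the top-left pixel is set, else 'V'."""
    return "A" if image[0][0] == 1 else "V"


toy_samples = [
    [[1, 0], [0, 0]],  # reads 'A' upright and 'V' rotated: counts as a hit
    [[1, 0], [0, 1]],  # reads 'A' in both directions: does not count
]
score = ambigramability_sketch(toy_samples, toy_classifier, "A", "V")
print(score)
```

Under this reading a higher value means an easier pair, which matches the (↑) marker next to the scores.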

We tested all code with Python 3.8.10.

You can install the external libraries with pip as follows.

pip install torch==1.11.0+cu113 torchvision==0.12.0+cu113 torchaudio==0.11.0 --extra-index-url https://download.pytorch.org/whl/cu113

pip install -r requirements.txt
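After installation, a quick check like the one below can confirm which versions are actually in use. This is a minimal sketch, not part of the repository. The helper name `describe_environment` is hypothetical, and it relies only on `torch.__version__` and `torch.cuda.is_available()`.

```python
import importlib.util
import platform
from typing import Dict, Optional


def describe_environment() -> Dict[str, Optional[object]]:
    """Report the Python version and, if torch is installed, its version."""
    info: Dict[str, Optional[object]] = {
        "python": platform.python_version(),
        "torch": None,
        "cuda_available": None,
    }
    # Only import torch when it is installed, so the check itself never fails.
    if importlib.util.find_spec("torch") is not None:
        import torch

        info["torch"] = torch.__version__
        info["cuda_available"] = torch.cuda.is_available()
    return info


print(describe_environment())
```

With the pinned install above, the `torch` entry is expected to start with `1.11.0`. A `None` value means torch was not found in the active environment.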

You can also download pre-trained weights.

Replace each placeholder weight file (weight_name.txt) with the corresponding downloaded weight file.

- weights link: GoogleDrive

- Start the Gradio web app as follows.

python demo.py

- Access 127.0.0.1:11111 with your web browser.
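If the page does not load, it can help to check whether anything is listening on that address. The sketch below uses only the standard library. The helper name `is_port_open` is hypothetical, and the host and port are the ones given above.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False


# Address of the demo as stated in this README.
print(is_port_open("127.0.0.1", 11111))
```

A `False` result usually means `demo.py` is not running yet or is bound to a different port.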

- Set `TestConfigs` in `ambigram_random_sample.py`.
- Run the sampling code as follows.

python ambigram_random_sample.py

- Set `TestConfigs` in `calc_ambigramability.py`.
- Run the calculation code as follows.

python calc_ambigramability.py

- Set `TrainConfigs` in `configs/trainargs.py`. Optionally, you can also edit `DA_ambigram_configs.yaml` (see Sec. 3.2 of the paper for details).
- Run the training code as follows.

## Run on a single GPU

python ambigram_train.py

## Run on multiple GPUs

mpiexec -n [NUM_GPUs] python ambigram_train.py

Note[1]: If you want to generate ambigrams with classifier-free guidance, you must train the conditional model and the unconditional model separately.
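Both models are needed because classifier-free guidance combines a conditional and an unconditional noise prediction at every denoising step. The sketch below shows one common form of that combination on plain lists of floats. The function name and the `scale` parameter are hypothetical, and the exact formulation and scale convention used in this repository may differ.

```python
from typing import List


def guided_noise(
    eps_cond: List[float],
    eps_uncond: List[float],
    scale: float,
) -> List[float]:
    """Combine two noise predictions: uncond + scale * (cond - uncond).

    scale = 0 gives the unconditional prediction, scale = 1 the
    conditional one, and scale > 1 pushes further toward the condition.
    """
    if len(eps_cond) != len(eps_uncond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for c, u in zip(eps_cond, eps_uncond)]


# Toy predictions standing in for the outputs of the two trained models.
cond = [1.0, 2.0]
uncond = [0.0, 1.0]
print(guided_noise(cond, uncond, scale=2.0))
```

In a real sampler the two inputs would be tensors produced by the conditional and unconditional networks for the same noisy image and timestep.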

@inproceedings{shirakawa2023ambigram,

title={Ambigram Generation by A Diffusion Model},

author={Shirakawa, Takahiro and Uchida, Seiichi},

booktitle={2023 17th international conference on document analysis and recognition (ICDAR)},

year={2023}

}