All nodes which are allocated for this job are already filled


I get this weird message when I try to run examples/test_bsp.py on a 4GPU machine:

1.updating ResNet50 params through iterations and

2.exchange the params with BSP(cdd,nccl32)

See output log.

--------------------------------------------------------------------------

All nodes which are allocated for this job are already filled.

--------------------------------------------------------------------------

Rule session 89429 terminated with return code: 0.

Things that I already verified:

- all GPUs are free: 2MiB / 16276MiB memory usage and 0% utilisation.

- CPU memory free: 300GB.

- it works when the list of devices has only one GPU: devices=['cudaX'], where X in {0,1,2,3}.

- it crashes with this error whenever len(devices) > 1.

- the same behavior can be obtained by running EASGD and GSGD.

Any idea how to fix this?

@IstrateRoxana

This message shows up when the allocated slots are fewer than the slots started by mpirun.

You can try with tmlauncher for the same task and enable allocation details by adding the display-allocation option and the problem will be more clear.

Also, you may want to check that the parallel loading process is spawned on the same hostname here as the training process in the display-allocation list, since ResNet50 trains on ImageNet and ImageNet training needs an additional parallel loading process to accelerate loading and preprocessing.

If you also want to see the exact binding of each core, try adding the report-bindings option to the mpirun command.

I managed to solve it; it was nothing related to the framework, more to do with my internal node scheduler.

Is theano-mpi tested with python3? Or python2 only?

@IstrateRoxana

The code was developed on python2. Recently, I added python3 support and debugged some syntax problems. You should be able to run with python3 now. Let me know if you have problems on that.

Hi,

I managed to run up to some point and I discovered these things:

- Enhancement: Imagenet_data class has hardcoded paths to root_dir, and the global variable root_dir is not even used.

- Bug: in build_simpl_block of the ResNet50 network, the parameter names is a map in Python3 and cannot be indexed (e.g. names[0] fails), so it has to be converted to a list.

- When running with one GPU I get this warning:

rank0: bad list is [], extended to 10

rank0: bad list is [], extended to 10

Have you had this warning before?

- After successfully running a few epochs, at epoch 21 I get this error message:

validation cost:12.5297

validation error:0.9948

validation top_5_error:0.9870

global epoch 18 took 0.0019 h

validation cost:13.3466

validation error:1.0000

validation top_5_error:0.9857

global epoch 19 took 0.0019 h

validation cost:13.0445

validation error:1.0000

validation top_5_error:0.9896

weights saved at epoch 20

global epoch 20 took 0.0019 h

validation cost:13.7466

validation error:0.9987

validation top_5_error:0.9909

global epoch 21 took 0.0019 h

--------------------------------------------------------------------------

mpirun noticed that process rank 0 with PID 0 on node zhcc019 exited on signal 9 (Killed).

--------------------------------------------------------------------------

Rule session 10391 terminated with return code: 137.

- Last question is related to the data that gets processed per second. If I want to replicate the results presented in this paper: https://arxiv.org/pdf/1605.08325.pdf, more specifically, I want to check how many images/sec are processed by each GPU, where should I get this information from?

To answer this I first need to know how things work inside the framework, so I split the question above into smaller pieces.

a. Is the batch_size that is set in resnet50.py the batch size per GPU or across all GPUs? E.g. if I use 4 GPUs, is the actual batch size 4 * the batch_size parameter here: https://github.com/uoguelph-mlrg/Theano-MPI/blob/master/theanompi/models/lasagne_model_zoo/resnet50.py#L204? In which part of the code can I measure how much time is spent per batch per device?

b. What is file_batch_size in resnet50.py? Is this related to the files generated by theano_alexnet project? I am a bit confused by how the data is stored.

E.g I ran the theano_alexnet script generate_toy_data.sh with batch_size 128 and num_div 4 and it generated this content:

total 24

drwxr-x--- 2 roi ccs 4096 Jul 19 10:02 labels

drwxr-x--- 2 roi ccs 4096 Jul 19 10:02 misc

drwxr-x--- 2 roi ccs 4096 Jul 19 10:01 train_hkl_b128_b_128.0

drwxr-x--- 2 roi ccs 4096 Jul 19 10:01 train_hkl_b128_b_64.0

drwxr-x--- 2 roi ccs 4096 Jul 19 10:01 val_hkl_b128_b_128.0

drwxr-x--- 2 roi ccs 4096 Jul 19 10:02 val_hkl_b128_b_64.0

In imagenet.py I set train_dir = train_hkl_b128_b_128.0 and val_dir = val_hkl_b128_b_128.0.

Not sure where these folders train_hkl_b128_b_64.0 and val_hkl_b128_b_64.0 are needed.

From my understanding now I think the file_batch_size should be 128 and the batch_size should be 32, am I correct?

However, if I run the test_bsp.py with 4 GPUs I always get a

--------------------------------------------------------------------------

mpirun noticed that process rank 0 with PID 0 on node zhcc015 exited on signal 9 (Killed).

--------------------------------------------------------------------------

Rule session 69242 terminated with return code: 137.

The exact same data but only 2 GPUs works up to a few epochs.
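On the names indexing bug reported above: in Python 3, map returns a lazy iterator, so names[0] raises a TypeError. A minimal sketch of the usual workaround, wrapping the call in list(). The helper make_names and the name templates are hypothetical stand-ins, not the actual code in resnet50.py:

```python
def make_names(templates, prefix):
    """Build layer names eagerly so they can be indexed on Python 2 and 3."""
    # map() alone returns an iterator on Python 3; list() materializes it.
    return list(map(lambda s: s % prefix, templates))


# Hypothetical templates, for illustration only.
names = make_names(['res%s_branch', 'bn%s_branch'], '2a')
first = names[0]  # indexing works because names is a real list

# A bare map object cannot be indexed on Python 3.
try:
    map(str, [1, 2])[0]
    lazy_map_indexable = True
except TypeError:
    lazy_map_indexable = False
```

The same list(...) wrapper is harmless on Python 2, where map already returns a list.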

@IstrateRoxana

Thanks for reporting those.

- Yes, the ImageNet paths should use that global path.

- I will think about how to work around it in python3.

- The two warning lines correspond to sharding the training and validation data for data parallelism. If the number of training/validation batches, say 9, is not divisible by the number of workers, say 4, then the mod, that is 1, equals the length of bad list (well, I should use a better name) and the training batches will be extended to the closest number, that is 12, that is divisible by the number of workers. In your case, both bad lists are empty, which means the number of training/validation batches is divisible by the number of workers (you probably used 1 or 2). So there's no need to extend, and the number of training/validation batches is still 10.

- The mpirun message at the end shows that the mpirun command returned due to a termination signal (9). The signal is received here. Usually, when the mpirun command returns peacefully, it should exit with return code 0. Perhaps some external scheduler has killed your job using signal 9 due to a resource limitation.
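The padding arithmetic behind the bad list warning can be sketched as follows. This is only an illustration of the numbers in the explanation above (9 batches on 4 workers become 12); the function name padded_batch_count is hypothetical and is not part of Theano-MPI:

```python
def padded_batch_count(n_batches, n_workers):
    """Return (extended batch count, remainder) for data-parallel sharding.

    The remainder plays the role of the length of the "bad list": it is the
    number of batches left over when n_batches is split across n_workers.
    """
    remainder = n_batches % n_workers
    if remainder == 0:
        # Already divisible: nothing to extend, as in "extended to 10".
        return n_batches, 0
    # Round up to the next multiple of n_workers.
    return n_batches + (n_workers - remainder), remainder


uneven = padded_batch_count(9, 4)    # 9 batches, 4 workers
even_1 = padded_batch_count(10, 1)   # 10 batches, 1 worker
even_2 = padded_batch_count(10, 2)   # 10 batches, 2 workers
```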

Hei, many thanks for the answer, however I updated the point 5 in the meantime, can you also check that one?

Many thanks again!!

@IstrateRoxana

b. Yes, file_batch_size is the number of images in a file. batch_size is how you actually use this file, e.g., you can further divide the file into smaller portions to use a smaller batch size during training.

If you generated the data batches successfully using the preprocessing script from theano_alexnet, then you should have 10008 files in the train_hkl_b128_b_128 folder, i.e., from 0000_0.pkl to 5004_1.pkl. But it seems you only have 10 files. (This can also be seen from the fact that the epoch time is too short.)

a. If you have more files, then the training goes through more iterations every epoch. For every 5120 images (that is 40 batches of 128), the training will print timing here.

Indeed, I only generated a toy dataset with only 10 batches. What is still not clear from your answer is whether the batch_size is per GPU or not.

My setting has file_batch_size = 128, which is the number of images in one .hkl file, but batch_size = 32. In this case, does each GPU process 32 images? Or does each GPU process 32/4=8 images, considering I am running on 4 GPUs?

@IstrateRoxana

Forgot to answer this question. batch_size is per GPU. So the overall batch size will be the number of workers times the batch_size. This is not related to file_batch_size.
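A small sketch of how the three numbers relate, under two assumptions that are not confirmed in this thread: that the number of sub-batches per file (n_subb in the training loop) is file_batch_size // batch_size, and that file_batch_size must be divisible by batch_size. The function batch_layout is hypothetical:

```python
def batch_layout(file_batch_size, batch_size, n_workers):
    """Relate file size, per-GPU batch size and overall batch size."""
    if file_batch_size % batch_size != 0:
        # Assumption: a file is split into equally sized sub-batches.
        raise ValueError('file_batch_size must be a multiple of batch_size')
    return {
        # How many training iterations one file provides on one GPU.
        'sub_batches_per_file': file_batch_size // batch_size,
        # batch_size is per GPU, so the overall batch grows with workers.
        'effective_batch_size': batch_size * n_workers,
    }


layout = batch_layout(file_batch_size=128, batch_size=32, n_workers=4)
```

With these settings each GPU still sees 32 images per iteration, and the 4 workers together process 128 images per step.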

@IstrateRoxana

The train_hkl_b128_b_64.0 means every file is of batch size 64. You can use them with file_batch_size=64.

All clear now, many many thanks!

I discovered a new problem, which is practically the cause of the seg faults.

1st BUG:

During training of examples/test_bsp.py, the size of the CPU main memory keeps growing until it exceeds the physical limit.

I tried to find the bug but so far with little success. Maybe you can help with this.

My dataset comprises 50 batches of 128 images each. The hkl train folder is 1.2 GB, so there should be no problem in loading it to memory, so I am not sure how 500GB of RAM get filled in a few minutes.

The memory growth starts during the compilation of the functions.

compiling training function...

compiling validation function...

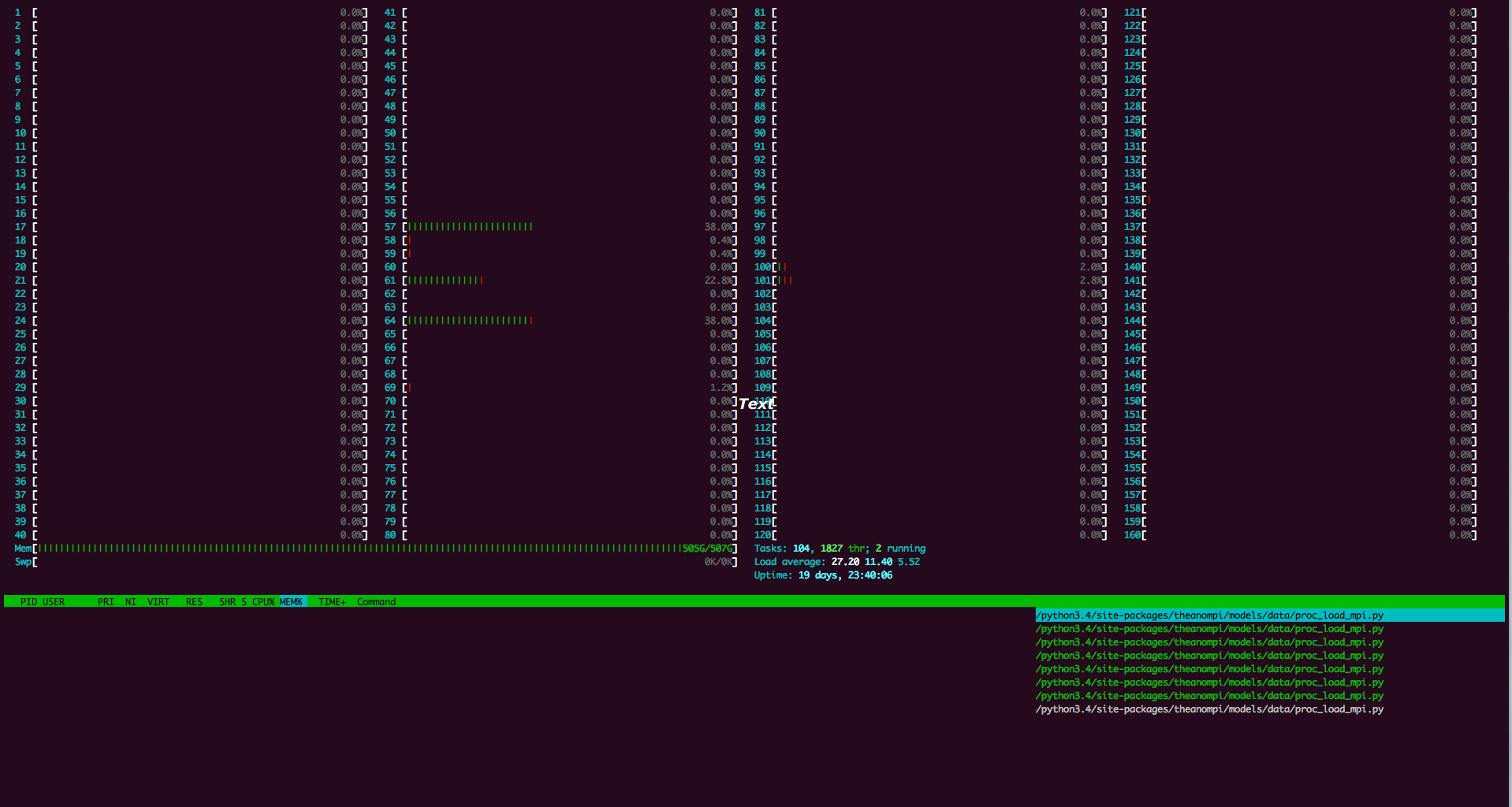

And this is how it ends after only 6 epochs (2 GPUs and batch_size 64):

Also, although num_spawn = 1, htop shows around 20 processes running proc_load_mpi.py when devices = ['cuda0', 'cuda1'].

I changed para_loading to False to check whether it works, and I found a

2nd BUG: When using para_loading =False, it fails with

File "python3.4/site-packages/theanompi/models/lasagne_model_zoo/resnet50.py", line 493, in train_iter

arr = hkl.load(img[self.current_t]) #- img_mean

arr = hkl.load(img[self.current_t]) #- img_mean

NameError: name 'hkl' is not defined

NameError: name 'hkl' is not defined

You forgot to import hickle in resnet50.py

Also, this method overflows the GPU memory. So how can I solve the host memory growth while keeping para_load=True?

@IstrateRoxana

Okay. Let me try reproducing your situation on our Minsky. I will get back to you later.

Sure. Tell me if you need more information.

@IstrateRoxana

For some reason I got into a different problem with hickle. I wonder if it is due to the fact that I preprocessed images using python2 version of hickle, but now trying to load it using python3 version of hickle. Maybe I need to ask @telegraphic those guys.

See this log file.

log.txt

Have you tried using python2?

I used the dev version of hickle with python3 and it worked. Unfortunately I cannot test with python2: my theano is installed for python3 and I do not have root access on the systems.

I had the same problem as you have now, just install hickle dev version, it works with python3

@IstrateRoxana

I see. Do you have any log file of your seg faults?

There was another issue #21 related to python3 and seg faults. It was about updating Theano to the bleeding edge.

Have you tried adding a local PYTHONPATH to use python2

export PYTHONPATH=~/.local_minsky/lib/python2.7/site-packages/

export PATH=$PATH:~/.local_minsky/bin

export CPATH=$CPATH:~/.local_minsky/include

export LIBRARY_PATH=$LIBRARY_PATH:~/.local_minsky/lib

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/.local_minsky/lib

and installing all packages (theano, hickle, mpi4py, theanompi) locally? For example

$ python2 setup.py install --prefix=~/.local_minsky

We have the bleeding edge Theano and Lasagne. To be honest, no, I didn't try yet, and I am preparing some results for a poster which has a deadline in 1 week and 3 days, so I am not sure I have time to reinstall everything.

Anyway a few epochs still work, as you can see in the file below so I am going to limit my experiments to this.

If you find what is filling the memory that badly (I guess it is a bug in proc_load_mpi.py, which is launched by too many processes), please let me know.

@IstrateRoxana

Okay. I haven't run into the "too many loading processes" problem yet. My guess is that the job scheduler failed to kill some zombie processes left over by the last run. I'm not sure about the exceeded host memory issue, though; it may cause the scheduler to kill the python process, giving return code 137, which equals 128+9.

Do you have access to run jobs interactively, e.g., submit an interactive slurm job, go inside the node and start the job?

It's also recommended to start the job with tmlauncher rather than with python, because the latter adds an extra outer python process that wraps an mpirun call to start the workers. Also, under some scheduler settings, using python test_bsp.py will automatically bind this first python process and its child processes to socket 0. And socket 0 is close to GPU0 and GPU1 on a Minsky but far from GPU2 and GPU3, causing performance issues. (It's okay to use python test_bsp.py to start a two GPU job though.)

tmlauncher is a bash script, inside which mpirun will be called. There's also a -bind command line option to bind each python process to the correct socket, i.e. the socket close to the GPU it is using.
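The 128+9 reading of return code 137 follows the common shell convention that a process killed by signal N is reported with exit status 128+N. A minimal sketch of decoding such a code with the standard signal module, assuming a POSIX system and Python 3.5 or later; describe_exit_code is a hypothetical helper name:

```python
import signal


def describe_exit_code(code):
    """Translate a shell-style return code into a readable description."""
    if code == 0:
        return 'exited normally'
    if code > 128:
        signum = code - 128
        try:
            # signal.Signals maps a signal number to its symbolic name.
            name = signal.Signals(signum).name
        except ValueError:
            name = 'unknown signal'
        return 'killed by signal %d (%s)' % (signum, name)
    return 'exited with error code %d' % code


killed = describe_exit_code(137)  # the code seen in the logs above
clean = describe_exit_code(0)
```

SIGKILL cannot be caught by the process, which is consistent with an external scheduler or the kernel's out-of-memory handling ending the job.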

I have access to run a job interactively. Before every run I make sure there are no zombies; I actually kill all python processes. All jobs start from 20GB of RAM in use, which is how much the system needs to correctly run the queueing system and all other default services, so no old zombies are using the memory.

I also check with htop how the memory consumption evolves, and always, as soon as the first Spawn is called with proc_load_mpi.py, the RAM usage starts growing very fast until the node starts thrashing and the job is killed with signal 9 (SIGKILL), as you mentioned.

I tried running tmlauncher -cfg=launch_session.cfg, but this runs with python2 behind the scenes, no? It cannot find some packages that are found by simply running python3 <script.py>

@IstrateRoxana

Okay. I guess more tests now need to be done in python3 to reproduce those memory and extra process issues.

Yeah, you are right. This bash script needs to be re-designed with python3 in mind.

For now, perhaps a quick hack can be

alias python='python3'

Or replacing the five occurrences of python in the tmlauncher script with python3.

After changing all python to python3 in tmlauncher script I get this error

model size 24.373 M floats

--------------------------------------------------------------------------

There are not enough slots available in the system to satisfy the 1 slots

that were requested by the application:

/opt/at9.0/bin/python3

Either request fewer slots for your application, or make more slots available

for use.

--------------------------------------------------------------------------

Traceback (most recent call last):

File "theano/theano-mpi-master-2017-07-18/lib64/python3.4/site-packages/theanompi/worker.py", line 152, in <module>

model = modcls(config)

File "theano/theano-mpi-master-2017-07-18/lib64/python3.4/site-packages/theanompi/models/lasagne_model_zoo/resnet50.py", line 339, in __init__

self.data.spawn_load()

File "theano/theano-mpi-master-2017-07-18/lib64/python3.4/site-packages/theanompi/models/data/imagenet.py", line 255, in spawn_load

info = mpiinfo, maxprocs = num_spawn)

File "MPI/Comm.pyx", line 1559, in mpi4py.MPI.Intracomm.Spawn (src/mpi4py.MPI.c:113260)

mpi4py.MPI.Exception: MPI_ERR_SPAWN: could not spawn processes

Which doesn't appear when running with python3 directly

@IstrateRoxana

As I said earlier, this is probably due to undersubscribed slots when using mpirun, which is a common problem of openmpi. For example, one case might be that more slots need to be declared when using mpirun to start the job. See the OMPI FAQ. You can add something like --host zhcc015,zhcc015,zhcc015,zhcc015 to subscribe 4 slots or provide host file my-hosts like

$ cat my-hosts

zhcc015 slots=4 max_slots=160

anothernode slots=4 max_slots=160

to increase the number of subscribed slots, so that whenever an additional process needs to be spawned during the program's lifetime, there are free slots to do so.

You can try with tmlauncher for the same task and enable allocation details by adding the display-allocation option and the problem will be more clear.

Also, you may want to check that the parallel loading process is spawned on the same hostname here as the training process in the display-allocation list, since ResNet50 trains on ImageNet and ImageNet training needs an additional parallel loading process to accelerate loading and preprocessing.

I recommend doing the first check first: turn on display-allocation and see how many slots were allocated. Each training process counts one and each loading process counts one as well.
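A rough sketch of the slot count described above, assuming each training worker spawns the same number of loading processes (num_spawn, which is 1 earlier in this thread). The helpers required_slots and hostfile_line are hypothetical; the slots= syntax is the one used in the my-hosts example:

```python
def required_slots(n_workers, loaders_per_worker=1):
    """Slots needed if every worker spawns loaders_per_worker loaders.

    Each training process counts as one slot and each loading process
    counts as one slot as well.
    """
    return n_workers * (1 + loaders_per_worker)


def hostfile_line(hostname, slots):
    """Format one host file entry in the 'host slots=N' style."""
    return '%s slots=%d' % (hostname, slots)


slots_4gpu = required_slots(4)                 # 4 workers, 1 loader each
line = hostfile_line('zhcc015', slots_4gpu)
```

Under this assumption a 4 GPU job needs 8 slots on the node, so a host file that only declares 4 leaves no room for the spawned loaders.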

Also I kind of suspect your mpirun command is not from the same openmpi library as linked with mpi4py, because of the different behaviours between using tmlauncher and python3 test_bsp.py. You may want to check which mpirun and use check_mpi.py to verify.

Is it possible that with ResNet50 1GPU (Nvidia P100), batch size 32, i get these timings?

model size 24.373 M floats

loading 40958 started

compiling training function...

compiling validation function...

Compile time: 230.488 s

40 7.605549 0.998828

time per 40 batches: 48.80 (train 48.68 sync 0.00 comm 0.00 wait 0.12)

validation cost:7.6619

validation error:0.9984

validation top_5_error:0.9930

weights saved at epoch 0

global epoch 0 took 0.0141 h

40 7.601627 0.998828

time per 40 batches: 47.23 (train 47.14 sync 0.00 comm 0.00 wait 0.09)

validation cost:7.6594

validation error:0.9984

validation top_5_error:0.9938

global epoch 1 took 0.0136 h

40 7.603139 0.998633

time per 40 batches: 47.73 (train 47.60 sync 0.00 comm 0.00 wait 0.13)

if we compute the number of images/sec is 40 * 32 / 47.23 = 27.1 images/sec, while with TensorFlow 1 gpu, same batch_size, i get 140.44 images/sec. Am I doing something wrong?

What is the bottleneck here?

@IstrateRoxana

Do you mean the speed bottleneck of single GPU training? That would be attributed to the speed of Theano and cuDNN I assume, since Theano-MPI just wraps theano functions inside.

For multiple GPU training, the speed bottlenecks of Theano-MPI are synchronization between GPUs and inter-GPU communication. See the 4GPU ResNet training log here on an IBM Power8 Minsky. The synchronization overhead comes from the execution speed difference between GPUs. The communication overhead is related to the inter-GPU transfer bandwidth and NUMA topology (crossing SMP/QPI is needed when using 4 GPUs). Hopefully it can be faster if NCCL2 is incorporated when it comes out.

The convergence bottleneck as mentioned in the Facebook paper (#22) is due to the optimization difficulty and generalization error when using large batch SGD.

But when running a training on the same architecture, 1 gpu, 32 batch size, pure theano I can process 100 images in below 2 seconds, going to 73 images/second. I meant, in which part is theano-mpi adding so much overhead?

@IstrateRoxana

Sorry, I just saw your calculations

if we compute the number of images/sec is 40 * 32 / 47.23 = 27.1 images/sec, while with TensorFlow 1 gpu, same batch_size, i get 140.44 images/sec. Am I doing something wrong?

So the time here is per 40 file batches of 128 images, as can be seen here,

while batch_i <model.data.n_batch_train:

The batch_i corresponds to a file batch.

So the timing is per 40 * 128 = 5120 images: 40 * 128 / 47.23 = 4 * 27.1 = 108.4 images/sec. You can also check some other benchmarks in this notebook.
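The corrected throughput calculation can be written out as a short sketch. The function images_per_second is a hypothetical helper; the numbers are the ones from the log above (40 files of 128 images in 47.23 s on one GPU):

```python
def images_per_second(n_files, file_batch_size, seconds):
    """Per-GPU throughput when timing is reported per n_files file batches."""
    return n_files * file_batch_size / float(seconds)


# Timing line from the log: "time per 40 batches: 47.23"
correct = images_per_second(40, 128, 47.23)   # counts whole 128-image files
mistaken = images_per_second(40, 32, 47.23)   # counts only one sub-batch per file
```

The first value is about 108 images/sec and the second about 27 images/sec, so using batch_size instead of file_batch_size underestimates the speed by a factor of 128 / 32 = 4.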

@IstrateRoxana

The timing code here is designed to be batch_size agnostic. So even if you use batch_size=16 in ResNet50, the timing will still be around 40-50 seconds (a little longer than with batch_size=32 because of the inefficiency of small batch sizes). This is because, as shown in the code below, the outer loop is per file and the inner loop is per sub-batch (which is per 32 images in your case). The printing happens in the outer loop, which is why no matter what batch size you choose, e.g., 64, 32, or 16, and however many sub-batches the inner loop has, the timing is always per 128-image file batch.

    while batch_i <model.data.n_batch_train:
        for subb_i in range(model.n_subb):
            model.train_iter(batch_i, recorder)
            if exch_iteration % exchange_freq == 0:
                exchanger.exchange(recorder)
            exch_iteration+=1
        batch_i+=1
        recorder.print_train_info(batch_i*self.size)

So the info printed here is actually per file, and the batches in "time per 40 batches:" actually refer to 128-sized files.