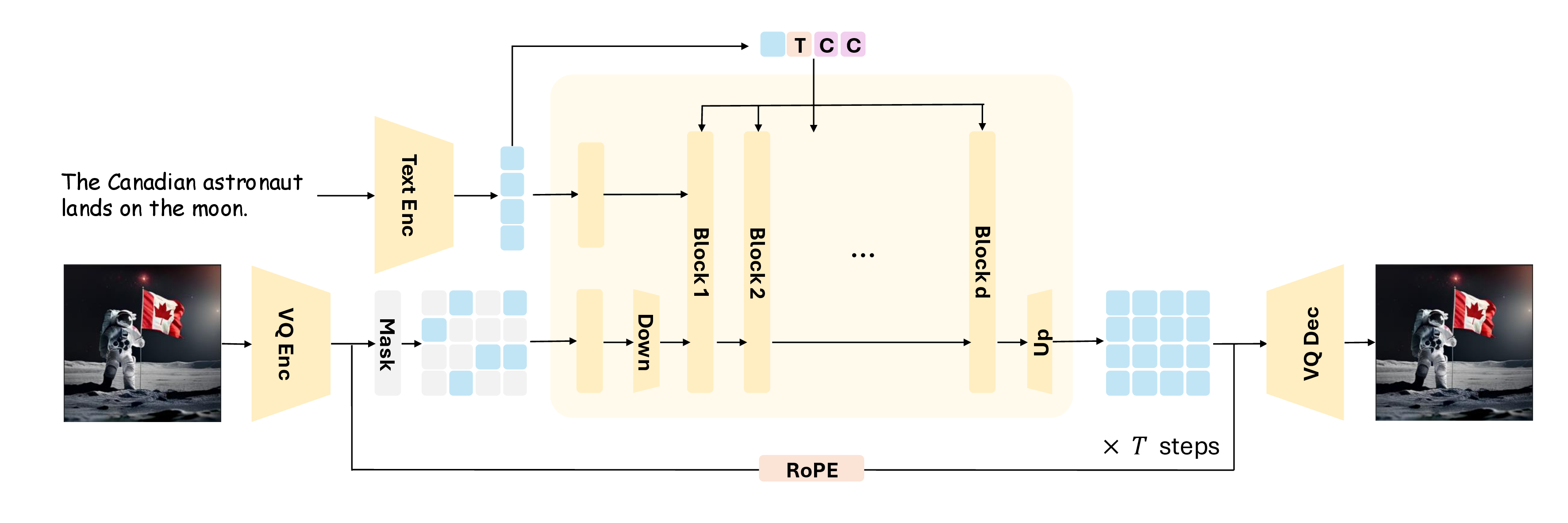

Meissonic: Revitalizing Masked Generative Transformers for Efficient High-Resolution Text-to-Image Synthesis

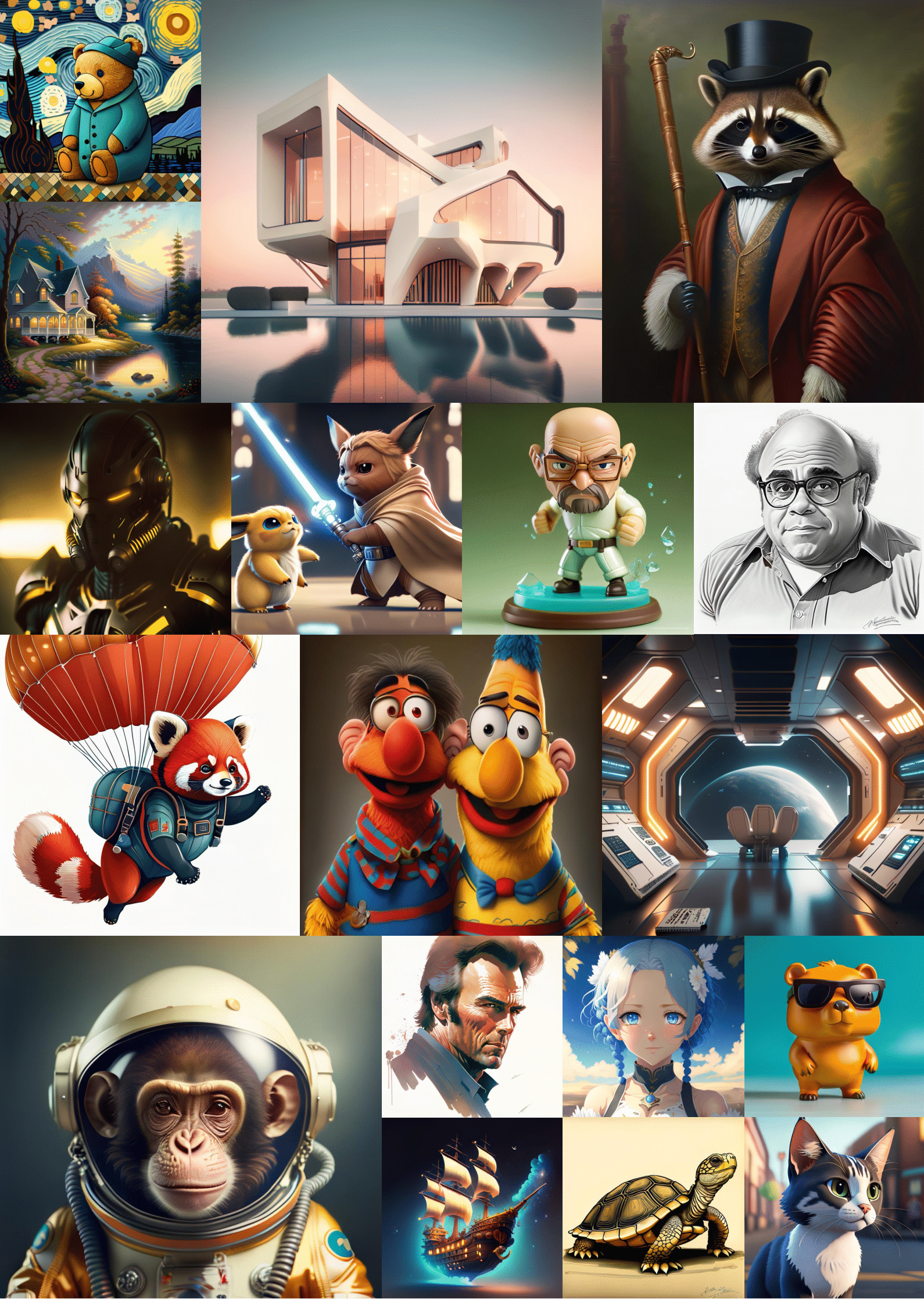

Meissonic is a non-autoregressive, masked image modeling (MIM) text-to-image synthesis model that generates high-resolution images and is designed to run on consumer graphics cards.
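"Non-autoregressive" means that many image tokens are predicted in parallel at each step, rather than one token at a time. The sketch below simulates that style of decoding loop with a generic MaskGIT-style cosine masking schedule. The schedule, the random "confidence" stand-in for the transformer, and the 64x64 token grid are all illustrative assumptions, not Meissonic's actual implementation.

```python
import math
import random
from typing import List


def cosine_mask_schedule(num_tokens: int, steps: int) -> List[int]:
    """Number of tokens still masked after each of `steps` decoding steps.

    Assumed MaskGIT-style cosine schedule: after step t of T, a fraction
    cos(pi/2 * t/T) of the tokens stays masked, falling from ~1 to 0.
    """
    return [
        math.floor(num_tokens * math.cos(math.pi / 2 * t / steps))
        for t in range(1, steps + 1)
    ]


def simulate_parallel_decoding(num_tokens: int, steps: int, seed: int = 0) -> List[int]:
    """Return how many tokens are revealed (kept as final) at each step."""
    rng = random.Random(seed)
    masked = set(range(num_tokens))
    revealed_per_step = []
    for still_masked in cosine_mask_schedule(num_tokens, steps):
        # Stand-in for the transformer: a random confidence score per masked token.
        # A real model would predict every masked token at once and score each prediction.
        confidence = {i: rng.random() for i in masked}
        n_reveal = len(masked) - still_masked
        # Keep the most confident predictions; the rest stay masked for the next step.
        to_reveal = sorted(masked, key=confidence.__getitem__, reverse=True)[:n_reveal]
        masked.difference_update(to_reveal)
        revealed_per_step.append(n_reveal)
    return revealed_per_step


# Hypothetical example: a 64x64 grid of image tokens decoded in 64 steps.
per_step = simulate_parallel_decoding(num_tokens=64 * 64, steps=64)
print(len(per_step), sum(per_step))  # 64 4096
```

With a cosine schedule, early steps commit only a few tokens and later steps commit many, so all 4096 tokens are produced in 64 forward passes; an autoregressive decoder would need one pass per token.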

Key Features:

- 🖼️ High-resolution image generation (up to 1024x1024)

- 💻 Designed to run on consumer GPUs

- 🎨 Versatile applications: text-to-image, image-to-image

Installation:

```bash
git clone https://github.com/viiika/Meissonic/
cd Meissonic
conda create --name meissonic python
conda activate meissonic
pip install -r requirements.txt
```

Install diffusers from source:

```bash
git clone https://github.com/huggingface/diffusers.git
cd diffusers
pip install -e .
cd ..
```

Usage:

Gradio demo:

```bash
python app.py
```

Command-line inference:

```bash
python inference.py --prompt "Your creative prompt here"
```

Inpainting and outpainting:

```bash
python inpaint.py --mode inpaint --input_image path/to/image.jpg
python inpaint.py --mode outpaint --input_image path/to/image.jpg
```

Reduce GPU memory usage with FP8 quantization:

Requirements:

- CUDA 12.4

- PyTorch 2.4.1

- TorchAO
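Before trying FP8 inference, it can help to confirm which versions are installed. The sketch below reads package versions with the standard-library `importlib.metadata` and, if PyTorch is importable, reports `torch.version.cuda`, the CUDA version PyTorch was built against (not necessarily the system driver's version). Treating PyTorch 2.4.1 and CUDA 12.4 as exact pins is an assumption; nearby versions may also work.

```python
import importlib.metadata
from typing import Optional

# Versions listed in the FP8 requirements, treated here as exact pins (an assumption).
REQUIRED_TORCH = "2.4.1"
REQUIRED_CUDA = "12.4"


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a pip package, or None if it is missing."""
    try:
        return importlib.metadata.version(package)
    except importlib.metadata.PackageNotFoundError:
        return None


def check_fp8_requirements() -> dict:
    torch_version = installed_version("torch")
    cuda_version = None
    if torch_version is not None:
        try:
            import torch
            # CUDA version PyTorch was built with, e.g. "12.4"; None for CPU-only builds.
            cuda_version = torch.version.cuda
        except ImportError:
            pass
    return {
        "torch": torch_version,
        # Strip local build tags such as "+cu124" before comparing.
        "torch_ok": torch_version is not None
        and torch_version.split("+")[0] == REQUIRED_TORCH,
        "torchao": installed_version("torchao"),
        "cuda": cuda_version,
        "cuda_ok": cuda_version == REQUIRED_CUDA,
    }


print(check_fp8_requirements())
```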

Note: Windows users should install TorchAO with:

```bash
pip install --pre torchao --index-url https://download.pytorch.org/whl/nightly/cpu
```

Command-line inference:

```bash
python inference_fp8.py --quantization fp8
```

Gradio demo for FP8 (select the quantization method under Advanced settings):

```bash
python app_fp8.py
```

| Precision (Steps=64, Resolution=1024x1024) | Avg. Time (Batch Size=1) | Memory Usage |
|---|---|---|
| FP32 | 13.32s | 12GB |
| FP16 | 12.35s | 9.5GB |
| FP8 | 12.93s | 8.7GB |
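To read the benchmark as relative savings, the short calculation below expresses each precision's time and memory as a percentage reduction versus FP32. The numbers are copied from the benchmark table; the helper name `relative_to_fp32` is hypothetical.

```python
# Figures from the benchmark table (Steps=64, 1024x1024, batch size 1).
BENCHMARKS = {
    "FP32": {"time_s": 13.32, "memory_gb": 12.0},
    "FP16": {"time_s": 12.35, "memory_gb": 9.5},
    "FP8": {"time_s": 12.93, "memory_gb": 8.7},
}


def relative_to_fp32(benchmarks: dict) -> dict:
    """Map precision -> (% memory saved, % time saved) relative to FP32."""
    base = benchmarks["FP32"]
    result = {}
    for precision, row in benchmarks.items():
        memory_saving = 100 * (1 - row["memory_gb"] / base["memory_gb"])
        time_saving = 100 * (1 - row["time_s"] / base["time_s"])
        result[precision] = (round(memory_saving, 1), round(time_saving, 1))
    return result


for precision, (mem_pct, time_pct) in relative_to_fp32(BENCHMARKS).items():
    print(f"{precision}: {mem_pct}% less memory, {time_pct}% less time than FP32")
```

By this measure FP8 saves the most memory (about 27.5%), while FP16 is the fastest (about 7.3% quicker than FP32).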

If you find this work helpful, please consider citing:

```bibtex
@article{bai2024meissonic,
  title={Meissonic: Revitalizing Masked Generative Transformers for Efficient High-Resolution Text-to-Image Synthesis},
  author={Bai, Jinbin and Ye, Tian and Chow, Wei and Song, Enxin and Chen, Qing-Guo and Li, Xiangtai and Dong, Zhen and Zhu, Lei and Yan, Shuicheng},
  journal={arXiv preprint arXiv:2410.08261},
  year={2024}
}
```

We thank the community and contributors for their invaluable support in developing Meissonic. We thank apolinario@multimodal.art for making the Meissonic demo. We thank @NewGenAI and @飛鷹しずか@自称文系プログラマの勉強 for making YouTube tutorials. We thank @pprp for adding FP8 and INT4 quantization. We thank @camenduru for making the Jupyter tutorial. We thank @chenxwh for making the Replicate demo and API. We thank Collov Labs for reproducing Monetico.

Made with ❤️ by MeissonFlow Research