This project is part of the Inamarine 2024 project. Inamarine 2024 is an exhibition event held in Jakarta, Indonesia, that showcases the latest technology in the maritime industry. Inamarine Vision contains two projects: Inamarine Vision Gesture and Inamarine Vision Object Detection. Inamarine Vision Gesture recognizes hand gestures using the MediaPipe library. Inamarine Vision Object Detection detects buoys using YOLOv5. The weights file was originally in `.pt` format, but it has been converted to `.bin` and `.xml` (OpenVINO format) for Intel NUC compatibility.

To install the general requirements of the Inamarine Vision project, follow the steps below:

- Create a ROS2 workspace

      mkdir -p ~/ros2_ws/src
      cd ~/ros2_ws/src

- Clone the repository

      git clone https://github.com/Barunastra-ITS/inamarine-vision

- Install the dependencies

      pip install -r requirements.txt
      sudo apt install ros-humble-vision-msgs

- Build the project

      cd ~/ros2_ws
      colcon build

- Source the project

      source ~/ros2_ws/install/setup.bash

To install the Inamarine Vision Gesture project, follow the steps below:

- Move the weights file and the label file to the build directory

      cp ~/ros2_ws/src/vision_gesture/vision_gesture/model/keypoint_classifier_8_class.tflite ~/ros2_ws/build/lib/vision_gesture/model/
      cp ~/ros2_ws/src/vision_gesture/vision_gesture/model/keypoint_classifier_label.csv ~/ros2_ws/build/lib/vision_gesture/model/

- Change the label file path in the `vision_gesture.py` file

      with open('{your_home_directory}/ros2_ws/build/vision_gesture/build/lib/vision_gesture/model/keypoint_classifier_label.csv', encoding='utf-8-sig') as f:
          self.keypoint_labels = [row[0] for row in csv.reader(f)]

- Change the weights file path in `model/keypoint_classifier.py`

      def __init__(
          self,
          model_path='{your_home_directory}/ros2_ws/build/vision_gesture/build/lib/vision_gesture/model/keypoint_classifier_8_class.tflite',
          num_threads=1,
      ):

To install the Inamarine Vision Object Detection project, follow the steps below:

- Install the OpenVINO toolkit version 2023.0.2, and also install the pip package for the OpenVINO Python API.

      pip install openvino-dev==2023.0.2

- Move the weights and the label file to the build directory. There are two sets of trained weights, one trained with SGD and one with Adam. Choose one of them. Each set is a directory, so it is copied recursively.

      cp -r ~/ros2_ws/src/yolov5/yolov5/trained/yolov5n_adam ~/ros2_ws/build/lib/yolov5/trained/ # for Adam
      cp -r ~/ros2_ws/src/yolov5/yolov5/trained/yolov5n_SGD ~/ros2_ws/build/lib/yolov5/trained/ # for SGD

- Change the weights path in the `detect.py` file

      self.weights = '{your_home_directory}/ros2_ws/build/yolov5/build/lib/yolov5/trained/yolov5n_{adam_or_SGD}/yolov5n_yolov5n_{adam_or_SGD}_openvino_model/'

- Change the label file path in the `detect.py` file

      self.data = '{your_home_directory}/ros2_ws/build/yolov5/build/lib/yolov5/trained/yolov5n_{adam_or_SGD}/yolov5n_yolov5n_{adam_or_SGD}_openvino_model/yolov5n_{adam_or_SGD}.yaml'

To use the Inamarine Vision Gesture project, run the `vision_gesture.py` file:

    cd ~/ros2_ws
    ros2 run vision_gesture vision_gesture

To use the Inamarine Vision Object Detection project, run the `detect.py` file:

    cd ~/ros2_ws
    ros2 run yolov5 detect

`/gesture_recognition` (`std_msgs/String`)

`/inference/image_raw` (`sensor_msgs/Image`), `/inference/object_raw` (`yolo_msgs/msg/BoundingBoxArray`)

`String` (`std_msgs/String`)

`String` (`std_msgs/String`), `BoundingBox2D` (`vision_msgs/msg/BoundingBox2D`), `Image` (`sensor_msgs/msg/Image`), `Header` (`std_msgs/msg/Header`)

`BoundingBox` (`yolo_msgs/msg/BoundingBox`):

- `string class_name`
- `vision_msgs/BoundingBox2D bounding_box`

`BoundingBoxArray` (`yolo_msgs/msg/BoundingBoxArray`):

- `std_msgs/Header header`
- `BoundingBox[] bounding_box_array`
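To make the nesting of the custom messages easier to picture, here is a minimal sketch using plain Python dataclasses as stand-ins for the message types. These are not the generated ROS2 classes. The center/size layout of the simplified `BoundingBox2D`, the reduction of the header to a `frame_id` string, and the helper `to_corners` are assumptions made for illustration only.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class BoundingBox2D:
    # Simplified stand-in for vision_msgs/BoundingBox2D (assumption:
    # a box described by its center and its size, in pixels).
    center_x: float
    center_y: float
    size_x: float
    size_y: float


@dataclass
class BoundingBox:
    # Mirrors yolo_msgs/msg/BoundingBox: a class name plus a 2D box.
    class_name: str
    bounding_box: BoundingBox2D


@dataclass
class BoundingBoxArray:
    # Mirrors yolo_msgs/msg/BoundingBoxArray; the std_msgs/Header is
    # reduced to a frame_id string here for brevity.
    frame_id: str
    bounding_box_array: List[BoundingBox] = field(default_factory=list)


def to_corners(box: BoundingBox2D) -> Tuple[float, float, float, float]:
    """Convert a center/size box to (x_min, y_min, x_max, y_max)."""
    half_w = box.size_x / 2.0
    half_h = box.size_y / 2.0
    return (box.center_x - half_w, box.center_y - half_h,
            box.center_x + half_w, box.center_y + half_h)


# Hypothetical detection, as a consumer of the array might see it.
detections = BoundingBoxArray(
    frame_id="camera",
    bounding_box_array=[
        BoundingBox("buoy", BoundingBox2D(320.0, 240.0, 100.0, 50.0)),
    ],
)
for det in detections.bounding_box_array:
    print(det.class_name, to_corners(det.bounding_box))
```

A real subscriber would receive the generated `yolo_msgs` message classes instead, but the loop over `bounding_box_array` would likely look much the same.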

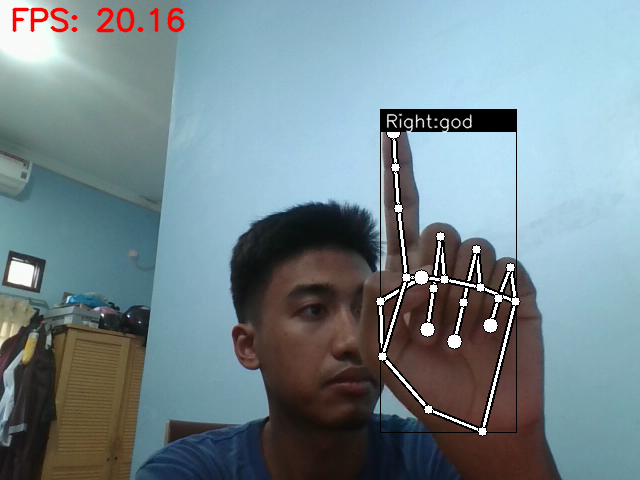

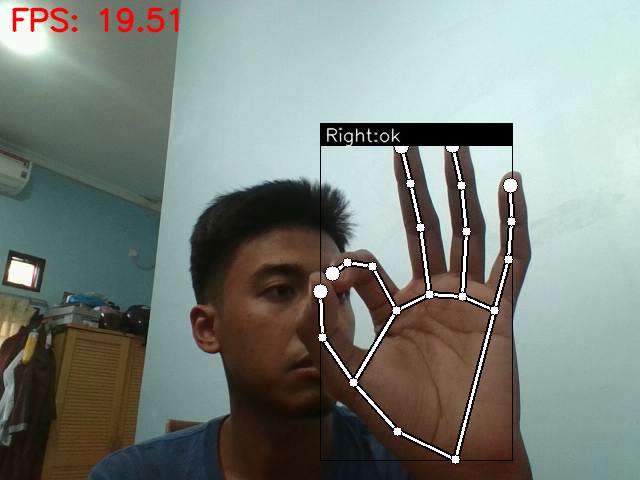

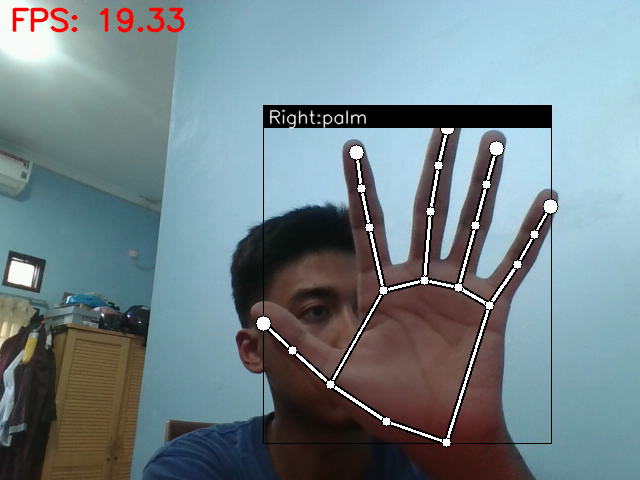

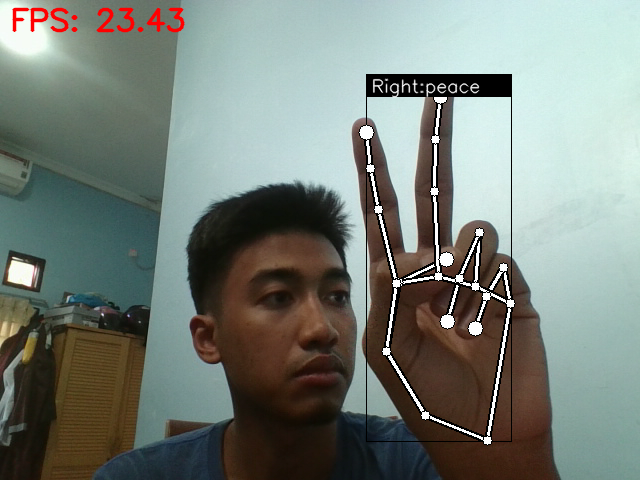

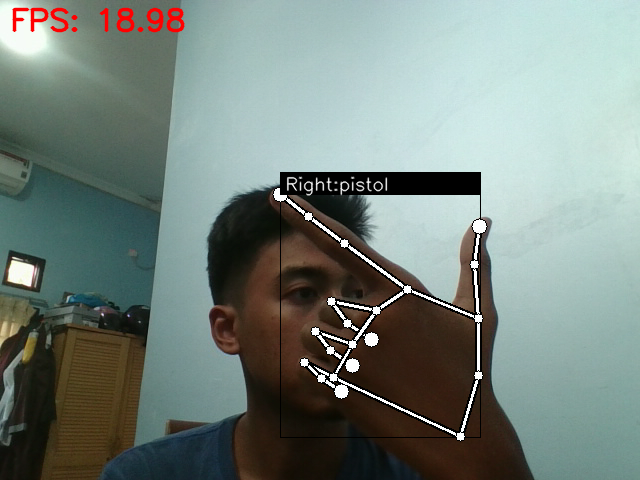

The Inamarine Vision Gesture project can recognize 8 hand gestures. The gestures are:

| Class Name | Class ID | Example |
|---|---|---|
| fist | 0 |  |
| god | 1 |  |
| handsome | 2 |  |
| ok | 3 |  |
| palm | 4 |  |
| peace | 5 |  |
| pistol | 6 |  |
| thumbs_up | 7 |  |
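The class IDs in the table can be related to the label file with a short sketch. It reuses the list comprehension from `vision_gesture.py` and assumes that `keypoint_classifier_label.csv` holds one class name per row, in class-ID order. The CSV contents below are a hypothetical reconstruction from the table, not a copy of the real file, and `load_labels` is an illustrative helper.

```python
import csv
import io

# Hypothetical file contents: one class name per row, in class-ID order.
LABEL_CSV = "fist\ngod\nhandsome\nok\npalm\npeace\npistol\nthumbs_up\n"


def load_labels(stream):
    """Read the first column of each non-empty CSV row as a class name."""
    return [row[0] for row in csv.reader(stream) if row]


# The row index is the class ID, so a list is enough for the lookup.
keypoint_labels = load_labels(io.StringIO(LABEL_CSV))

for class_id, name in enumerate(keypoint_labels):
    print(class_id, name)
```

With a real file, the stream would come from `open(path, encoding='utf-8-sig')`, as in the node's own code.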

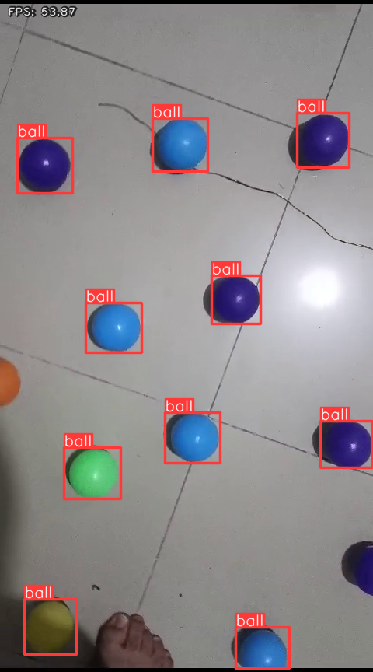

The Inamarine Vision Object Detection project can only recognize 1 object. The object is:

| Class Name | Class ID | Example |
|---|---|---|
| buoy | 0 |  |

- In Inamarine Vision Object Detection, if you run inference on a video file on the CPU, change the model input shape from `(1, 3, 640, 640)` to `(1, 3, 480, 640)`. You can change the model shape using the `export.py` file.

  - Modify the `export.py` file

        def parse_opt(known=False):
            ...
            parser = argparse.ArgumentParser()
            ...
            parser.add_argument("--imgsz", "--img", "--img-size", nargs="+", type=int, default=[640, 640], help="image (h, w)")  # change the default value to [480, 640]

  - Run the `export.py` file

        python export.py --weights yolov5n_{adam_or_SGD}.pt --device cpu --include openvino

  - Modify the
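Because `--imgsz` is defined as an ordinary command-line option with `nargs="+"`, it may also be possible to pass the new size on the command line rather than editing the default. The sketch below rebuilds only that one argument in a standalone parser to show how the values would be parsed. It does not run `export.py`, and `build_parser` is an illustrative name.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Standalone parser holding only the --imgsz option."""
    parser = argparse.ArgumentParser()
    # Same definition as the --imgsz line quoted from export.py.
    parser.add_argument("--imgsz", "--img", "--img-size", nargs="+", type=int,
                        default=[640, 640], help="image (h, w)")
    return parser


parser = build_parser()

# Without the flag, the default height and width are used.
default_shape = parser.parse_args([]).imgsz

# With the flag, the two integers override the default (h, w).
video_shape = parser.parse_args(["--imgsz", "480", "640"]).imgsz

print(default_shape)  # [640, 640]
print(video_shape)    # [480, 640]
```

Under that assumption, appending `--imgsz 480 640` to the export command would have the same effect as changing the default. This has not been verified against this repository's copy of `export.py`.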

This project is licensed under the MIT License - see the LICENSE file for details.