# A Collection of Papers on Large Language Model Agents

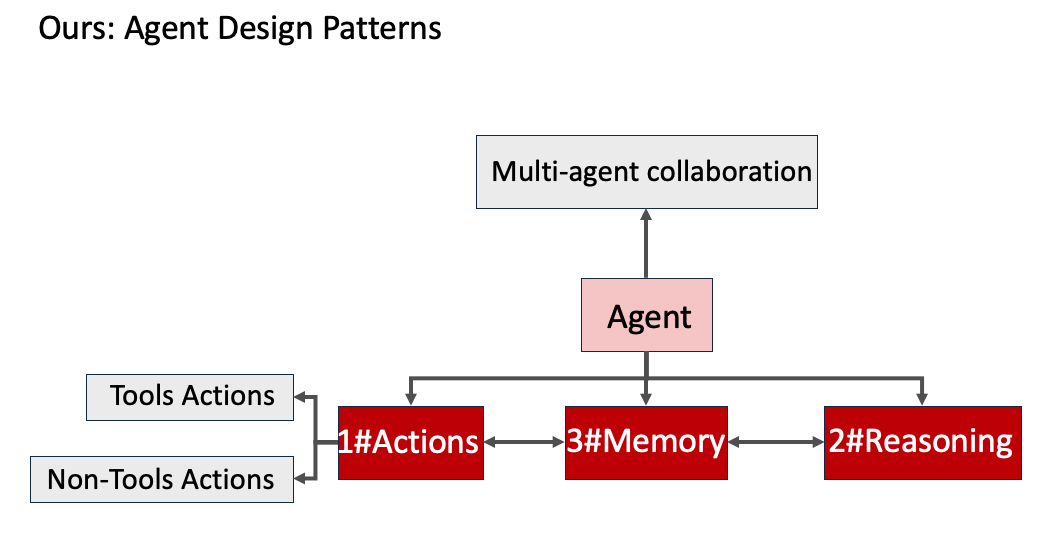

Our collection of papers focuses on various aspects of LLM-Agent design patterns. These design patterns are abstracted and illustrated in the figure above. The collection is organized into five categories: actions, memory, reasoning, agents, and multi-agent collaboration.

In addition to the aspects shown in the figure, this repository also covers LLM-Agent overviews, benchmarks, and prompt design.

- Tulip Agent -- Enabling LLM-Based Agents to Solve Tasks Using Large Tool Libraries 2024.7

- Reason for Future, Act for Now: A Principled Architecture for Autonomous LLM Agents ICML 2024

- Multi-Programming Language Sandbox for LLMs 2024.10

- 360° REA: Towards A Reusable Experience Accumulation with Assessment for Multi-Agent System ACL 2024

- Symbolic Working Memory Enhances Language Models for Complex Rule Application 2024.8

- MemoRAG: Moving towards Next-Gen RAG Via Memory-Inspired Knowledge Discovery 2024.9

- Agent Workflow Memory 2024.9

- AgentGen: Enhancing Planning Abilities for Large Language Model based Agent via Environment and Task Generation 2024.8

- Optimus-1: Hybrid Multimodal Memory Empowered Agents Excel in Long-Horizon Tasks 2024.8

- Position: LLMs Can’t Plan, But Can Help Planning in LLM-Modulo Frameworks ICML 2024

- Rethinking the Bounds of LLM Reasoning: Are Multi-Agent Discussions the Key? ACL 2024

- Strategic Chain-of-Thought: Guiding Accurate Reasoning in LLMs through Strategy Elicitation 2024.9

- Self-Harmonized Chain of Thought 2024.9

- Iteration of Thought: Leveraging Inner Dialogue for Autonomous Large Language Model Reasoning 2024.9

- Textualized Agent-Style Reasoning for Complex Tasks by Multiple Round LLM Generation 2024.9

- Logic-of-Thought: Injecting Logic into Contexts for Full Reasoning in Large Language Models 2024.9

- BEATS: Optimizing LLM Mathematical Capabilities with BackVerify and Adaptive Disambiguate Based Efficient Tree Search 2024.9

- HDFlow: Enhancing LLM Complex Problem-Solving with Hybrid Thinking and Dynamic Workflows 2024.9

- CoMAT: Chain of Mathematically Annotated Thought Improves Mathematical Reasoning 2024.10

- Optimizing Chain-of-Thought Reasoning: Tackling Arranging Bottleneck via Plan Augmentation 2024.10

- From LLMs to LLM-based Agents for Software Engineering: A Survey of Current, Challenges and Future 2024.8

- MathLearner: A Large Language Model Agent Framework for Learning to Solve Mathematical Problems 2024.8

- Executable Code Actions Elicit Better LLM Agents ICML 2024

- Agent Q: Advanced Reasoning and Learning for Autonomous AI Agents 2024.8

- SciAgents: Automating scientific discovery through multi-agent intelligent graph reasoning 2024.9

- Experiential Co-Learning ACL 2024

- Should we be going MAD? A Look at Multi-Agent Debate Strategies for LLMs ICML 2024

- Improving Factuality and Reasoning in Language Models through Multiagent Debate ICML 2024

- Synergistic Simulations: Multi-Agent Problem Solving with Large Language Models

- CFBench: A Comprehensive Constraints-Following Benchmark for LLMs 2024.8

- TravelPlanner: A Benchmark for Real-World Planning with Language Agents ICML 2024

- LLMs Still Can't Plan; Can LRMs? A Preliminary Evaluation of OpenAI's o1 on PlanBench 2024.9

- Omni-MATH: A Universal Olympiad Level Mathematic Benchmark For Large Language Models

This repository will be continuously updated as new research on LLM agents is published. Stay tuned for the latest papers, benchmarks, and innovations in the field.