How did you convert the frozen inference model to a Core ML model with tfcoreml?


Hello Yin,

I tried to convert the frozen inference model to a Core ML model with tfcoreml.

I have followed the tutorial mentioned here:

https://github.com/tf-coreml/tf-coreml

but I get the following error during conversion:

ValueError: output name: input_to_float, was provided, but the Tensorflow graph does not contain a tensor with this name.
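A likely cause of this error, assuming the name was passed as an op name: TensorFlow identifies tensors as `op_name:output_index`, and the tf-coreml README asks for output names with ":0" appended. The first output of the op `input_to_float` is the tensor `input_to_float:0`, so a lookup for the bare op name finds nothing. (Note also that the later log shows `input_to_float__0` as the model's *input*, so it probably should not be listed as an output.) The helpers below are a hypothetical sketch, not part of tfcoreml: `to_tensor_name` appends the output index and `missing_tensor_names` reports which requested names are absent from a list of graph tensor names.

```python
from typing import Iterable, List


def to_tensor_name(name: str, output_index: int = 0) -> str:
    """Turn an op name into a TensorFlow tensor name ("op_name:index").

    Names that already contain an output index are returned unchanged.
    """
    if ":" in name:
        return name
    return f"{name}:{output_index}"


def missing_tensor_names(requested: Iterable[str], graph_tensor_names: Iterable[str]) -> List[str]:
    """Return the normalized requested names that do not exist in the graph."""
    available = set(graph_tensor_names)
    normalized = [to_tensor_name(n) for n in requested]
    return [n for n in normalized if n not in available]


# Hypothetical list of graph tensor names, using the names seen in this thread.
graph_names = ["input_to_float:0", "logits/BiasAdd:0"]
print(missing_tensor_names(["input_to_float", "logits/BiasAdd"], graph_names))  # []
```

With TensorFlow 1.x, the real list of names could be built from a loaded `tf.Graph` as `[t.name for op in graph.get_operations() for t in op.outputs]`.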

What did you use to convert your model to Core ML (iOS)?

Update:

I have now converted the model successfully, but I don't know whether the model is valid.

This is the output log:

Core ML input(s):

[name: "input_to_float__0"
type {
  multiArrayType {
    shape: 3
    shape: 112
    shape: 112
    dataType: DOUBLE
  }
}
]

Core ML output(s):

[name: "logits__BiasAdd__0"
type {
  multiArrayType {
    shape: 80
    dataType: DOUBLE
  }
}
]
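The feature names in this log appear to be the TensorFlow tensor names with "/" and ":" replaced by "__" (for example, `logits/BiasAdd:0` becomes `logits__BiasAdd__0`). This mapping is inferred from the two names above rather than taken from tfcoreml's source, but it helps predict which feature names to use when calling the converted model from Swift. A minimal sketch, with a hypothetical helper name:

```python
def coreml_feature_name(tensor_name: str) -> str:
    """Predict the Core ML feature name for a TensorFlow tensor name.

    Assumption inferred from this log, not from tfcoreml's source:
    both "/" and ":" are replaced by "__".
    """
    return tensor_name.replace("/", "__").replace(":", "__")


print(coreml_feature_name("input_to_float:0"))  # input_to_float__0
print(coreml_feature_name("logits/BiasAdd:0"))  # logits__BiasAdd__0
```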

Should I get an input shape of [1, 112, 112, 3] instead of [3, 112, 112]?
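The [3, 112, 112] shape is most likely expected: Core ML's neural-network multi-array inputs use a channels-first (C, H, W) layout with the batch dimension dropped, so a TensorFlow NHWC placeholder of shape [1, 112, 112, 3] shows up as [3, 112, 112]. If you feed a MultiArray yourself, the pixel data therefore has to be rearranged. A minimal NumPy sketch of that rearrangement, assuming a single RGB image in NHWC order (the helper name is hypothetical):

```python
import numpy as np


def nhwc_to_chw(batch: np.ndarray) -> np.ndarray:
    """Convert a single-image NHWC batch (1, H, W, C) to the (C, H, W) layout."""
    if batch.ndim != 4 or batch.shape[0] != 1:
        raise ValueError(f"expected shape (1, H, W, C), got {batch.shape}")
    # Drop the batch axis, then move channels first: (H, W, C) -> (C, H, W).
    return np.ascontiguousarray(np.transpose(batch[0], (2, 0, 1)))


# A dummy 112 x 112 RGB image as float64, matching dataType: DOUBLE in the log.
image = np.random.rand(1, 112, 112, 3)
print(nhwc_to_chw(image).shape)  # (3, 112, 112)
```

The result is made contiguous so it can be copied element by element into a MultiArray in (C, H, W) order.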

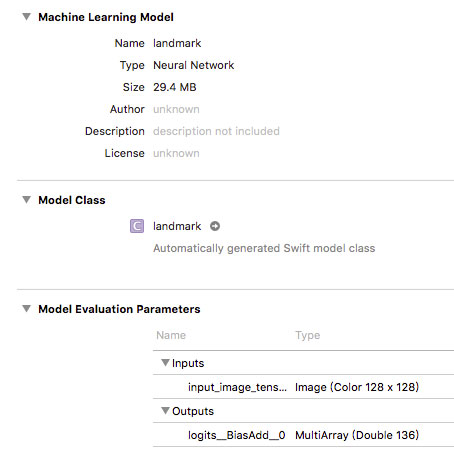

I think the model is valid. Xcode can recognize the converted model's input as an image if you specify the input data type as an image when converting.
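For reference, a minimal sketch of specifying the input as an image at conversion time, assuming the tfcoreml 1.x `tfcoreml.convert` API with its `image_input_names` argument. The file names are hypothetical, and the tensor names `input_to_float:0` and `logits/BiasAdd:0` are inferred from the Core ML names in the log (`input_to_float__0`, `logits__BiasAdd__0`); check them against your own graph.

```python
# Hypothetical file names; tensor names inferred from the Core ML log in this thread.
FROZEN_GRAPH = "frozen_inference_graph.pb"
MLMODEL = "face_marks.mlmodel"
INPUT_TENSOR = "input_to_float:0"
OUTPUT_TENSOR = "logits/BiasAdd:0"


def build_convert_kwargs(height: int = 112, width: int = 112) -> dict:
    """Keyword arguments for tfcoreml.convert that mark the input as an image."""
    return {
        "tf_model_path": FROZEN_GRAPH,
        "mlmodel_path": MLMODEL,
        "output_feature_names": [OUTPUT_TENSOR],
        # TensorFlow-side (NHWC) shape of the input, assumed to be 1 x H x W x 3.
        "input_name_shape_dict": {INPUT_TENSOR: [1, height, width, 3]},
        # Listing the input here makes Core ML expose it as an image, not a MultiArray.
        "image_input_names": [INPUT_TENSOR],
    }


if __name__ == "__main__":
    try:
        import tfcoreml  # the tf-coreml package (TensorFlow 1.x era)
    except ImportError:
        print("tfcoreml is not installed; arguments would be:", build_convert_kwargs())
    else:
        tfcoreml.convert(**build_convert_kwargs())
```

If the network expects normalized pixel values, `tfcoreml.convert` also accepts preprocessing arguments such as `image_scale`, `red_bias`, `green_bias`, `blue_bias` and `is_bgr`; the correct values depend on how the model was trained.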

Note that the Xcode project was published a year ago, and many things may have changed since then. Please refer to the latest Apple developer documentation for detailed instructions.

OK, thanks for your answer.

I tested your project with the model you provided and it works fine, but when I integrate my own model, I get an exception.

I understand the exception, but I don't really know how to solve it.

The exception says that the input should be of type Image, not MultiArray.

Do you have any idea how I can set or change the type of the input tensor before conversion?

I have solved my problem.

With my model integrated, the "face marks" project works fine in real time at a good frame rate (fps).