Yinyu Nie, Angela Dai, Xiaoguang Han, Matthias Nießner

in CVPR 2023

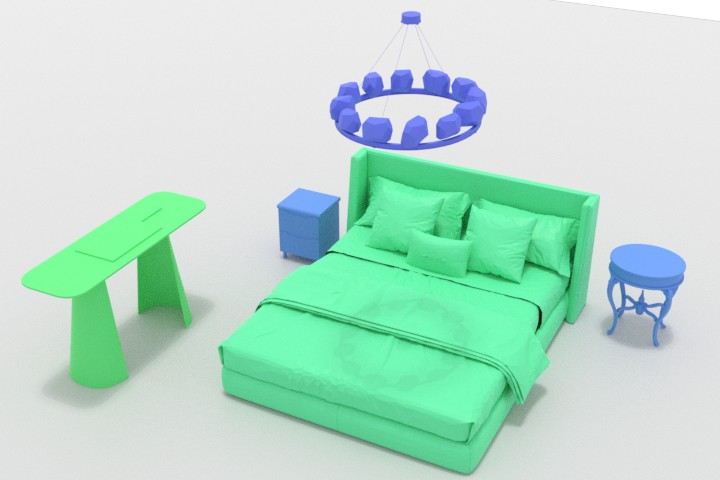

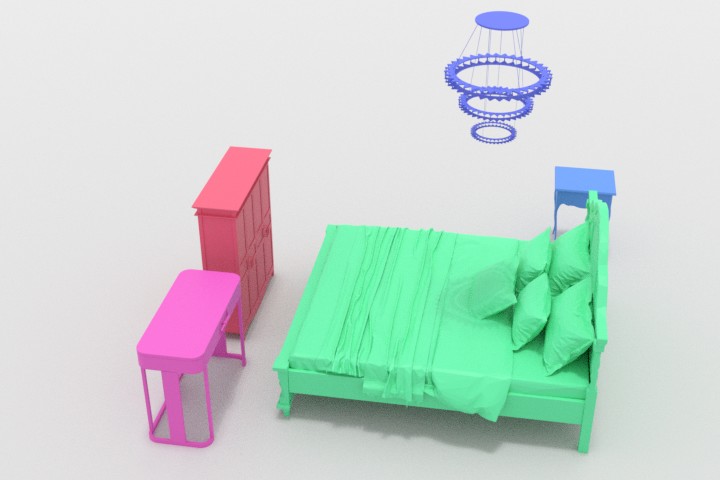

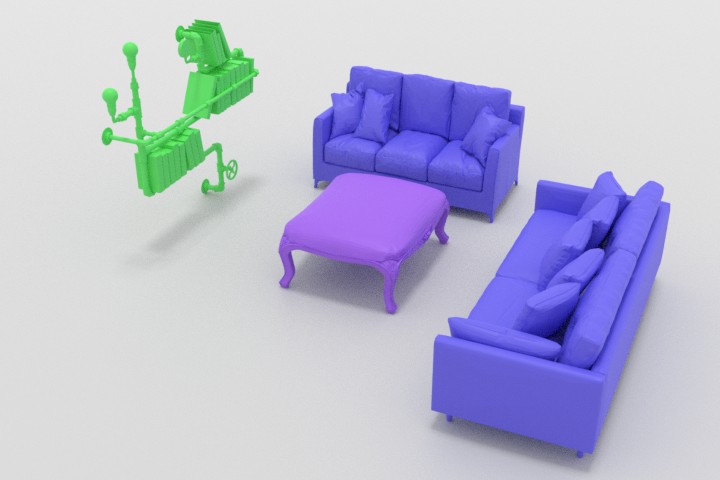

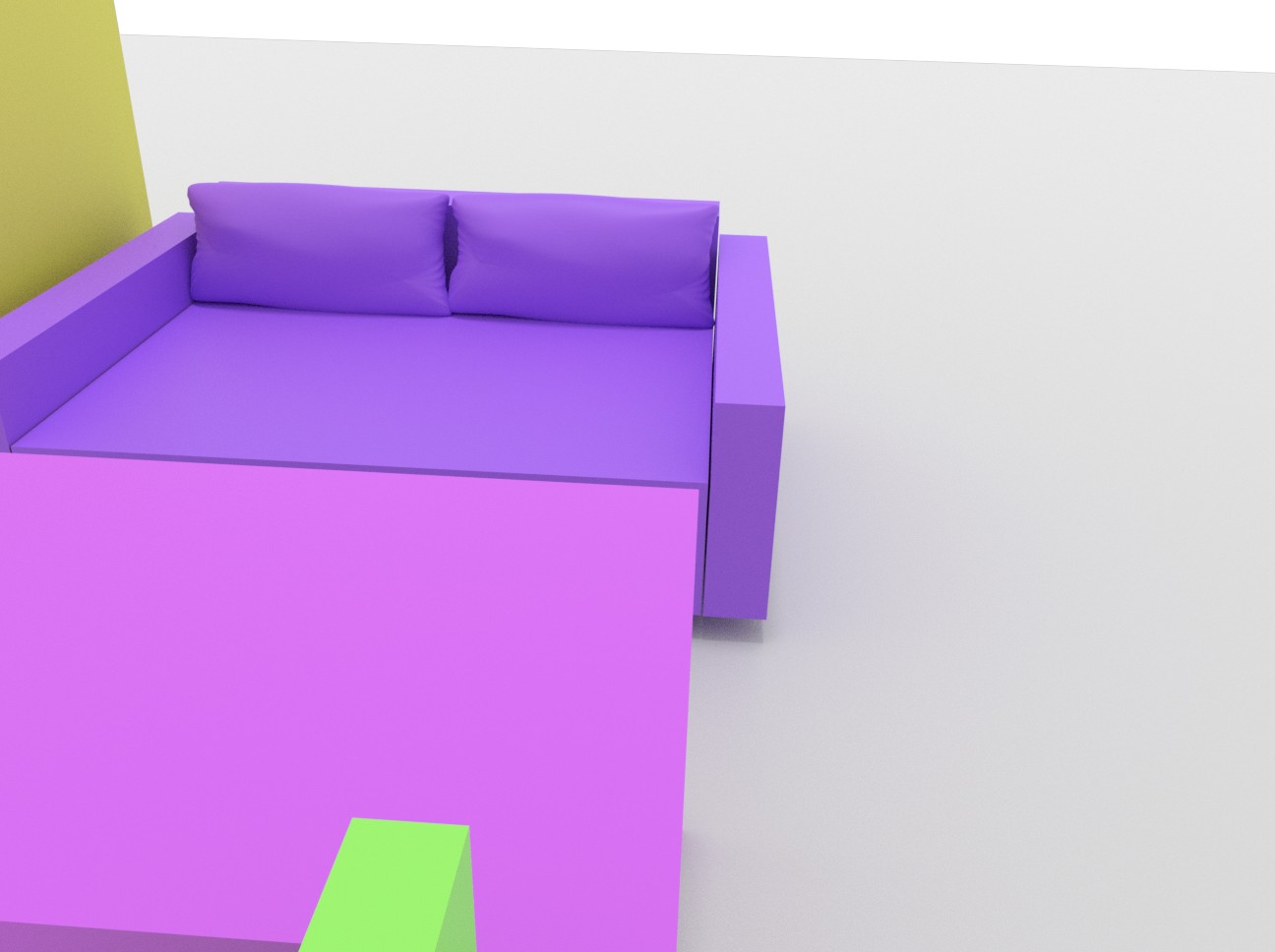

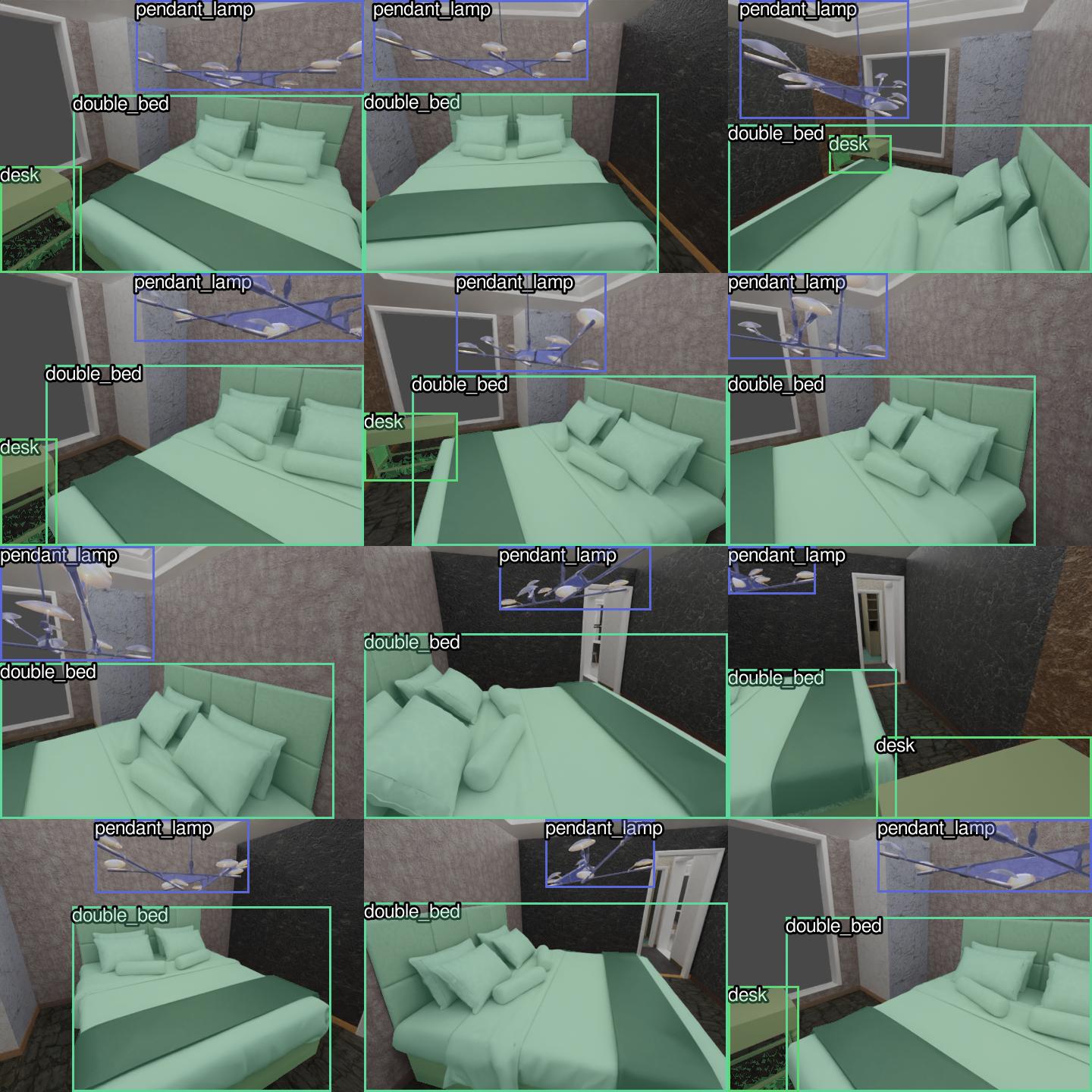

3D Scene Generation


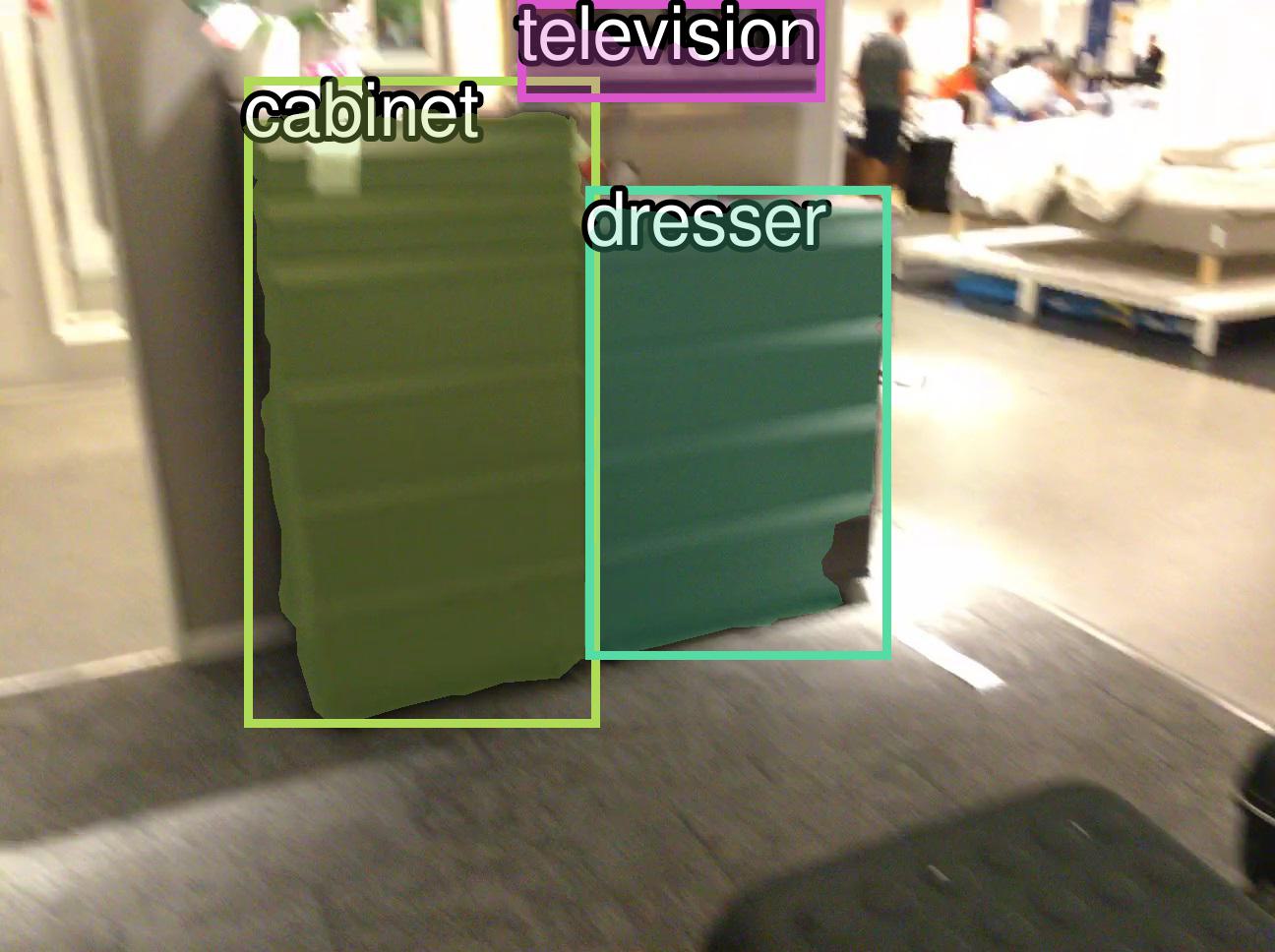

Single View Reconstruction

| Input | Pred | Input | Pred |
|---|---|---|---|

Our codebase is developed under Ubuntu 20.04 with PyTorch 1.12.1.

- We recommend using conda to set up the environment:

      cd ScenePriors
      conda env create -f environment.yml
      conda activate sceneprior

- Install Fast Transformers:

      cd external/fast_transformers
      python setup.py build_ext --inplace
      cd ../..

- Please follow link to install the prerequisite libraries for PyTorch3D. Then install PyTorch3D from our local clone:

      cd external/pytorch3d
      pip install -e .
      cd ../..

  Note: after installing all the prerequisite libraries in link, please do not install the prebuilt binaries for PyTorch3D.
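After the installation steps above, a quick import check can confirm that the environment is usable. The following is a minimal sketch and not part of the repository: the helper name `find_missing` is hypothetical, and it assumes that PyTorch and PyTorch3D are importable under the top-level names `torch` and `pytorch3d`.

```python
import importlib.util


def find_missing(modules):
    """Return the names in `modules` that cannot be found on the import path."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Top-level import names assumed for the packages installed above.
    missing = find_missing(["torch", "pytorch3d"])
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```

Run it inside the activated `sceneprior` environment; it only looks the modules up and does not import them.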

- Apply for and download the 3D-Front dataset, then link it to the local directories as follows:

      datasets/3D-Front/3D-FRONT
      datasets/3D-Front/3D-FRONT-texture
      datasets/3D-Front/3D-FUTURE-model

- Render the 3D-Front scenes following my rendering pipeline and link the rendering results (in the `renderings` folder) to `datasets/3D-Front/3D-FRONT_renderings_improved_mat`.

  Note: you can comment out `bproc.renderer.enable_depth_output(activate_antialiasing=False)` in `render_dataset_improved_mat.py` since we do not need depth information.

- Preprocess the 3D-Front data:

      python utils/threed_front/1_process_viewdata.py --room_type ROOM_TYPE --n_processes NUM_THREADS
      python utils/threed_front/2_get_stats.py --room_type ROOM_TYPE

  - The processed data for training are saved in `datasets/3D-Front/3D-FRONT_samples`.
  - We also parse and extract the 3D-Front data for visualization into `datasets/3D-Front/3D-FRONT_scenes`.
  - `ROOM_TYPE` can be `'bed'` (bedroom) or `'living'` (living room).
  - You can set `NUM_THREADS` to your number of CPU cores for parallel processing.

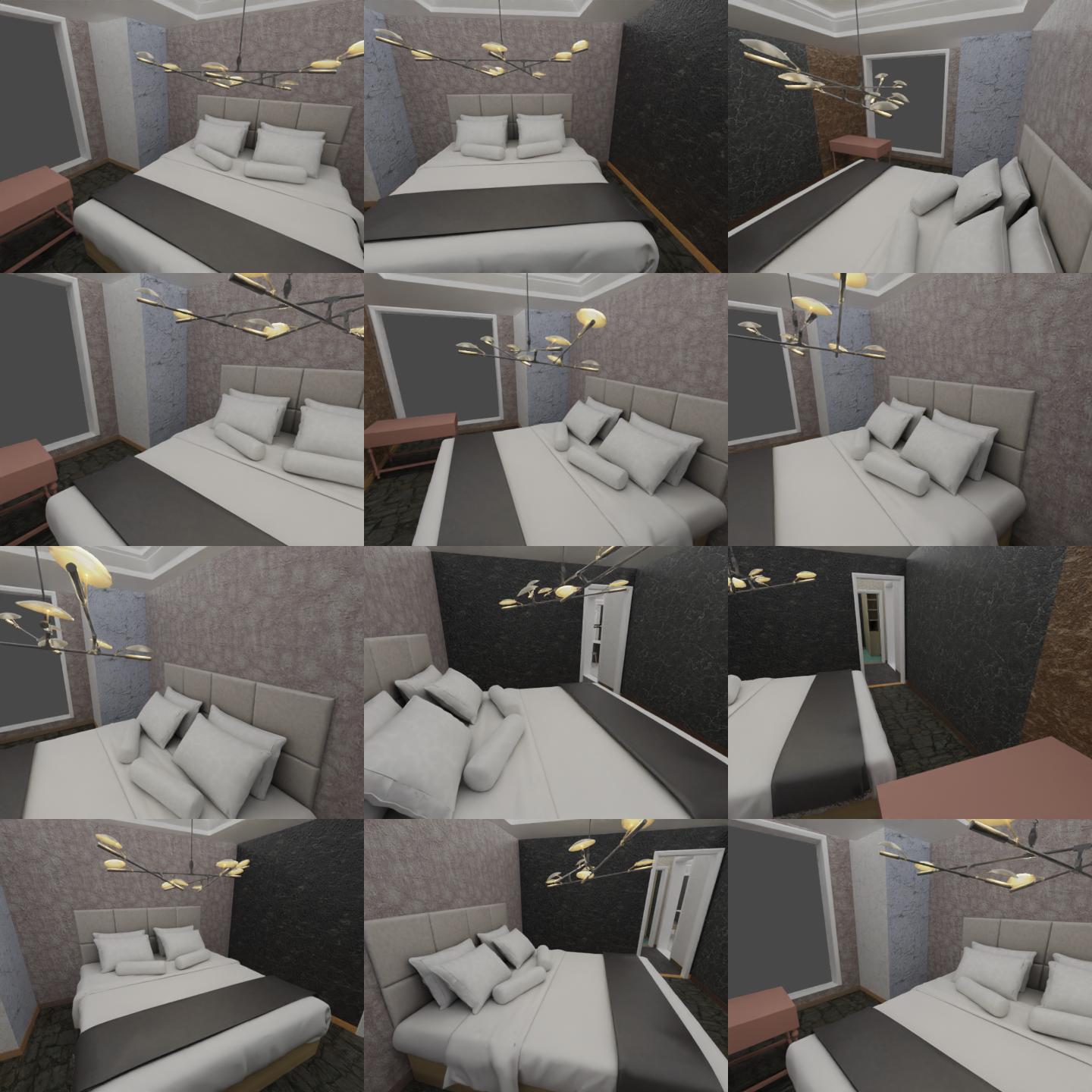

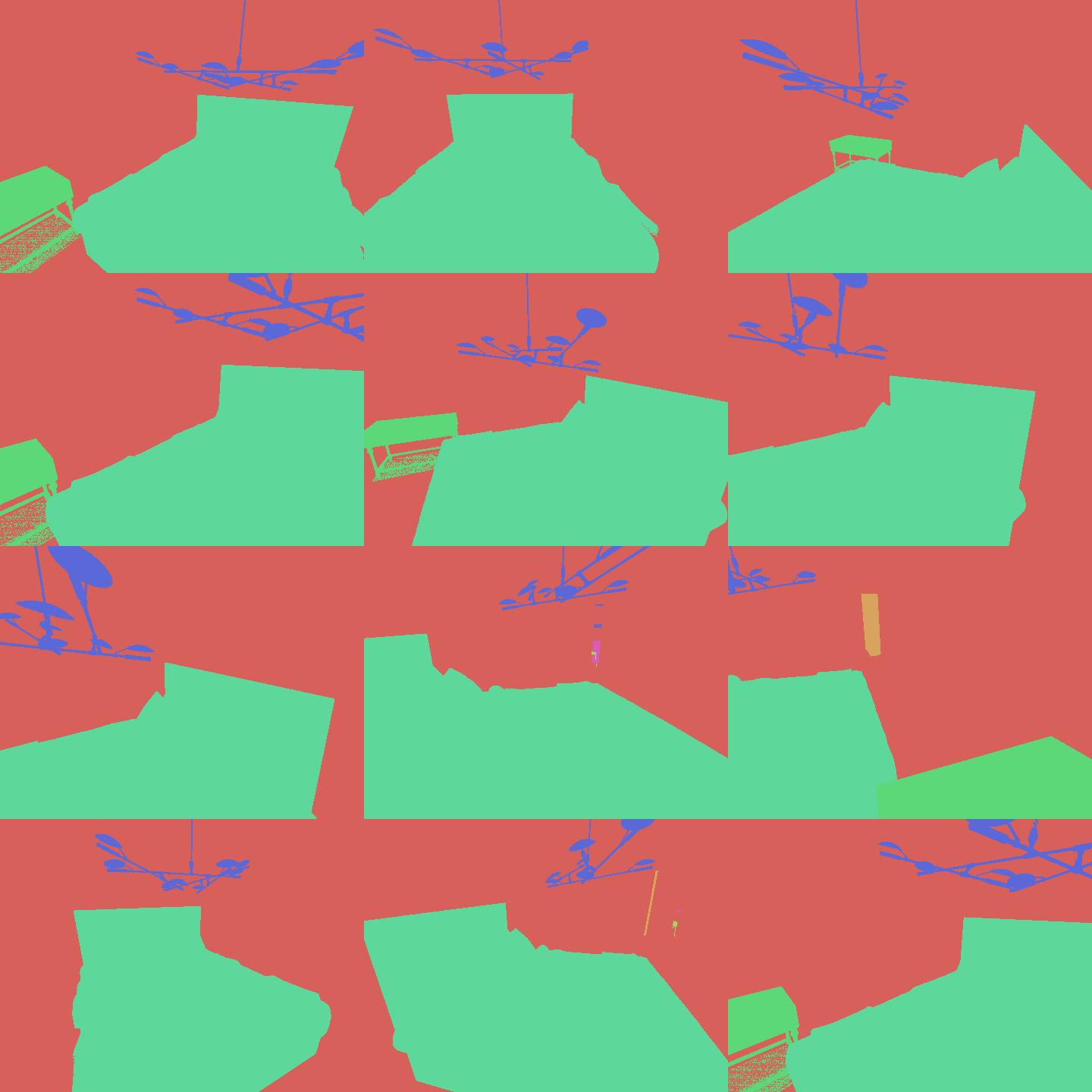

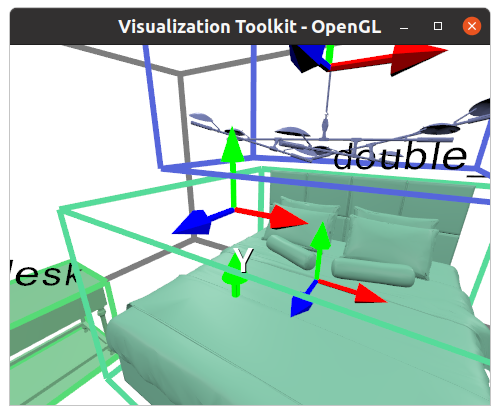

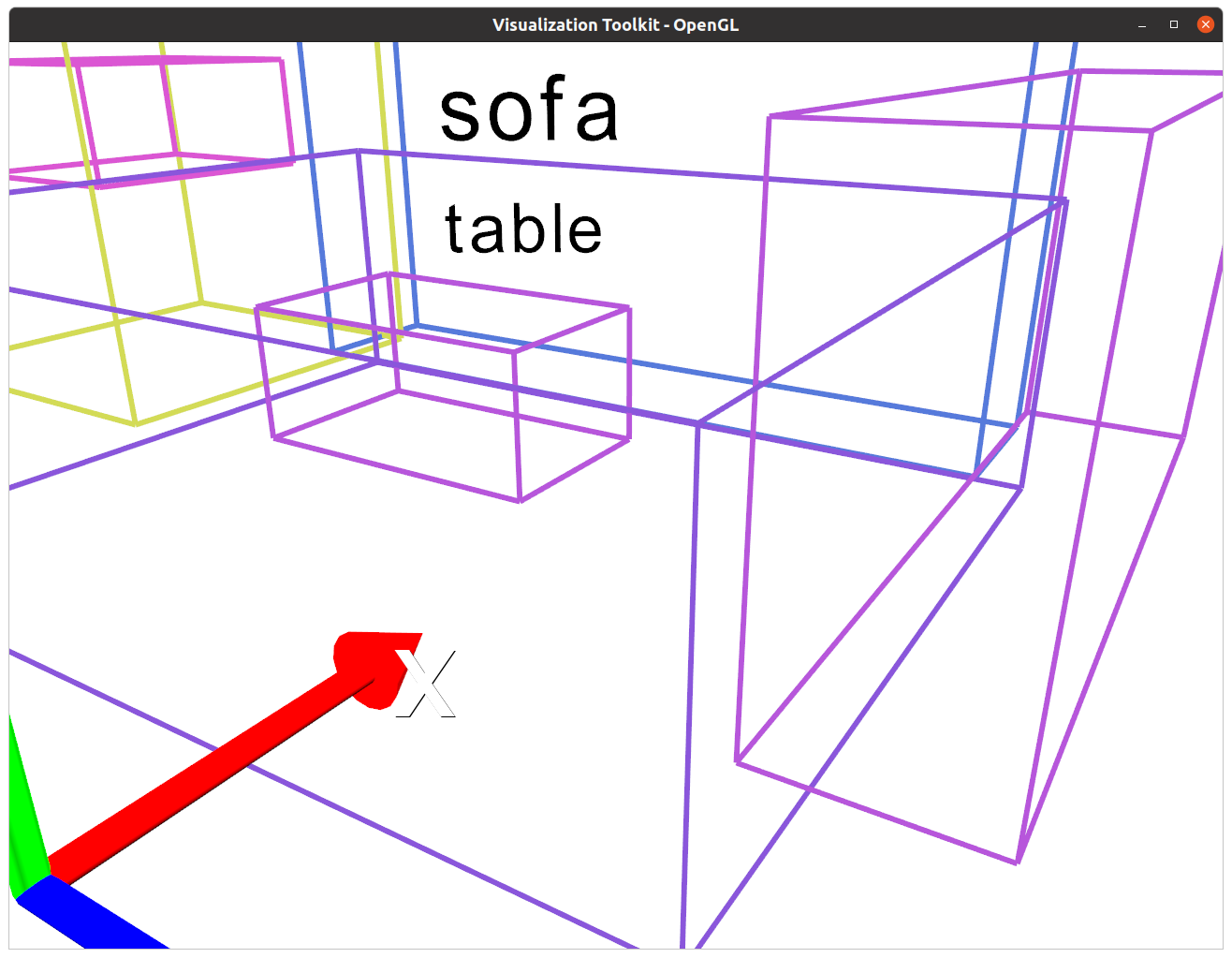

- Visualize the processed data for verification (optional):

      python utils/threed_front/vis/vis_gt_sample.py --scene_json SCENE_JSON_ID --room_id ROOM_ID --n_samples N_VIEWS

  - `SCENE_JSON_ID` is the ID of a scene, e.g., `6a0e73bc-d0c4-4a38-bfb6-e083ce05ebe9`.
  - `ROOM_ID` is the room ID in this scene, e.g., `MasterBedroom-2679`.
  - `N_VIEWS` is the number of views to visualize, e.g., `12`.

If everything goes smoothly, five visualization windows will pop up as follows.

| RGB | Semantics | Instances | 3D Box Projections | CAD Models (view #1) |
|---|---|---|---|---|

Note: X server is required for visualization.
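The two 3D-Front preprocessing commands described above take a room type and a process count, and they can be assembled programmatically when both room types need processing. This is a minimal sketch under stated assumptions: `build_preprocess_commands` is a hypothetical helper, not part of the codebase, and using `os.cpu_count()` as the default for `NUM_THREADS` simply follows the suggestion to match the number of CPU cores.

```python
import os


VALID_ROOM_TYPES = ("bed", "living")


def build_preprocess_commands(room_type, n_processes=None):
    """Build the argv lists for the two 3D-Front preprocessing scripts."""
    if room_type not in VALID_ROOM_TYPES:
        raise ValueError(f"room_type must be one of {VALID_ROOM_TYPES}, got {room_type!r}")
    if n_processes is None:
        # Default to the number of CPU cores; fall back to 1 if it is unknown.
        n_processes = os.cpu_count() or 1
    return [
        ["python", "utils/threed_front/1_process_viewdata.py",
         "--room_type", room_type, "--n_processes", str(n_processes)],
        ["python", "utils/threed_front/2_get_stats.py", "--room_type", room_type],
    ]


if __name__ == "__main__":
    for room_type in VALID_ROOM_TYPES:
        for command in build_preprocess_commands(room_type):
            # Print only; pass each list to subprocess.run(command, check=True) to execute.
            print(" ".join(command))
```

The sketch prints the commands instead of running them, so the output can be reviewed before anything is launched.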

- Apply for and download ScanNet into `datasets/ScanNet/scans`. Since we need 2D data, the `*.sens` file should also be downloaded for each scene.

- Extract the `*.sens` files to obtain the RGB/semantics/instance/camera pose frame data:

      python utils/scannet/1_unzip_sens.py

  The folder structure of each scene then looks like:

      ./scene*
      |--color (folder)
      |--instance-filt (folder)
      |--intrinsic (folder)
      |--label-filt (folder)
      |--pose (folder)
      |--scene*.aggregation.json
      |--scene*.sens
      |--scene*.txt
      |--scene*_2d-instance.zip
      ...
      |--scene*_vh_clean_2.ply

- Process the ScanNet data:

      python utils/scannet/2_process_viewdata.py

  The processed data will be saved in `datasets/ScanNet/ScanNet_samples`.

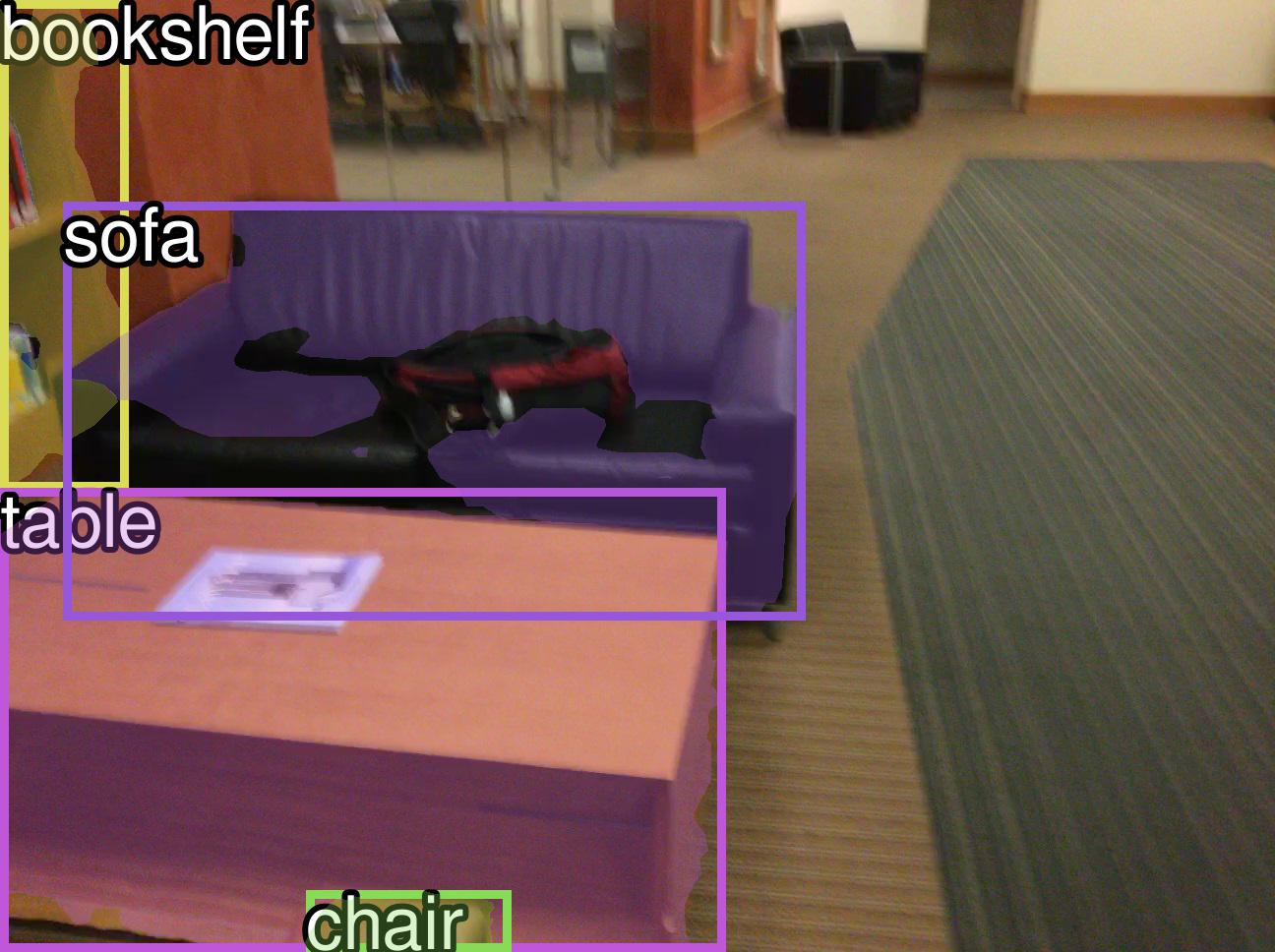

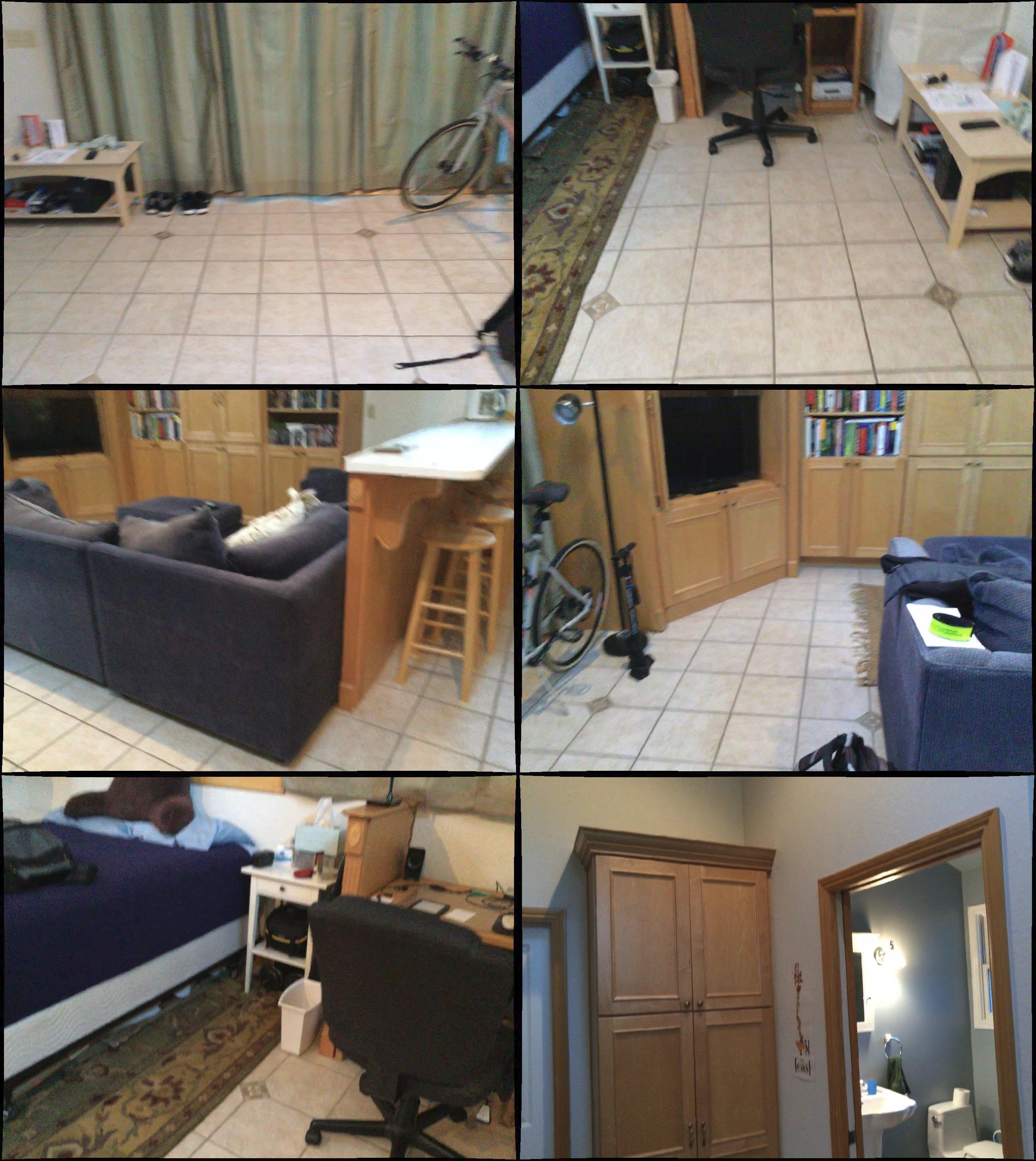

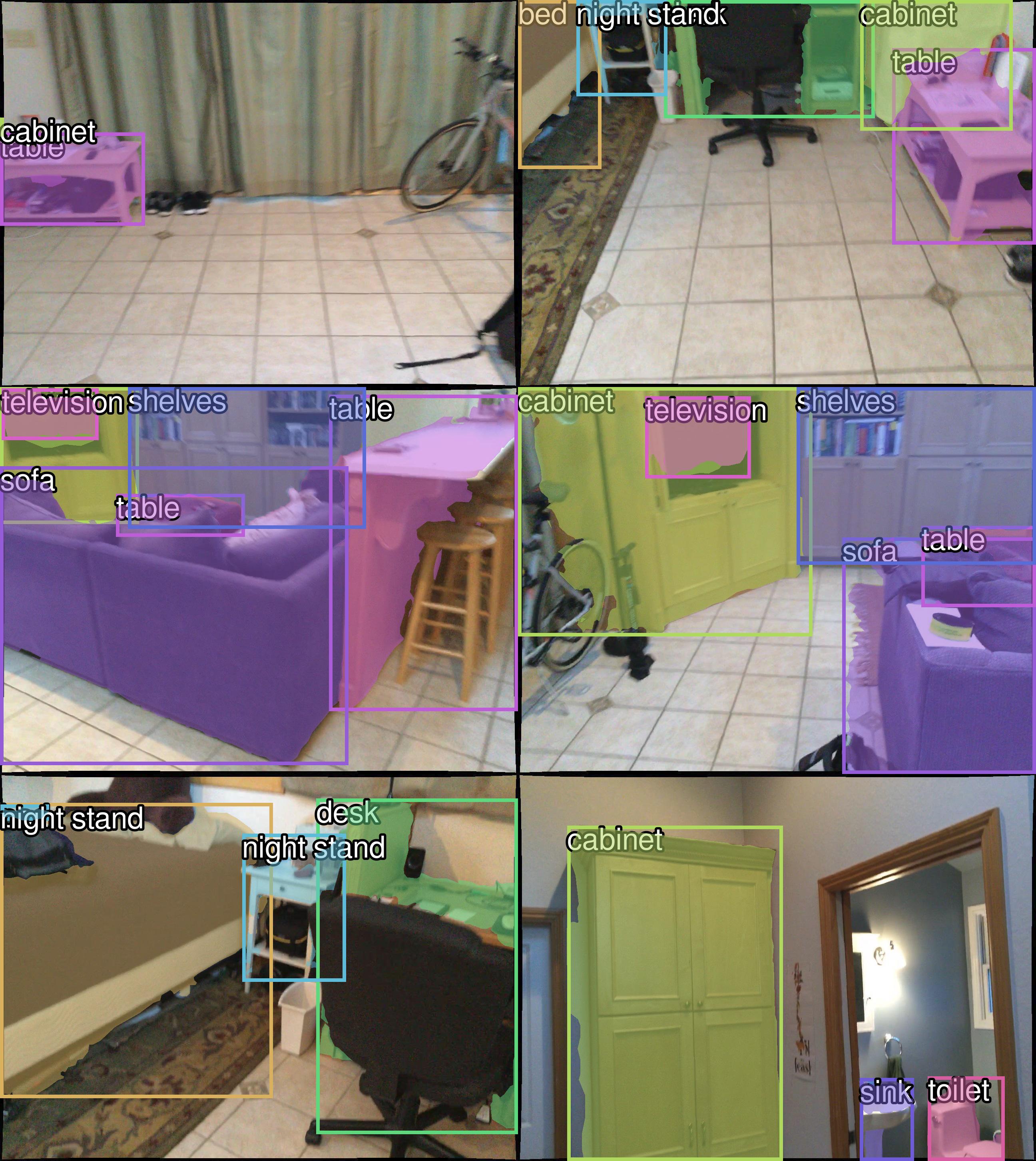

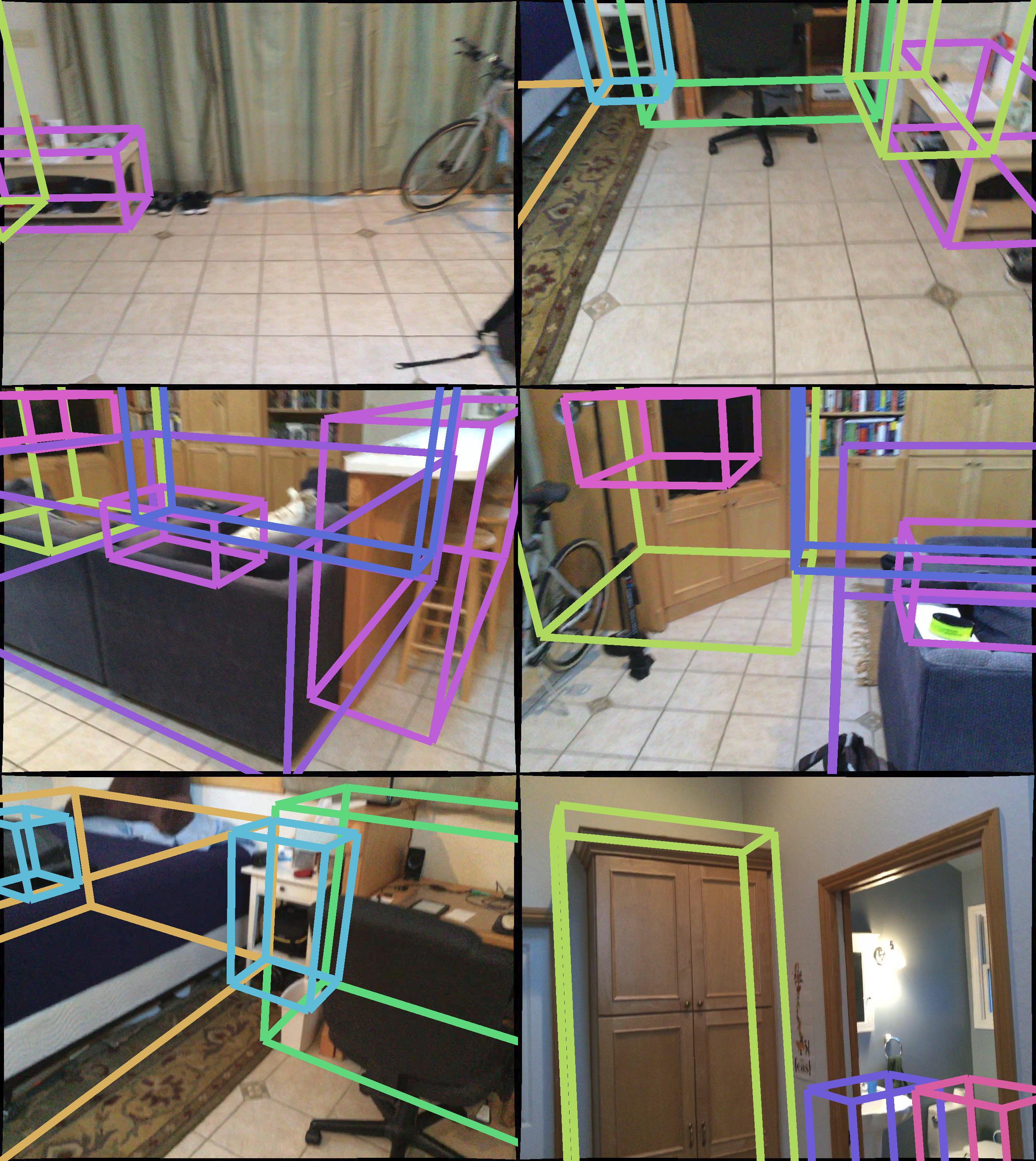

- Visualize the processed data (optional):

      python utils/scannet/vis/vis_gt.py --scene_id SCENE_ID --n_samples N_SAMPLES

  - `SCENE_ID` is the scene ID in ScanNet, e.g., `scene0000_00`.
  - `N_SAMPLES` is the number of views to visualize, e.g., `6`.

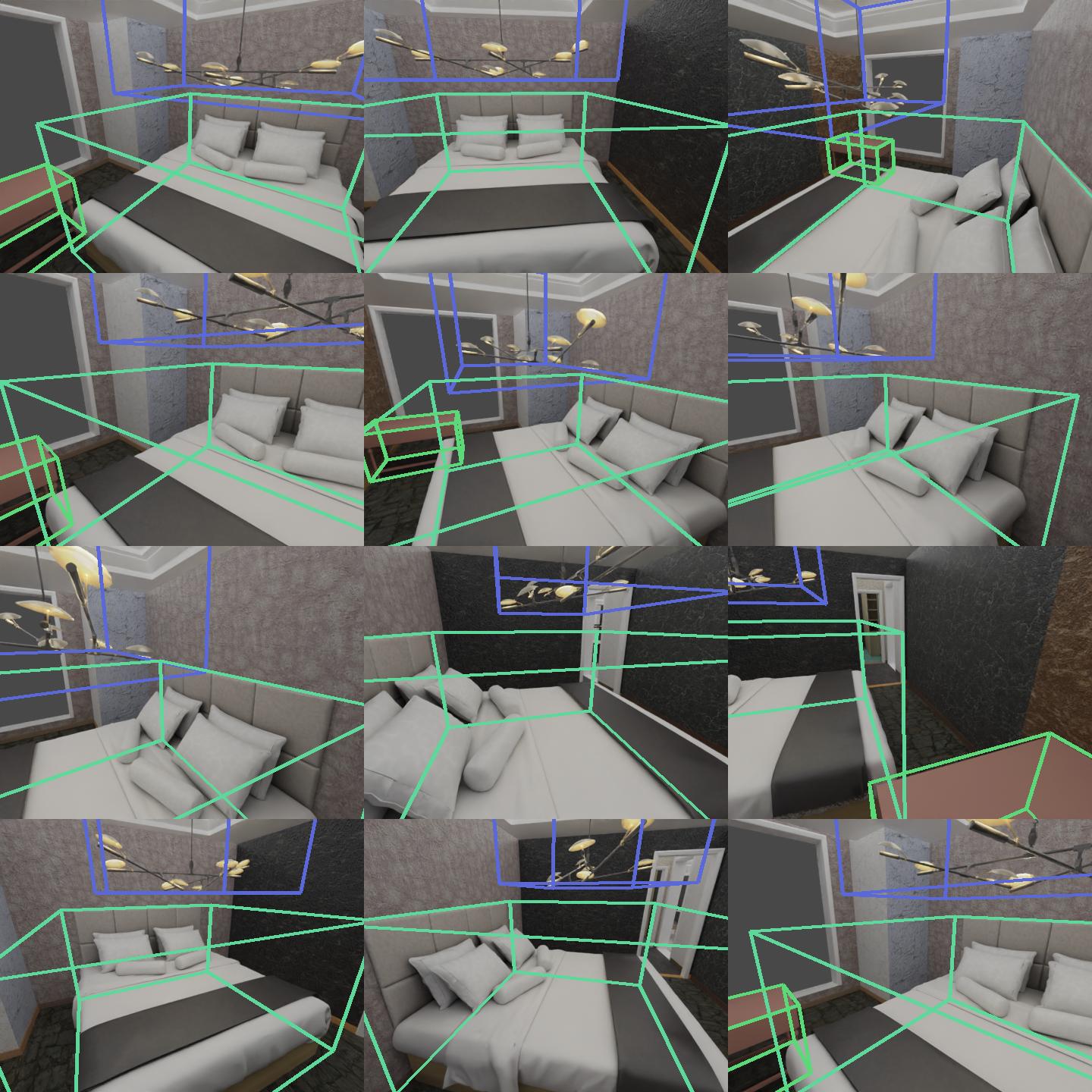

If everything goes smoothly, five visualization windows will pop up as follows.

| RGB | Semantics | Instances | 3D Box Projections | 3D Boxes (view #3) |
|---|---|---|---|---|

Note: X server is required for visualization.
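Before processing ScanNet, it can be useful to confirm that every scene was extracted into the folder structure listed above. The following is a minimal sketch and not part of the repository: `find_incomplete_scenes` is a hypothetical helper, and it checks only the five sub-folders named in that listing.

```python
from pathlib import Path

# Sub-folders listed in the per-scene folder structure above.
EXPECTED_SUBDIRS = ("color", "instance-filt", "intrinsic", "label-filt", "pose")


def find_incomplete_scenes(scans_root):
    """Map each scene folder name to the expected sub-folders it is missing."""
    incomplete = {}
    for scene_dir in sorted(Path(scans_root).glob("scene*")):
        if not scene_dir.is_dir():
            continue
        missing = [name for name in EXPECTED_SUBDIRS if not (scene_dir / name).is_dir()]
        if missing:
            incomplete[scene_dir.name] = missing
    return incomplete


if __name__ == "__main__":
    for scene, missing in find_incomplete_scenes("datasets/ScanNet/scans").items():
        print(f"{scene}: missing {', '.join(missing)}")
```

Scenes that are reported here most likely need `utils/scannet/1_unzip_sens.py` to be run again.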

Note: we use SLURM to manage multi-GPU training. For the backend settings, please check `slurm_jobs`.

Here we use bedroom data as an example. Training on living rooms is the same.

- Start layout pretraining:

      python main.py \
          start_deform=False \
          resume=False \
          finetune=False \
          weight=[] \
          distributed.num_gpus=4 \
          data.dataset=3D-Front \
          data.split_type=bed \
          data.n_views=20 \
          data.aug=False \
          device.num_workers=32 \
          train.batch_size=128 \
          train.epochs=800 \
          train.freeze=[] \
          scheduler.latent_input.milestones=[400] \
          scheduler.generator.milestones=[400] \
          log.if_wandb=True \
          exp_name=pretrain_3dfront_bedroom

  The network weights will be saved in `outputs/3D-Front/train/YEAR-MONTH-DAY/HOUR-MINUTE-SECOND/model_best.pth`.

- Shape training: we start shape training after the layout training has converged. Please replace the `weight` keyword below with the pretrained weight path.

      python main.py \
          start_deform=True \
          resume=False \
          finetune=True \
          weight=['outputs/3D-Front/train/YEAR-MONTH-DAY/HOUR-MINUTE-SECOND/model_best.pth'] \
          distributed.num_gpus=4 \
          data.dataset=3D-Front \
          data.n_views=20 \
          data.aug=False \
          data.downsample_ratio=4 \
          device.num_workers=16 \
          train.batch_size=16 \
          train.epochs=500 \
          train.freeze=[] \
          scheduler.latent_input.milestones=[300] \
          scheduler.generator.milestones=[300] \
          log.if_wandb=True \
          exp_name=train_3dfront_bedroom

  The refined network weights will again be saved in `outputs/3D-Front/train/YEAR-MONTH-DAY/HOUR-MINUTE-SECOND/model_best.pth`.
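The training commands above differ only in their `key=value` settings, so it can be convenient to keep the settings in one place and generate the command line from them. This is a minimal sketch: `format_overrides` is a hypothetical helper, not part of the codebase, and the dictionary holds only a subset of the layout pretraining settings for brevity.

```python
def format_overrides(overrides):
    """Render a dict of settings as `key=value` command-line arguments."""
    return [f"{key}={value}" for key, value in overrides.items()]


if __name__ == "__main__":
    # A subset of the layout pretraining settings shown above.
    pretrain = {
        "start_deform": False,
        "finetune": False,
        "weight": [],
        "data.dataset": "3D-Front",
        "data.split_type": "bed",
        "train.batch_size": 128,
        "scheduler.latent_input.milestones": [400],
        "exp_name": "pretrain_3dfront_bedroom",
    }
    # Print only; the command is not executed.
    print(" \\\n    ".join(["python main.py"] + format_overrides(pretrain)))
```

Python's default string forms for booleans and short lists (`False`, `[]`, `[400]`) happen to match the notation used in the commands; lists with several entries would contain spaces and need quoting in the shell.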

- Start layout pretraining on ScanNet:

      python main.py \
          start_deform=False \
          resume=False \
          finetune=False \
          weight=[] \
          distributed.num_gpus=4 \
          data.dataset=ScanNet \
          data.split_type=all \
          data.n_views=40 \
          data.aug=True \
          device.num_workers=32 \
          train.batch_size=64 \
          train.epochs=500 \
          train.freeze=[] \
          scheduler.latent_input.milestones=[500] \
          scheduler.generator.milestones=[500] \
          log.if_wandb=True \
          exp_name=pretrain_scannet

  The network weights will be saved in `outputs/ScanNet/train/YEAR-MONTH-DAY/HOUR-MINUTE-SECOND/model_best.pth`.

- Shape training: we start shape training after the layout training has converged. Please replace the `weight` keyword below with the pretrained weight path.

      python main.py \
          start_deform=True \
          resume=False \
          finetune=True \
          weight=['outputs/ScanNet/train/YEAR-MONTH-DAY/HOUR-MINUTE-SECOND/model_best.pth'] \
          distributed.num_gpus=4 \
          data.dataset=ScanNet \
          data.split_type=all \
          data.n_views=40 \
          data.downsample_ratio=4 \
          data.aug=True \
          device.num_workers=8 \
          train.batch_size=8 \
          train.epochs=500 \
          train.freeze=[] \
          scheduler.latent_input.milestones=[300] \
          scheduler.generator.milestones=[300] \
          log.if_wandb=True \
          exp_name=train_scannet

  The refined network weights will again be saved in `outputs/ScanNet/train/YEAR-MONTH-DAY/HOUR-MINUTE-SECOND/model_best.pth`.
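Shape training needs the path of a `model_best.pth` written by an earlier run, and that path contains the date and time of the run. The sketch below looks up the most recent one. It is not part of the repository: `latest_checkpoint` is a hypothetical helper, and it assumes that the run folders use zero-padded names such as `2022-09-06/02-37-24`, so that sorting them as strings is chronological.

```python
from pathlib import Path


def latest_checkpoint(train_root, filename="model_best.pth"):
    """Return the newest `filename` under train_root/DATE/TIME/, or None."""
    candidates = Path(train_root).glob(f"*/*/{filename}")
    # Zero-padded DATE/TIME folder names sort chronologically as plain strings.
    ordered = sorted(candidates, key=lambda p: (p.parent.parent.name, p.parent.name))
    return ordered[-1] if ordered else None


if __name__ == "__main__":
    checkpoint = latest_checkpoint("outputs/ScanNet/train")
    if checkpoint is None:
        print("No checkpoint found yet.")
    else:
        print(f"weight=['{checkpoint.as_posix()}']")
```

Passing `outputs/3D-Front/train` instead gives the corresponding path for the 3D-Front runs.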

Please replace the keyword weight below with your trained weight path.

- Scene Generation (with 3D-Front)

      python main.py \
          mode=generation \
          start_deform=True \
          data.dataset=3D-Front \
          finetune=True \
          weight=outputs/ScanNet/train/YEAR-MONTH-DAY/HOUR-MINUTE-SECOND/model_best.pth \
          generation.room_type=bed \
          data.split_dir=splits \
          data.split_type=bed \
          generation.phase=generation

  The generated scenes will be saved in `outputs/3D-Front/generation/YEAR-MONTH-DAY/HOUR-MINUTE-SECOND`.

- Single view reconstruction (with ScanNet). Since this process involves test-time optimization, it is very slow. Here we test in parallel by dividing the whole test set into `batch_num` batches. You should run this script multiple times to finish the whole test, setting an individual `batch_id` for each run (`batch_id=0,1,...,batch_num-1`). If you do not want to run in parallel, you can keep the default settings below.

      python main.py \
          mode=demo \
          start_deform=True \
          finetune=True \
          data.n_views=1 \
          data.dataset=ScanNet \
          data.split_type=all \
          weight=outputs/ScanNet/train/YEAR-MONTH-DAY/HOUR-MINUTE-SECOND/model_best.pth \
          optimizer.method=RMSprop \
          optimizer.lr=0.01 \
          scheduler.latent_input.milestones=[1200] \
          scheduler.latent_input.gamma=0.1 \
          demo.epochs=2000 \
          demo.batch_id=0 \
          demo.batch_num=1 \
          log.print_step=100 \
          log.if_wandb=False

  The results will be saved in `outputs/ScanNet/demo/output`.

- Similarly, you can do single view reconstruction with 3D-Front as well.

      python main.py \
          mode=demo \
          start_deform=True \
          finetune=True \
          data.n_views=1 \
          data.dataset=3D-Front \
          data.split_type=bed \
          weight=outputs/3D-Front/train/2022-09-06/02-37-24/model_best.pth \
          optimizer.method=RMSprop \
          optimizer.lr=0.01 \
          scheduler.latent_input.milestones=[1200] \
          scheduler.latent_input.gamma=0.1 \
          demo.epochs=2000 \
          demo.batch_id=0 \
          demo.batch_num=1 \
          log.print_step=100 \
          log.if_wandb=False

  The results will be saved in `outputs/3D-Front/demo/output`.
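The parallel testing described above runs the same script once per `batch_id`, each run covering its own share of the test set. How the codebase actually partitions the test set is not shown here, so the contiguous, near-equal split below is an assumption made purely for illustration; `split_into_batches` and the sample names are hypothetical.

```python
def split_into_batches(items, batch_num):
    """Split `items` into `batch_num` contiguous chunks of near-equal size."""
    if batch_num < 1:
        raise ValueError("batch_num must be at least 1")
    items = list(items)
    base, extra = divmod(len(items), batch_num)
    batches, start = [], 0
    for batch_id in range(batch_num):
        # The first `extra` batches take one additional item.
        size = base + (1 if batch_id < extra else 0)
        batches.append(items[start:start + size])
        start += size
    return batches


if __name__ == "__main__":
    samples = [f"sample_{i}" for i in range(10)]  # hypothetical sample names
    batch_num = 3
    for batch_id, batch in enumerate(split_into_batches(samples, batch_num)):
        print(f"demo.batch_id={batch_id} demo.batch_num={batch_num} -> {len(batch)} samples")
```

With `batch_num=1`, the single batch is the whole test set, which corresponds to the default non-parallel setting.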

Note: you may need X-server to showcase the visualization windows from VTK.
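The reconstruction commands above set `optimizer.lr=0.01`, `scheduler.latent_input.milestones=[1200]` and `scheduler.latent_input.gamma=0.1` over `demo.epochs=2000`. Assuming the scheduler follows the usual multi-step rule (as in PyTorch's `MultiStepLR`, which is an assumption and not confirmed by this README), the learning rate is multiplied by `gamma` each time a milestone is reached. A minimal sketch of that rule, with the hypothetical helper `multistep_lr`:

```python
from bisect import bisect_right


def multistep_lr(base_lr, milestones, gamma, epoch):
    """Learning rate at `epoch` for a step schedule with decay factor `gamma`."""
    # Count the milestones that have been reached at this epoch.
    n_decays = bisect_right(sorted(milestones), epoch)
    return base_lr * gamma ** n_decays


if __name__ == "__main__":
    for epoch in (0, 1199, 1200, 1999):
        print(epoch, multistep_lr(0.01, [1200], 0.1, epoch))
```

Under this assumption, the test-time optimization would use the initial rate for the first 1200 epochs and a tenth of it for the remaining 800.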

- Scene Generation (with 3D-Front).

      python utils/threed_front/vis/render_pred.py --pred_file outputs/3D-Front/generation/YEAR-MONTH-DAY/HOUR-MINUTE-SECOND/vis/bed/sample_X_X.npz [--use_retrieval]

- Single-view Reconstruction (with ScanNet)

      python utils/scannet/vis/vis_prediction_scannet.py --dump_dir demo/ScanNet/output --sample_name all_sceneXXXX_XX_XXXX

- Single-view Reconstruction (with 3D-Front)

      python utils/threed_front/vis/vis_svr.py --dump_dir demo/3D-Front/output --sample_name [FILENAME IN dump_dir]
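The generated scenes passed to `--pred_file` above are `.npz` files. An `.npz` file is a zip archive holding one `.npy` member per array, so the stored array names can be listed with the standard library alone, without loading any data. This is a minimal sketch; `list_npz_arrays` is a hypothetical helper, and which arrays the prediction files contain is not stated in this README.

```python
import zipfile


def list_npz_arrays(npz_path):
    """Return the array names stored in an .npz file without loading the data."""
    with zipfile.ZipFile(npz_path) as archive:
        # Each array is stored as a member named "<array name>.npy".
        return [name[:-len(".npy")] for name in archive.namelist() if name.endswith(".npy")]


if __name__ == "__main__":
    import sys

    for path in sys.argv[1:]:
        print(path, list_npz_arrays(path))
```

Pass one or more `.npz` paths as command-line arguments to print the array names in each file.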

If you find our work helpful, please cite

    @InProceedings{Nie_2023_CVPR,
        author    = {Nie, Yinyu and Dai, Angela and Han, Xiaoguang and Nie{\ss}ner, Matthias},
        title     = {Learning 3D Scene Priors With 2D Supervision},
        booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
        month     = {June},
        year      = {2023},
        pages     = {792-802}
    }