Recommendation

- Our GAN-based work on facial attribute editing: https://github.com/LynnHo/AttGAN-Tensorflow.

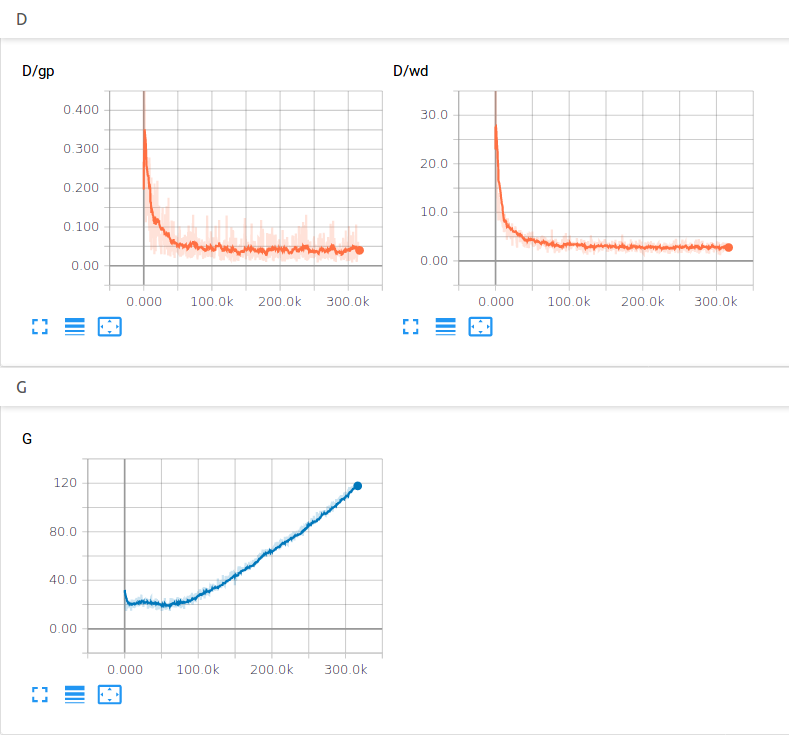

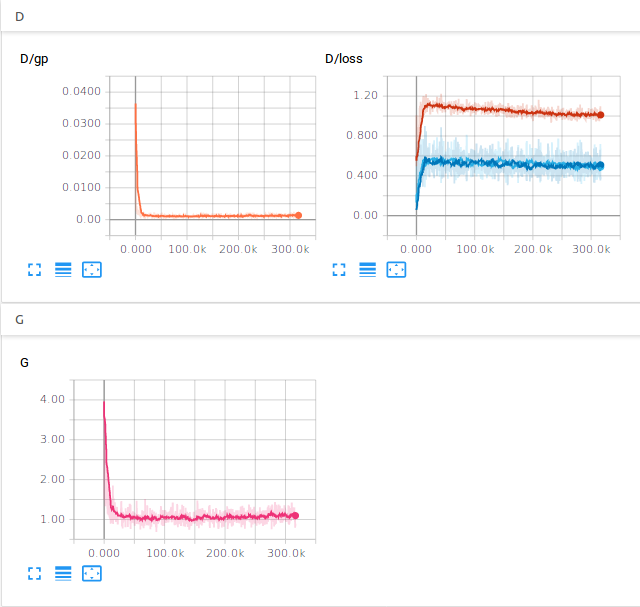

PyTorch implementation of WGAN-GP and DRAGAN, both of which add a gradient penalty to the discriminator loss to stabilize training. DCGAN is used as the network architecture in all experiments.

WGAN-GP: Improved Training of Wasserstein GANs

DRAGAN: On Convergence and Stability of GANs
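Both methods add a penalty of the form lambda * E[(||grad D(x_hat)||_2 - 1)^2] to the critic loss; they differ mainly in where x_hat is sampled. WGAN-GP samples on straight lines between real and generated images, while DRAGAN samples in a neighbourhood of the real data only. The sketch below is a minimal illustration, not this repository's code: it targets a recent PyTorch release on Python 3 rather than the pinned PyTorch 0.2, and the function names, lambda_gp = 10, and the DRAGAN perturbation (uniform noise scaled by half the batch standard deviation, borrowed from widely circulated DRAGAN reference code) are assumptions.

```python
import torch


def _penalty(critic, x_hat, lambda_gp):
    # Gradient of the critic output w.r.t. its input; create_graph=True keeps the
    # penalty differentiable w.r.t. the critic's weights.
    out = critic(x_hat)
    grad, = torch.autograd.grad(outputs=out, inputs=x_hat,
                                grad_outputs=torch.ones_like(out),
                                create_graph=True)
    grad_norm = grad.reshape(grad.size(0), -1).norm(2, dim=1)
    return lambda_gp * ((grad_norm - 1) ** 2).mean()


def wgan_gp_penalty(critic, real, fake, lambda_gp=10.0):
    # One mixing coefficient per sample, broadcast over the remaining dims.
    alpha = torch.rand([real.size(0)] + [1] * (real.dim() - 1), device=real.device)
    x_hat = (alpha * real + (1 - alpha) * fake).detach().requires_grad_(True)
    return _penalty(critic, x_hat, lambda_gp)


def dragan_penalty(critic, real, lambda_gp=10.0, c=0.5):
    # Assumed perturbation scheme: uniform noise scaled by c * std of the batch,
    # then a random point between each real sample and its perturbed copy.
    alpha = torch.rand([real.size(0)] + [1] * (real.dim() - 1), device=real.device)
    perturbed = real + c * real.std() * torch.rand_like(real)
    x_hat = (real + alpha * (perturbed - real)).detach().requires_grad_(True)
    return _penalty(critic, x_hat, lambda_gp)
```

Either value is simply added to the critic's loss before calling backward(). Note that only WGAN-GP needs generated samples to build x_hat; DRAGAN's penalty depends on the real batch alone.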

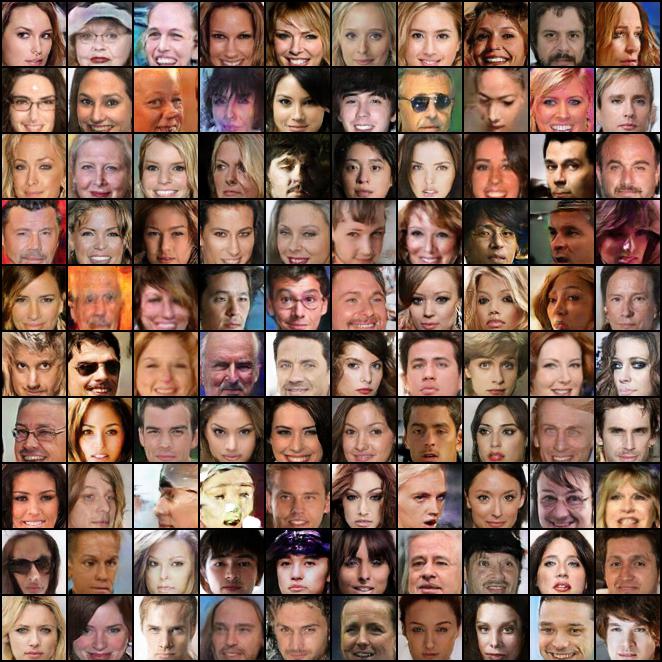

Left: WGAN-GP, 100 epochs; right: DRAGAN, 100 epochs.

Prerequisites

- PyTorch 0.2

- tensorboardX: https://github.com/lanpa/tensorboard-pytorch

- Python 2.7

Usage

Configurations such as gpu_id and the learning rate can be changed directly at the top of each script. Then run one of the training scripts:

```
python train_celeba_wgan_gp.py
python train_celeba_dragan.py
...
```
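For orientation, here is a hedged sketch of one WGAN-GP iteration of the general kind such a training script runs: several critic updates on D(fake) - D(real) plus the gradient penalty, followed by one generator update on -D(fake). The hyperparameters (lambda = 10, five critic steps per generator step, Adam with lr = 1e-4 and betas = (0.0, 0.9)) are the defaults from the WGAN-GP paper's Algorithm 1, not values read from the scripts, and train_step is a hypothetical helper running on CPU.

```python
import torch

LAMBDA_GP = 10.0  # penalty weight (WGAN-GP paper default; assumed)
N_CRITIC = 5      # critic updates per generator update (paper default; assumed)


def gradient_penalty(critic, real, fake):
    # Random points on straight lines between paired real and fake samples.
    alpha = torch.rand([real.size(0)] + [1] * (real.dim() - 1))
    x_hat = (alpha * real + (1 - alpha) * fake).requires_grad_(True)
    out = critic(x_hat)
    grad, = torch.autograd.grad(out, x_hat, torch.ones_like(out), create_graph=True)
    norm = grad.reshape(grad.size(0), -1).norm(2, dim=1)
    return ((norm - 1) ** 2).mean()


def train_step(G, D, opt_G, opt_D, real_batches, z_dim):
    """N_CRITIC critic updates followed by one generator update.

    real_batches: list of real-image tensors (at least one).
    """
    batch_size = real_batches[0].size(0)
    for real in real_batches[:N_CRITIC]:
        fake = G(torch.randn(real.size(0), z_dim)).detach()  # freeze G during the critic step
        # Critic minimises the negated Wasserstein estimate plus the penalty.
        d_loss = D(fake).mean() - D(real).mean() + LAMBDA_GP * gradient_penalty(D, real, fake)
        opt_D.zero_grad()
        d_loss.backward()
        opt_D.step()
    # Generator tries to raise the critic's score on generated samples.
    g_loss = -D(G(torch.randn(batch_size, z_dim))).mean()
    opt_G.zero_grad()
    g_loss.backward()
    opt_G.step()
    return d_loss.item(), g_loss.item()
```

With toy models, e.g. G = torch.nn.Linear(2, 4) and D = torch.nn.Linear(4, 1) plus the two Adam optimizers above, each call consumes up to N_CRITIC real batches and returns the last critic loss and the generator loss, which are the kind of values worth logging for TensorBoard.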

If TensorBoard is installed, you can use it to view the loss curves:

```
tensorboard --logdir=./summaries/celeba_wgan_gp --port=6006
...
```

Dataset

- CelebA must be prepared manually in ./data/img_align_celeba/img_align_celeba/

- Download the dataset: https://www.dropbox.com/sh/8oqt9vytwxb3s4r/AAB06FXaQRUNtjW9ntaoPGvCa?dl=0

- The link above might be inaccessible; the alternative is