Quantifying Knee Cartilage Shape and Lesion: From Image to Metrics

AMAI’24 (MICCAI workshop) (in press)

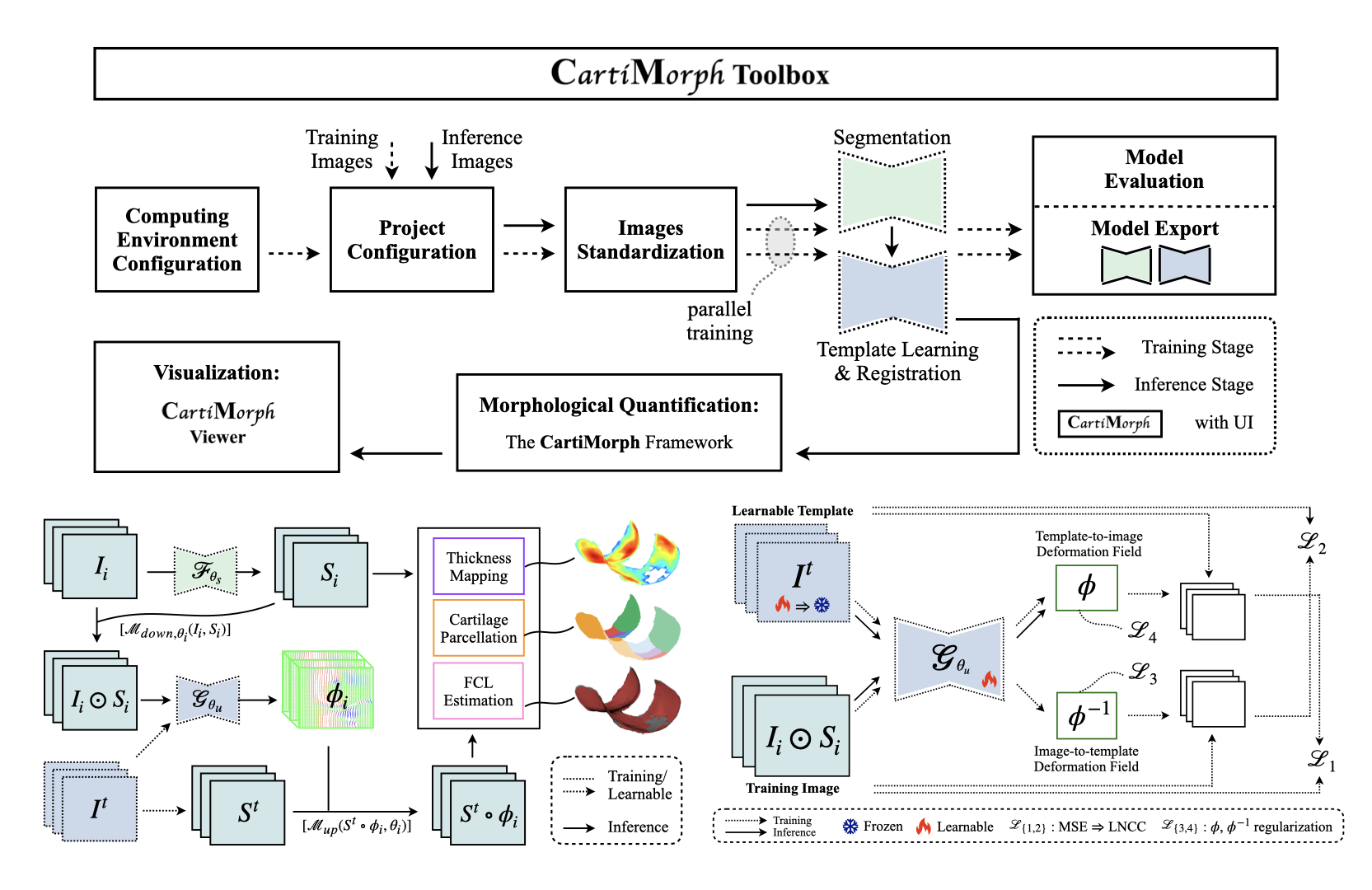

CMT, a toolbox for knee MRI analysis, model training, and visualization.

- Joint Template-Learning and Registration Mode – CMT-reg

- CartiMorph Toolbox (CMT)

- models (both for segmentation and registration) for this work – can be loaded into CMT

- more models from the CMT models page

- For model evaluation and the training of other SoTA models:

git clone https://github.com/YongchengYAO/CMT-AMAI24paper.git

cd CMT-AMAI24paper

conda create --name CMT_AMAI24paper --file env.txt

- For model training in CMT, see the instructions for CMT

We compared the proposed CMT-reg with other template learning and/or registration models – Aladdin and LapIRN.

- Code for Aladdin training, inference, and evaluation (for reproducing the results in Tables 2 & 3)

- Code for LapIRN training, inference, and evaluation (for reproducing the results in Tables 2 & 3)

- Code for CMT evaluation (for reproducing the results in Table 3)

- Training, inference, and evaluation of CMT-reg are implemented in CMT. Set these parameters in CMT:

- Cropped Image Size: 64, 128, 128

- Training Epoch: 2000

- Network Width: x3

- Loss: MSE+LNCC
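To make the "MSE+LNCC" setting concrete, here is a minimal sketch of a combined similarity loss on 1-D signals in plain Python. It is an illustration only, not the CMT implementation: the function names, the window size, the equal weights, and the squared form of the local normalized cross-correlation are all assumptions, and a real registration model would compute this over 3-D image volumes.

```python
def mse(fixed, warped):
    """Mean squared error between two equal-length signals."""
    return sum((f - w) ** 2 for f, w in zip(fixed, warped)) / len(fixed)


def lncc(fixed, warped, window=5, eps=1e-5):
    """Mean squared local normalized cross-correlation over sliding windows.

    Assumption: the squared form is used, so each window scores in [0, 1]
    and the sign of the correlation is ignored.
    """
    if len(fixed) != len(warped) or len(fixed) < window:
        raise ValueError("signals must have equal length >= window")
    scores = []
    for start in range(len(fixed) - window + 1):
        wf = fixed[start:start + window]
        ww = warped[start:start + window]
        mean_f = sum(wf) / window
        mean_w = sum(ww) / window
        cov = sum((f - mean_f) * (w - mean_w) for f, w in zip(wf, ww))
        var_f = sum((f - mean_f) ** 2 for f in wf)
        var_w = sum((w - mean_w) ** 2 for w in ww)
        scores.append(cov * cov / (var_f * var_w + eps))
    return sum(scores) / len(scores)


def similarity_loss(fixed, warped, w_mse=1.0, w_lncc=1.0):
    """Weighted sum of MSE and (1 - LNCC); the weights are hypothetical."""
    return w_mse * mse(fixed, warped) + w_lncc * (1.0 - lncc(fixed, warped))
```

The MSE term penalizes absolute intensity differences, while the LNCC term rewards locally correlated structure even when intensities differ by a local scale or offset.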

This is the data used for reproducing Tables 2 & 3.

Data for this repo for model training, inference, and evaluation

# data folder structure

├── Code
    ├── Aladdin
        ├── Model
    ├── LapIRN
        ├── Model
├── Data
    ├── Aladdin
    ├── CMT_data4AMAI
    ├── LapIRN

- How to use files in the Data folder?
  - clone this repo: CMT-AMAI24paper
  - put the Data folder under CMT-AMAI24paper/

- How to use files in the Code folder?
  - clone this repo: CMT-AMAI24paper
  - put the corresponding Model folders under CMT-AMAI24paper/Code/Aladdin/ and CMT-AMAI24paper/Code/LapIRN/
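After placing the downloaded folders, a short script can confirm that the layout matches the structure described above. This is a minimal sketch, not part of the repo: the helper name `missing_dirs` and the `EXPECTED_DIRS` list are hypothetical, and the paths are taken only from the folder structure and placement steps in this README.

```python
from pathlib import Path

# Directories expected under the cloned repo root, per the steps above.
EXPECTED_DIRS = [
    "Data/Aladdin",
    "Data/CMT_data4AMAI",
    "Data/LapIRN",
    "Code/Aladdin/Model",
    "Code/LapIRN/Model",
]


def missing_dirs(repo_root):
    """Return the expected sub-directories that do not exist under repo_root."""
    root = Path(repo_root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    # Assumes the script is run from the parent directory of the clone.
    missing = missing_dirs("CMT-AMAI24paper")
    if missing:
        print("Missing folders:")
        for d in missing:
            print("  " + d)
    else:
        print("Folder layout looks complete.")
```

Running it before training or evaluation gives an early warning if a Data or Model folder was copied to the wrong level.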

- MR Image: OAI

- Annotation: OAI-ZIB

Data Information: here (link CMT-ID to OAI-SubjectID)

This is the data used for training CMT-reg and nnUNet in CMT.

If you use the processed data, please note that the manual segmentation annotations come from this work:

- Automated Segmentation of Knee Bone and Cartilage combining Statistical Shape Knowledge and Convolutional Neural Networks: Data from the Osteoarthritis Initiative (https://doi.org/10.1016/j.media.2018.11.009)

(conference proceedings in press)

@misc{yao2024quantifyingkneecartilageshape,
  title={Quantifying Knee Cartilage Shape and Lesion: From Image to Metrics},
  author={Yongcheng Yao and Weitian Chen},
  year={2024},
  eprint={2409.07361},
  archivePrefix={arXiv},
  primaryClass={eess.IV},
  url={https://arxiv.org/abs/2409.07361},
}

The training, inference, and evaluation code for Aladdin and LapIRN is adapted from these GitHub repos:

- Aladdin: https://github.com/uncbiag/Aladdin

- LapIRN: https://github.com/cwmok/LapIRN

CMT is based on CartiMorph: https://github.com/YongchengYAO/CartiMorph

@article{YAO2024103035,
  title = {CartiMorph: A framework for automated knee articular cartilage morphometrics},
  journal = {Medical Image Analysis},
  author = {Yongcheng Yao and Junru Zhong and Liping Zhang and Sheheryar Khan and Weitian Chen},
  volume = {91},
  pages = {103035},
  year = {2024},
  issn = {1361-8415},
  doi = {https://doi.org/10.1016/j.media.2023.103035}
}