Automatic soundscape captioner (SoundSCaper): Soundscape Captioning using Sound Affective Quality Network and Large Language Model

Paper link: https://arxiv.org/abs/2406.05914

ResearchGate: SoundSCaper

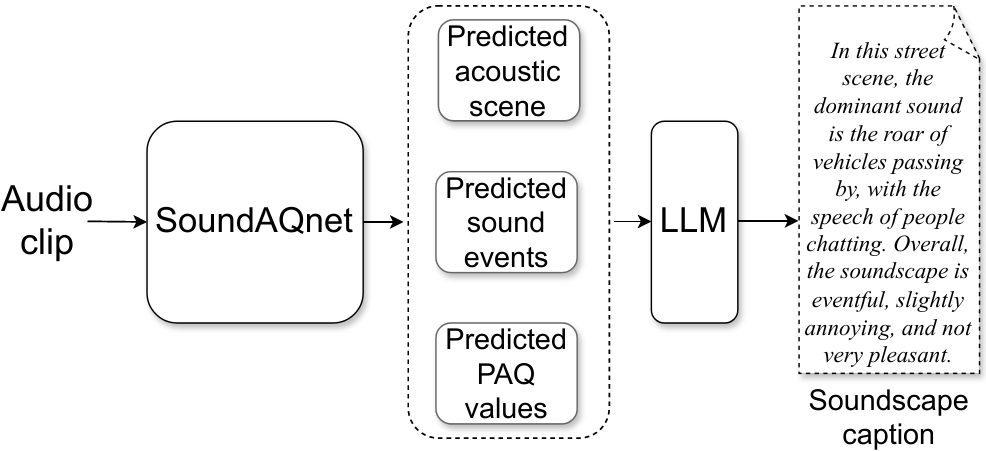

- SoundSCaper

- Introduction

- Figure

- 1. Overall framework of the automatic soundscape captioner (SoundSCaper)

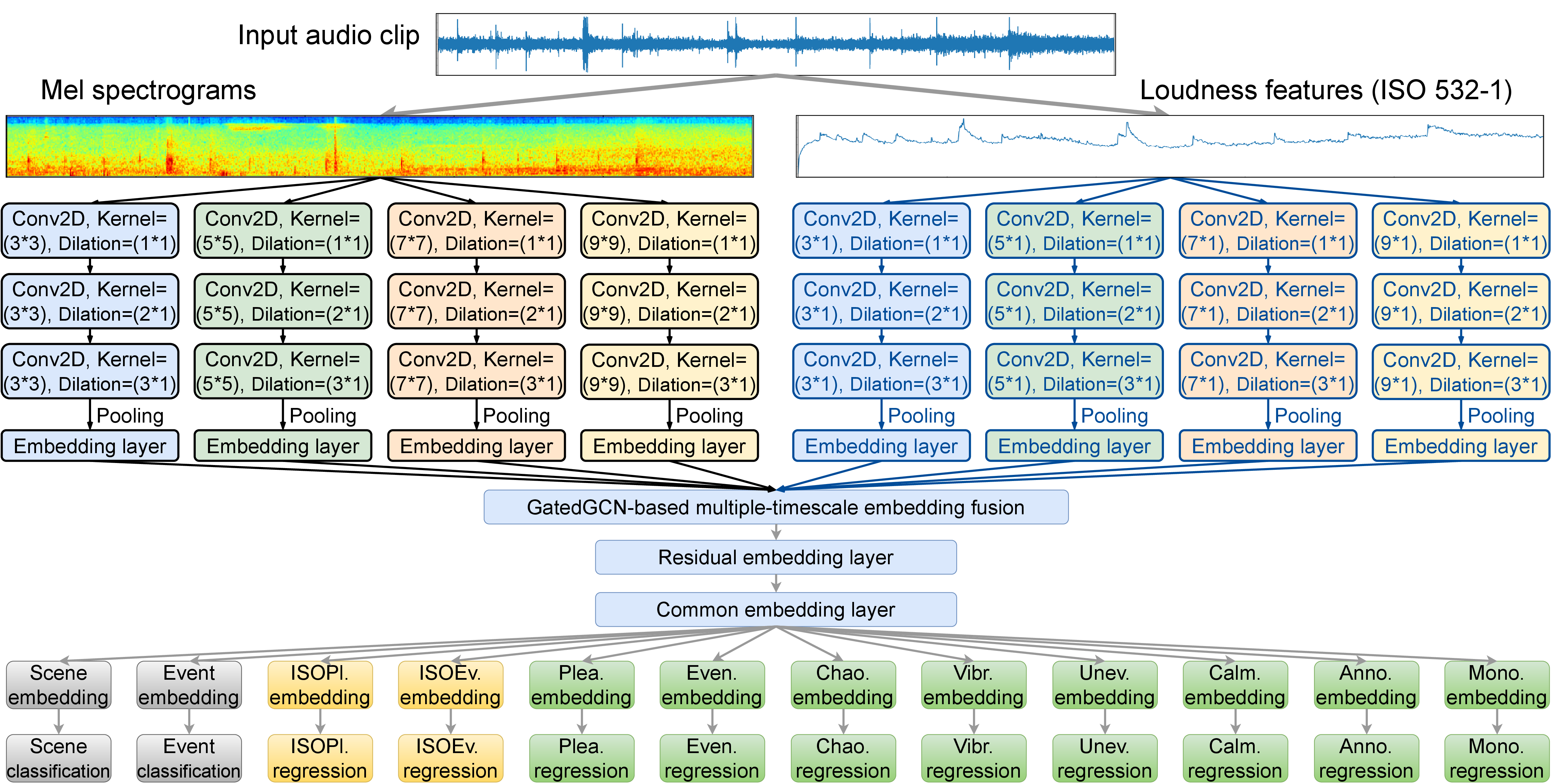

- 2. The acoustic model SoundAQnet simultaneously models acoustic scene (AS), audio event (AE), and emotion-related affective quality (AQ)

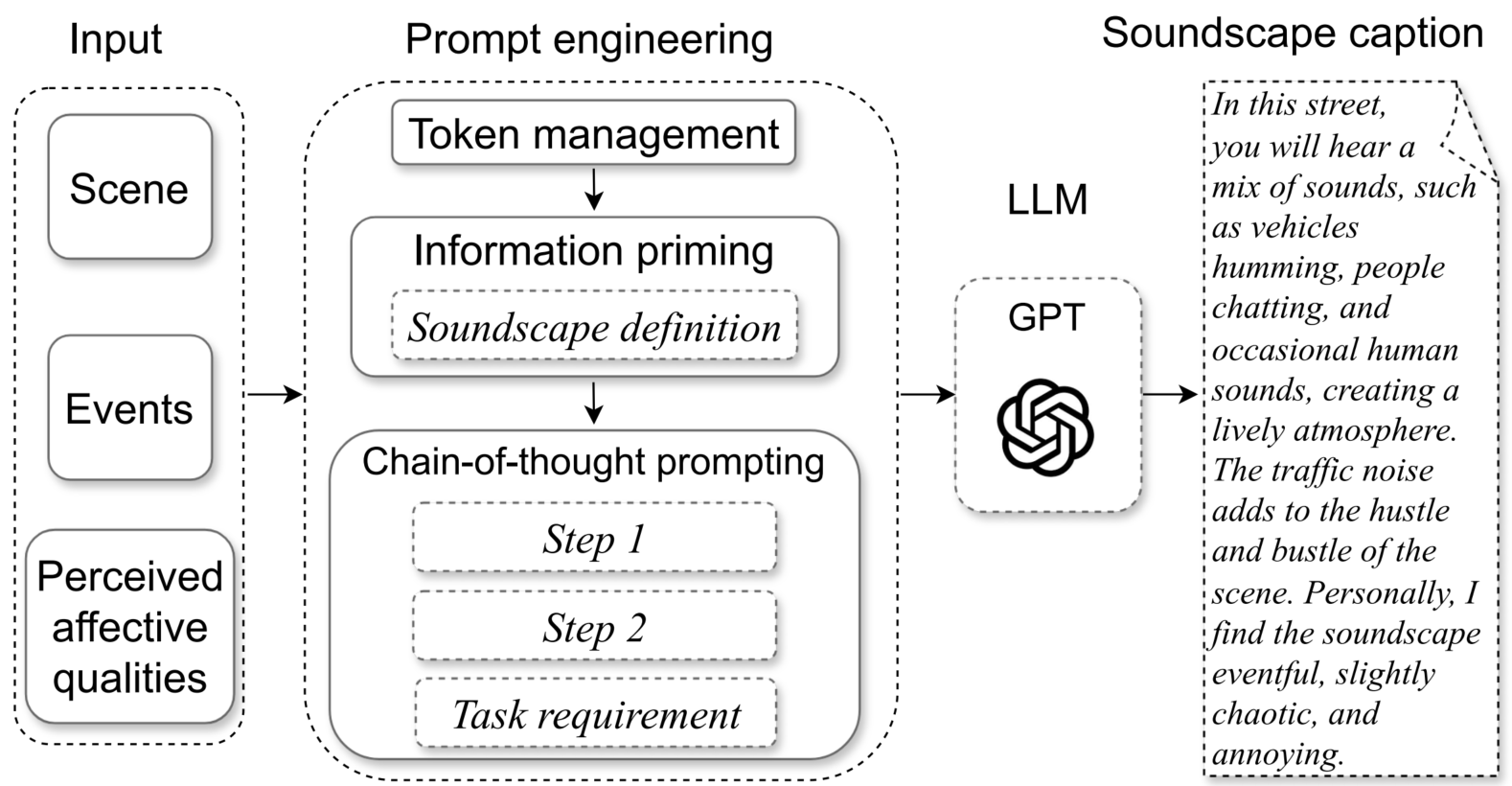

- 3. Process of the LLM part in the SoundSCaper

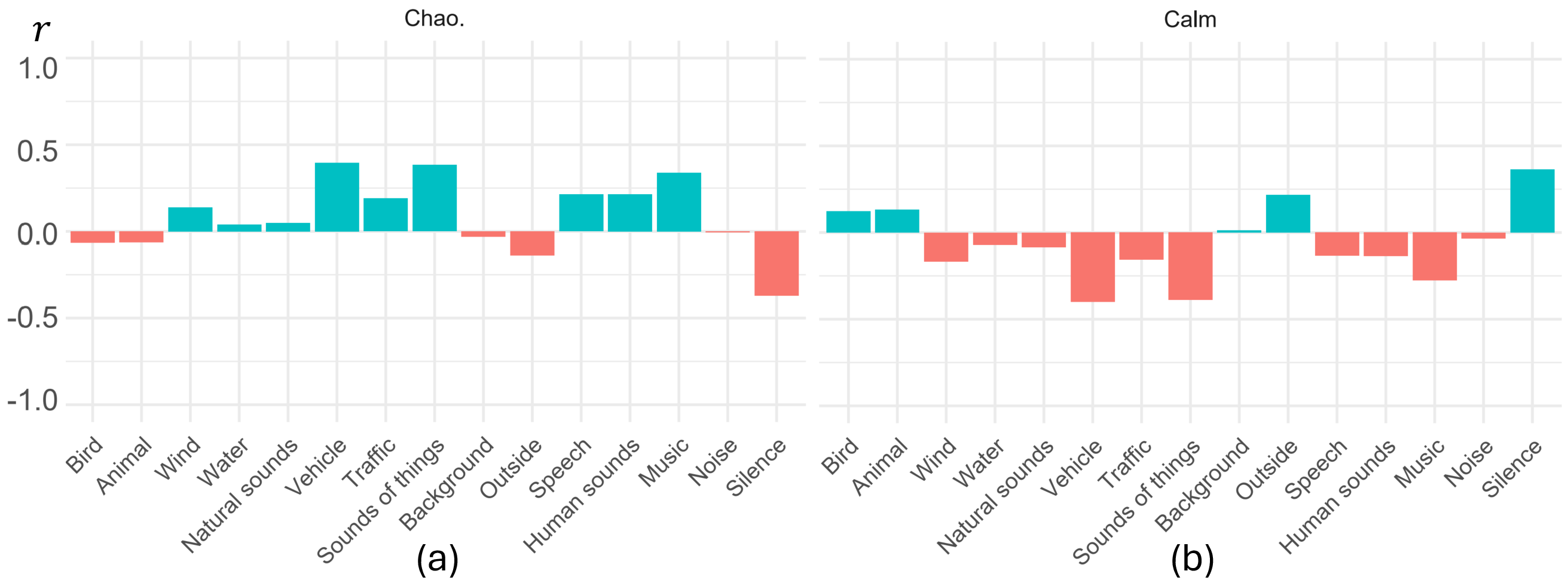

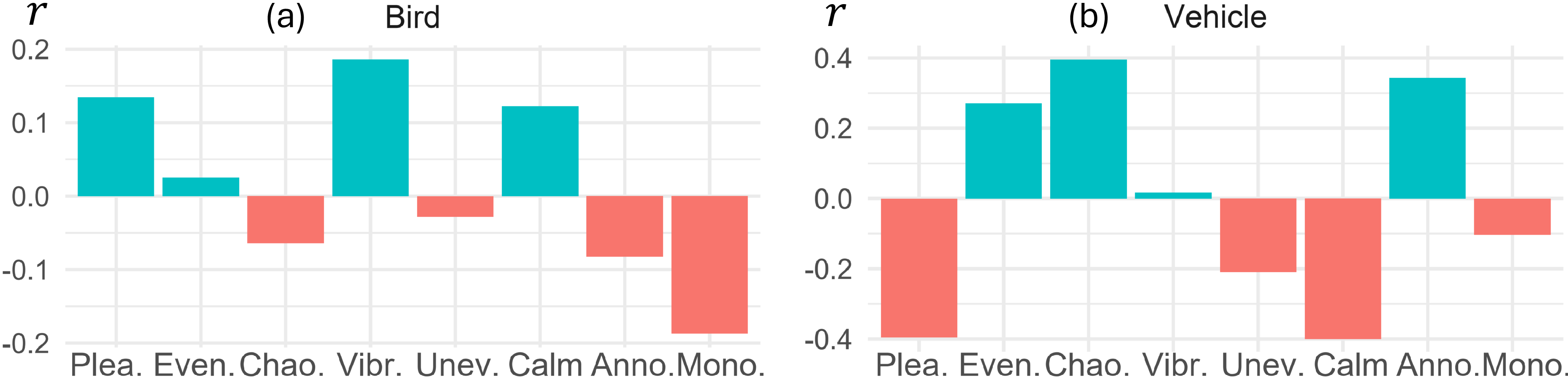

- 4. Spearman's rho correlation between different AQs and AEs predicted by SoundAQnet

- 5. Spearman's rho correlation between different AEs and 8D AQs predicted by SoundAQnet

- Run Sound-AQ models to predict the acoustic scene, audio event, and human-perceived affective qualities

1) Dataset preparation

- Download and place the ARAUS dataset (ARAUS_repository) into the Dataset_all_ARAUS directory or the Dataset_training_validation_test directory (recommended).

- Follow the ARAUS steps (ARAUS_repository) to generate the raw audio dataset. The dataset is about 53 GB, so please reserve enough disk space before preparing it. (In WAV format, it may take about 134 GB.)

- Split the raw audio dataset according to the training, validation, and test audio file IDs in the Dataset_training_validation_test directory.

The labels of our annotated acoustic scenes and audio events, for the audio clips in the ARAUS dataset, are placed in the Dataset_all_ARAUS directory and the Dataset_training_validation_test directory.
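The splitting step above can be sketched as follows. This is a minimal illustration, not the repository's own script: the function name `split_by_ids`, the assumption that a file ID equals the audio file name without its extension, and the `.wav` suffix are all hypothetical, so adapt them to the actual ID files in Dataset_training_validation_test.

```python
import shutil
from pathlib import Path


def split_by_ids(audio_dir, id_lists, out_dir, suffix=".wav"):
    """Copy each clip into out_dir/<split>/ according to its file ID.

    id_lists maps a split name (e.g. "training") to an iterable of file IDs.
    ASSUMPTION: a file ID is the audio file name without its extension.
    Returns, per split, the IDs for which no audio file was found.
    """
    audio_dir, out_dir = Path(audio_dir), Path(out_dir)
    missing = {}
    for split, ids in id_lists.items():
        target = out_dir / split
        target.mkdir(parents=True, exist_ok=True)
        missing[split] = []
        for file_id in ids:
            src = audio_dir / f"{file_id}{suffix}"
            if src.is_file():
                shutil.copy2(src, target / src.name)
            else:
                missing[split].append(file_id)
    return missing
```

Copying rather than moving keeps the raw dataset intact, at the cost of extra disk space; the returned dictionary makes it easy to spot IDs that have no matching audio file.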

2) Acoustic feature extraction

- Log Mel spectrogram: use the code in Feature_log_mel to extract log mel features.

  - Place the dataset into the `Dataset` folder.
  - If the audio files are not in `.wav` format, please run `convert_flac_to_wav.py` first. (This may generate ~132 GB of data as WAV files.)
  - Run `log_mel_spectrogram.py`.

- Loudness features (ISO 532-1): use the code in Feature_loudness_ISO532_1 to extract the ISO 532-1:2017 standard loudness features.

  - Download the ISO_532-1.exe file (it has already been placed in the `ISO_532_bin` folder).
  - Place the audio clip files to be processed, in `.wav` format, into the `Dataset_wav` folder.
  - If the audio files are not in `.wav` format, please use `convert_flac_to_wav.py` to convert them. (This may generate ~132 GB of data as WAV files.)
  - Run `ISO_loudness.py`.
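Before running either extraction script, it can help to check which files still need conversion to WAV and whether the disk has room for the converted data. The sketch below uses only the Python standard library; the helper names `files_needing_conversion` and `has_free_space` are hypothetical and are not part of the repository.

```python
import shutil
from pathlib import Path


def files_needing_conversion(dataset_dir, wanted_suffix=".wav"):
    """Return all files under dataset_dir whose suffix is not wanted_suffix."""
    return sorted(
        p for p in Path(dataset_dir).rglob("*")
        if p.is_file() and p.suffix.lower() != wanted_suffix
    )


def has_free_space(path, required_gb):
    """True if the filesystem holding `path` has at least required_gb GiB free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 1024**3
```

For example, `has_free_space(".", 132)` checks the current drive against the approximate WAV size quoted above before `convert_flac_to_wav.py` is started.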

3) Training SoundAQnet

- Prepare the training, validation, and test sets according to the corresponding files in the Dataset_training_validation_test directory

- Modify the `DataGenerator_Mel_loudness_graph` function to load the dataset

- Run `Training.py` in the `application` directory

This part, Inferring_soundscape_clips_for_LLM, converts the soundscape audio clips into predicted audio event probabilities, acoustic scene labels, and ISOP, ISOE, and emotion-related PAQ values.

This part bridges the acoustic model and the language model, organising the output of the acoustic model in preparation for the input of the language model.

1) Data preparation

- Place the log Mel feature files from the `Feature_log_mel` directory into the `Dataset_mel` directory

- Place the ISO 532-1 loudness feature files from the `Feature_loudness_ISO532_1` directory into the `Dataset_wav_loudness` directory
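Since inference needs both feature types for every clip, a quick consistency check between the two directories can catch missing files early. The sketch below assumes, hypothetically, that the log Mel file and the loudness file of one clip share the same file name apart from the extension; the repository's actual naming scheme may differ.

```python
from pathlib import Path


def unmatched_features(mel_dir, loudness_dir):
    """Compare file stems in the two feature directories.

    ASSUMPTION: both feature files of a clip share the same stem.
    Returns (stems only in mel_dir, stems only in loudness_dir).
    """
    mel = {p.stem for p in Path(mel_dir).iterdir() if p.is_file()}
    loud = {p.stem for p in Path(loudness_dir).iterdir() if p.is_file()}
    return sorted(mel - loud), sorted(loud - mel)
```

Two empty lists mean every clip has both a log Mel file and a loudness file.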

2) Run the inference script

- `cd application`, then `python Inference_for_LLM.py`

- The results, which will be fed into the LLM, will be automatically saved into the corresponding directories: `SoundAQnet_event_probability`, `SoundAQnet_scene_ISOPl_ISOEv_PAQ8DAQs`

- There are four similar SoundAQnet models in the `system/model` directory; please feel free to use them

  - SoundAQnet_ASC96_AEC94_PAQ1027.pth
  - SoundAQnet_ASC96_AEC94_PAQ1039.pth
  - SoundAQnet_ASC96_AEC94_PAQ1041.pth
  - SoundAQnet_ASC96_AEC95_PAQ1052.pth
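The checkpoint file names appear to encode each model's test metrics. The reading used below (ASC accuracy in percent, AEC AUC times 100, and mean PAQ MSE times 1000) is an assumption, although it matches the SoundAQnet results reported in this README (ASC Acc 96.09 %, AEC AUC 0.94, PAQ MSE 1.027). Under that assumption, a small parser can help choose a checkpoint; `parse_checkpoint_name` is a hypothetical helper, not part of the repository.

```python
import re

# ASSUMPTION: ASC<acc %>_AEC<AUC x 100>_PAQ<mean MSE x 1000>
CKPT_PATTERN = re.compile(r"SoundAQnet_ASC(\d+)_AEC(\d+)_PAQ(\d+)\.pth$")


def parse_checkpoint_name(name):
    """Extract the metrics that the file name seems to encode."""
    match = CKPT_PATTERN.search(name)
    if match is None:
        raise ValueError(f"unexpected checkpoint name: {name}")
    asc, aec, paq = match.groups()
    return {
        "asc_acc_percent": int(asc),
        "aec_auc": int(aec) / 100,
        "paq_mse": int(paq) / 1000,
    }


checkpoints = [
    "SoundAQnet_ASC96_AEC94_PAQ1027.pth",
    "SoundAQnet_ASC96_AEC94_PAQ1039.pth",
    "SoundAQnet_ASC96_AEC94_PAQ1041.pth",
    "SoundAQnet_ASC96_AEC95_PAQ1052.pth",
]

# Pick the checkpoint with the lowest (assumed) mean PAQ MSE.
best = min(checkpoints, key=lambda n: parse_checkpoint_name(n)["paq_mse"])
print(best)
```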

3) Inference with other models

This part Inferring_soundscape_clips_for_LLM uses SoundAQnet to infer the values of audio events, acoustic scenes, and emotion-related AQs.

If you want to replace SoundAQnet with another model to generate the soundscape captions,

- replace `using_model = SoundAQnet` in `Inference_for_LLM.py` with the code for that model,

- and place the corresponding trained model into the `system/model` directory.

4) Demonstration

Please see details here.

This part, LLM_scripts_for_generating_soundscape_caption, loads the acoustic scene, audio events, and PAQ 8-dimensional affective quality values corresponding to the soundscape audio clip predicted by SoundAQnet, and then outputs the corresponding soundscape descriptions.

Please fill in your OpenAI API key in LLM_GPT_soundscape_caption.py.

1) Data preparation

- Place the matrix file of audio event probabilities predicted by SoundAQnet into the `SoundAQnet_event_probability` directory

- Place the SoundAQnet prediction file, including the predicted acoustic scene label, ISOP value, ISOE value, and the 8D AQ values, into the `SoundAQnet_scene_ISOPl_ISOEv_PAQ8DAQs` directory

2) Generate soundscape caption

- Replace the "YOUR_API_KEY_HERE" in line 26 of the `LLM_GPT_soundscape_caption.py` file with your OpenAI API key

- Run `LLM_GPT_soundscape_caption.py`
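To illustrate how the SoundAQnet predictions might be turned into LLM input, the sketch below assembles a text prompt from a predicted scene, the most probable audio events, the ISOP and ISOE values, and the 8D AQ values. It is not the prompt used by `LLM_GPT_soundscape_caption.py`: the prompt wording, the function names, and the reading of ISOP and ISOE as ISO pleasantness and ISO eventfulness are assumptions made for illustration. The order of the eight AQs follows the order in which this README reports the per-AQ MSE values. No API call is made here.

```python
AQ_NAMES = ["pleasant", "eventful", "chaotic", "vibrant",
            "uneventful", "calm", "annoying", "monotonous"]


def top_events(event_probs, k=3):
    """Return the k (event, probability) pairs with the highest probability."""
    ranked = sorted(event_probs.items(), key=lambda kv: kv[1], reverse=True)
    return ranked[:k]


def build_prompt(scene, event_probs, isop, isoe, paq8, k=3):
    """Assemble a hypothetical caption prompt from the model predictions."""
    if len(paq8) != len(AQ_NAMES):
        raise ValueError("expected one value per affective quality")
    events = ", ".join(f"{name} ({p:.2f})" for name, p in top_events(event_probs, k))
    aqs = ", ".join(f"{name}: {value:.2f}" for name, value in zip(AQ_NAMES, paq8))
    return (
        f"Acoustic scene: {scene}\n"
        f"Most likely audio events: {events}\n"
        f"ISO pleasantness: {isop:.2f}, ISO eventfulness: {isoe:.2f}\n"
        f"Affective qualities: {aqs}\n"
        "Describe this soundscape in one or two sentences."
    )
```

Keeping only the top few events keeps the prompt short; the full probability matrix stays available in `SoundAQnet_event_probability` if more detail is needed.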

3) Demonstration

Please see details here.

Human_assessment contains

- a call for experiment

- assessment audio dataset

- participant instruction file

- local and online questionnaires

Here are the assessment statistical results from a jury composed of 16 audio/soundscape experts.

There are two sheets in the file SoundSCaper_expert_evaluation_results.xlsx.

- Sheet 1 contains the statistical results of the 16 human experts and SoundSCaper on the evaluation dataset D1 from the test set.

- Sheet 2 contains the statistical results of the 16 human experts and SoundSCaper on the model-unseen mixed external dataset D2, which has 30 samples randomly selected from 5 external audio scene datasets with varying lengths and acoustic properties.

The trained models for the other 7 models in the paper are provided in their respective folders.

- If you want to train them yourself, please follow the SoundAQnet training steps.

- If you want to test or evaluate these models, please run the model inference here.


For full prompts and the LLM script, please see here.

For all 8D AQ results, please see here.

For all 15 AE results, please see here.

Run Sound-AQ models to predict the acoustic scene, audio event, and human-perceived affective qualities

Please download the testing set (about 3 GB) from here, and place it under the Dataset folder.

cd Other_AD_CNN/application/

python inference.py

-----------------------------------------------------------------------------------------------------------

Number of 3576 audios in testing

Parameters num: 0.521472 M

ASC Acc: 89.30 %

AEC AUC: 0.84

PAQ_8D_AQ MSE MEAN: 1.137

pleasant_mse: 0.995 eventful_mse: 1.174 chaotic_mse: 1.155 vibrant_mse: 1.135

uneventful_mse: 1.184 calm_mse: 1.048 annoying_mse: 1.200 monotonous_mse: 1.205

cd Other_Baseline_CNN/application/

python inference.py

-----------------------------------------------------------------------------------------------------------

ASC Acc: 88.37 %

AEC AUC: 0.92

PAQ_8D_AQ MSE MEAN: 1.250

pleasant_mse: 1.030 eventful_mse: 1.233 chaotic_mse: 1.452 vibrant_mse: 1.074

uneventful_mse: 1.254 calm_mse: 1.095 annoying_mse: 1.637 monotonous_mse: 1.222

cd Other_Hierarchical_CNN/application/

python inference.py

-----------------------------------------------------------------------------------------------------------

Parameters num: 1.009633 M

ASC Acc: 90.68 %

AEC AUC: 0.88

PAQ_8D_AQ MSE MEAN: 1.240

pleasant_mse: 0.993 eventful_mse: 1.518 chaotic_mse: 1.259 vibrant_mse: 1.162

uneventful_mse: 1.214 calm_mse: 1.251 annoying_mse: 1.349 monotonous_mse: 1.171

cd Other_MobileNetV2/application/

python inference.py

-----------------------------------------------------------------------------------------------------------

Parameters num: 2.259164 M

ASC Acc: 88.79 %

AEC AUC: 0.92

PAQ_8D_AQ MSE MEAN: 1.139

pleasant_mse: 0.996 eventful_mse: 1.120 chaotic_mse: 1.179 vibrant_mse: 1.011

uneventful_mse: 1.273 calm_mse: 1.148 annoying_mse: 1.183 monotonous_mse: 1.199

cd Other_YAMNet/application/

python inference.py

-----------------------------------------------------------------------------------------------------------

Parameters num: 3.2351 M

ASC Acc: 89.17 %

AEC AUC: 0.91

PAQ_8D_AQ MSE MEAN: 1.197

pleasant_mse: 1.013 eventful_mse: 1.265 chaotic_mse: 1.168 vibrant_mse: 1.103

uneventful_mse: 1.404 calm_mse: 1.125 annoying_mse: 1.220 monotonous_mse: 1.277

cd Other_CNN_Transformer/application/

python inference.py

-----------------------------------------------------------------------------------------------------------

Parameters num: 12.293996 M

ASC Acc: 93.10 %

AEC AUC: 0.93

PAQ_8D_AQ MSE MEAN: 1.318

pleasant_mse: 1.112 eventful_mse: 1.333 chaotic_mse: 1.324 vibrant_mse: 1.204

uneventful_mse: 1.497 calm_mse: 1.234 annoying_mse: 1.365 monotonous_mse: 1.478

- Please download the trained model PANNs_AS_AE_AQ (~304 MB)

- Unzip it

- Put the model `PANNs_AS_AE_AQ.pth` under `Other_PANNs\application\system\model`

cd Other_PANNs/application/

python inference.py

-----------------------------------------------------------------------------------------------------------

Parameters num: 79.731036 M

ASC Acc: 93.28 %

AEC AUC: 0.89

PAQ_8D_AQ MSE MEAN: 1.139

pleasant_mse: 1.080 eventful_mse: 1.097 chaotic_mse: 1.110 vibrant_mse: 1.087

uneventful_mse: 1.267 calm_mse: 1.160 annoying_mse: 1.086 monotonous_mse: 1.227

cd SoundAQnet/application/

python inference.py

-----------------------------------------------------------------------------------------------------------

Parameters num: 2.701812 M

ASC Acc: 96.09 %

AEC AUC: 0.94

PAQ_8D_AQ MSE MEAN: 1.027

pleasant_mse: 0.880 eventful_mse: 1.029 chaotic_mse: 1.043 vibrant_mse: 0.972

uneventful_mse: 1.126 calm_mse: 0.969 annoying_mse: 1.055 monotonous_mse: 1.140
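The reported PAQ_8D_AQ MSE MEAN is consistent with the plain average of the eight per-AQ MSE values. The sketch below parses the per-AQ lines printed by `inference.py` for SoundAQnet and recomputes that mean; the helper names `parse_aq_mse` and `mean_mse` are hypothetical and only illustrate the arithmetic.

```python
import re

# Matches entries such as "pleasant_mse: 0.880" in the printed results.
METRIC_PATTERN = re.compile(r"(\w+)_mse:\s*([0-9.]+)")


def parse_aq_mse(log_text):
    """Return {affective quality: MSE} parsed from the printed results."""
    return {name: float(value) for name, value in METRIC_PATTERN.findall(log_text)}


def mean_mse(per_aq):
    """Plain average over the affective qualities."""
    return sum(per_aq.values()) / len(per_aq)


log = """
pleasant_mse: 0.880 eventful_mse: 1.029 chaotic_mse: 1.043 vibrant_mse: 0.972
uneventful_mse: 1.126 calm_mse: 0.969 annoying_mse: 1.055 monotonous_mse: 1.140
"""

per_aq = parse_aq_mse(log)
print(f"{mean_mse(per_aq):.3f}")  # prints 1.027
```

The same parser can be applied to the output of the other models to compare them per affective quality rather than only by the mean.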