LMUFormer: Low Complexity Yet Powerful Spiking Model With Legendre Memory Units, ICLR 2024

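The Peng soars over the northern sea, the phoenix greets the morning sun. Once more I set out with books and sword on a long, uncertain road. By this day next year I will have risen to the blue clouds, and will smile at the exam candidates still bustling in the world below.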

@article{liu2024lmuformer,
  title={LMUFormer: Low Complexity Yet Powerful Spiking Model With Legendre Memory Units},
  author={Zeyu Liu and Gourav Datta and Anni Li and Peter Anthony Beerel},
  year={2024},
  journal={arXiv preprint arXiv:2402.04882},
}

Paper: LMUFormer: Low Complexity Yet Powerful Spiking Model With Legendre Memory Units

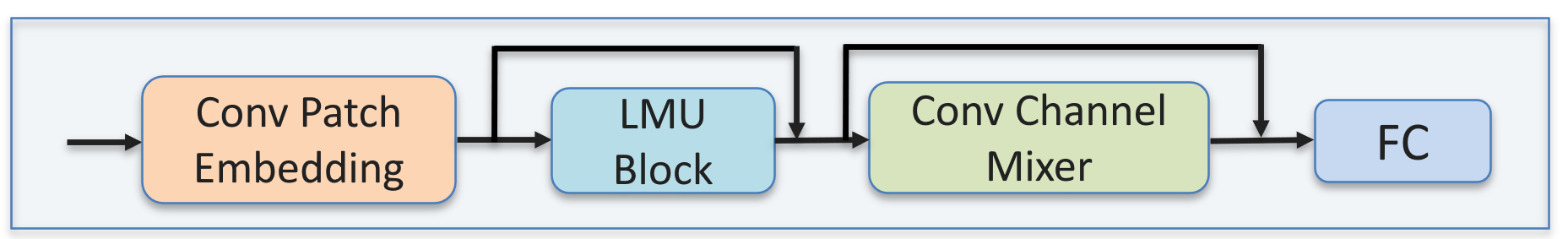

The overall structure of our LMUFormer:

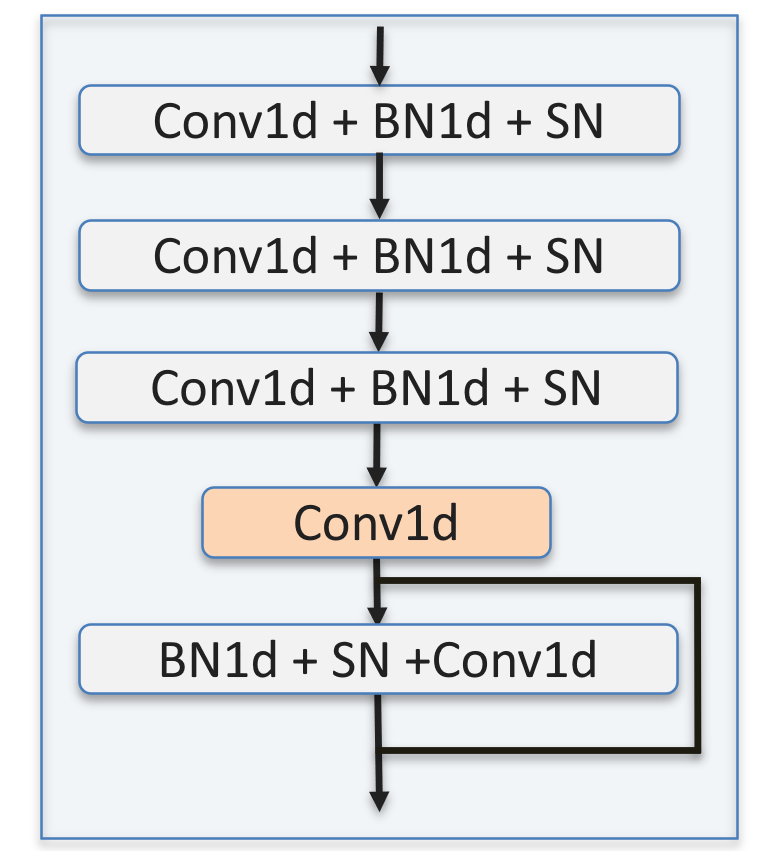

Details of the Conv1d patch embedding module:

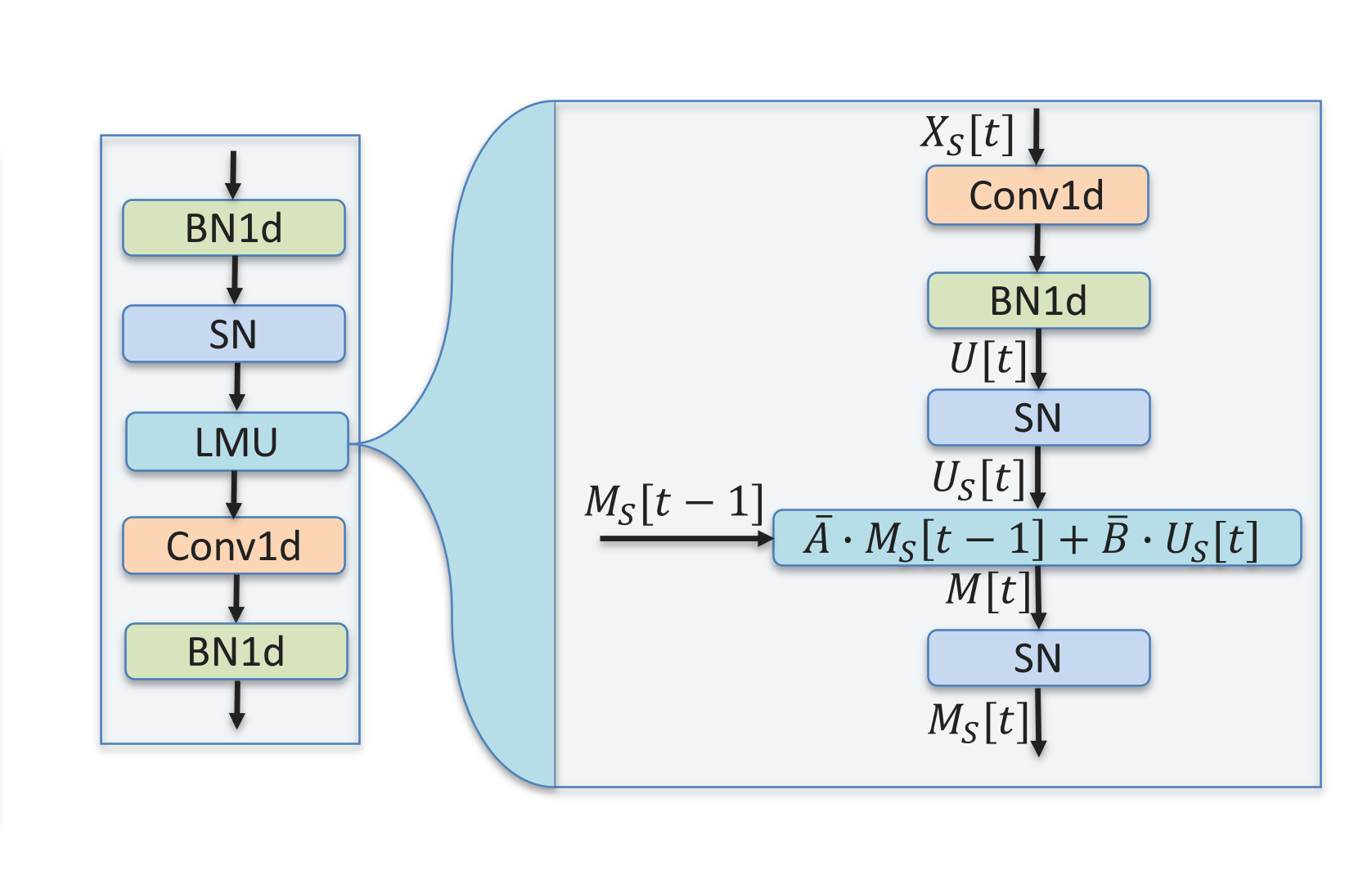

Spiking LMU block:

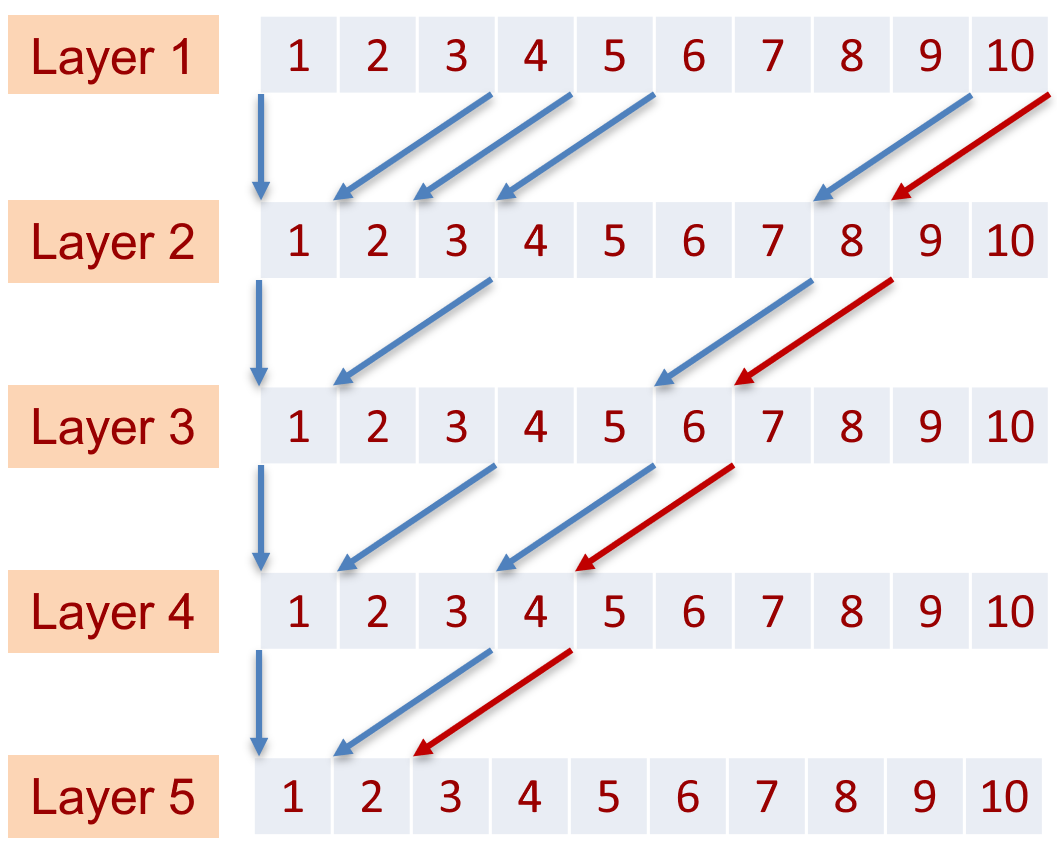

Delay analysis of the convolutional patch embedding:

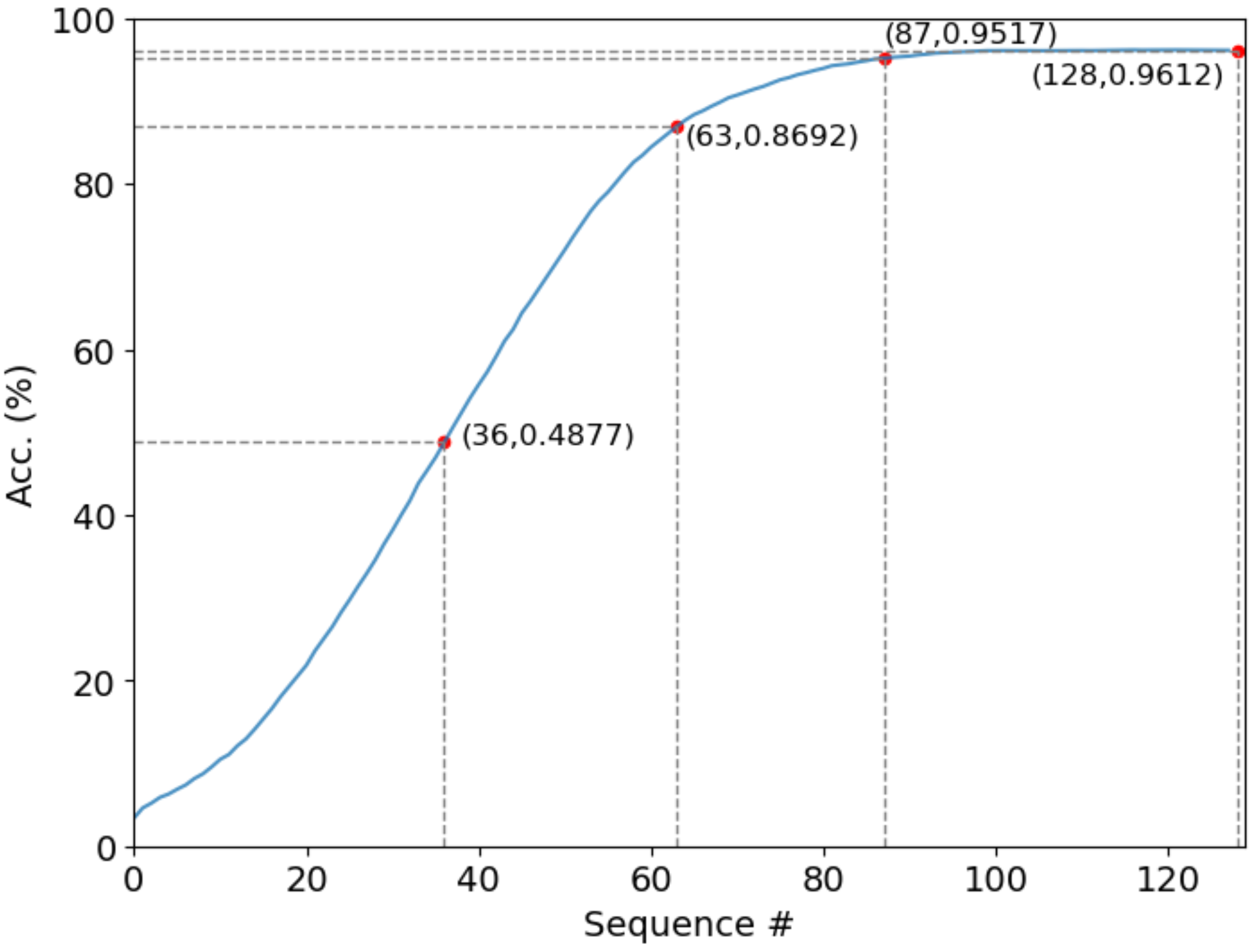

Plot of test accuracy vs. number of samples in the sequence:

| Model | Sequential Inference | Parallel Training | SNN | Accuracy (%) |
|---|---|---|---|---|
| RNN | Yes | No | No | 92.09 |
| Attention RNN | No | No | No | 93.9 |
| liBRU | Yes | No | No | 95.06 |
| Res15 | Yes | Yes | No | 97.00 |
| KWT2 | No | Yes | No | 97.74 |
| AST | No | Yes | No | 98.11 |
| LIF | Yes | Yes | Yes | 83.03 |
| SFA | Yes | No | Yes | 91.21 |
| Spikformer* | No | Yes | Yes | 93.38 |
| RadLIF | Yes | No | Yes | 94.51 |
| Spike-driven ViT* | No | Yes | Yes | 94.85 |
| LMUFormer | Yes | Yes | No | 96.53 |
| LMUFormer (with states) | Yes | Yes | No | 96.92 |
| Spiking LMUFormer | Yes | Yes | Yes | 96.12 |

- 📂 LMUFormer
  - 📂 data/
    - 📜 prep_sc.py: download and preprocess the Google Speech Commands V2 dataset
  - 📂 src
    - 📂 blocks
      - 📜 conv1d_embedding.py: three types of Conv1d embedding modules
      - 📜 lmu_cell.py: the original LMU cell and the spiking version of the LMU cell
      - 📜 lmu.py: three types of LMU modules and the self-attention module
      - 📜 mlp.py: four types of MLP modules
    - 📂 utilities
      - 📜 stats.py
      - 📜 util.py
    - 📂 logs
    - 📜 dataloader.py
    - 📜 gsc.yml: the hyper-parameter configuration file
    - 📜 lmu_rnn.py: registers three types of LMUFormer model and one spiking Transformer model
    - 📜 run.py
    - 📜 traintest.py
  - 📜 requirements.txt: dependency packages
  - 📜 README.md

To run our code, first install the dependency packages listed in requirements.txt:

pip install -r requirements.txt

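Next, download and preprocess the Google Speech Commands V2 dataset, starting from the repository root:

cd data/speech_commands

python prep_sc.py

Then run the model with the settings in gsc.yml, again starting from the repository root:

cd src

python run.py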
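The setup, preprocessing, and run commands above can also be chained from a single script. The following is a minimal sketch, not part of the repository: the helper names `build_steps` and `run_pipeline` are hypothetical, and it assumes that each `cd` in the commands is relative to the repository root. By default it only prints the steps; pass `dry_run=False` to execute them.

```python
import subprocess
import sys
from pathlib import Path


def build_steps(repo_root):
    """Return (working_directory, command) pairs for the README steps.

    Assumption: every `cd` in the README is relative to the repository root.
    """
    root = Path(repo_root)
    return [
        # pip install -r requirements.txt
        (root, [sys.executable, "-m", "pip", "install", "-r", "requirements.txt"]),
        # cd data/speech_commands && python prep_sc.py
        (root / "data" / "speech_commands", [sys.executable, "prep_sc.py"]),
        # cd src && python run.py
        (root / "src", [sys.executable, "run.py"]),
    ]


def run_pipeline(repo_root, dry_run=True):
    """Print each step and, unless dry_run is set, execute it in order."""
    for cwd, cmd in build_steps(repo_root):
        print(f"[{cwd}] {' '.join(cmd)}")
        if not dry_run:
            # check=True stops the pipeline at the first failing step
            subprocess.run(cmd, cwd=cwd, check=True)


# Dry run: only prints the commands, nothing is installed or executed.
run_pipeline(".", dry_run=True)
```

Running each step with an explicit working directory avoids depending on where the previous `cd` left the shell.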

To test our LMUFormer model on a sample-by-sample basis, add `test_only: True` and `test_mode: all_seq` to the `gsc.yml` file, and set `initial_checkpoint` to your trained LMUFormer model. At the end of the test, the run reproduces the final accuracy recorded in the original log file. It also generates a file named `acc_list.pkl`. You can use the code below to plot how accuracy changes as the number of samples in the sequence increases.

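import pickle

import matplotlib.pyplot as plt

# Load the per-sample accuracy list written by the all_seq test run
with open('acc_list.pkl', 'rb') as f:
    acc_list = pickle.load(f)

# Plot accuracy against the number of samples seen so far
plt.plot(acc_list, label='Test accuracy')
plt.xlabel('Sequence #')
plt.ylabel('Acc. (%)')
plt.legend()
plt.show()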
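Beyond plotting, the same `acc_list.pkl` can be used to estimate how early in the sequence the model gets close to its final accuracy. The sketch below is illustrative only: the helper names `load_acc_list` and `first_index_reaching` are hypothetical, and it assumes `acc_list` is a flat sequence of accuracy values with one entry per additional sample, which should be checked against the actual file. The numbers in the usage example are synthetic, not results from the paper.

```python
import pickle


def load_acc_list(path="acc_list.pkl"):
    """Load the accuracy list and coerce every entry to a plain float."""
    with open(path, "rb") as f:
        return [float(a) for a in pickle.load(f)]


def first_index_reaching(acc_list, fraction=0.99):
    """Return the first index whose accuracy is at least
    `fraction` times the final accuracy, or None if no entry qualifies."""
    if not acc_list:
        raise ValueError("acc_list is empty")
    target = fraction * acc_list[-1]
    for i, acc in enumerate(acc_list):
        if acc >= target:
            return i
    return None


# Synthetic example values, for illustration only.
toy_acc = [10.0, 50.0, 90.0, 95.5, 96.0, 96.1]
print(first_index_reaching(toy_acc, fraction=0.99))  # prints 3
```

With a real run, call `first_index_reaching(load_acc_list())` after the test has produced `acc_list.pkl`.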

The code for this project references the following previous work: