This is a PyTorch implementation of DUB: Discrete Unit Back-translation for Speech Translation (ACL 2023 Findings).

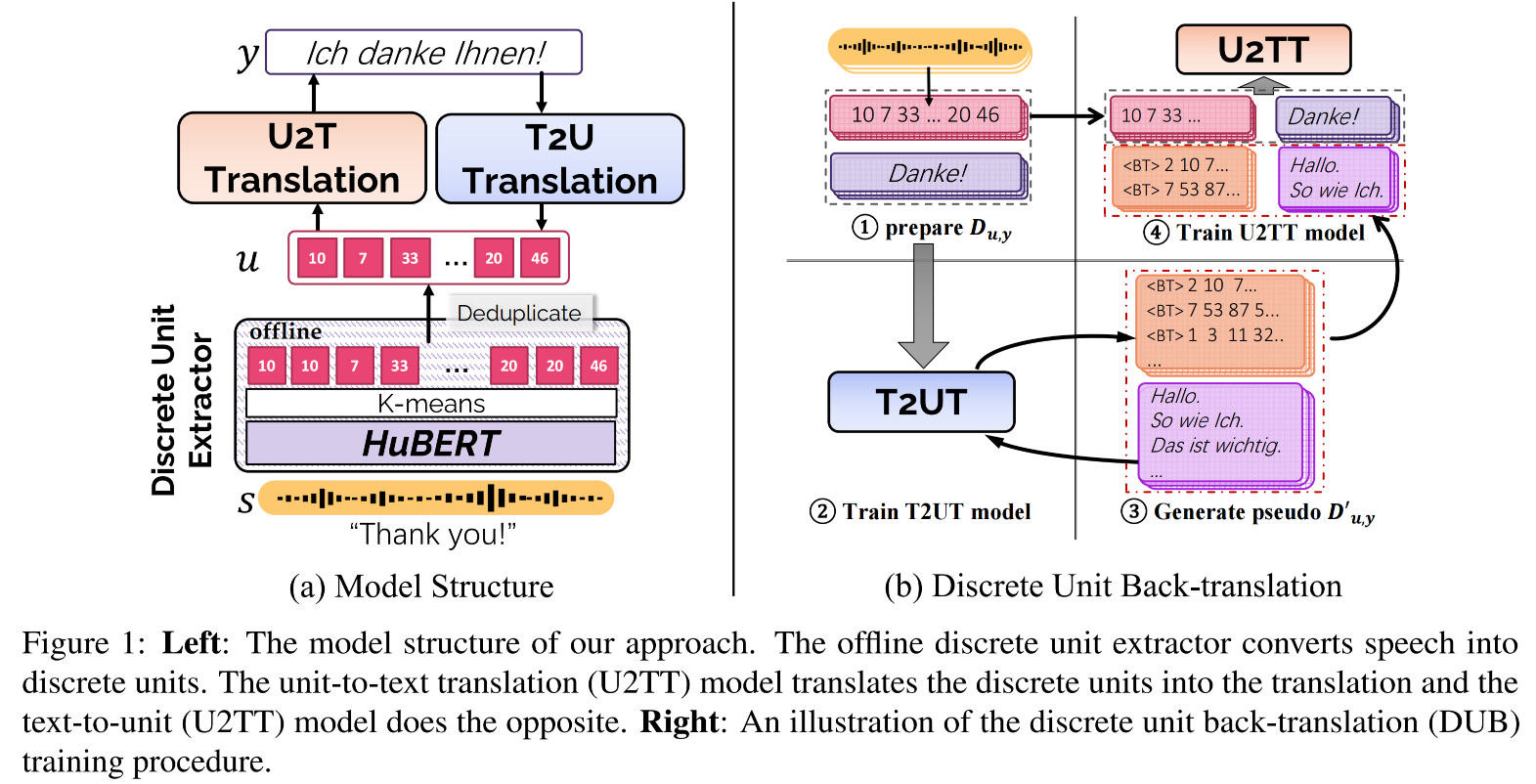

Can speech be discretized without supervision? Is it better to represent speech with discrete units than with continuous features in direct speech translation (ST)? How much can established machine translation (MT) techniques benefit ST? Discrete Unit Back-translation (DUB) brings back-translation and pre-training from MT to ST by discretizing speech signals into unit sequences. Experiments show that DUB yields an average gain of 5.5 BLEU on the MuST-C English-to-German, French, and Spanish translation directions. Results on the CoVoST-2 dataset show that DUB is particularly beneficial for low-resource or unwritten languages.
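To make "discretizing speech signals into unit sequences" concrete, here is a minimal, dependency-free sketch. It is not the repository's code: it assumes that frame-level feature vectors and a codebook of centroids (such as a k-means model would provide) already exist, assigns each frame to its nearest centroid, and collapses consecutive repeats. The names `discretize` and `collapse_repeats`, the toy values, and the repeat-collapsing step are all illustrative assumptions.

```python
from itertools import groupby
from typing import List, Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def discretize(frames: Sequence[Vector], centroids: Sequence[Vector]) -> List[int]:
    """Map every frame to the index of its nearest centroid (its 'unit')."""
    return [
        min(range(len(centroids)), key=lambda k: squared_distance(frame, centroids[k]))
        for frame in frames
    ]


def collapse_repeats(units: Sequence[int]) -> List[int]:
    """Merge runs of identical consecutive units (an assumed post-processing step)."""
    return [unit for unit, _ in groupby(units)]


# Toy data: three hypothetical centroids and five 2-D "frames".
centroids = [(0.0, 0.0), (1.0, 1.0), (4.0, 0.0)]
frames = [(0.1, -0.1), (0.2, 0.0), (0.9, 1.2), (3.8, 0.1), (4.1, -0.2)]

units = discretize(frames, centroids)
print(units)                    # [0, 0, 1, 2, 2]
print(collapse_repeats(units))  # [0, 1, 2]
```

The resulting integer sequence can be treated like a sentence in a "unit language", which is what lets text-based MT techniques such as back-translation apply to speech.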

You can download all the models from the 🤗 Hugging Face model hub.

| Datasets | Model | SPM & Vocab |
|---|---|---|
| En-De | • U2TT • T2UT • U2TT_DUB • Bimodal BART | • SPM model • Vocab |
| En-Es | • U2TT • T2UT • U2TT_DUB | • SPM model • Vocab |
| En-Fr | • U2TT • T2UT • U2TT_DUB | • SPM model • Vocab |

    git clone git@github.com:0nutation/DUB.git
    cd DUB
    pip3 install -r requirements.txt
    pip3 install --editable ./

- Environment setup

      export ROOT="DUB"
      export LANGUAGE="de"
      export MUSTC_ROOT="${ROOT}/data-bin/MuSTC"

- Download the MuST-C v1.0 archive `MUSTC_v1.0_en-de.tar.gz` and uncompress it:

      mkdir -p ${MUSTC_ROOT}
      cd $MUSTC_ROOT
      tar -xzvf MUSTC_v1.0_en-${LANGUAGE}.tar.gz

- Prepare units and text for training

      bash ${ROOT}/src/prepare_data.sh ${LANGUAGE}

Train the unit-to-text translation (U2TT) forward-translation model.

    bash entry.sh --task translate --src_lang en_units --tgt_lang ${LANGUAGE}

Train the text-to-unit translation (T2UT) back-translation model.

    bash entry.sh --task translate --src_lang ${LANGUAGE} --tgt_lang en_units

Generate pseudo paired data using the pretrained T2UT model.

    BT_STRATEGY="topk10"  # one of: "beam5", "topk10", "topk300"
    bash ${ROOT}/src/back_translate.sh --real_lang ${LANGUAGE} --sys_lang en_units --ckpt_name checkpoint_best.pt --bt_strategy ${BT_STRATEGY}

Train U2TT on a mixture of real and pseudo paired data.

    bash ${ROOT}/src/back_translate.sh --task translate --src_lang en_units --tgt_lang ${LANGUAGE} --bt_strategy ${BT_STRATEGY}

Train bimodal BART. Before pre-training bimodal BART, you need to generate GigaSpeech units and save them to `${ROOT}/data-bin/RawDATA/bimodalBART/en_units-${LANGUAGE}/train.unit`.

    bash entry.sh --task bimodalBART --src_lang en_units --tgt_lang ${LANGUAGE}

Train U2TT with bimodal BART as the pretrained model.

    bash entry.sh --task translate --src_lang en_units --tgt_lang ${LANGUAGE} --bimodalBARTinit True

Evaluate a trained checkpoint.

    export CKPT_PATH="path to your checkpoint"
    bash ${ROOT}/src/evaluate.sh ${LANGUAGE} ${CKPT_PATH}

If you find DUB useful for your research and applications, please cite it using the following BibTeX:

    @inproceedings{zhang2023dub,
      title = {DUB: Discrete Unit Back-translation for Speech Translation},
      author = {Dong Zhang and Rong Ye and Tom Ko and Mingxuan Wang and Yaqian Zhou},
      booktitle = {Findings of ACL},
      year = {2023},
    }