Natural Language Processing

| Application | Description | Type |
|---|---|---|
| 🏷️ Part-of-speech tagging (POS) | Identify whether each word is a noun, verb, adjective, etc. (often a step before parsing). | 🔤 |
| 📍 Named entity recognition (NER) | Identify names, organizations, locations, medical codes, times, etc. | 🔤 |
| 👦🏻❓ Coreference resolution | Identify multiple mentions of the same person/object (e.g. he, she). | 🔤 |
| 🔍 Text categorization | Identify the topics present in a text (sports, politics, etc.). | 🔤 |
| ❓ Question answering | Answer questions about a given text (SQuAD, DROP datasets). | 💭 |
| 👍🏼 👎🏼 Sentiment analysis | Classify a comment/review as positive or negative. | 💭 |
| 🔮 Language Modeling (LM) | Predict the next word. Unsupervised. | 💭 |
| 🔮 Masked Language Modeling (MLM) | Predict the omitted (masked) words. Unsupervised. | 💭 |
| 🔮 Next Sentence Prediction (NSP) | Predict whether one sentence follows another. | 💭 |
| 📗→📄 Summarization | Create a short version of a text. | 💭 |
| 🈯→🆗 Translation | Translate into a different language. | 💭 |
| 🆓→🆒 Dialogue bot | Interact in a conversation. | 💭 |
| 💁🏻→🔠 Speech recognition | Speech to text. See AUDIO cheatsheet. | 🗣️ |
| 🔠→💁🏻 Speech generation | Text to speech. See AUDIO cheatsheet. | 🗣️ |

🔤: Natural Language Processing (NLP)

💭: Natural Language Understanding (NLU)

🗣️: Speech and sound (speak and listen)

Preprocess

Tokenization: Split the text into sentences, and the sentences into words.

Lowercasing: Usually done during tokenization.

Punctuation removal: Remove punctuation marks like `.`, `,`, `:`. Usually done during tokenization.

Stopword removal: Remove very common words like "and", "the", "him". Common in older pipelines.

Lemmatization: Reduce words to their dictionary form (lemma): organizes, will organize, organizing → organize. More accurate.

Stemming: Chop word endings off with heuristic rules (e.g. strip -ing, -ation); the resulting stem may not be a real word. Faster.

Subword tokenization: Split rare words into smaller, frequent units (e.g. BPE, WordPiece).
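The preprocessing steps above can be sketched in plain Python. This is a minimal sketch, assuming a regex-based tokenizer and a tiny hypothetical stopword list (`STOPWORDS`); real projects would use a library tokenizer such as spaCy's. `learn_bpe` is a simplified, illustrative version of byte-pair encoding (BPE) merge learning, one common subword method, not any particular library's implementation.

```python
import re
from collections import Counter

# Hypothetical toy stopword list; real pipelines use a library-provided list.
STOPWORDS = {"and", "the", "him", "a", "of"}

def split_sentences(text):
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def preprocess(text, remove_stopwords=True):
    """Lowercase, split into word tokens, drop punctuation and (optionally) stopwords."""
    # Punctuation never matches this pattern, so it is dropped during tokenization.
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    if remove_stopwords:
        tokens = [t for t in tokens if t not in STOPWORDS]
    return tokens

def learn_bpe(words, num_merges):
    """Learn BPE merges: repeatedly fuse the most frequent adjacent symbol pair."""
    # Each word starts as a tuple of characters plus an end-of-word marker.
    vocab = Counter(tuple(w) + ("</w>",) for w in words)
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties: first pair seen wins
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_vocab[tuple(merged)] += freq
        vocab = new_vocab
    return merges

print(split_sentences("Hi there. How are you?"))
print(preprocess("The cat and the dog, running!"))
print(learn_bpe(["low", "low", "lower"], 2))
```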

Extract features

Document features

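Bag of Words (BoW): Count how many times each word appears in a text (the counts can be normalized by text length).

TF-IDF: Weight each word's frequency in a document by how rare the word is across all documents, so it measures relevance rather than raw frequency like BoW.

N-gram: A sequence of N consecutive words; an N-gram model estimates the probability of a word given the previous N-1 words.

Sentence and document vectors (paper 2014, paper 2017).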
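A minimal sketch of these document features in plain Python, assuming documents are already tokenized. The TF-IDF here is one common variant (term frequency normalized by document length, times log(N / document frequency), no smoothing); libraries such as scikit-learn use slightly different formulas by default. All function names are illustrative.

```python
import math
from collections import Counter

def bag_of_words(tokens, normalize=False):
    """Word counts, optionally divided by the text length."""
    counts = Counter(tokens)
    if normalize:
        n = len(tokens)
        return {w: c / n for w, c in counts.items()}
    return dict(counts)

def tf_idf(docs):
    """docs: list of token lists. Returns one {word: weight} dict per document."""
    n_docs = len(docs)
    df = Counter(w for doc in docs for w in set(doc))  # documents containing each word
    weights = []
    for doc in docs:
        tf = bag_of_words(doc, normalize=True)
        # A word that appears in every document gets idf = log(1) = 0.
        weights.append({w: tf[w] * math.log(n_docs / df[w]) for w in tf})
    return weights

def ngrams(tokens, n):
    """All runs of n consecutive tokens."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def bigram_prob(tokens, w1, w2):
    """Maximum-likelihood estimate of P(w2 | w1) from bigram counts."""
    pairs = Counter(ngrams(tokens, 2))
    starts = sum(c for (a, _), c in pairs.items() if a == w1)
    return pairs[(w1, w2)] / starts if starts else 0.0

docs = [["the", "cat", "sat"], ["the", "dog", "sat"]]
print(tf_idf(docs)[0])
print(bigram_prob("a b a c".split(), "a", "b"))
```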

Word features

Word Vectors: A single, fixed representation for every word (independent of its context).

Word2Vec

GloVe: by Stanford

FastText: by Facebook

Contextualized Word Vectors: Good for polysemous words (whose meaning depends on the context).

CoVe

ELMo

Transformer encoder: built with self-attention. ⭐

Build model

Bag of Embeddings

Linear algebra/matrix decomposition

Latent Semantic Analysis (LSA) that uses Singular Value Decomposition (SVD).

Non-negative Matrix Factorization (NMF)

Latent Dirichlet Allocation (LDA): A probabilistic topic model that takes BoW counts as input.

Neural nets

Recurrent NN decoder (LSTM, GRU)

Transformer decoder (GPT, ...) or encoder (BERT, ...) ⭐

Hidden Markov Models

Regular expressions (Regex): Find patterns.

Parse trees: Syntax of a sentence.
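A small illustration of "find patterns" with Python's built-in `re` module. The sample text and both patterns are hypothetical examples; the e-mail pattern is deliberately simplified and does not cover every valid address.

```python
import re

# Hypothetical sample text
text = "Meeting on 2019-08-28, contact javi@example.com or ana@example.org."

DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")          # ISO-style dates: YYYY-MM-DD
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")  # simplified e-mail pattern

dates = DATE.findall(text)
emails = EMAIL.findall(text)
print(dates)
print(emails)
```

Because the domain part requires a character after every dot, the sentence-final `.` is not swallowed into the last address.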

Recurrent nets

Tricks

Teacher forcing: Feed the decoder the correct previous word instead of its own predicted previous word (especially at the beginning of training).
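A minimal sketch of what teacher forcing changes in a decoder loop. `step` stands in for a hypothetical decoder step (previous token in, predicted token out); a real decoder would also carry a hidden state and compute a loss at each step.

```python
def run_decoder(step, target, bos="<s>", teacher_forcing=True):
    """Unroll a decoder for len(target) steps and record what it was fed.

    step: hypothetical decoder step, maps the previous token to a predicted token.
    With teacher forcing, the next input is the ground-truth token;
    without it, the decoder is fed its own previous prediction.
    """
    prev, inputs, predictions = bos, [], []
    for gold in target:
        inputs.append(prev)
        pred = step(prev)
        predictions.append(pred)
        prev = gold if teacher_forcing else pred
    return inputs, predictions

always_x = lambda tok: "x"  # a (bad) toy decoder that always predicts "x"
print(run_decoder(always_x, ["a", "b", "c"]))
print(run_decoder(always_x, ["a", "b", "c"], teacher_forcing=False))
```

With teacher forcing, one early mistake does not contaminate the inputs of later steps, which is why it helps early in training.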

Attention: Learns weights to compute a weighted average of the word embeddings.
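A minimal sketch of the weighted average, using scaled dot-product attention (the variant used in transformers; additive attention is another option). In a real model the query, key and value vectors come from learned projections of the embeddings; here they are given directly as hypothetical numbers.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)  # non-negative, sum to 1
    # Weighted average of the value vectors
    out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return out, weights

out, weights = attention([1.0, 0.0], [[1.0, 0.0], [1.0, 0.0]], [[0.0, 2.0], [4.0, 0.0]])
print(weights, out)
```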

- Tokenizer: Create subword tokens. Methods: BPE...
- Embedding: Create vectors for each token. Sum of:
  - Token embedding
  - Positional encoding: Information about token order (e.g. sinusoidal function).
- Dropout
- Transformer blocks (6, 12, 24, ...)
  - Normalization
  - Multi-head attention layer (with a left-to-right attention mask)
    - Each attention head uses self-attention to process each input token conditioned on the other input tokens.
    - The left-to-right attention mask ensures that each token only attends to itself and the positions before it (to its left).
  - Normalization
  - Feed-forward layers:
    - Linear H→4H
    - GeLU activation function
    - Linear 4H→H
- Normalization
- Output embedding
- Softmax
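Three pieces of the architecture above, sketched in plain Python as an illustration (not a full transformer): the sinusoidal positional encoding from "Attention Is All You Need", PE(pos, 2i) = sin(pos / 10000^(2i/d)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d)); the left-to-right (causal) mask applied before the attention softmax; and the exact GeLU, 0.5·x·(1 + erf(x/√2)). Some implementations use a tanh approximation of GeLU instead.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal positional encoding: sin on even dimensions, cos on odd ones."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):  # i plays the role of 2i in the formula
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe

def causal_mask(seq_len):
    """mask[i][j] is True when token i may attend to token j (only j <= i)."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]

def masked_softmax(scores, mask_row):
    """Softmax over allowed positions only; masked (future) positions get weight 0."""
    m = max(s for s, ok in zip(scores, mask_row) if ok)
    exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(scores, mask_row)]
    total = sum(exps)
    return [e / total for e in exps]

def gelu(x):
    """Exact GeLU activation, used between the H→4H and 4H→H linear layers."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

mask = causal_mask(3)
print(mask)
print(masked_softmax([1.0, 5.0, 9.0], mask[0]))  # first token can only see itself
```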

Label smoothing: The target distribution puts 90% on the correct word and divides the remaining 10% among the other words.
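A minimal sketch of the 90% / 10% target described above, over a vocabulary of `vocab_size` words. Other variants spread the smoothing mass uniformly over all words, including the correct one.

```python
def smooth_labels(correct_index, vocab_size, smoothing=0.1):
    """Target distribution: 1 - smoothing on the correct word,
    the remaining mass split evenly over the other vocab_size - 1 words."""
    other = smoothing / (vocab_size - 1)
    return [1.0 - smoothing if i == correct_index else other for i in range(vocab_size)]

print(smooth_labels(2, 5))
```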

Lowest layers: morphology

Middle layers: syntax

Highest layers: Task-specific semantics

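| Score | For what? | Description | Interpretation |
|---|---|---|---|
| Perplexity | LM | Exponential of the average negative log-likelihood per token | The lower the better. |
| GLUE | NLU | An average of scores over several NLU tasks | |
| BLEU | Translation | Compare generated sentences with reference sentences (N-gram overlap) | The higher the better. |

BLEU only counts n-gram overlap, not meaning: "He ate the apple" & "He ate the potato" get the same BLEU score (e.g. against the reference "He ate the pear").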

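A sketch of both metrics in plain Python. `perplexity` takes the probabilities a model assigned to each true token (hypothetical numbers in the usage). `sentence_bleu` is a simplified single-reference BLEU (clipped n-gram precisions, geometric mean, brevity penalty, no smoothing); standard tools such as sacreBLEU add corpus-level aggregation and other details.

```python
import math
from collections import Counter

def perplexity(token_probs):
    """exp of the mean negative log-probability of the true tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

def _ngram_counts(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def sentence_bleu(candidate, reference, max_n=4):
    """Simplified BLEU for one candidate and one reference (token lists)."""
    precisions = []
    for n in range(1, max_n + 1):
        cand = _ngram_counts(candidate, n)
        ref = _ngram_counts(reference, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(c, ref[g]) for g, c in cand.items())
        total = sum(cand.values())
        if total == 0 or overlap == 0:
            return 0.0
        precisions.append(overlap / total)
    log_avg = sum(math.log(p) for p in precisions) / max_n
    c, r = len(candidate), len(reference)
    bp = 1.0 if c > r else math.exp(1 - r / c)  # brevity penalty
    return bp * math.exp(log_avg)

# A model that spreads probability uniformly over 4 words has perplexity 4.
print(perplexity([0.25] * 4))
ref = "he ate the pear".split()
print(sentence_bleu("he ate the apple".split(), ref, max_n=2))
print(sentence_bleu("he ate the potato".split(), ref, max_n=2))
```

Both candidates differ from the reference only in the last word, so their n-gram overlaps, and therefore their scores, are identical.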
| Step | Task | Data | Who does this? |
|---|---|---|---|
| 1 | [Masked] Language Model Pretraining | 📚 A large text corpus (e.g. Wikipedia) | 🏭 Google or Facebook |
| 2 | [Masked] Language Model Fine-tuning | 📗 Only your domain text corpus | 💻 You |
| 3 | Your supervised task (classification, etc.) | 📗🏷️ Your labeled domain text | 💻 You |

Packages

| Description | Type |
|---|---|
| Parse trees, excellent tokenizer (8 languages) | 🔤 |
| Semantic analysis, topic modeling and similarity detection. | 🔤 |
| Very broad NLP library. Not SotA. | 🔤 |
| Unsupervised text tokenizer by Google | 🔤 |
| Fast.ai NLP: ULMFiT fine-tuning | 🔤 |
| TorchText (PyTorch subpackage) | 🔤 |
| Word vector representations and sentence classification (157 languages) | 🔤 |
| pytorch-transformers: 8 pretrained PyTorch transformers | 🔤 |
| spaCy + pytorch-transformers | 🔤 |
| Super easy library for BERT-based models | 🔤 |
| Pretrained models for 53 languages | 🔤 |
| | 🔤 |
| An open-source NLP research library, built on PyTorch. | 🔤 |
| Fast & easy NLP transfer learning for the industry. | 🔤 |
| NLP library designed for reproducible experimentation management. | 🔤 |
| A very simple framework for state-of-the-art NLP. | 🔤 |
| SotA NLP deep learning topologies and techniques. | 🔤 |
| Scikit-learn style model fine-tuning for NLP. | 🔤 |

pip install spacy

python -m spacy download en_core_web_sm

python -m spacy download es_core_news_sm

python -m spacy download es_core_news_md

import spacy
from spacy import displacy

nlp = spacy.load("en_core_web_sm")   # Load English small model

nlp = spacy.load("es_core_news_sm")  # Load Spanish small model (no word vectors)

nlp = spacy.load("es_core_news_md")  # Load Spanish medium model (with word vectors)

doc = nlp("Hola, me llamo Javi")     # Text from string

with open("file.txt", encoding="utf-8") as f:
    doc = nlp(f.read())              # Text from file

displacy.render(doc, style="ent", jupyter=True)  # Display named entities

displacy.render(doc, style="dep", jupyter=True)  # Display word dependencies

es_core_news_md has 534k keys, 20k unique vectors (50 dimensions).

coche = nlp("coche")

moto = nlp("moto")

print(coche.similarity(moto))  # Cosine similarity of the two vectors

coche[0].vector  # Show the word vector of the first token
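What `similarity` computes can be sketched without spaCy: the cosine of the angle between two vectors, where a multi-token text is, by default, represented by the average of its token vectors. The 3-dimensional vectors below are hypothetical toy values; real `es_core_news_md` vectors have 50 dimensions and come from the model.

```python
import math

def cosine_similarity(u, v):
    """cos(u, v) = u·v / (|u| |v|): 1 means same direction, 0 means orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def average_vector(vectors):
    """Average token vectors into a single vector for a multi-token text."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

# Hypothetical toy word vectors
coche = [0.9, 0.1, 0.3]
moto = [0.8, 0.2, 0.4]
print(round(cosine_similarity(coche, moto), 3))
```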

🤗 means a pretrained PyTorch implementation is available in the pytorch-transformers package developed by Hugging Face.

Model

Creator

Date

Breif description

Data

🤗

1st Transformer Google

Jun. 2017

Encoder & decoder transformer with attention

ULMFiT Fast.ai

Jan. 2018

Regular LSTM

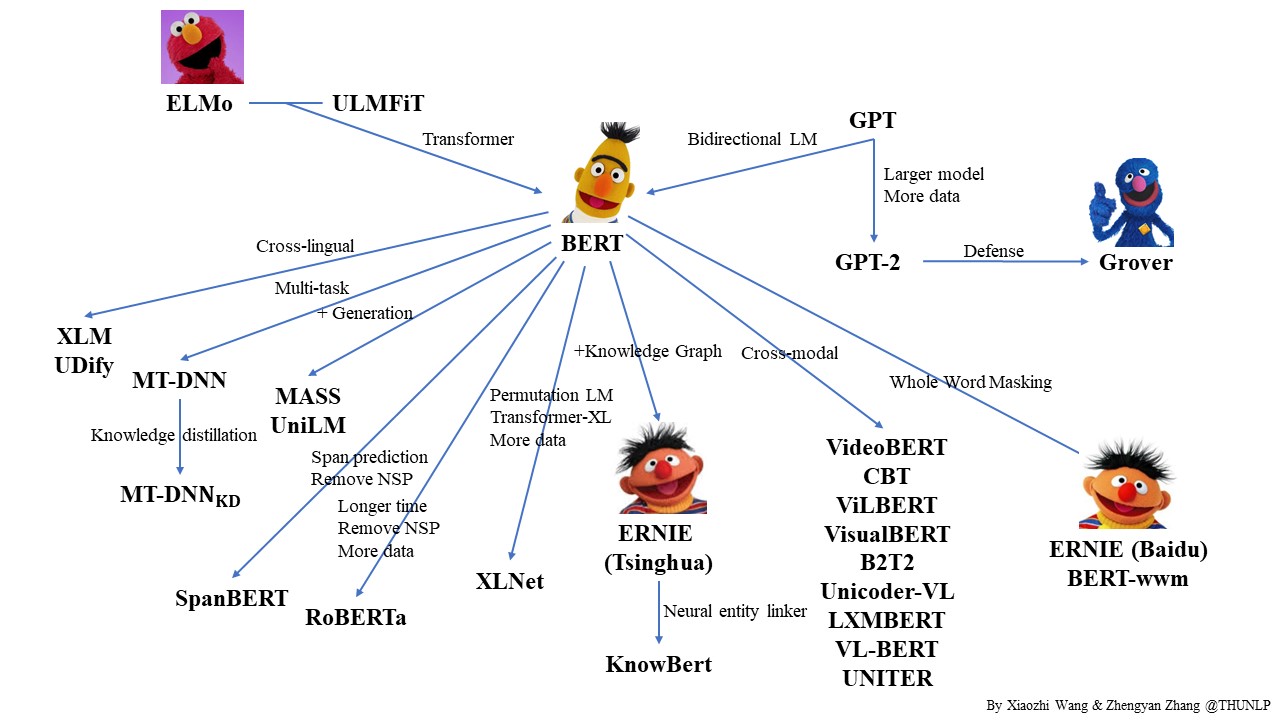

ELMo AllenNLP

Feb. 2018

Bidirectional LSTM

GPT OpenAI

Jun. 2018

Transformer on LM

✔

BERT Google

Oct. 2018

Transformer on MLM (& NSP)

16GB

✔

Transformer-XL Google/CMU

Jan. 2019

✔

XLM/mBERT Facebook

Jan. 2019

Multilingual LM

✔

Transf. ELMo AllenNLP

Jan. 2019

GPT-2 OpenAI

Feb. 2019

Good text generation

✔

ERNIE Baidu research

Apr. 2019

XLNet :Google/CMU

Jun. 2019

BERT + Transformer-XL

130GB

✔

RoBERTa Facebook

Jul. 2019

BERT without NSP

160GB

✔

MegatronLM Nvidia

Aug. 2019

Big models with parallel training

DistilBERT Hugging Face

Aug. 2019

Compressed BERT

16GB

✔

MiniBERT Google

Aug. 2019

Compressed BERT

ALBERT Google

Sep. 2019

Parameter reduction on BERT

https://huggingface.co/pytorch-transformers/pretrained_models.html

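Sizes are listed from the shallowest to the deepest version (layer counts: 2L, 3L, 6L, 12L, 18L, 24L, 36L, 48L, 54L, 72L).

| Model | Sizes (parameters) |
|---|---|
| 1st Transformer | yes |
| ULMFiT | yes |
| ELMo | yes |
| GPT | 110M |
| BERT | 110M, 340M |
| Transformer-XL | 257M |
| XLM/mBERT | Yes, Yes |
| Transf. ELMo | |
| GPT-2 | 117M, 345M, 762M, 1542M |
| ERNIE | Yes |
| XLNet | 110M, 340M |
| RoBERTa | 125M, 355M |
| MegatronLM | 355M, 2500M, 8300M |
| DistilBERT | 66M |
| MiniBERT | Yes |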

Attention: (Aug. 2015)

1st Transformer: (Google AI, Jun. 2017)

Introduces the transformer architecture: an encoder with self-attention, and a decoder with masked self-attention plus attention over the encoder output.

Surpassed the RNN-based state of the art in machine translation.

Paper: Attention Is All You Need

Blog.

ULMFiT: (Fast.ai, Jan. 2018)

Regular LSTM encoder-decoder architecture with no attention.

Introduces the idea of transfer learning in NLP:

Take a pretrained language model (it predicts which word comes next), trained on a Wikipedia corpus for example (WikiText-103).

Fine-tune it on your own corpus.

Train your task (classification, etc.).

Paper: Universal Language Model Fine-tuning for Text Classification

ELMo: (AllenNLP, Feb. 2018)

Context-aware embeddings = better representations. Useful for polysemous words (same word, different meanings).

Made with bidirectional LSTMs trained on a language modeling (LM) objective.

Parameters: 94 million

Paper: Deep contextualized word representations

Site.

GPT: (OpenAI, Jun. 2018)

Made with a transformer trained on a language modeling (LM) objective.

Same as the transformer, but with transfer learning to other NLP tasks.

First train the decoder for language modeling on unlabeled text, then fine-tune it on other NLP tasks.

Parameters: 110 million

Paper: Improving Language Understanding by Generative Pre-Training

Site, code.

BERT: (Google AI, Oct. 2018)

Transformer-XL: (Google/CMU, Jan. 2019)

XLM/mBERT: (Facebook, Jan. 2019)

Transformer ELMo: (AllenNLP, Jan. 2019)

GPT-2: (OpenAI, Feb. 2019)

ERNIE: (Baidu Research, Apr. 2019)

XLNet: (Google/CMU, Jun. 2019)

RoBERTa: (Facebook, Jul. 2019)

MegatronLM: (Nvidia, Aug. 2019)

Too big

Parameters: 8,300 million (8.3B)

DistilBERT: (Hugging Face, Aug. 2019)

Compression of BERT with knowledge distillation (teacher-student learning)

A small model (DistilBERT) is trained with the output of a larger model (BERT)

Results comparable to BERT with fewer parameters

Parameters: 66 million
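A minimal sketch of the teacher-student idea: the student is trained to match the teacher's softened output distribution (softmax with a temperature). This shows only the soft-target cross-entropy term, with hypothetical logits; the DistilBERT paper combines such a term with additional loss terms.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax of logits / temperature; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    s = sum(exps)
    return [e / s for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross-entropy between the teacher's soft targets and the student's soft predictions."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))

teacher = [2.0, 1.0, 0.1]  # hypothetical teacher (BERT) logits
student = [0.1, 1.0, 2.0]  # hypothetical student (DistilBERT) logits
print(distillation_loss(student, teacher), distillation_loss(teacher, teacher))
```

The loss is smallest when the student's distribution equals the teacher's, so minimizing it pulls the student toward the teacher's full output distribution, not only its top prediction.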

- What is NLP? ✔
- Topic Modeling with SVD & NMF
- Topic Modeling & SVD revisited
- Sentiment Classification with Naive Bayes
- Sentiment Classification with Naive Bayes & Logistic Regression, contd.
- Derivation of Naive Bayes & Numerical Stability
- Revisiting Naive Bayes, and Regex
- Intro to Language Modeling
- Transfer learning
- ULMFit for non-English Languages
- Understanding RNNs
- Seq2Seq Translation
- Word embeddings quantify 100 years of gender & ethnic stereotypes
- Text generation algorithms
- Implementing a GRU
- Algorithmic Bias
- Introduction to the Transformer ✔
- The Transformer for language translation ✔
- What you need to know about Disinformation