LAA-Net: Localized Artifact Attention Network for Quality-Agnostic and Generalizable Deepfake Detection

This is an official implementation for LAA-Net! [Paper]


- Released pretrained weights

- 26/02/2024: LAA-Net has been accepted at CVPR 2024.

- 15/11/2023: First version of this open-source code pre-released.

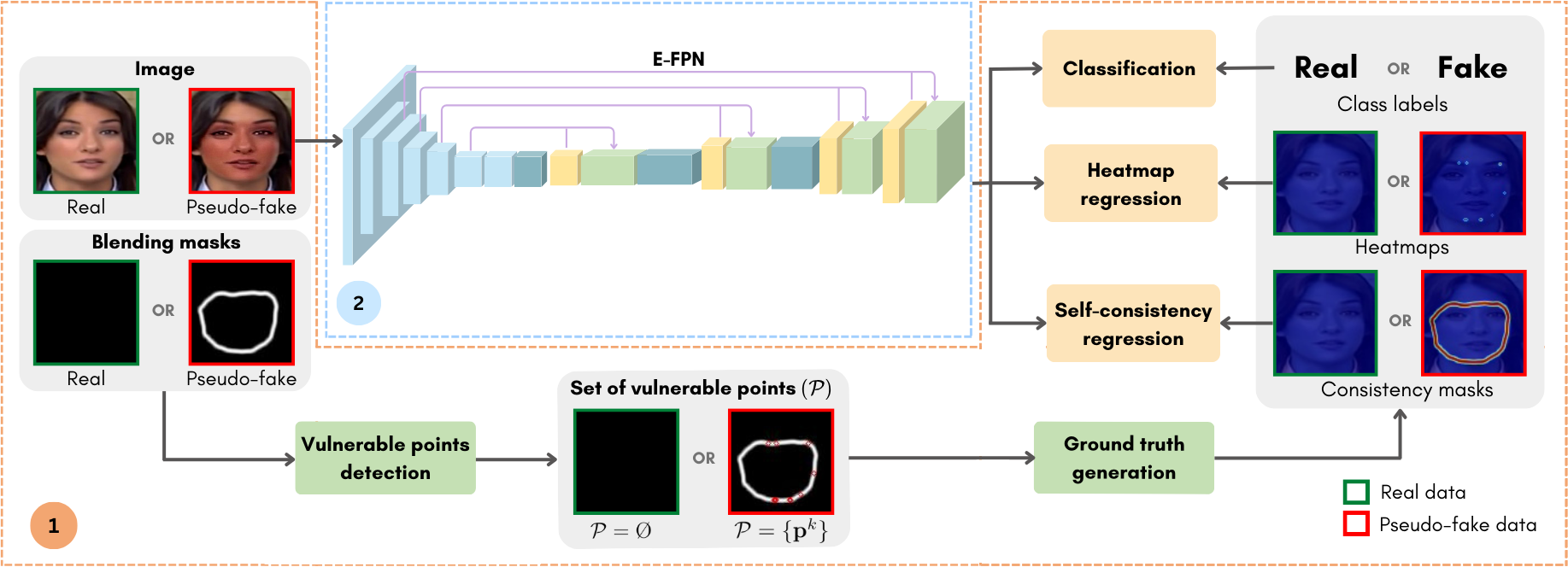

This paper introduces a novel approach for high-quality deepfake detection called Localized Artifact Attention Network (LAA-Net). Existing methods for high-quality deepfake detection are mainly based on a supervised binary classifier coupled with an implicit attention mechanism. As a result, they do not generalize well to unseen manipulations. To handle this issue, two main contributions are made. First, an explicit attention mechanism within a multi-task learning framework is proposed. By combining heatmap-based and self-consistency attention strategies, LAA-Net is forced to focus on a few small artifact-prone regions. Second, an Enhanced Feature Pyramid Network (E-FPN) is suggested as a simple and effective mechanism for spreading discriminative low-level features into the final feature output, with the advantage of limiting redundancy. Experiments performed on several benchmarks show the superiority of our approach in terms of Area Under the Curve (AUC) and Average Precision (AP).

Results on FF++ (in-dataset evaluation) and on four datasets (CDF, DFW, DFD, DFDC) under the cross-dataset evaluation setting, reported in terms of AUC and AP.

| LAA-Net | FF++ | CDF | DFW | DFD | DFDC |
|---|---|---|---|---|---|
| w/ BI | | | | | |
| w/ SBI | | | | | |
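Both metrics named above can be computed from per-image labels and fake-probability scores. The following is a minimal pure-Python sketch, not the evaluation code of this repository; the function names are illustrative, labels are assumed to be 1 for fake and 0 for real, and the AP variant assumes there are no tied scores.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random fake outscores a random real.

    Tied scores count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one sample of each class")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """AP as the mean precision measured at the rank of each fake sample."""
    ranked = sorted(zip(scores, labels), key=lambda t: t[0], reverse=True)
    hits, total = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / rank
    if hits == 0:
        raise ValueError("need at least one positive sample")
    return total / hits
```

The pairwise AUC is quadratic in the number of samples, which is fine for a sanity check but slow on full benchmarks; a library implementation is preferable there.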

For experiments, we recommend installing the following libraries. Either Conda or a Python virtual environment should work.

- CUDA: 11.4

- Python: >= 3.8.x

- PyTorch: 1.8.0

- TensorboardX: 2.5.1

- ImgAug: 0.4.0

- Scikit-image: 0.17.2

- Torchvision: 0.9.0

- Albumentations: 1.1.0

- 📌 The pre-trained weights for BI and SBI can be found here!
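To compare an existing environment against the versions listed above, a small check along these lines may help. It is a sketch using only the standard library (`importlib.metadata`, available from Python 3.8); the PyPI distribution names in the dictionary are assumptions, and the function name is illustrative.

```python
from importlib import metadata

# Versions copied from the list above; the distribution names are assumptions.
RECOMMENDED = {
    "torch": "1.8.0",
    "torchvision": "0.9.0",
    "tensorboardX": "2.5.1",
    "imgaug": "0.4.0",
    "scikit-image": "0.17.2",
    "albumentations": "1.1.0",
}


def check_environment(recommended=RECOMMENDED):
    """Return {package: (installed_or_None, recommended)} for every mismatch."""
    mismatches = {}
    for name, wanted in recommended.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        # Local builds may carry suffixes such as "+cu111", so compare by prefix.
        if installed is None or not installed.startswith(wanted):
            mismatches[name] = (installed, wanted)
    return mismatches


if __name__ == "__main__":
    for name, (installed, wanted) in check_environment().items():
        print(f"{name}: installed={installed}, recommended={wanted}")
```

An empty result means every listed package is installed at the recommended version. CUDA is not a Python package and is not covered by this check.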

We also provide an optional Dockerfile that can be used to build a working environment with Docker. More detailed steps can be found here.

- Install Docker on the system (skip this step if Docker is already installed):

      sudo apt install docker

- To start your Docker environment, go to the folder `dockerfiles`:

      cd dockerfiles

- Create a Docker image (you can use any name you want):

      docker build --tag 'laa_net' .

- Preparation

- Prepare environment

  Install the main packages listed in the recommended environment.

- Prepare dataset

- Download the FF++ Original dataset for training data preparation. Following the original split convention, it is first used to randomly extract frames and facial crops:

      python package_utils/images_crop.py -d {dataset} \
          -c {compression} \
          -n {num_frames} \
          -t {task}

  (This script can also be used to crop faces in other datasets such as CDF, DFD, and DFDC for cross-dataset evaluation. You do not need to run the crop for DFW, as the data is already preprocessed.)

  | Parameter | Value | Definition |
  |---|---|---|
  | -d | Subfolder in each dataset. For example: ['Face2Face','Deepfakes','FaceSwap','NeuralTextures', ...] | You can use one of those datasets. |
  | -c | ['raw','c23','c40'] | You can use one of those compressions. |
  | -n | 128 | Number of frames (default 32 for val/test and 128 for train). |
  | -t | ['train', 'val', 'test'] | Default train. |

  These cropped faces are saved for online pseudo-fake generation in the training process, following the data structure below:

      ROOT = '/data/deepfake_cluster/datasets_df'
      ├── Celeb-DFv2
      │   └── ...
      └── FF++
          └── c0
              ├── test
              │   ├── frames
              │   │   ├── Deepfakes
              │   │   │   ├── 000_003
              │   │   │   ├── 044_945
              │   │   │   ├── 138_142
              │   │   │   └── ...
              │   │   ├── Face2Face
              │   │   ├── FaceSwap
              │   │   ├── NeuralTextures
              │   │   └── original
              │   └── videos
              ├── train
              │   ├── frames
              │   │   ├── aligned
              │   │   │   ├── 001
              │   │   │   ├── 002
              │   │   │   └── ...
              │   │   └── original
              │   │       ├── 001
              │   │       ├── 002
              │   │       └── ...
              │   └── videos
              └── val
                  ├── frames
                  │   ├── aligned
                  │   └── original
                  └── videos
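Cropping several subfolders and splits means repeating the `images_crop.py` command with different flags. The sketch below only generates those command lines so they can be reviewed before running; it uses the four subfolder names and the per-split frame counts given in the parameter description, and the helper name `build_crop_commands` is hypothetical. Other subfolders can be passed in through the `subfolders` argument.

```python
import shlex

# Subfolder names and frame counts are taken from the parameter description.
SUBFOLDERS = ["Face2Face", "Deepfakes", "FaceSwap", "NeuralTextures"]
FRAMES_PER_SPLIT = {"train": 128, "val": 32, "test": 32}


def build_crop_commands(compression="raw", subfolders=SUBFOLDERS):
    """Return one images_crop.py argument list per (subfolder, split) pair."""
    commands = []
    for subfolder in subfolders:
        for split, num_frames in FRAMES_PER_SPLIT.items():
            commands.append([
                "python", "package_utils/images_crop.py",
                "-d", subfolder,
                "-c", compression,
                "-n", str(num_frames),
                "-t", split,
            ])
    return commands


if __name__ == "__main__":
    # Print the commands for review instead of executing them.
    for cmd in build_crop_commands():
        print(shlex.join(cmd))
```

Each argument list can be handed to `subprocess.run(cmd, check=True)` once the printed commands look right.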

- Download the pretrained Dlib [68] and [81] facial landmark detectors and place them in `/pretrained/`. The 68-point and 81-point detectors are used for BI and SBI synthesis, respectively.

- Run landmark detection and alignment. At the same time, a folder for aligned images (`aligned`) is automatically created with the same directory tree as the original one. Once the script finishes, a file storing the metadata of the data is saved at `processed_data/c0/{SPLIT}_<n_landmarks>_FF++_processed.json`.

      python package_utils/geo_landmarks_extraction.py \
          --config configs/data_preprocessing_c0.yaml \
          --extract_landmarks \
          --save_aligned
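Before moving on, it can be useful to confirm that the metadata files were actually written. The sketch below only formats the documented path pattern and checks for the files; the helper names are hypothetical, and it assumes `{SPLIT}` takes the lowercase values `train`, `val`, and `test` and that `<n_landmarks>` is 68 for BI and 81 for SBI.

```python
from pathlib import Path

# 68 landmarks for BI and 81 for SBI, following the detector description.
LANDMARKS = {"BI": 68, "SBI": 81}


def metadata_path(split, synthesis, root="processed_data/c0"):
    """Build the documented metadata path for one split and synthesis type."""
    n_landmarks = LANDMARKS[synthesis]
    return Path(root) / f"{split}_{n_landmarks}_FF++_processed.json"


def missing_metadata(synthesis, splits=("train", "val", "test"),
                     root="processed_data/c0"):
    """List the metadata files that have not been generated yet."""
    paths = [metadata_path(split, synthesis, root) for split in splits]
    return [p for p in paths if not p.is_file()]
```

For example, `missing_metadata("BI")` returns an empty list once all three BI metadata files exist under `processed_data/c0`.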

- (Optional) Finally, if using BI synthesis, 30 similar landmarks are searched beforehand for each facial query image, to support the online pseudo-fake generation scheme.

      python package_utils/bi_online_generation.py \
          -t search_similar_lms \
          -f processed_data/c0/{SPLIT}_68_FF++_processed.json

  The final annotation file for training is created as `processed_data/c0/dynamic_{SPLIT}BI_FF.json`.
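The similar-landmark search can be pictured as a nearest-neighbour lookup over landmark sets. The sketch below is an illustration only and not the code in `bi_online_generation.py`: it assumes similarity is measured by Euclidean distance between corresponding landmark points, and the function names and the dictionary layout are hypothetical. A real search may add further constraints, such as excluding faces of the same identity.

```python
import math


def landmark_distance(a, b):
    """Euclidean distance between two landmark sets given as lists of (x, y)."""
    return math.sqrt(sum((ax - bx) ** 2 + (ay - by) ** 2
                         for (ax, ay), (bx, by) in zip(a, b)))


def search_similar_landmarks(query_id, landmarks, k=30):
    """Return the ids of the k faces whose landmarks are closest to the query."""
    query = landmarks[query_id]
    candidates = [
        (landmark_distance(query, lms), face_id)
        for face_id, lms in landmarks.items()
        if face_id != query_id  # a face is never matched with itself
    ]
    candidates.sort()
    return [face_id for _, face_id in candidates[:k]]
```

With `k=30` this mirrors the number of candidates mentioned above; precomputing the list once avoids repeating the search during online pseudo-fake generation.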

- Training script

  We offer a number of config files for specific data synthesis. With BI, open `configs/efn4_fpn_hm_adv.yaml`, make sure you set `TRAIN: True` and `FROM_FILE: True`, and run:

      ./scripts/train_efn_adv.sh

  Otherwise, with SBI, use the config file `configs/efn4_fpn_sbi_adv.yaml` and run:

      ./scripts/efn_sbi.sh

- Testing script

  For BI, open `configs/efn4_fpn_hm_adv.yaml`. Evaluation mode is supported with `subtask: eval` in the test section; set `TRAIN: False` and `FROM_FILE: False`, and run:

      ./scripts/test_efn.sh

  Otherwise, for SBI:

      ./scripts/test_sbi.sh

  ⚠️ Please make sure you set the correct path to your downloaded pre-trained weights in the config files.

  ℹ️ Flip test can be used by setting `flip_test: True`.

  ℹ️ A mode for single-image inference is also provided: set `sub_task: test_image` and pass an image path as an argument to `test.py`.
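A flip test commonly means scoring both an image and its horizontal mirror and averaging the two predictions. The sketch below illustrates that general idea under this assumption; it is not taken from `test.py`, which may combine the two passes differently (for example at the heatmap level). Here `model` is any callable returning a fake-probability, and images are plain nested lists.

```python
def hflip(image):
    """Mirror an image stored as a list of rows (each row a list of pixels)."""
    return [row[::-1] for row in image]


def predict_with_flip(model, image):
    """Average the fake-probability of an image and its horizontal mirror."""
    return 0.5 * (model(image) + model(hflip(image)))
```

Averaging over the mirrored view costs a second forward pass per image and tends to reduce sensitivity to left-right asymmetries in the input.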

Please contact dat.nguyen@uni.lu. Questions and discussions are welcome!

We acknowledge the excellent implementation from mmengine, BI, and SBI.

This software is © University of Luxembourg and is licensed under the SnT academic license. See LICENSE.

Please kindly consider citing our papers in your publications.

@InProceedings{Nguyen_2024_CVPR,

author = {Nguyen, Dat and Mejri, Nesryne and Singh, Inder Pal and Kuleshova, Polina and Astrid, Marcella and Kacem, Anis and Ghorbel, Enjie and Aouada, Djamila},

title = {LAA-Net: Localized Artifact Attention Network for Quality-Agnostic and Generalizable Deepfake Detection},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2024},

pages = {17395-17405}

}