Akamai's anti-crawling system is a product designed to protect websites from attacks and to secure API data. It currently uses two layers of protection. One operates at the HTTPS request level, and the other collects information about the current browser and uses it for risk-control interception. How strictly requests are intercepted varies from site to site and from interface to interface.

There are many articles online about bypassing JA3, the TLS client fingerprint checked at the request level. For this I recommend curl_cffi, a third-party Python library.

The site analyzed this time is maersk.com. The main verification relies on the _abck cookie value, which the server returns in response to a request that submits the sensor_data parameter.

If the sensor_data value is valid, a valid _abck is returned, and you can then request the content of the corresponding website. However, _abck expires after a while. Once a request is intercepted with a 403 error, you need to obtain the cookie again.

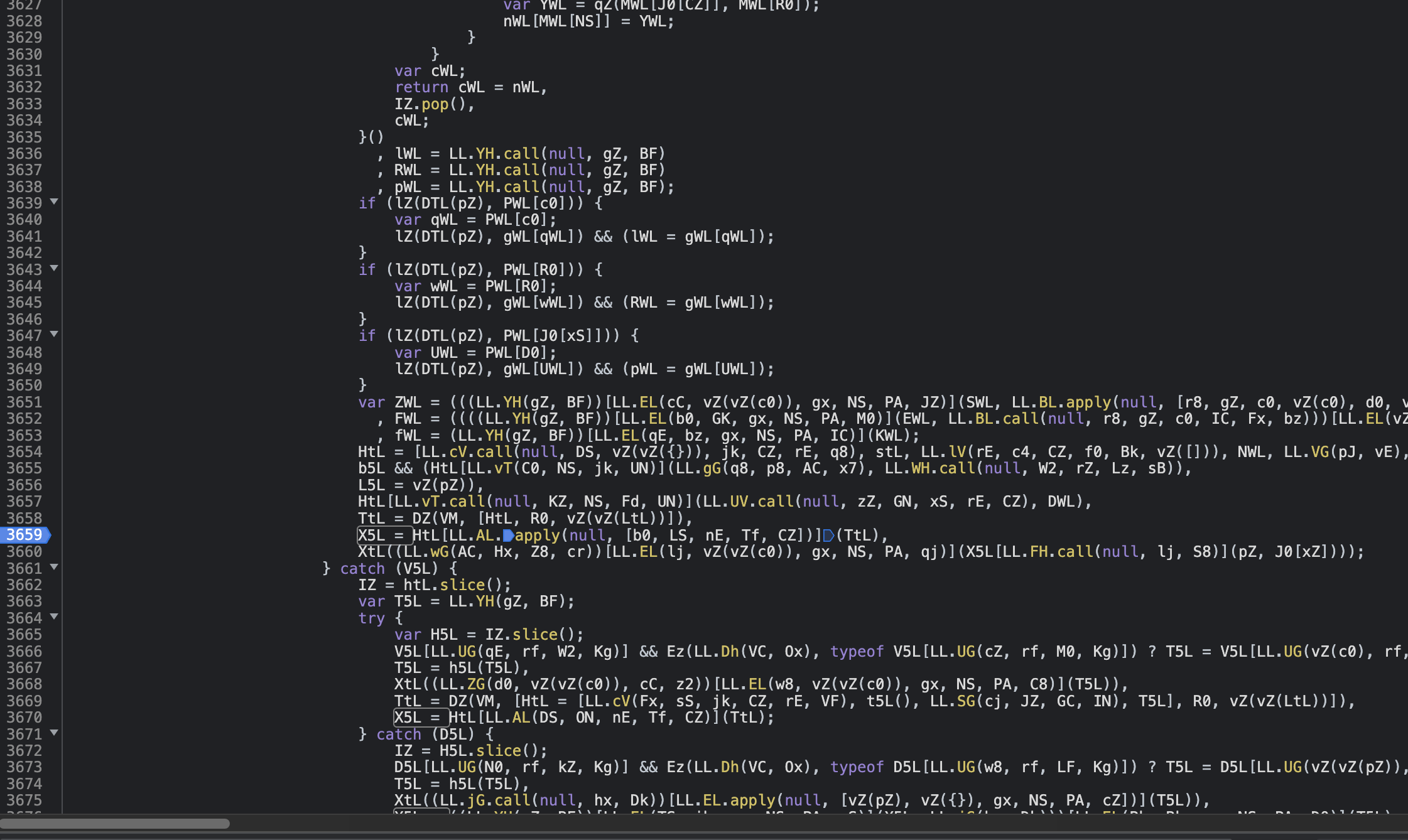

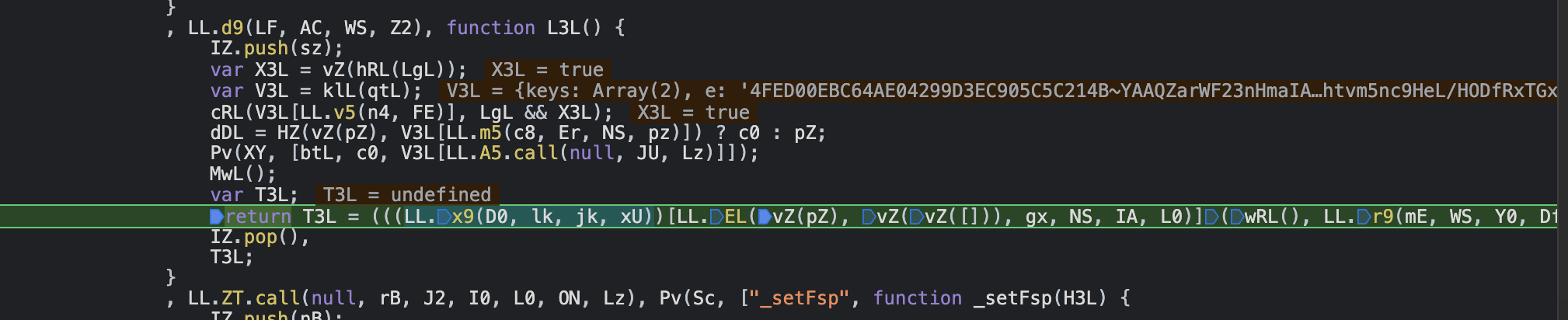

Let's now analyze how the sensor_data parameter is generated.

The JavaScript code responsible for generating this parameter is shown in the image above. It is obfuscated, but it can be de-obfuscated using AST (Abstract Syntax Tree) transformations. If you are interested in the details of AST de-obfuscation, you can refer to the AST knowledge base maintained by an expert named Cai. I won't cover the de-obfuscation process here and went straight to debugging the code.

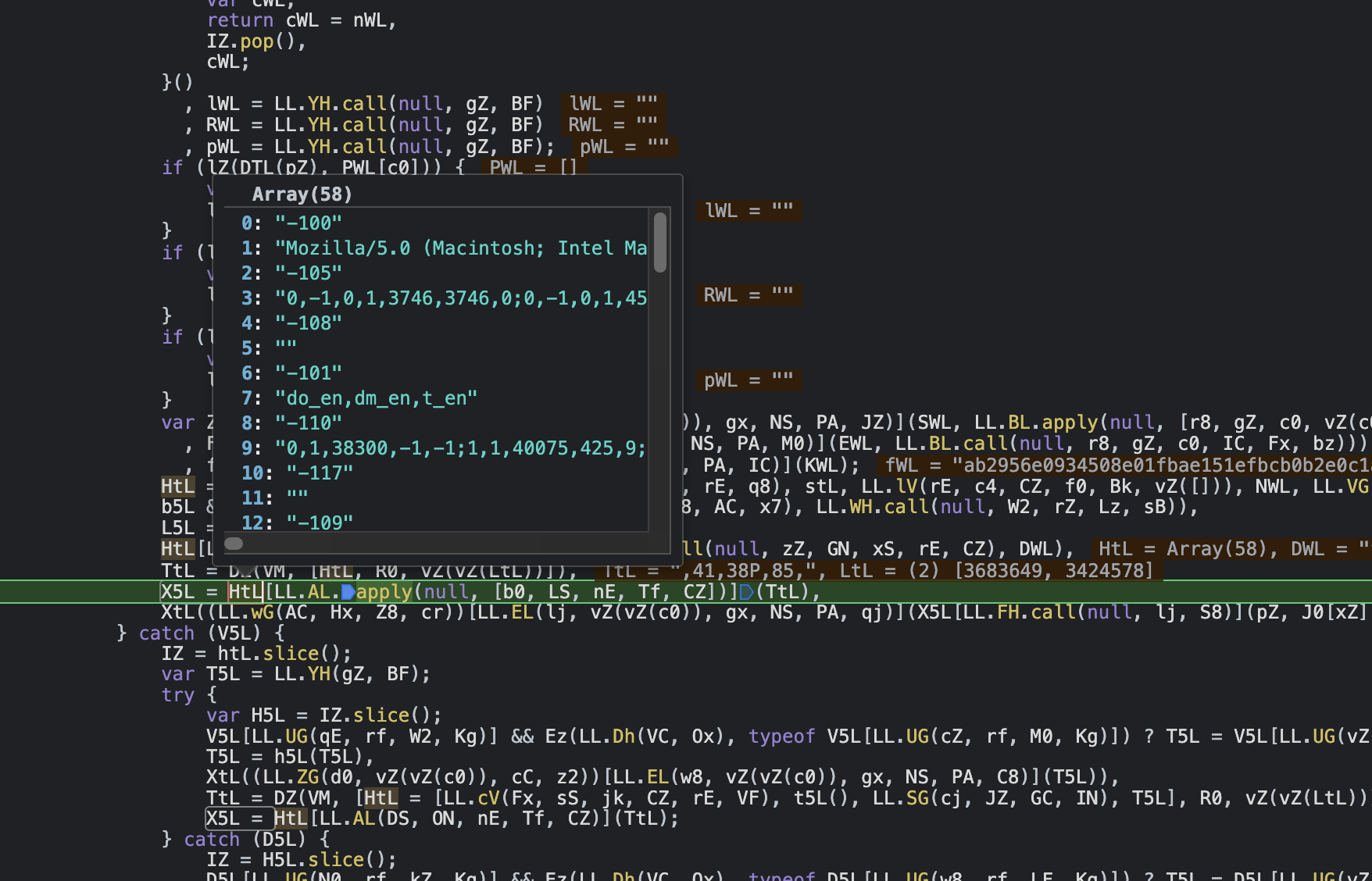

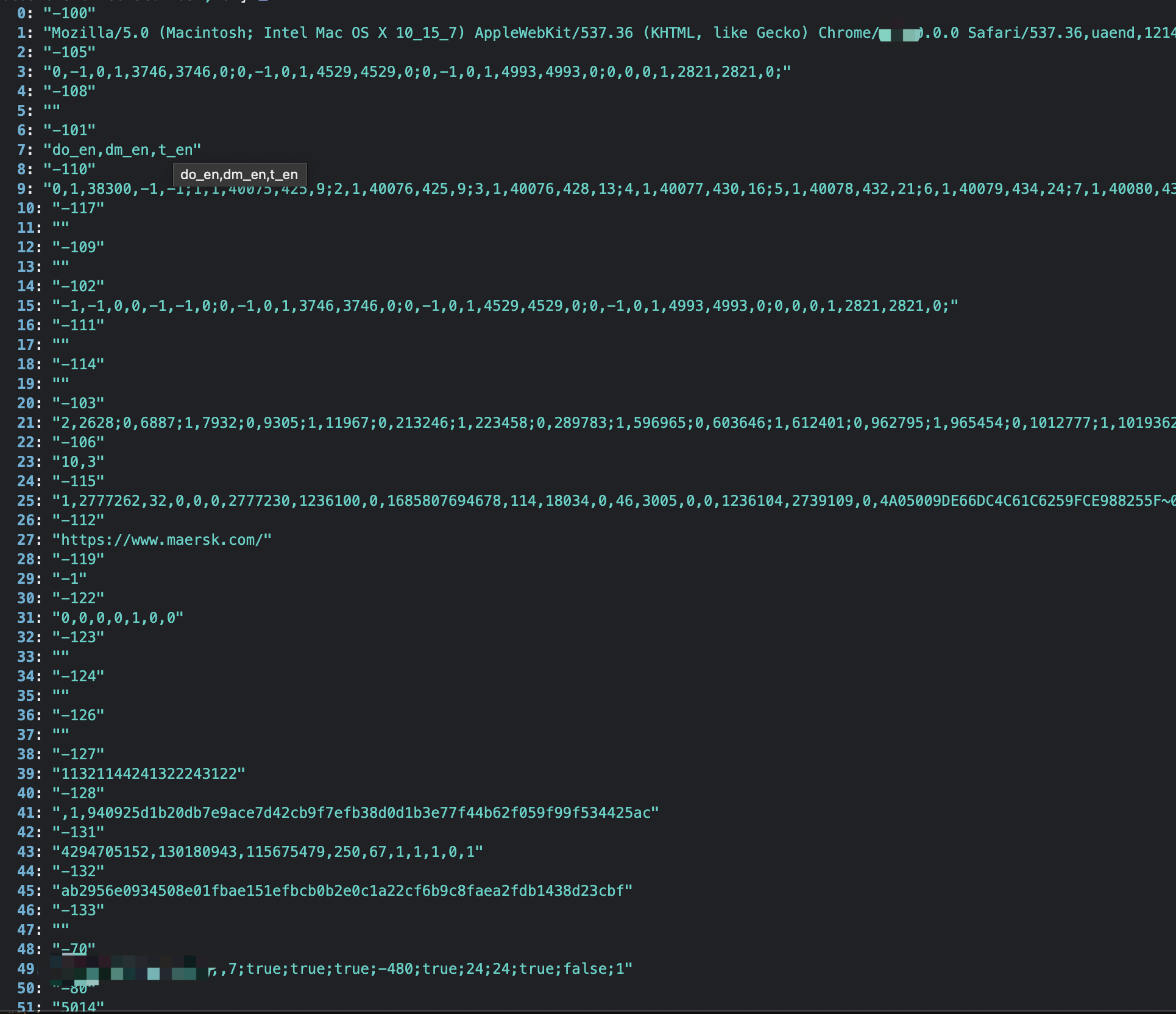

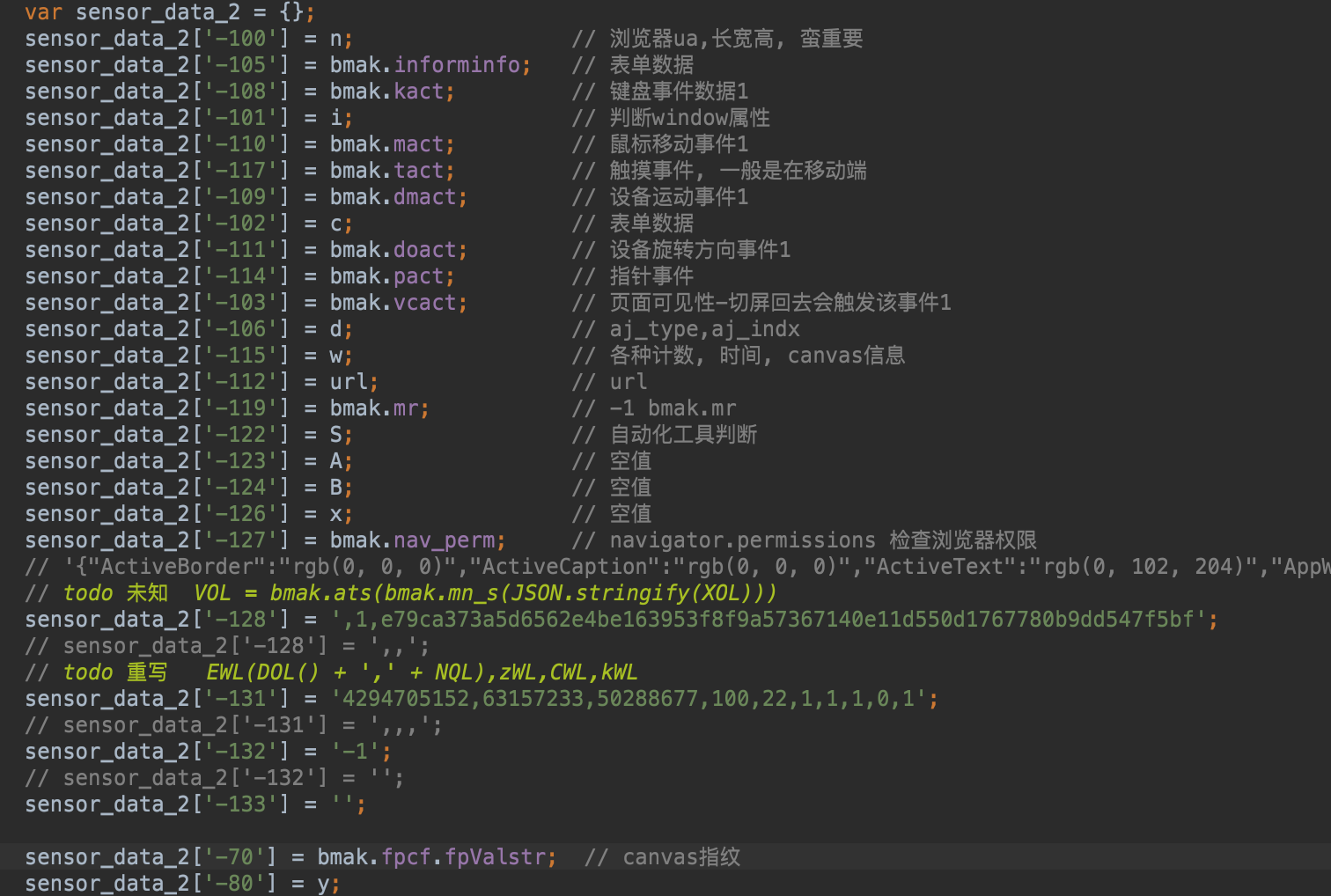

The sensor_data parameter is formed by concatenating and encrypting a 58-element array, as shown above. Let's delve deeper to understand what each element in this array represents.

These images showcase the array's detailed content.

By debugging the JS code, I was able to work out the meaning of several elements in the array. The most important ones turned out to be the canvas fingerprint and the motion trajectory. The canvas fingerprint can't be randomly forged. To get around this, one might collect canvas fingerprints from real browsers and use them as replacements. As for motion trajectories, algorithms can be designed to simulate genuine mouse movements, clicks, and other behaviors.

:::info

When making highly concurrent requests, the simulated browser fingerprint data and motion trajectory data must appear genuine. It is also necessary to switch IPs dynamically, because making numerous requests from a single IP will get it blacklisted. Another important observation is that if the generated parameters aren't realistic enough, the Akamai system might still allow the request but will delay processing it. This latency is noticeable on some critical interfaces.

:::

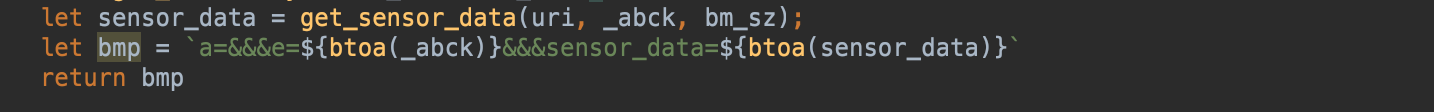

The parameter shown in the image above is sent in the request headers. It is derived from the sensor_data parameter and then base64-encoded, so generating it is relatively straightforward. Let's examine it.
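As a small illustration of the base64 step only, here is a minimal sketch of encoding a string and decoding it back, which is handy for inspecting such a header value while debugging. The function names and the placeholder string are hypothetical, and the sketch does not show how the value is actually derived from sensor_data, since that derivation isn't reproduced here.

```python
import base64


def encode_header_value(derived: str) -> str:
    """Base64-encode a UTF-8 string so it can be carried in a header."""
    return base64.b64encode(derived.encode("utf-8")).decode("ascii")


def decode_header_value(value: str) -> str:
    """Reverse the encoding to inspect the underlying string."""
    return base64.b64decode(value).decode("utf-8")


# Placeholder input (an assumption, not a real derived value).
placeholder = "example-derived-string"
encoded = encode_header_value(placeholder)
print(encoded)
print(decode_header_value(encoded) == placeholder)
```

Decoding a captured value this way is usually the quickest way to see which fields went into it.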

The translation of the relevant code reveals:

This is how it's generated.

With a concurrency of 100, the pass rate is 100%. As you can see, when the sensor_data is generated realistically, the pass rate is very high and requests pass Akamai's checks in less than a second. When the confidence is lower, requests slow down and might take up to 10 seconds to pass. This also depends on the IP address used.

I've wrapped the algorithm for generating sensor_data in an API that can be called directly. If you don't want to research it yourself, you can contact me about the API service, which is paid. My contact details:

Telegram: xwggnp