Official PyTorch implementation of "Multi-Layered Semantic Representation Network for Multi-Label Image Classification" (MSRN).

Abstract

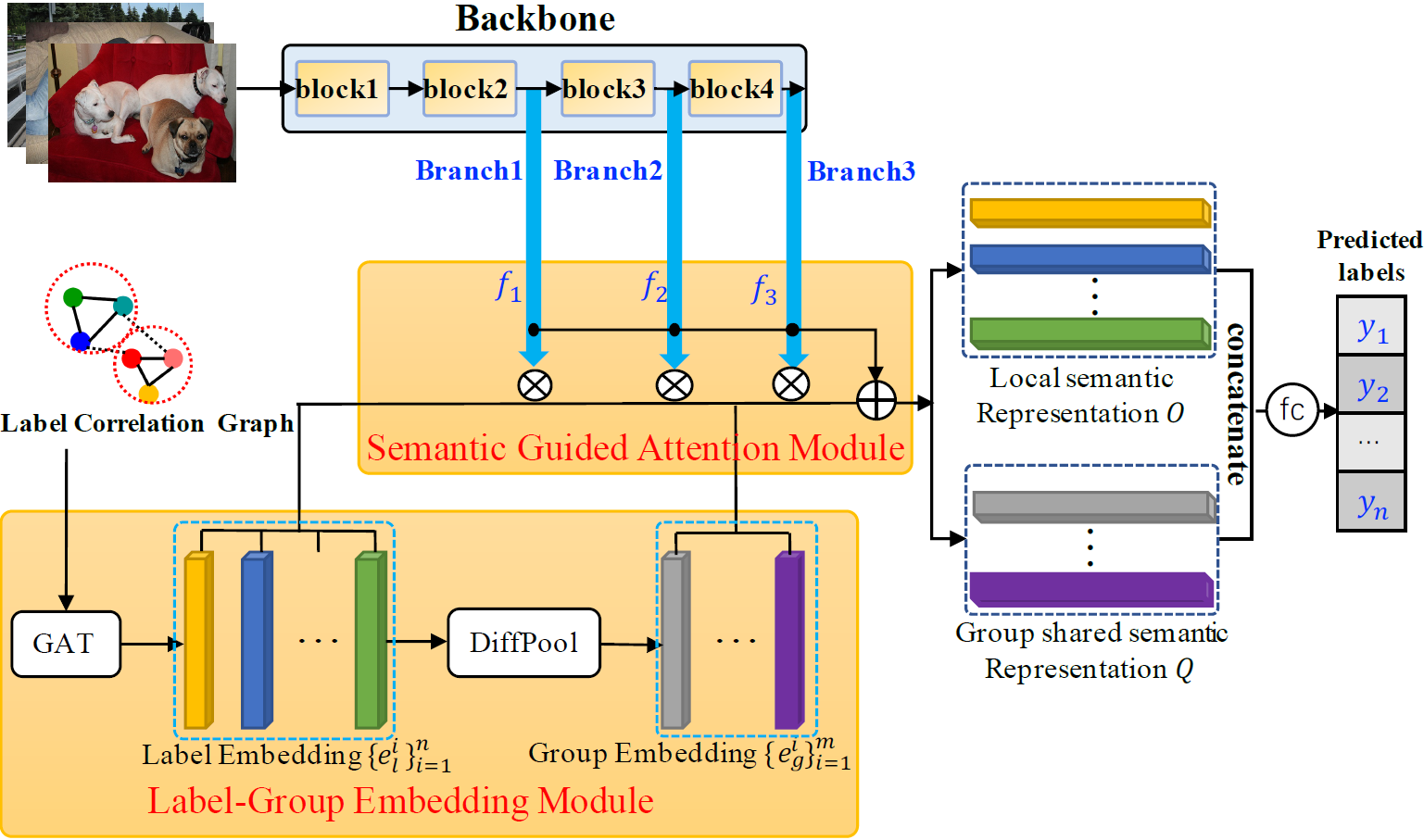

Multi-label image classification (MLIC) is a fundamental and practical task that aims to assign multiple labels to an image. In recent years, many approaches based on deep convolutional neural networks (CNNs) have been proposed that model label correlations to discover the semantics of labels and learn semantic representations of images. This paper advances this research direction by improving both the modeling of label correlations and the learning of semantic representations. On the one hand, besides the local semantics of each label, we propose to further explore global semantics shared by multiple labels. On the other hand, existing approaches mainly learn semantic representations at the last convolutional layer of a CNN. However, it has been noted that image representations from different layers of a CNN capture features at different levels or scales and have different discriminative abilities. We therefore propose to learn semantic representations at multiple convolutional layers. To this end, this paper designs a Multi-layered Semantic Representation Network (MSRN) that discovers both local and global semantics of labels by modeling label correlations, and uses the label semantics to guide semantic representation learning at multiple layers through an attention mechanism. Extensive experiments on four benchmark datasets, VOC 2007, COCO, NUS-WIDE and Apparel, show that the proposed MSRN performs competitively against state-of-the-art models.
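The abstract says label semantics guide attention at several CNN layers, but it does not give the exact formulation. The toy, pure-Python sketch below shows one common form of label-guided attention pooling, under explicit assumptions: each label has a semantic embedding, each layer's spatial features are already projected to that embedding's dimension, and per-layer label scores are simply averaged. All function names are hypothetical; this is not MSRN's actual PyTorch implementation.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Vector) -> Vector:
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def label_attention_pool(features: List[Vector], label_emb: Vector) -> Vector:
    """Attention-pool one layer's spatial features for one label (hypothetical).

    features:  H*W spatial feature vectors of one CNN layer, ASSUMED already
               projected to the label-embedding dimension d.
    label_emb: d-dimensional semantic vector for the label.
    """
    # Attention weight per spatial position = softmax of feature/label similarity.
    weights = softmax([dot(f, label_emb) for f in features])
    d = len(label_emb)
    # Weighted sum of the spatial features gives a label-specific representation.
    return [sum(w * f[k] for w, f in zip(weights, features)) for k in range(d)]


def multi_layer_scores(layers: List[List[Vector]], label_embs: List[Vector]) -> List[float]:
    """Score each label by averaging its attended scores over all layers (assumed fusion)."""
    scores = []
    for emb in label_embs:
        per_layer = [dot(label_attention_pool(feats, emb), emb) for feats in layers]
        scores.append(sum(per_layer) / len(per_layer))
    return scores


# Two toy "layers", each with 2 spatial positions and d = 2, and two labels.
layers = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[2.0, 0.0], [0.0, 0.5]],
]
label_embs = [[1.0, 0.0], [0.0, 1.0]]
scores = multi_layer_scores(layers, label_embs)  # label 0 scores higher than label 1
```

Averaging across layers is only the simplest fusion choice; a learned weighting per layer would be an equally plausible variant.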


Install the following packages:

- python==3.6

- numpy==1.19.5

- torch==1.7.0+cu101

- torchnet==0.0.4

- torchvision==0.8.1+cu101

- tqdm==4.41.1

- prefetch-generator==1.0.1

Download the ImageNet-pretrained ResNet-101 weights from: https://download.pytorch.org/models/resnet101-5d3b4d8f.pth

Rename the file to resnet101.pth.tar and put it in the folder pretrained:

!mkdir pretrained

!cp -p /path/resnet101.pth.tar /path/MSRN/pretrained
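The two notebook-style shell commands above can also be done from Python. This is a minimal sketch using only the standard library; `install_pretrained` is a hypothetical helper, not part of the repository, and the paths in the comment are the same placeholders as above.

```python
import shutil
import tempfile
from pathlib import Path


def install_pretrained(downloaded: str, repo_root: str = ".") -> Path:
    """Copy the downloaded ResNet-101 weights to <repo_root>/pretrained/resnet101.pth.tar."""
    src = Path(downloaded)
    if not src.is_file():
        raise FileNotFoundError(src)
    target_dir = Path(repo_root) / "pretrained"
    target_dir.mkdir(parents=True, exist_ok=True)  # equivalent of `mkdir pretrained`
    dst = target_dir / "resnet101.pth.tar"         # rename while copying
    shutil.copy2(src, dst)                         # copy2 keeps timestamps, like `cp -p`
    return dst


# Real usage (placeholder paths): install_pretrained("/path/resnet101-5d3b4d8f.pth", "/path/MSRN")
# Quick self-check with a throwaway file:
with tempfile.TemporaryDirectory() as tmp:
    fake = Path(tmp) / "resnet101-5d3b4d8f.pth"
    fake.write_bytes(b"weights")
    installed = install_pretrained(str(fake), tmp)
    ok = installed.read_bytes() == b"weights"
```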

Download the datasets:

- VOC 2007: http://host.robots.ox.ac.uk/pascal/VOC/voc2007/

- COCO: https://cocodataset.org/#download

- NUS-WIDE: refer to https://github.com/Alibaba-MIIL/ASL/blob/main/MODEL_ZOO.md

- Apparel: https://www.kaggle.com/kaiska/apparel-dataset

Download the trained checkpoints:

- checkpoint/voc (GoogleDrive)

- checkpoint/coco (GoogleDrive)

- checkpoint/nus-wide (GoogleDrive)

- checkpoint/Apparel (GoogleDrive)

or

[Baidu](https://pan.baidu.com/s/1jgaSxURuxR1Z3AV_-hJ-KA) (extraction code: 1qes)

Main options:

- lr: learning rate

- batch-size: number of images per batch

- image-size: size of the image

- epochs: number of training epochs

- e: evaluate model on testing set

- resume: path to checkpoint
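To show how the options listed above map onto a command line, here is a hypothetical `argparse` parser that mirrors them. It is a sketch, not the parser in the repository's `demo_*.py` scripts: the `--lr` and `--epochs` spellings are assumptions, and no defaults are set because the actual defaults are not documented here.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical parser mirroring the documented options; the real scripts may differ.
    p = argparse.ArgumentParser(description="MSRN demo (sketch)")
    p.add_argument("data", help="dataset root, e.g. data/voc")
    p.add_argument("--lr", type=float, help="learning rate")
    p.add_argument("--batch-size", type=int, help="number of images per batch")
    p.add_argument("--image-size", type=int, help="size of the image")
    p.add_argument("--epochs", type=int, help="number of training epochs")
    p.add_argument("-e", dest="evaluate", action="store_true",
                   help="evaluate model on testing set")
    p.add_argument("--resume", help="path to checkpoint")
    return p


# Parse the VOC 2007 evaluation command used in this README.
args = build_parser().parse_args([
    "data/voc", "--image-size", "448", "--batch-size", "24",
    "-e", "--resume", "checkpoint/voc/voc_checkpoint.pth.tar",
])
```

Note that `argparse` turns `--batch-size` into the attribute `args.batch_size`, so hyphenated flags are read with underscores in code.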

Evaluate the trained checkpoints on each dataset:

VOC 2007:

python3 demo_voc2007_gcn.py data/voc --image-size 448 --batch-size 24 -e --resume checkpoint/voc/voc_checkpoint.pth.tar

COCO:

python3 demo_coco_gcn.py data/coco --image-size 448 --batch-size 12 -e --resume checkpoint/coco/coco_checkpoint.pth.tar

NUS-WIDE:

python3 demo_coco_gcn.py data/coco --image-size 448 --batch-size 12 -e --resume checkpoint/nuswide/nuswide_checkpoint.pth.tar

Apparel:

python3 demo_apparel_gcn.py data/coco --image-size 448 --batch-size 12 -e --resume checkpoint/apparel/apparel_checkpoint.pth.tar

@inproceedings{2021MSRN,
  author = {},
  title = {},
  booktitle = {},
  year = {}
}