SIFS: An Advanced Swarm Intelligence-Based Toolbox for Feature Selection in High-Dimensional Biomedical Datasets

SIFS is an advanced toolbox based on swarm intelligence for feature selection in high-dimensional biomedical datasets. Specifically, SIFS is a machine learning platform with a graphical interface that enables the construction of automated machine learning pipelines for computational analysis and prediction using locally uploaded high-dimensional biomedical datasets. SIFS provides four main modules (feature normalization and selection, clustering and dimensionality reduction, machine learning, and data visualization) that allow biologists and bioinformaticians to perform feature engineering and analysis, machine learning model construction, performance evaluation, statistical analysis, and data visualization without additional programming. For feature selection, SIFS integrates 55 swarm intelligence algorithms and 5 traditional feature selection algorithms; for machine learning, SIFS integrates 21 algorithms (12 traditional classification algorithms, two ensemble learning frameworks, and seven deep learning methods). Additionally, the SIFS graphical user interface (GUI) is designed to let biologists run these analyses without writing code, improving efficiency and user experience compared to script-based pipelines.

SIFS is an open-source Python toolbox. It runs in a Python environment (Python 3.6 or higher) and is compatible with Windows, macOS, and Linux. Before installing and running SIFS, install the following third-party dependencies in the Python environment: PyQt5, qdarkstyle, sip, numpy (1.18.5), pandas (1.0.5), scikit-learn (0.23.1), scipy (1.5.0), torch (≥1.3.1), lightgbm (2.3.1), xgboost (1.0.2), matplotlib (3.1.1), seaborn, and joblib. SIFS also uses the standard-library modules threading, datetime, platform, pickle, copy, math, collections, itertools, warnings, random, multiprocessing, and time, which ship with Python and need no separate installation. For convenience, we strongly recommend installing the Anaconda Python environment, which can be freely downloaded from https://www.anaconda.com/. Detailed steps for installing the dependencies are as follows:

- Download and install the Anaconda platform from https://www.anaconda.com/products/individual. Conda can create and manage virtual environments, allowing users to use different Python versions and dependencies in different projects and keeping projects isolated from one another.

- Download and install PyTorch, an open-source deep learning framework that provides flexible and efficient tools for building and training deep neural network models. For PyTorch installation, please refer to https://pytorch.org/get-started/locally/.

- Install the remaining Python packages and dependencies, such as lightgbm and qdarkstyle.
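Before launching SIFS, it can help to confirm that the third-party packages listed above are importable. The following is a minimal sketch, not part of SIFS: the helper name `missing_packages` is hypothetical, and the list uses import names (scikit-learn is imported as `sklearn`).

```python
import importlib.util

# Import names of the third-party packages listed above.
# Note: scikit-learn is imported as "sklearn".
REQUIRED = ["PyQt5", "qdarkstyle", "numpy", "pandas", "sklearn", "scipy",
            "torch", "lightgbm", "xgboost", "matplotlib", "seaborn", "joblib"]


def missing_packages(names):
    """Return the names that cannot be found in the current environment."""
    missing = []
    for name in names:
        try:
            found = importlib.util.find_spec(name) is not None
        except (ImportError, ValueError):
            found = False
        if not found:
            missing.append(name)
    return missing


if __name__ == "__main__":
    absent = missing_packages(REQUIRED)
    print("Missing packages:", ", ".join(absent) if absent else "none")
```

This check only tests whether each package can be located; it does not verify the version numbers given above.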

To run SIFS, navigate to the installation folder of SIFS and execute the 'main.py' script as follows:

    python main.py

Once SIFS is launched, the interface is displayed as shown in Figure 1.

Here, we provide a step-by-step user guide that demonstrates the SIFS workflow by running the examples provided in the "examples" directory. SIFS implements four fundamental functionalities: feature selection, feature analysis (clustering and dimensionality reduction), predictor construction, and data/result visualization.

| Module name | Function |

|---|---|

| Feature Normalization/Selection | 1) 55 population intelligent feature algorithms + 5 traditional feature selection algorithms 2) 2 feature normalization methods |

| Cluster / Dimensionality Reduction | 1) 10 clustering algorithms 2) 3 three-dimensional dimensionality reduction algorithms |

| Machine Learning | 21 machine learning algorithms (12 traditional classification algorithms, 2 ensemble learning frameworks, and 7 deep learning methods) |

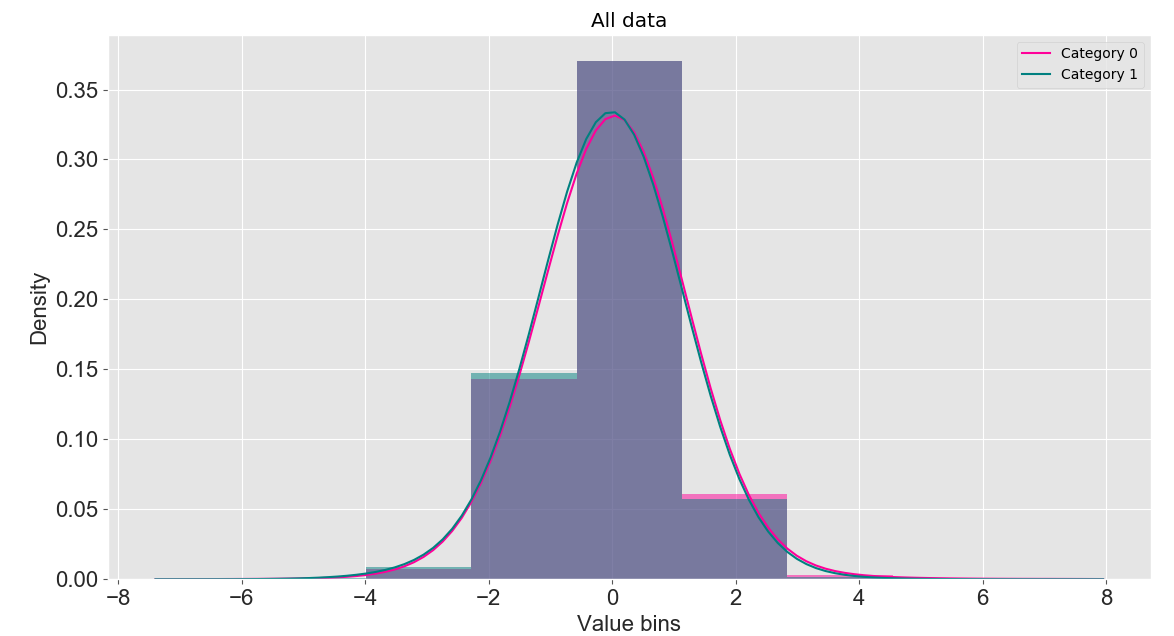

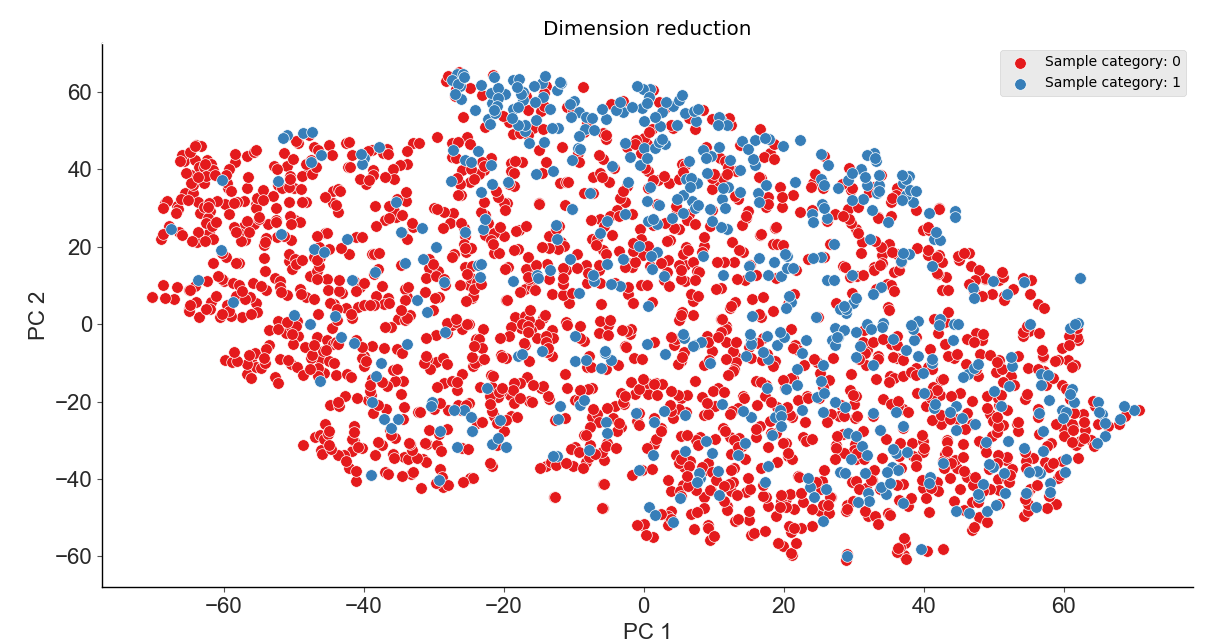

| Data visualization | Data visualization (scatter plots for clustering and dimensionality reduction results, histograms and kernel density plots for data distribution, ROC and PRC curves for performance evaluation) |

For feature analysis and predictor construction, SIFS supports four file formats: LIBSVM format, Comma-Separated Values (CSV), Tab-Separated Values (TSV), and Waikato Environment for Knowledge Analysis (WEKA) format. For LIBSVM, CSV, and TSV formats, the first column must be the sample label. For examples of these file formats, please refer to the "data" directory of the software.
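To make the "label in the first column" convention concrete, here is a minimal sketch of reading a CSV or TSV file into labels and feature vectors using only the standard library. The function name `load_labeled_table` is hypothetical, and the sketch assumes a file with no header row and purely numeric features; the actual SIFS loader may handle more cases.

```python
import csv
import io


def load_labeled_table(handle, delimiter=","):
    """Split rows into labels (first column) and float feature vectors."""
    labels, features = [], []
    for row in csv.reader(handle, delimiter=delimiter):
        if not row:  # skip blank lines
            continue
        labels.append(row[0])
        features.append([float(value) for value in row[1:]])
    return labels, features


# Tiny in-memory example: two samples with three features each.
sample = io.StringIO("1,0.5,1.2,3.0\n0,0.1,0.0,2.5\n")
labels, features = load_labeled_table(sample)
```

For a TSV file, the same function can be called with `delimiter="\t"`.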

SIFS facilitates four primary functionalities: feature selection, feature analysis, predictor construction, and data/result visualization. Within the SIFS module, there are three panels:

- The "Feature Normalization/Selection" panel is used for feature selection and normalization.

- The "Cluster/Dimensionality Reduction" panel is dedicated to feature dimensionality reduction and clustering.

- The "Machine Learning" panel is used for building prediction models.

The "Feature Normalization/Selection" panel provides 60 feature selection algorithms (55 swarm intelligence-based algorithms and 5 traditional algorithms) and 2 feature normalization methods for data preprocessing.

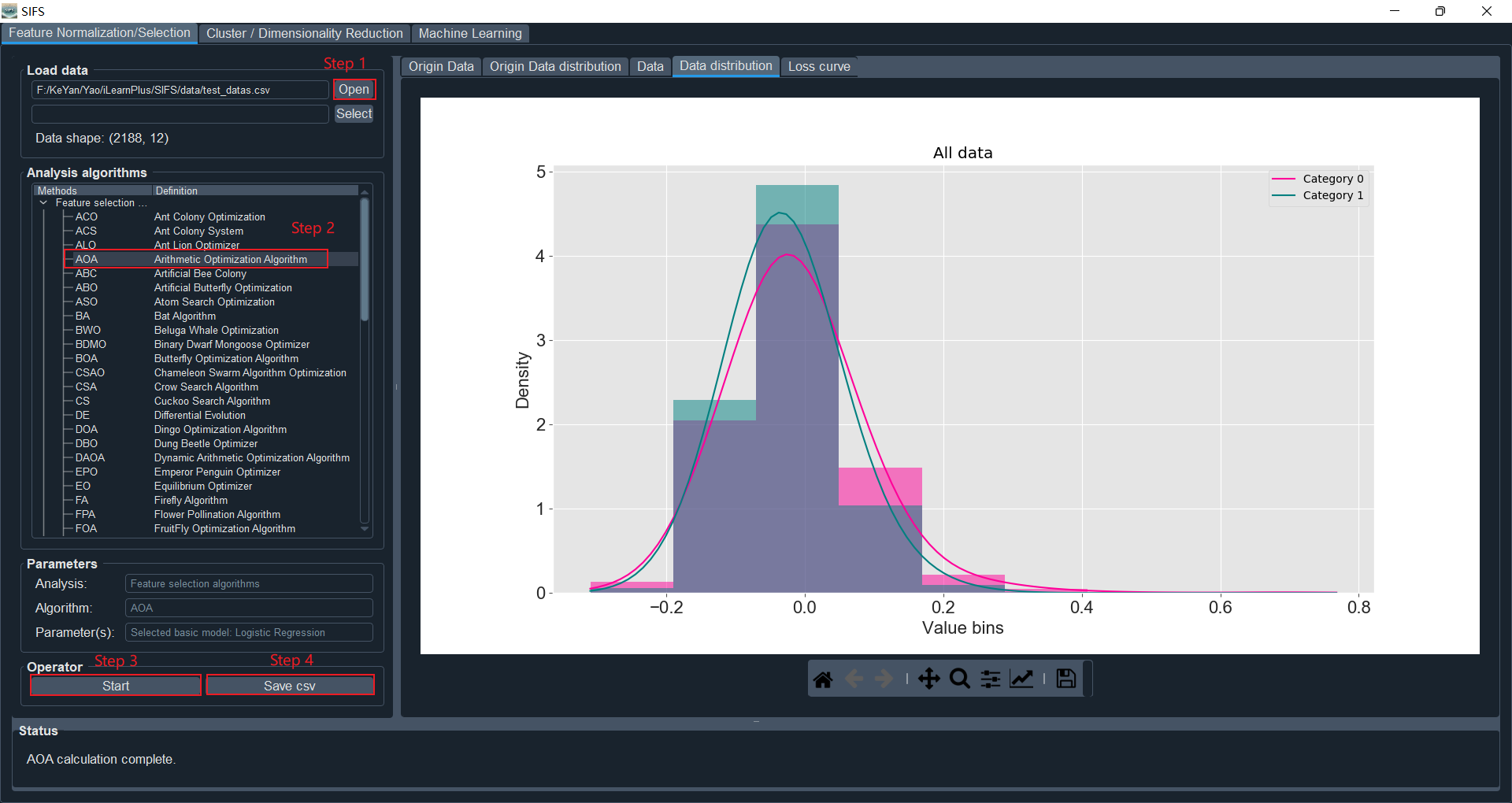

Click the "Open" button in the "Feature Normalization/Selection" panel and select the data file (for example, "test_datas.csv" in the "data" directory of the SIFS package).

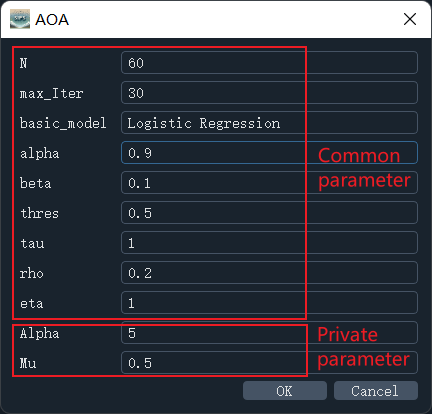

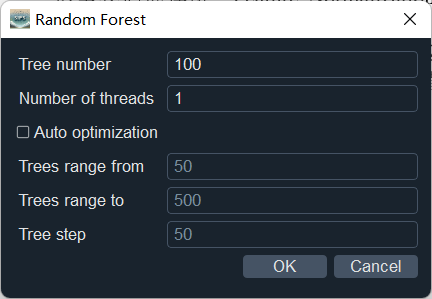

Select the feature selection algorithm under the "Feature selection data" column (taking AOA as an example), and set the corresponding parameters in the parameter dialog box (default parameters are used here). Parameters are divided into common parameters, shared by all algorithms, and private parameters, specific to each feature selection algorithm (see Figure 2). SIFS currently supports 17 base models for evaluating and optimizing feature selection algorithms, including Logistic Regression, K-Nearest Neighbors, and XGBoost. The "Parameters" section displays the processing type (feature selection or normalization), the selected algorithm, and the base model used for feature selection optimization.
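The role of the base model can be illustrated with a wrapper-style fitness function, in which a candidate feature subset is scored by the base model's error plus a small penalty on subset size. This is a sketch of a commonly used formulation, not the SIFS implementation: the manual does not describe the exact objective in `losses/jFitnessFunction.py`, and the names `wrapper_fitness`, `error_rate_fn`, and the weight `alpha=0.99` are assumptions made for illustration.

```python
def wrapper_fitness(mask, error_rate_fn, alpha=0.99):
    """Score a binary feature mask; lower is better.

    mask          -- sequence of 0/1 flags, one per feature
    error_rate_fn -- hypothetical callable that trains/evaluates the base
                     model on the selected feature indices and returns an
                     error rate in [0, 1]
    alpha         -- assumed trade-off between error and subset size
    """
    selected = [index for index, keep in enumerate(mask) if keep]
    if not selected:
        return 1.0  # an empty subset gets the worst possible score
    error = error_rate_fn(selected)
    size_ratio = len(selected) / len(mask)
    return alpha * error + (1.0 - alpha) * size_ratio
```

A swarm intelligence algorithm would repeatedly propose masks and keep those with the lowest fitness; the per-iteration best value is the kind of quantity a loss curve would track.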

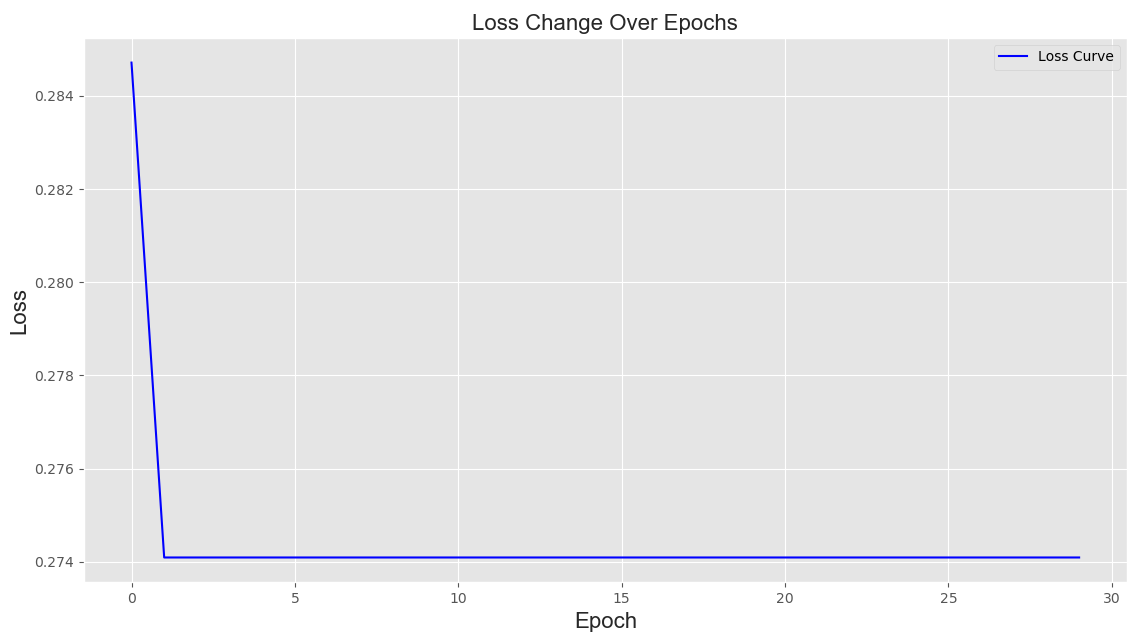

Click the "Start" button to run feature selection. The data before feature selection and its graphical representation are displayed in the "Origin Data" and "Origin Data distribution" panels, respectively. The data and graphical representation after feature selection are shown in the "Data" and "Data distribution" panels, respectively. Histograms and kernel density plots are used to display the distribution of feature values. Additionally, the loss curve of model optimization during the feature selection process is shown in the "Loss Curve" panel.

Click the "Save" button to save the generated data after feature selection. SIFS supports saving the computed features in four formats: LIBSVM, CSV, TSV, and WEKA, facilitating direct use of these features in subsequent analysis, predictor construction, etc. Additionally, SIFS provides the TSV_1 format, which includes sample and feature labels. Moreover, all plots in SIFS are generated using the matplotlib library and can be saved in various image formats such as PNG, JPG, PDF, TIFF, etc.
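As an illustration of one of the output formats, the sketch below writes a single sample as a LIBSVM-style line (`label index:value ...` with 1-based feature indices). The helper `to_libsvm_line` is hypothetical, and omitting zero-valued features is an assumption based on the usual sparse LIBSVM convention; SIFS may write every feature explicitly.

```python
def to_libsvm_line(label, features):
    """Format one sample as 'label index:value ...' with 1-based indices."""
    pairs = [
        f"{index}:{value}"
        for index, value in enumerate(features, start=1)
        if value != 0  # assumed: zero entries are omitted (sparse format)
    ]
    return " ".join([str(label)] + pairs)
```

For example, a sample with label `1` and features `[0.5, 0.0, 2.0]` becomes a line containing the label followed by entries for features 1 and 3 only.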

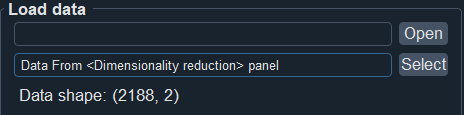

Note 1: In this module, besides supporting uploading data from local files, you can also choose to use dimensionality-reduced data obtained from the "Cluster/Dimensionality Reduction" module for feature selection.

Note 2: The loss curve of model optimization during the feature selection process will be displayed in the "Loss Curve" panel, as shown below:

Note 3: In addition to being used for feature selection, this module can also be used for feature normalization, as shown in the following figure:

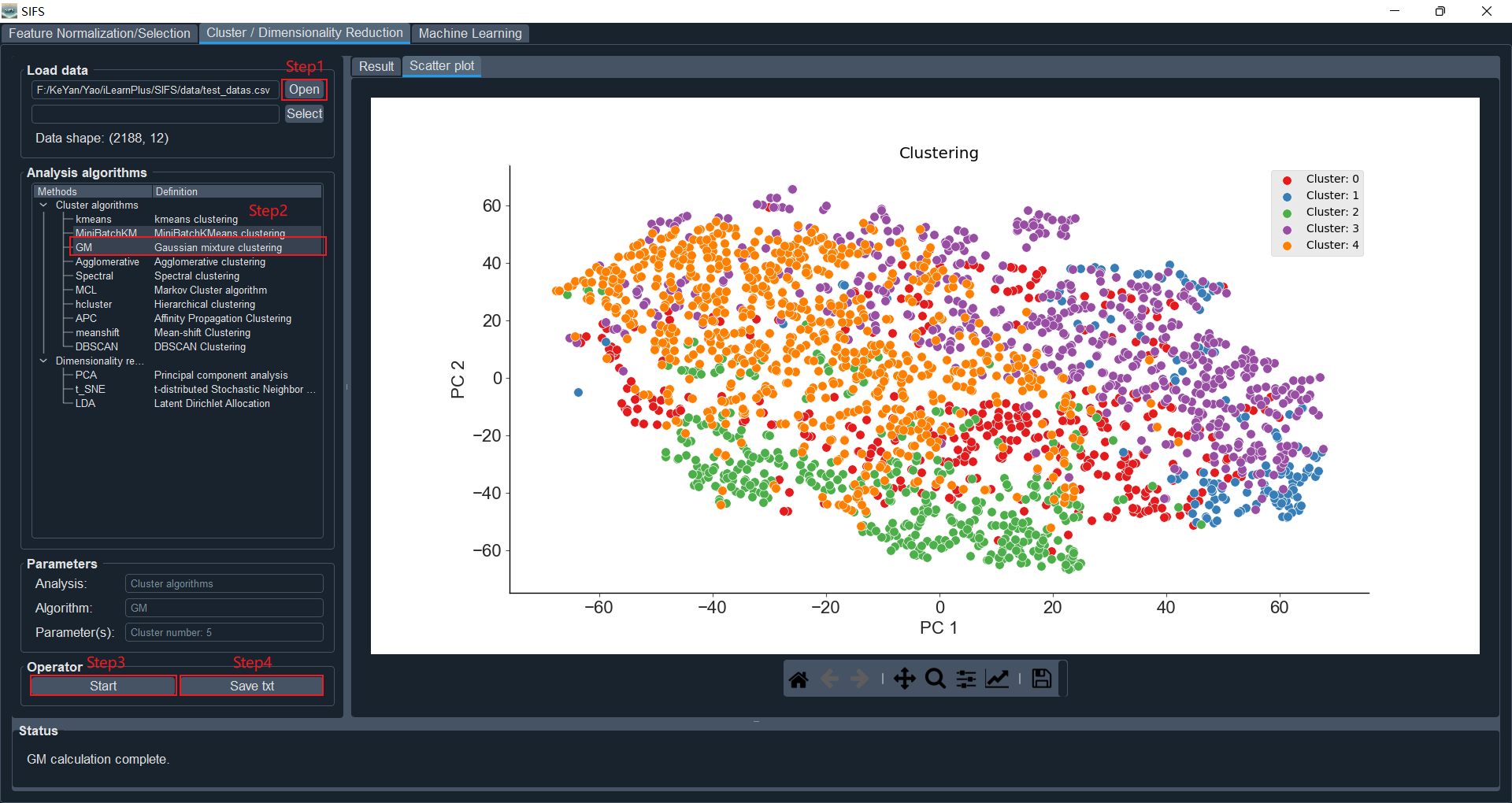
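The manual does not name the two normalization methods. Assuming they are the widely used z-score and min-max scalings, the following sketch shows what each does to a single feature column; the function names are hypothetical and the code is not taken from SIFS.

```python
import statistics


def zscore(values):
    """Center a feature column to mean 0 and scale to unit (population) std."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(value - mean) / std for value in values]


def minmax(values):
    """Rescale a feature column linearly to the range [0, 1]."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(value - low) / (high - low) for value in values]
```

Z-score scaling suits features with roughly symmetric distributions, while min-max scaling keeps values in a fixed range, which some base models expect.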

SIFS provides various options to facilitate feature analysis, including 10 feature clustering methods, 3 dimensionality reduction methods, 2 feature normalization methods, and 60 feature selection methods. In the SIFS module, the "Cluster/Dimensionality Reduction" panel is used to deploy clustering and dimensionality reduction algorithms, while the "Feature Normalization/Selection" panel is used to implement feature normalization and selection functionalities. Here, we take clustering as an example:

There are two methods to load data for analysis:

- Open an encoded file.

- Select data generated from other panels.

Here, we will load data from a file. Click the "Open" button and select the "test_datas.csv" file in the "data" directory.

Here, we select the "GM" clustering algorithm as a demonstration and set the number of clusters to 5. To visualize the clustering results, the clustered data are reduced to two dimensions.

Click the "Start" button to begin the analysis process. The clustering results and graphical representations will be displayed in the "Result" and "Scatter plot" panels, respectively. Here, we use a scatter plot to visualize the clustering results.

Click the "Save" button to save the generated clustering results.

In addition to being used for feature clustering, this module can also be used for feature dimensionality reduction. Here, we will use PCA to reduce the original data to 3 dimensions (the visualization results will be displayed in 2 dimensions) as shown below:

SIFS offers 12 traditional classification algorithms, two ensemble learning frameworks, and seven deep learning methods. The implementation of these algorithms in SIFS is based on four third-party machine learning platforms: scikit-learn (3), XGBoost (4), LightGBM (5), and PyTorch (6). Among them, the CNN algorithm serves as the core machine learning algorithm.

There are two methods to load data for analysis:

- Load data files from local storage.

- Select data generated from other panels.

Here, we load the training and testing data from the "Feature Normalization/Selection" panel.

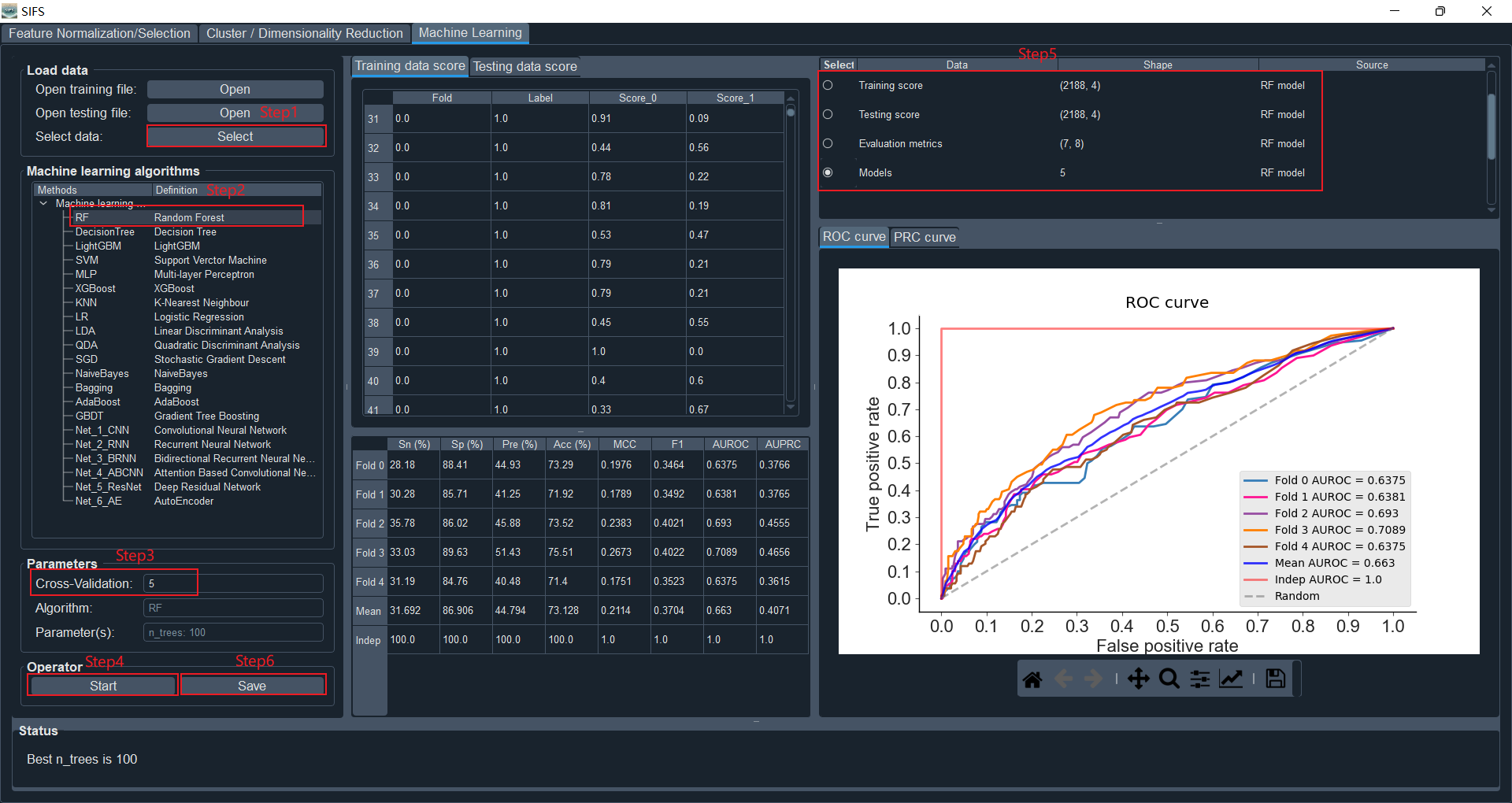

Select "Random Forest" and use the default parameter values.

Set k to 5 for k-fold cross-validation.

Click the "Start" button to initiate the analysis process. The evaluation metrics for prediction scores, k-fold cross-validation, independent testing, as well as ROC and PRC curves, will be displayed.
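For readers unfamiliar with k-fold cross-validation, the sketch below shows how k = 5 partitions the samples: each fold serves once as the held-out set while the remaining folds are used for training. The function `kfold_indices` is hypothetical and uses a plain shuffled split; whether SIFS shuffles or stratifies by class is not stated in this manual.

```python
import random


def kfold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # fixed seed for reproducibility
    folds = [indices[start::k] for start in range(k)]
    for held_out in range(k):
        test = folds[held_out]
        train = [
            index
            for fold_number, fold in enumerate(folds)
            if fold_number != held_out
            for index in fold
        ]
        yield train, test
```

The reported cross-validation metrics are then typically the average of the per-fold scores.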

The predictor construction module of SIFS supports saving files such as indicator scores, evaluation metrics, and trained models.
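Saved models use the ".pkl" extension, which suggests Python's pickle serialization (pickle is among the modules SIFS uses). The following is a minimal sketch of a save/load round trip under that assumption; the helper names and the stand-in dictionary are hypothetical, and the real SIFS save routine may store additional information.

```python
import os
import pickle
import tempfile


def save_model(model, path):
    """Serialize a trained model object to a .pkl file."""
    with open(path, "wb") as handle:
        pickle.dump(model, handle)


def load_model(path):
    """Load a model object back from a .pkl file."""
    with open(path, "rb") as handle:
        return pickle.load(handle)


# Round trip with a stand-in object in place of a real trained model.
with tempfile.TemporaryDirectory() as folder:
    model_path = os.path.join(folder, "example_model.pkl")
    save_model({"algorithm": "Random Forest", "k": 5}, model_path)
    restored = load_model(model_path)
```

Only load ".pkl" files from trusted sources, since unpickling can execute arbitrary code.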

As described in this article, SIFS supports both binary and multi-class classification tasks. For binary classification problems, SIFS supports 8 commonly used metrics: sensitivity (Sn), specificity (Sp), accuracy (Acc), Matthews correlation coefficient (MCC), precision, F1-score, area under the ROC curve (AUROC), and area under the precision-recall curve (AUPRC). The definitions of Sn, Sp, Acc, MCC, Precision, and F1-score are as follows:

$$\mathrm{Sn} = \frac{TP}{TP + FN}$$

$$\mathrm{Sp} = \frac{TN}{TN + FP}$$

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Sn}}{\mathrm{Precision} + \mathrm{Sn}}$$

where TP, FP, TN, and FN represent the numbers of true positives, false positives, true negatives, and false negatives, respectively. The values of AUROC and AUPRC, calculated from the Receiver-Operating Characteristic (ROC) curve and the Precision-Recall curve, respectively, range from 0 to 1. Higher values of AUROC and AUPRC indicate better predictive performance of the model.
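The six count-based binary metrics named above can be computed directly from TP, FP, TN, and FN using their standard confusion-matrix definitions. The sketch below is illustrative and not taken from `EvaluationMetrics.py`; the function name and the choice to return 0.0 when a denominator is zero are assumptions.

```python
import math


def binary_metrics(tp, fp, tn, fn):
    """Compute Sn, Sp, Acc, MCC, Precision, and F1 from confusion counts."""

    def ratio(numerator, denominator):
        # Assumed convention: an undefined ratio is reported as 0.0.
        return numerator / denominator if denominator else 0.0

    mcc_denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "Sn": ratio(tp, tp + fn),
        "Sp": ratio(tn, tn + fp),
        "Acc": ratio(tp + tn, tp + fp + tn + fn),
        "MCC": ratio(tp * tn - fp * fn, mcc_denominator),
        "Precision": ratio(tp, tp + fp),
        # Equivalent to 2 * Precision * Sn / (Precision + Sn).
        "F1": ratio(2 * tp, 2 * tp + fp + fn),
    }
```

AUROC and AUPRC are not included because they depend on the full ranking of prediction scores rather than on a single set of counts.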

For multi-class classification tasks, performance is typically evaluated using accuracy (Acc), which is defined for class i as:

$$\mathrm{Acc}(i) = \frac{TP(i) + TN(i)}{TP(i) + TN(i) + FP(i) + FN(i)}$$

where TP(i), FP(i), TN(i), and FN(i) represent the number of samples correctly predicted as class i, the number of non-class i samples predicted as class i, the number of samples correctly predicted as non-class i, and the number of class i samples predicted as any other class, respectively.
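The per-class counts can be obtained by treating class i as "positive" and all other classes as "negative" (one-vs-rest). The sketch below follows the standard convention in which FP(i) counts non-class i samples predicted as class i; since FP(i) and FN(i) enter the accuracy only through their sum, the result does not depend on which of the two is given which name. Function names are hypothetical.

```python
def per_class_counts(y_true, y_pred, cls):
    """Return (TP, FP, TN, FN) for one class in a one-vs-rest view."""
    tp = fp = tn = fn = 0
    for truth, prediction in zip(y_true, y_pred):
        if prediction == cls and truth == cls:
            tp += 1
        elif prediction == cls:
            fp += 1  # predicted as cls, but belongs to another class
        elif truth == cls:
            fn += 1  # belongs to cls, but predicted as another class
        else:
            tn += 1
    return tp, fp, tn, fn


def per_class_accuracy(y_true, y_pred, cls):
    """Compute (TP + TN) / (TP + TN + FP + FN) for one class."""
    tp, fp, tn, fn = per_class_counts(y_true, y_pred, cls)
    total = tp + fp + tn + fn
    return (tp + tn) / total if total else 0.0
```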

To help users locate a given part of the SIFS source code, the following table gives a brief guide to the directory layout:

| Main Directory | Subdirectory / File | Content |
|---|---|---|
| Data | CSV and other file formats | Example files stored in this directory |
| Document | SIFS_manual.pdf | User manual |
| images | Image files | Icons and image files |
| losses | jFitnessFunction.py | Fitness functions for feature selection optimization |
| models | Model files ending with ".pkl" | By default, models will be saved in this directory |
| util | Feature_selection | 55 swarm intelligence feature selection algorithms |
| util | DataAnalysis.py | Classes for feature analysis, including clustering, feature normalization, selection, and dimensionality reduction |
| util | EvaluationMetrics.py | Classes for computing evaluation metrics |
| util | InputDialog.py | GUI classes for all parameter-setting dialogs |
| util | MachineLearning.py | Classes for model construction, including all traditional machine learning algorithms |
| util | MCL.py | Markov clustering algorithm |
| util | ModelMetrics.py | Classes for storing evaluation metric data and data for plotting ROC and PRC curves |
| util | Nets.py | Architectures of the deep learning algorithms |
| util | PlotWidgets.py | GUI classes for plotting, such as ROC and PRC curves, scatter plots, and kernel density plots |
| util | TableWidget.py | GUI for table display |
| Main.py | -- | GUI for launching the program |
| SIFS.py | -- | GUI for the SIFS module |