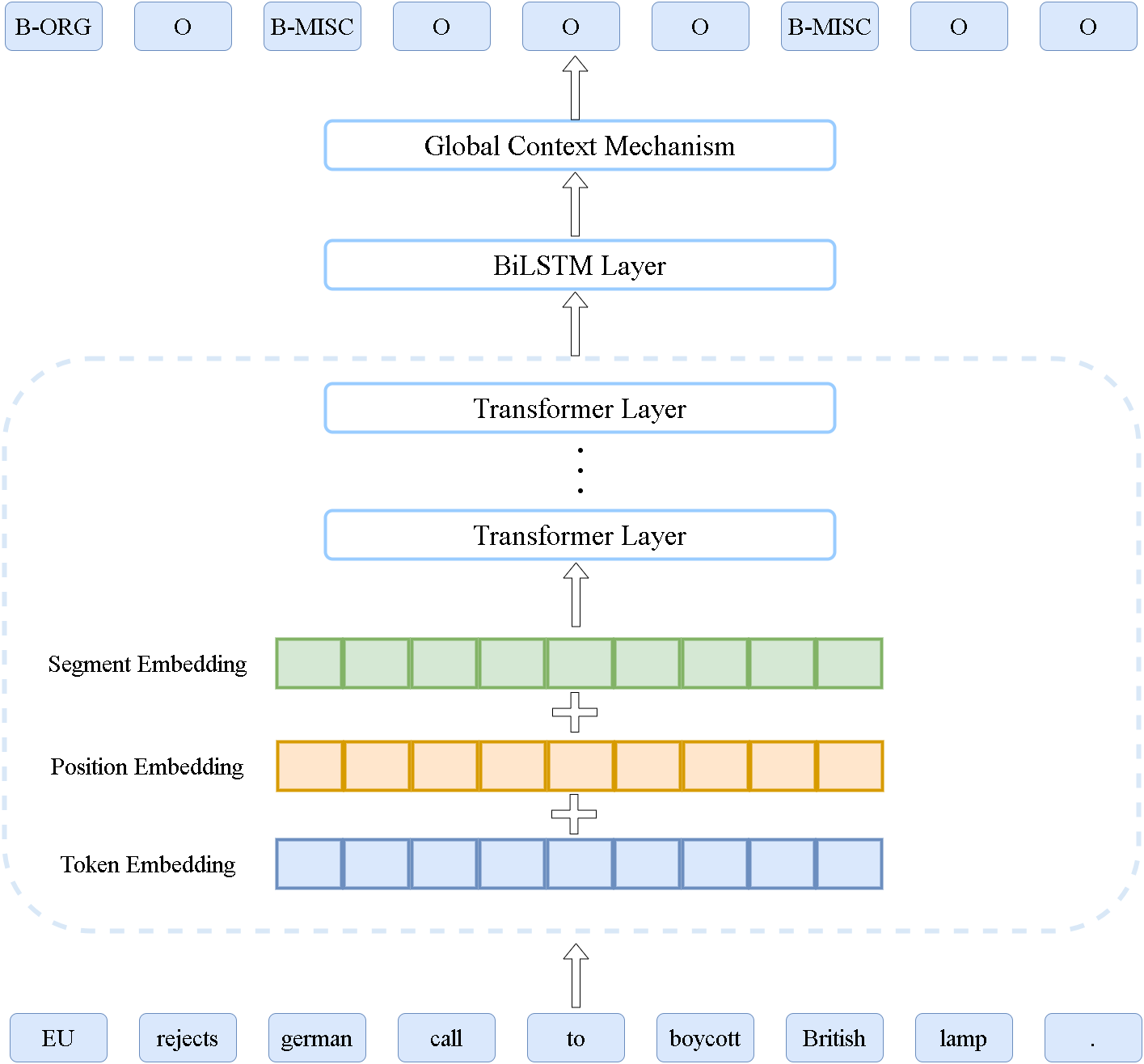

Global Context Mechanism for Sequence Labeling

- python==3.8.13

- torch==1.13.0

- transformers==4.27.1

- tqdm==4.64.0

- numpy==1.22.4
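The pinned versions above can be checked with a short script. This is a minimal sketch and is not part of the repository. It reports exact-version mismatches only, so a newer but compatible release is also flagged. It ignores local build tags such as `+cu117` on torch wheels (an assumption about how you installed torch).

```python
# Minimal sketch: compare installed versions with the pins listed above.
import platform
from importlib.metadata import PackageNotFoundError, version
from typing import Callable, Dict, List, Optional, Tuple

# Pins copied from the requirements list in this README.
PINS: Dict[str, str] = {
    "torch": "1.13.0",
    "transformers": "4.27.1",
    "tqdm": "4.64.0",
    "numpy": "1.22.4",
}
PYTHON_PIN = "3.8.13"


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


def check_pins(
    pins: Dict[str, str],
    get_version: Callable[[str], Optional[str]] = installed_version,
) -> List[Tuple[str, str, Optional[str]]]:
    """Return (package, expected, found) for every pin that is not satisfied."""
    mismatches = []
    for package, expected in pins.items():
        found = get_version(package)
        # Ignore local build tags such as "+cu117" when comparing.
        base = found.split("+")[0] if found is not None else None
        if base != expected:
            mismatches.append((package, expected, found))
    return mismatches


if __name__ == "__main__":
    if platform.python_version() != PYTHON_PIN:
        print(f"python: expected {PYTHON_PIN}, found {platform.python_version()}")
    for package, expected, found in check_pins(PINS):
        print(f"{package}: expected {expected}, found {found or 'not installed'}")
```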

- Aspect-based sentiment analysis (ABSA): all ABSA datasets can be found in (Li, 2019)

- Named entity recognition (NER): Conll2003, wnut2017, weibo

- Part-of-speech (POS) tagging: Universal Dependencies (UD)

Learning rates for each layer:

| Layers | Rest14 | Rest15 | Rest16 | Laptop14 | Conll2003 | Wnut2017 | Weibo | UD | |
|---|---|---|---|---|---|---|---|---|---|
| BERT | 1E-5 | 1E-5 | 1E-5 | 1E-5 | 1E-5 | 1E-5 | 1E-5 | 1E-5 | 1E-5 |
| BiLSTM | 5E-4 | 1E-3 | 5E-4 | 5E-4 | 1E-3 | 1E-3 | 1E-3 | 1E-3 | 1E-3 |
| context | 1E-3 | 1E-3 | 1E-5 | 1E-5 | 1E-3 | 1E-3 | 1E-3 | 1E-4 | 1E-3 |
| classification | 1E-4 | 1E-4 | 1E-4 | 1E-4 | 1E-4 | 1E-4 | 1E-4 | 1E-4 | 1E-4 |
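Per-layer learning rates like these are usually applied through optimizer parameter groups. The sketch below is an illustration, not the repository's code: the parameter-name prefixes (`bert.`, `tagger.`, `context.`, `classifier.`) and the function `build_param_groups` are hypothetical, and the values come from the Rest14 column.

```python
# Minimal sketch: map the per-layer learning rates above onto optimizer parameter groups.
from typing import Any, Dict, Iterable, List, Tuple

# Hypothetical parameter-name prefixes for each layer; the repo's module names may differ.
LAYER_PREFIXES: Dict[str, str] = {
    "bert": "bert.",
    "bilstm": "tagger.",
    "context": "context.",
    "classification": "classifier.",
}

# Learning rates from the Rest14 column of the table above.
REST14_LR: Dict[str, float] = {
    "bert": 1e-5,
    "bilstm": 5e-4,
    "context": 1e-3,
    "classification": 1e-4,
}


def build_param_groups(
    named_params: Iterable[Tuple[str, Any]],
    lr_by_layer: Dict[str, float],
    prefixes: Dict[str, str] = LAYER_PREFIXES,
) -> List[Dict[str, Any]]:
    """Split (name, parameter) pairs into groups, each carrying its own "lr"."""
    groups: Dict[str, List[Any]] = {layer: [] for layer in lr_by_layer}
    for name, param in named_params:
        # The first layer whose prefix matches the parameter name wins.
        layer = next(
            (candidate for candidate in lr_by_layer if name.startswith(prefixes[candidate])),
            None,
        )
        if layer is None:
            raise ValueError(f"no learning rate configured for parameter {name!r}")
        groups[layer].append(param)
    # Skip empty groups, e.g. the BiLSTM when it is disabled.
    return [
        {"params": params, "lr": lr_by_layer[layer]}
        for layer, params in groups.items()
        if params
    ]
```

With PyTorch, the result could be passed to an optimizer such as `torch.optim.AdamW(build_param_groups(model.named_parameters(), REST14_LR))`, where each group's `lr` overrides the optimizer default. AdamW is an assumption; this README does not name the optimizer.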

bert-base-cased and bert-base-chinese are used for the English and Chinese datasets, respectively. Batch sizes:

- ABSA: Rest14 32, Rest15 16, Rest16 32, Laptop14 16.

- NER: 16 for all datasets.

- POS tagging: 16 for all datasets.
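The batch sizes above can be kept in a small lookup table. This is a sketch: the lowercase dataset keys and the `batch_size_for` helper are hypothetical, and only `absa` and `rest14` appear in this README's example command.

```python
# Minimal sketch: the batch sizes listed above as a lookup table.
# Dataset keys are hypothetical lowercase names.
ABSA_BATCH_SIZES = {"rest14": 32, "rest15": 16, "rest16": 32, "laptop14": 16}
DEFAULT_BATCH_SIZE = 16  # NER and POS tagging use 16 for every dataset


def batch_size_for(dataset_type: str, dataset_name: str) -> int:
    """Return the batch size this README lists for a task/dataset pair."""
    if dataset_type == "absa":
        # Raises KeyError for an ABSA dataset not listed above.
        return ABSA_BATCH_SIZES[dataset_name]
    return DEFAULT_BATCH_SIZE
```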

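Example command (ABSA on Rest14 with the BiLSTM tagger and the context mechanism enabled):

    python main.py --dataset_type absa --dataset_name rest14 --use_tagger True --use_context True

Arguments: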

- model_name: name of the pretrained model. default: bert-base-cased

- cache_dir: directory in which the pretrained model is saved.

- use_tagger: whether to use the BiLSTM tagger. default: True

- use_context: whether to use the context mechanism. default: False

- context_mechanism: which context mechanism to use. default: global

- mode: whether to use a pretrained language model. default: pretrained

- tagger_size: output dimension of the BiLSTM. default: 600
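The options above map naturally onto `argparse`. The parser below is a hypothetical sketch, not the repository's `main.py`: making `--dataset_type` and `--dataset_name` required is an assumption, since no defaults are given for them. It uses a `str2bool` helper because `type=bool` would turn the string `"False"` into `True`.

```python
# Minimal sketch of a parser for the options above (hypothetical; main.py may differ).
import argparse


def str2bool(value: str) -> bool:
    """Parse "True"/"False"-style strings; type=bool would treat "False" as True."""
    lowered = value.lower()
    if lowered in ("true", "1", "yes"):
        return True
    if lowered in ("false", "0", "no"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Global context mechanism for sequence labeling")
    parser.add_argument("--dataset_type", required=True)  # e.g. absa
    parser.add_argument("--dataset_name", required=True)  # e.g. rest14
    parser.add_argument("--model_name", default="bert-base-cased")
    parser.add_argument("--cache_dir", default=None)
    parser.add_argument("--use_tagger", type=str2bool, default=True)
    parser.add_argument("--use_context", type=str2bool, default=False)
    parser.add_argument("--context_mechanism", default="global")
    parser.add_argument("--mode", default="pretrained")
    parser.add_argument("--tagger_size", type=int, default=600)
    return parser


if __name__ == "__main__":
    print(build_parser().parse_args())
```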

If your dataset uses a different format, write a new reader function and pass it as a parameter when constructing the Dataset classes.
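A custom reader could look like the sketch below. Everything here is hypothetical: the "token, tab, label" line format with blank lines between sentences, and the names `read_tab_separated` and `SequenceLabelingDataset`, are illustrations rather than the repository's actual format or classes.

```python
# Minimal sketch: a custom reader passed to a Dataset-style class.
# Assumes a hypothetical "token<TAB>label" format with blank lines between sentences.
from typing import Callable, List, Tuple

Sentence = Tuple[List[str], List[str]]  # (tokens, labels)


def read_tab_separated(path: str) -> List[Sentence]:
    sentences: List[Sentence] = []
    tokens: List[str] = []
    labels: List[str] = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line:
                # A blank line closes the current sentence.
                if tokens:
                    sentences.append((tokens, labels))
                    tokens, labels = [], []
                continue
            token, label = line.split("\t")
            tokens.append(token)
            labels.append(label)
    if tokens:  # the file may not end with a blank line
        sentences.append((tokens, labels))
    return sentences


class SequenceLabelingDataset:
    """Minimal map-style dataset: the reader decides how the file is parsed."""

    def __init__(self, path: str, reader: Callable[[str], List[Sentence]]):
        self.examples = reader(path)

    def __len__(self) -> int:
        return len(self.examples)

    def __getitem__(self, index: int) -> Sentence:
        return self.examples[index]
```

Because the class implements `__len__` and `__getitem__`, it behaves like a map-style dataset; swapping formats only means passing a different reader.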

Rename the files in each dataset directory to train.txt, valid.txt, and test.txt. Format samples are provided in each dataset directory.
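Before training, you can check that a dataset directory holds the three split files. This is a small sketch; the helper name `missing_splits` is hypothetical and no directory layout is assumed beyond the file names.

```python
# Minimal sketch: report which of the expected split files are missing.
from pathlib import Path
from typing import List

SPLIT_FILES = ("train.txt", "valid.txt", "test.txt")


def missing_splits(dataset_dir: str) -> List[str]:
    """Return the expected split file names that are absent from dataset_dir."""
    root = Path(dataset_dir)
    return [name for name in SPLIT_FILES if not (root / name).is_file()]
```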

Results of each model on each dataset:

| Layers | Rest14 | Rest15 | Rest16 | Laptop14 | Conll2003 | Wnut2017 | Weibo | UD | |
|---|---|---|---|---|---|---|---|---|---|
| BERT | 69.75 | 57.07 | 65.95 | 58.49 | 91.51 | 43.59 | 68.09 | 95.56 | 96.85 |
| BERT-BiLSTM | 73.47 | 61.14 | 71.05 | 61.12 | 91.85 | 46.95 | 68.86 | 95.66 | 95.90 |
| BERT-BiLSTM-context | 73.84 | 63.24 | 71.51 | 62.92 | 91.91 | 48.02 | 69.84 | 95.62 | 97.01 |
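The gain from adding the context mechanism can be read off the table directly. The sketch below computes it for the four ABSA columns only, using the BERT-BiLSTM and BERT-BiLSTM-context scores copied from the table; it introduces no new results.

```python
# Minimal sketch: per-dataset gain of BERT-BiLSTM-context over BERT-BiLSTM
# on the ABSA columns, using scores copied from the table above.
ABSA_DATASETS = ["Rest14", "Rest15", "Rest16", "Laptop14"]
BERT_BILSTM = [73.47, 61.14, 71.05, 61.12]
BERT_BILSTM_CONTEXT = [73.84, 63.24, 71.51, 62.92]


def gains(baseline, improved):
    """Absolute difference per dataset, rounded to two decimals like the table."""
    return [round(b - a, 2) for a, b in zip(baseline, improved)]


for name, delta in zip(ABSA_DATASETS, gains(BERT_BILSTM, BERT_BILSTM_CONTEXT)):
    print(f"{name}: {delta:+.2f}")
```

This prints gains of +0.37, +2.10, +0.46, and +1.80 points for Rest14, Rest15, Rest16, and Laptop14.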