This is a repository for Unity Machine Learning Agents (ML-Agents) and reinforcement learning (RL).

Unity has released an awesome tool for making reinforcement learning environments: Unity Machine Learning

I usually make 2D RL environments with pygame: pygame environment repository

Thanks to Unity, I am now trying to make some 3D RL environments.

I wrote a summary of RL algorithms in the pygame environment repository.

Some of the environments are made with purchased models, so it is hard to provide the raw Unity code for them. However, some simple environments are made with simple or free models. I will provide the raw Unity code for those environments if I solve the capacity problem.

Software

- Windows 7 (64-bit), Ubuntu 16.04

- Python 3.5.2

- Anaconda 4.2.0

- Tensorflow-gpu 1.3.0

- Unity version 2017.2.0f3 Personal

- opencv3 3.1.0

Hardware

- CPU: Intel(R) Core(TM) i7-4790K CPU @ 4.00GHz

- GPU: GeForce GTX 1080Ti

- Memory: 8GB

I added some environments and assets for building ML-Agents environments.

- ML-agents: the basic ML-Agents v0.3 environment from the official ML-Agents GitHub repository

- Assets: assets for making games

This project was one of the projects of Machine Learning Camp Jeju 2017.

After the camp, the simulator was changed to Unity ML-Agents. I also wrote a paper about this project, and it was accepted to Intelligent Vehicle Symposium 2018.

The repository link for this project is as follows.

The agent in this environment is a vehicle. The obstacles are 8 other kinds of vehicles. If the agent's vehicle hits another vehicle, it receives a negative reward and the game restarts. If the vehicle hits the start, it receives a positive reward and the game continues. The specific description of the environment is as follows.
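The reward rule described above can be sketched in Python as follows. This is only an illustration, not the code used inside the Unity environment: the function name `vehicle_step_outcome`, the reward magnitudes of -1.0 and +1.0, and the 0.0 reward for uneventful steps are all assumptions, since the text only says "minus" and "plus" reward.

```python
# Hypothetical reward magnitudes: the README only states "minus" and "plus".
COLLISION_REWARD = -1.0
START_REWARD = 1.0
NEUTRAL_REWARD = 0.0  # assumed reward for steps where nothing happens


def vehicle_step_outcome(hit_vehicle, hit_start):
    """Return (reward, done) for one step of the vehicle environment."""
    if hit_vehicle:
        # Hitting another vehicle gives a negative reward and restarts the game.
        return COLLISION_REWARD, True
    if hit_start:
        # Hitting the start gives a positive reward and the game goes on.
        return START_REWARD, False
    return NEUTRAL_REWARD, False


if __name__ == "__main__":
    print(vehicle_step_outcome(hit_vehicle=True, hit_start=False))
    print(vehicle_step_outcome(hit_vehicle=False, hit_start=True))
```

A collision is checked first, so a step that triggers both events is treated as a restart; that ordering is also an assumption.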

The papers referenced to implement the algorithm in the demo above are as follows.

Download links for this environment are as follows.

- Vehicle Environment Dynamic Obs Windows Link

- Vehicle Environment Dynamic Obs Mac Link

- Vehicle Environment Dynamic Obs Linux Link

Yeah~~ This environment won the ML-Agents Challenge!!! 👑

The agent in this environment is a vehicle. The obstacles are static tire barriers. If the vehicle hits an obstacle, it receives a negative reward and the game restarts. If the vehicle hits the start, it receives a positive reward and the game continues. The specific description of the environment is as follows.

A sample video of this game is as follows.

Game demo youtube video

The papers referenced to implement the algorithm in the demo above are as follows.

Download links for this environment are as follows.

This is similar to the famous game Flappy Bird.

I implemented this game based on the Unity Lecture

The yellow bird moves up and down. The bird must not collide with the ground or the columns. Also, if it goes above the screen, the game ends.

The rules of Flappy Bird are as follows.

+1 Reward

- Agent passes through the columns

-1 Reward

- Agent collides with the columns

- Agent collides with the ground

- Agent moves above the screen

Terminal conditions

- Same as the -1 reward conditions

Download links for this environment are as follows.
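The reward and terminal rules above can be written as a small Python function. This is a minimal sketch, not the code inside the Unity game: the `BirdEvent` class, its field names, and the function `flappy_step_outcome` are hypothetical, and the 0.0 reward for steps where nothing happens is an assumption.

```python
from dataclasses import dataclass


@dataclass
class BirdEvent:
    """Hypothetical per-step event flags for the Flappy Bird environment."""
    passed_columns: bool = False
    hit_column: bool = False
    hit_ground: bool = False
    above_screen: bool = False


def flappy_step_outcome(event):
    """Return (reward, done) following the rules listed above."""
    # Every -1 reward condition is also a terminal condition.
    if event.hit_column or event.hit_ground or event.above_screen:
        return -1.0, True
    # Passing through the columns gives +1 and the episode continues.
    if event.passed_columns:
        return 1.0, False
    # Assumed: no reward on steps where none of the events occur.
    return 0.0, False


if __name__ == "__main__":
    print(flappy_step_outcome(BirdEvent(passed_columns=True)))
    print(flappy_step_outcome(BirdEvent(hit_ground=True)))
```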

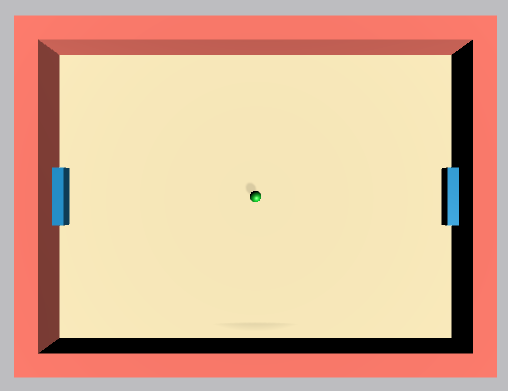

This is a simple and popular environment for testing deep reinforcement learning algorithms.

Two bars have to hit the ball to win the game. In my environment, the left bar is the agent and the right bar is the enemy. The enemy is invincible, so it can hit every ball. In every episode, the ball is fired in a random direction.

The rules of Pong are as follows.

- Agent hits the ball: reward +1

- Agent misses the ball: reward -1

Terminal conditions

- Agent misses the ball

- Agent hits the ball 5 times in a row

Download links for this environment are as follows.
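The Pong rules above need a counter for consecutive hits, which can be sketched in Python like this. The class `PongEpisode` and its method names are hypothetical and only illustrate the rules; they are not taken from the Unity project.

```python
class PongEpisode:
    """Hypothetical tracker for one Pong episode under the rules above."""

    MAX_HITS_IN_A_ROW = 5  # the episode ends after 5 consecutive hits

    def __init__(self):
        self.consecutive_hits = 0

    def on_agent_hit(self):
        """Agent hits the ball: reward +1, done on the 5th hit in a row."""
        self.consecutive_hits += 1
        done = self.consecutive_hits >= self.MAX_HITS_IN_A_ROW
        return 1.0, done

    def on_agent_miss(self):
        """Agent misses the ball: reward -1 and the episode ends."""
        self.consecutive_hits = 0
        return -1.0, True


if __name__ == "__main__":
    episode = PongEpisode()
    for _ in range(5):
        print(episode.on_agent_hit())
```

Because a miss always ends the episode, the hit counter never has to be reset mid-episode; a new `PongEpisode` is created for each episode in this sketch.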