PyTorch implementation of WAE-MMD (paper).

python 3.6.4

pytorch 0.3.1.post2

visdom

- download the `img_align_celeba.zip` and `list_eval_partition.txt` files from here, make a `data` directory, put the downloaded files into `data`, and then run `./preprocess_celeba.sh`. For example,

    .
    └── data
        ├── img_align_celeba.zip
        └── list_eval_partition.txt
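Before running the preprocessing script, it can help to confirm that the layout above is in place. The following is a minimal sketch and not part of this repository: the helper name `missing_celeba_files` is hypothetical, and it only checks that the two files exist under `data`. It does not validate their contents or reproduce what `preprocess_celeba.sh` does.

```python
from pathlib import Path

# Files the README asks you to place under data/ before preprocessing.
REQUIRED_FILES = ("img_align_celeba.zip", "list_eval_partition.txt")


def missing_celeba_files(data_dir="data"):
    """Return the required file names that are not present in data_dir.

    Hypothetical helper for illustration; the order follows REQUIRED_FILES.
    """
    root = Path(data_dir)
    return [name for name in REQUIRED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_celeba_files()
    if missing:
        print("Missing from data/: " + ", ".join(missing))
    else:
        print("data/ looks ready; run ./preprocess_celeba.sh next.")
```

Run it from the repository root so that the relative `data` path resolves to the directory shown above.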

- initialize visdom

python -m visdom.server

- run by scripts

sh run_celeba_wae_mmd.sh

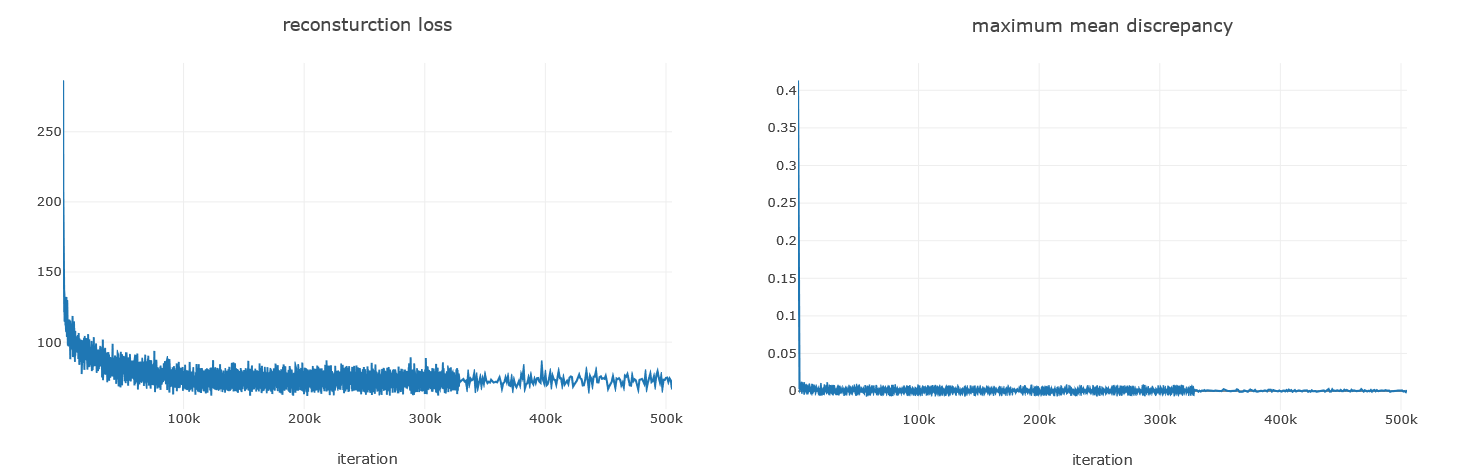

- check training process on the visdom server

localhost:8097
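If the page at `localhost:8097` does not load, a quick reachability check can tell you whether the visdom server is actually listening. This is a small sketch using only the Python standard library. The function names `visdom_url` and `is_reachable` are hypothetical, and the default host and port are the ones given above. It only tests that something answers HTTP at that address, not that it is a visdom server.

```python
import urllib.error
import urllib.request


def visdom_url(host="localhost", port=8097):
    """Build the base URL for the server address given in this README."""
    return "http://{}:{}".format(host, port)


def is_reachable(url, timeout=2.0):
    """Return True if an HTTP GET to url receives any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a success status.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or name resolution failure.
        return False


if __name__ == "__main__":
    url = visdom_url()
    if is_reachable(url):
        print("Server is answering at " + url)
    else:
        print("Nothing is answering at " + url + "; start it with: python -m visdom.server")
```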

- Wasserstein Auto-Encoders, Tolstikhin et al., ICLR 2018

- Code repos: official and re-implementation, both in TensorFlow