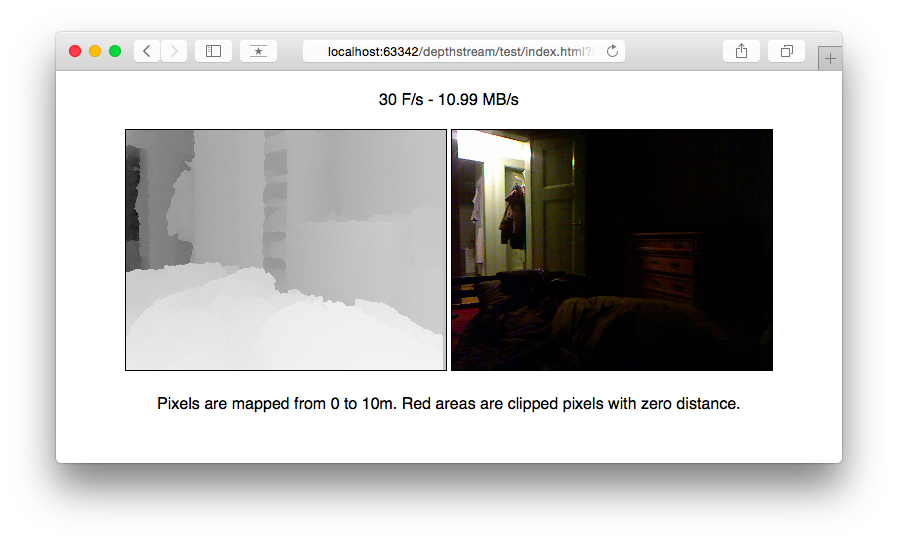

Stream Kinect's depth image to WebSocket clients.

With Depthstream you can easily get depth image data from your Kinect over a WebSocket connection. A webpage running on the same computer can use it to obtain the depth pixel array and feed it into an animation.

```
Depthstream.

Usage:
  depthstream [options]

Options:
  -h --help             Show this screen.
  -i --info             Show connected Kinects.
  -p --port=<n>         Port for server. [default: 9090]
  -d --device=<n>       Device to open. [default: 0]
  -b --bigendian        Use big endian encoding.
  -r --reduce=<n>       Reduce resolution by nothing or a power of 2. [default: 0]
  -I --interpolate=<n>  Interpolate zeroed pixels with a filter block of n*n. [default: 0]
  -s --skip=<n>         Skip every nth frame of the incoming 30fps stream. [default: 0]
  -c --color            Append color data.
```
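With `-b` the server uses big endian encoding, but a `Uint16Array` always reads in the platform's byte order, which is little endian on most machines. The following is a minimal sketch of a byte-order-aware decoder built on `DataView`. `decodeDepth` is a hypothetical helper, and it assumes the message is a plain sequence of unsigned 16-bit depth values, as in the client example below. When `-c` appends color data, passing the pixel count decodes only the leading values; that the depth values come first is an assumption.

```javascript
// Hypothetical helper: decode depth values from a WebSocket ArrayBuffer.
// bigEndian should be true when the server runs with -b.
// pixels defaults to every 16-bit value in the buffer; pass e.g. 640 * 480
// to ignore trailing data (assumption: depth values come first).
function decodeDepth(buffer, bigEndian, pixels) {
  const view = new DataView(buffer);
  const count = pixels === undefined ? Math.floor(buffer.byteLength / 2) : pixels;
  const depth = new Uint16Array(count);
  for (let i = 0; i < count; i++) {
    // the second argument of getUint16 is "littleEndian"
    depth[i] = view.getUint16(i * 2, !bigEndian);
  }
  return depth;
}
```

In the `onmessage` handler this would replace the `Uint16Array` line, e.g. `var array = decodeDepth(message.data, true, 640 * 480);` for a server started with `-b`.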

Connect to the server and request either a single frame or stream mode by sending a message:

```js
var ws = new WebSocket('ws://localhost:9090');
ws.binaryType = 'arraybuffer';

ws.onopen = function (event) {
  ws.send('1'); // request the current cached frame
  ws.send('*'); // add this connection to the stream list
};

ws.onmessage = function (message) {
  // the array contains 640*480 values from 0 to 10000
  // representing the depth in millimeters
  var array = new Uint16Array(message.data);
};
```

See the example.
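One way to feed the depth array into an animation is to draw it on a canvas. The sketch below maps each depth value to a gray level (near is bright, far is dark). `depthToGray` and `depthAt` are hypothetical helper names, and the code assumes row-major pixel order, the 640*480 frame size from the example, and that a value of 0 means "no reading" (as the "zeroed pixels" of the `-I` option suggest).

```javascript
// Hypothetical helper: convert depth values (millimeters) into RGBA bytes
// suitable for ImageData. Values of 0 are treated as missing and drawn black;
// values beyond maxDepth are clamped to it.
function depthToGray(depth, maxDepth = 10000) {
  const rgba = new Uint8ClampedArray(depth.length * 4);
  for (let i = 0; i < depth.length; i++) {
    const d = depth[i];
    const v = d === 0 ? 0 : 255 - Math.round((Math.min(d, maxDepth) / maxDepth) * 255);
    rgba[i * 4] = v;     // red
    rgba[i * 4 + 1] = v; // green
    rgba[i * 4 + 2] = v; // blue
    rgba[i * 4 + 3] = 255; // fully opaque
  }
  return rgba;
}

// Hypothetical helper: depth at column x, row y of a row-major frame.
function depthAt(depth, x, y, width = 640) {
  return depth[y * width + x];
}
```

Inside `onmessage`, the result can be wrapped with `new ImageData(depthToGray(array), 640, 480)` and drawn with `ctx.putImageData(image, 0, 0)`, where `ctx` is a 2D canvas context. If `-r` lowers the resolution, the width and height must be adjusted accordingly.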

First install the dependencies using brew:

```
$ brew install libusb
$ brew install libfreenect
```

Then download the latest binary from releases.

Alternatively, build from source with gopm:

```
$ gopm get
$ gopm build
```