This repository is an unofficial PyTorch implementation of Class Activation Mapping (CAM).

Paper and Architecture: Learning Deep Features for Discriminative Localization

Authors' implementation: metalbubble/CAM

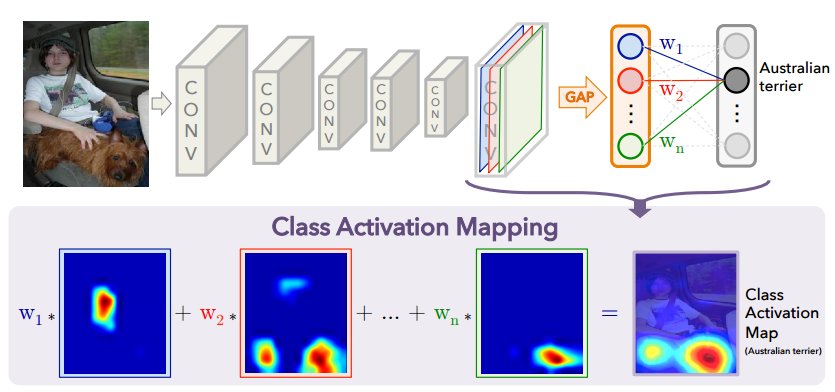

The paper proposes a technique for generating class activation maps using global average pooling (GAP) in CNNs. A class activation map for a particular category indicates the discriminative image regions the CNN uses to identify that category. The procedure for generating these maps is illustrated as follows:

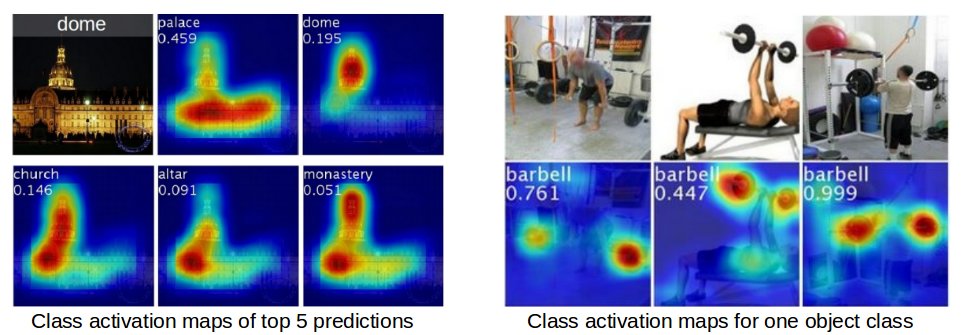
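In the paper's formulation, the map for class c is a weighted sum of the last convolutional feature maps, `M_c(x, y) = sum_k w_k^c * f_k(x, y)`, where `w_k^c` are the weights of the fully connected layer that follows GAP. The NumPy sketch below computes it for one image. The function name and the channels-first array layout are assumptions for illustration, not this repository's code. The example also checks a property of the construction: since GAP and the linear layer are both linear, the spatial mean of the unnormalized map equals the class score without its bias.

```python
import numpy as np


def class_activation_map(features, fc_weights, class_idx, normalize=True):
    """Compute a CAM: M_c(x, y) = sum_k w_k^c * f_k(x, y).

    Hypothetical helper (not from this repository).
    features:   (K, H, W) activations of the last conv layer for one image
    fc_weights: (C, K) weights of the linear layer applied after GAP
    class_idx:  class c to explain
    """
    k, h, w = features.shape
    # Weighted sum over the K channels: (K,) @ (K, H*W) -> (H*W,)
    cam = (fc_weights[class_idx] @ features.reshape(k, h * w)).reshape(h, w)
    if normalize:
        # Min-max scale to [0, 1] so the map can be drawn as a heatmap
        cam = cam - cam.min()
        peak = cam.max()
        if peak > 0:
            cam = cam / peak
    return cam


# Toy example: 4 channels on a 3x3 grid, 2 classes
rng = np.random.default_rng(0)
features = rng.random((4, 3, 3))
fc_weights = rng.standard_normal((2, 4))

raw = class_activation_map(features, fc_weights, 1, normalize=False)
# Class score (without bias): linear layer applied to the GAP features
score = fc_weights[1] @ features.mean(axis=(1, 2))
print(np.isclose(raw.mean(), score))  # True

heatmap = class_activation_map(features, fc_weights, 1)
print(heatmap.min(), heatmap.max())  # 0.0 1.0
```

In practice the small map (for example 8x8) is upsampled to the input resolution before being overlaid on the image.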

Class activation maps can be used to interpret the predictions made by the CNN. The left image below shows the class activation maps of the top 5 predictions; the CNN is triggered by different semantic regions of the image for different predictions. The right image below shows that the CNN learns to localize the common visual patterns of the same object class.
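A top-k view like the one described above can be sketched by ranking classes by score and computing one map per ranked class. This assumes the same channels-first layout as the formula `M_c(x, y) = sum_k w_k^c * f_k(x, y)` and a final linear layer with a bias vector; `top_k_cams` is a hypothetical helper name. The top-5 figure comes from a model with many classes; for a two-class cats-vs-dogs model, at most 2 maps are returned.

```python
import numpy as np


def top_k_cams(features, fc_weights, fc_bias, k=5):
    """Return [(class_idx, score, cam)] for the k highest-scoring classes.

    Hypothetical helper (not from this repository).
    features: (K, H, W); fc_weights: (C, K); fc_bias: (C,)
    The returned maps are unnormalized.
    """
    n_ch, h, w = features.shape
    flat = features.reshape(n_ch, h * w)
    # Class scores: linear layer applied to the global-average-pooled features
    scores = fc_weights @ flat.mean(axis=1) + fc_bias
    # All C maps at once: (C, K) @ (K, H*W) -> (C, H*W) -> (C, H, W)
    cams = (fc_weights @ flat).reshape(-1, h, w)
    # Indices of the k largest scores, highest first
    order = np.argsort(scores)[::-1][:k]
    return [(int(c), float(scores[c]), cams[c]) for c in order]


# Toy example: 8 channels on a 4x4 grid, 10 classes
rng = np.random.default_rng(1)
features = rng.random((8, 4, 4))
fc_weights = rng.standard_normal((10, 8))
fc_bias = rng.standard_normal(10)
for class_idx, score, cam in top_k_cams(features, fc_weights, fc_bias, k=5):
    print(class_idx, round(score, 3), cam.shape)
```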

Usage: `python3 main.py`

Network: Inception V3

Data: Kaggle dogs vs. cats

- Download `test1.zip` and `train.zip` and unzip them.

- Divide the dataset into a training set and a test set. Only the images in `train.zip` are labeled (by file name), so both sets come from it. Arrange the images like this:

`kaggle/train/cat/*.jpg`, `kaggle/train/dog/*.jpg`, `kaggle/test/cat/*.jpg`, `kaggle/test/dog/*.jpg`
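The split can be scripted with the standard library. The sketch below assumes the extracted `train/` folder holds Kaggle's labeled files named like `cat.0.jpg` and `dog.0.jpg`. The helper name `split_dogs_vs_cats`, the 20% test fraction, and the fixed seed are illustrative choices, not part of this repository. It copies rather than moves files so the original download stays intact.

```python
import random
import shutil
from pathlib import Path


def split_dogs_vs_cats(src_dir, dst_root="kaggle", test_ratio=0.2, seed=0):
    """Copy labeled images into <dst_root>/{train,test}/{cat,dog}/.

    Hypothetical helper (not from this repository). Returns a dict
    mapping (split, label) to the number of files copied.
    """
    src = Path(src_dir)
    rng = random.Random(seed)  # fixed seed -> reproducible split
    counts = {}
    for label in ("cat", "dog"):
        # Labeled files are named "<label>.<id>.jpg", e.g. "cat.0.jpg"
        files = sorted(src.glob(f"{label}.*.jpg"))
        rng.shuffle(files)
        n_test = int(len(files) * test_ratio)
        for split, subset in (("test", files[:n_test]), ("train", files[n_test:])):
            out_dir = Path(dst_root) / split / label
            out_dir.mkdir(parents=True, exist_ok=True)
            for f in subset:
                shutil.copy2(f, out_dir / f.name)
            counts[(split, label)] = len(subset)
    return counts
```

For example, `split_dogs_vs_cats("train")` run next to the extracted folder would produce the `kaggle/train/...` and `kaggle/test/...` layout above.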

Checkpoint

- A checkpoint is saved to the `checkpoint` folder every ten epochs.

- Set `RESUME = #` to resume training from `checkpoint/#.pt`.
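A minimal sketch of this save/resume pattern in PyTorch follows. The helper names, the dictionary keys, and the `resume` argument (standing in for `RESUME`) are assumptions for illustration; the checkpoints written by `main.py` may store different fields.

```python
import os

import torch
from torch import nn

CHECKPOINT_DIR = "checkpoint"
SAVE_EVERY = 10  # epochs between checkpoints


def save_checkpoint(model, optimizer, epoch, directory=CHECKPOINT_DIR):
    """Write <directory>/<epoch>.pt (hypothetical helper)."""
    os.makedirs(directory, exist_ok=True)
    path = os.path.join(directory, f"{epoch}.pt")
    torch.save({"epoch": epoch,
                "model": model.state_dict(),
                "optimizer": optimizer.state_dict()}, path)
    return path


def load_checkpoint(model, optimizer, resume, directory=CHECKPOINT_DIR):
    """Restore <directory>/<resume>.pt and return the next epoch to run."""
    state = torch.load(os.path.join(directory, f"{resume}.pt"), map_location="cpu")
    model.load_state_dict(state["model"])
    optimizer.load_state_dict(state["optimizer"])
    return state["epoch"] + 1


def train(model: nn.Module, optimizer, num_epochs, resume=None, directory=CHECKPOINT_DIR):
    # resume=None starts from scratch; otherwise continue after the saved epoch
    start = 1 if resume is None else load_checkpoint(model, optimizer, resume, directory)
    for epoch in range(start, num_epochs + 1):
        ...  # one epoch of training goes here
        if epoch % SAVE_EVERY == 0:
            save_checkpoint(model, optimizer, epoch, directory)
```

Storing the optimizer state next to the model weights lets momentum-based optimizers continue where they stopped instead of restarting their running statistics.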