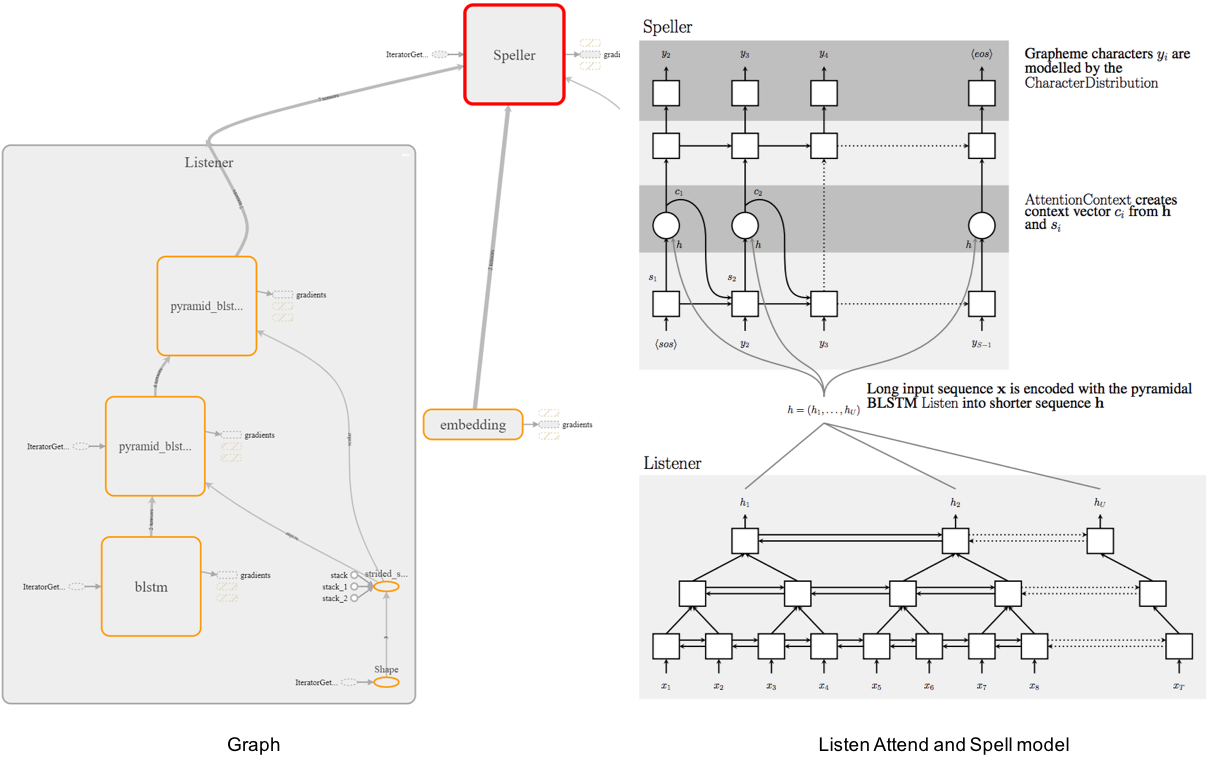

A TensorFlow Implementation of Listen, Attend and Spell

This is a TensorFlow implementation of Listen, Attend and Spell (LAS), an end-to-end ASR model. Although there are several excellent TensorFlow repos on GitHub, I implemented this one without using the tf.contrib.seq2seq API. The model is also evaluated on the LibriSpeech dev/test sets, and the results are reasonable.

- Components:

- Char/Subword text encoding.

- MFCC/fbank acoustic features with per utterance CMVN.

- LAS training (visualized with TensorBoard: loss, sample text outputs, features, alignments).

- TFRecord dataset pipeline.

- Batch testing using greedy decoder.

- Beam search decoder.

- RNNLM.
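The per-utterance CMVN step listed above can be illustrated with a minimal sketch. This is not the code from this repo: the function name `per_utterance_cmvn`, the `eps` constant, and the random stand-in features are assumptions made for illustration only.

```python
import numpy as np

def per_utterance_cmvn(features, eps=1e-8):
    """Normalize a (num_frames, feat_dim) array to zero mean and unit variance.

    Statistics are computed over the frames of this utterance only,
    independently for each feature dimension.
    """
    mean = features.mean(axis=0, keepdims=True)
    std = features.std(axis=0, keepdims=True)
    # eps guards against division by zero for constant dimensions.
    return (features - mean) / (std + eps)

# Toy utterance: 200 frames of 39-dimensional features (random stand-in data).
rng = np.random.default_rng(0)
utterance = rng.normal(loc=5.0, scale=3.0, size=(200, 39))
normalized = per_utterance_cmvn(utterance)
print(normalized.shape)
```

Because the statistics come from a single utterance, no global mean/variance file has to be computed over the training set first.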

Note that this project is still in progress.

- Notes:

- Currently, I have only tested this model on 39-dimensional MFCC features (13 MFCCs + delta + delta-delta).

- CTC-related parts are not yet fully tested.

- Volume augmentation is currently commented out because it shows little improvement.
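To make the 13 + delta + delta-delta layout concrete, here is a rough sketch of how 13 static coefficients can be expanded to 39 dimensions. It uses the common regression formula for deltas with edge padding. The helper names `delta` and `add_deltas` and the `window` size are assumptions, and the repo's own feature extraction may differ.

```python
import numpy as np

def delta(feat, window=2):
    """Regression-based delta of a (num_frames, feat_dim) array.

    d_t = sum_n n * (c_{t+n} - c_{t-n}) / (2 * sum_n n^2), n = 1..window,
    with the first and last frames repeated at the edges.
    """
    num_frames = feat.shape[0]
    denom = 2 * sum(n * n for n in range(1, window + 1))
    padded = np.pad(feat, ((window, window), (0, 0)), mode="edge")
    out = np.zeros_like(feat, dtype=float)
    for n in range(1, window + 1):
        ahead = padded[window + n : window + n + num_frames]
        behind = padded[window - n : window - n + num_frames]
        out += n * (ahead - behind)
    return out / denom

def add_deltas(mfcc, window=2):
    """Stack static, delta and delta-delta features along the feature axis."""
    d1 = delta(mfcc, window)
    d2 = delta(d1, window)
    return np.concatenate([mfcc, d1, d2], axis=1)

# Random stand-in for 100 frames of 13 MFCCs.
mfcc = np.random.default_rng(0).normal(size=(100, 13))
features = add_deltas(mfcc)
print(features.shape)  # (100, 39)
```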

- Improvements:

- CNN-based Listener.

- Location-aware attention.

- Augmentation, including speed perturbation.

- Label smoothing. (IMPORTANT)

- Scheduled learning rate.

- Scheduled sampling.

- Bucketing.
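Since label smoothing is flagged as important in the list above, a small sketch of the idea may help. It mixes the one-hot target with a uniform distribution over the vocabulary before computing cross-entropy. The function name `smoothed_cross_entropy` and the value `smoothing=0.1` are assumptions for illustration, not necessarily what this repo uses.

```python
import numpy as np

def smoothed_cross_entropy(logits, target, smoothing=0.1):
    """Cross-entropy of one decoder step against a label-smoothed target.

    The target distribution is (1 - smoothing) * one_hot + smoothing / vocab_size.
    """
    vocab_size = logits.shape[-1]
    # Numerically stable log-softmax.
    shifted = logits - logits.max()
    log_probs = shifted - np.log(np.exp(shifted).sum())
    smooth_target = np.full(vocab_size, smoothing / vocab_size)
    smooth_target[target] += 1.0 - smoothing
    return -(smooth_target * log_probs).sum()

# A very confident prediction for token 0 in a toy 4-token vocabulary.
logits = np.array([10.0, 0.0, 0.0, 0.0])
plain_loss = smoothed_cross_entropy(logits, target=0, smoothing=0.0)
smooth_loss = smoothed_cross_entropy(logits, target=0, smoothing=0.1)
print(plain_loss, smooth_loss)
```

With smoothing enabled, an over-confident prediction is penalized even when it is correct, which discourages the decoder from producing extremely peaked output distributions.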

- Some advice:

- Generally, LibriSpeech-100 is not large enough to train a well-performing LAS model.

- In my experience, adding more data is the best policy.

- A good way to check whether your model is learning correctly is to monitor the speech-text alignments in TensorBoard or set verbosity=1.

pip3 install virtualenv

virtualenv --python=python3 venv

source venv/bin/activate

pip3 install -r requirements.txt

The definitions of the args are described in las/arguments.py. You can modify any of them there before preprocessing, training, testing, and decoding.

- LibriSpeech train/dev/test data

bash prepare_libri_data.sh

The dataset pipeline and the training pipeline are both included in run.sh.

bash run.sh

Train RNNLM: (NOT READY)

Testing with greedy decoder (SPLIT is test or dev).

python3 test.py --split SPLIT \
    --unit UNIT \
    --feat_dim FEAT_DIM \
    --feat_dir FEAT_DIR \
    --save_dir SAVE_DIR

Beam search decoder.

Here SPLIT is test or dev, and a convert_rate of 0.24 is large enough.

python3 decode.py --split SPLIT \
    --unit UNIT \
    --beam_size BEAM_SIZE \
    --convert_rate 0.24 \
    --apply_lm APPLY_LM \
    --lm_weight LM_WEIGHT \
    --feat_dim FEAT_DIM \
    --feat_dir FEAT_DIR \
    --save_dir SAVE_DIR

tensorboard --logdir ./summary
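The `--beam_size` and `--lm_weight` options of the beam search decoder suggest that hypotheses are scored with a weighted language model term. One common way to do this is shallow fusion, sketched below for a single decoding step. This is an assumption about the general technique, not a description of decode.py: the function `expand_beam` and the toy probabilities are hypothetical.

```python
import math

def expand_beam(beam, las_log_probs, lm_log_probs, beam_size, lm_weight=0.0):
    """Expand every hypothesis by one token and keep the best `beam_size`.

    beam:          list of (tokens, score) pairs
    las_log_probs: per-hypothesis dict mapping token -> log p_LAS(token | ...)
    lm_log_probs:  per-hypothesis dict mapping token -> log p_LM(token | ...)
    Candidate score = score + log p_LAS + lm_weight * log p_LM (shallow fusion).
    """
    candidates = []
    for (tokens, score), las_lp, lm_lp in zip(beam, las_log_probs, lm_log_probs):
        for token, lp in las_lp.items():
            fused = lp + lm_weight * lm_lp[token]
            candidates.append((tokens + [token], score + fused))
    candidates.sort(key=lambda c: c[1], reverse=True)
    return candidates[:beam_size]

# Toy step: the acoustic model prefers "a", the language model prefers "b".
beam = [([], 0.0)]
las = [{"a": math.log(0.6), "b": math.log(0.4)}]
lm = [{"a": math.log(0.1), "b": math.log(0.9)}]

no_lm = expand_beam(beam, las, lm, beam_size=1, lm_weight=0.0)
with_lm = expand_beam(beam, las, lm, beam_size=1, lm_weight=0.5)
print(no_lm[0][0], with_lm[0][0])
```

In this toy case the language model weight changes which token survives pruning, which is the effect the weight is meant to control.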

Results trained on LibriSpeech-360 (WER)

| Model | dev-clean | test-clean |
|---|---|---|
| Char LAS | 0.249 | 0.262 |
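For readers unfamiliar with the metric, WER is the word-level edit distance between the reference and the hypothesis, divided by the number of reference words. The values in the table are presumably fractions, so 0.249 would correspond to 24.9%. The sketch below shows the standard computation for a single sentence pair. The function name `word_error_rate` is an assumption, and this is not the evaluation script used for the table.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)

# One substitution (sat -> sit) and one deletion (the) over 6 reference words.
wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(round(wer, 3))  # 0.333
```

For a whole test set, the usual convention is to sum the edit distances over all utterances and divide by the total number of reference words, rather than averaging per-utterance rates.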

References

- Listen, Attend and Spell

- Attention-Based Models for Speech Recognition

- On the Choice of Modeling Unit for Sequence-to-Sequence Speech Recognition

- Char RNNLM: TensorFlow-Char-RNN

- A nice PyTorch version: End-to-end-ASR-Pytorch.

TODO

- Add scheduled sampling.

- Add location-aware attention.

- Evaluate the performance with subword units: subword LAS training.

- Decoding with subword-based RNNLM.

- Evaluate the performance of joint CTC training and decoding.