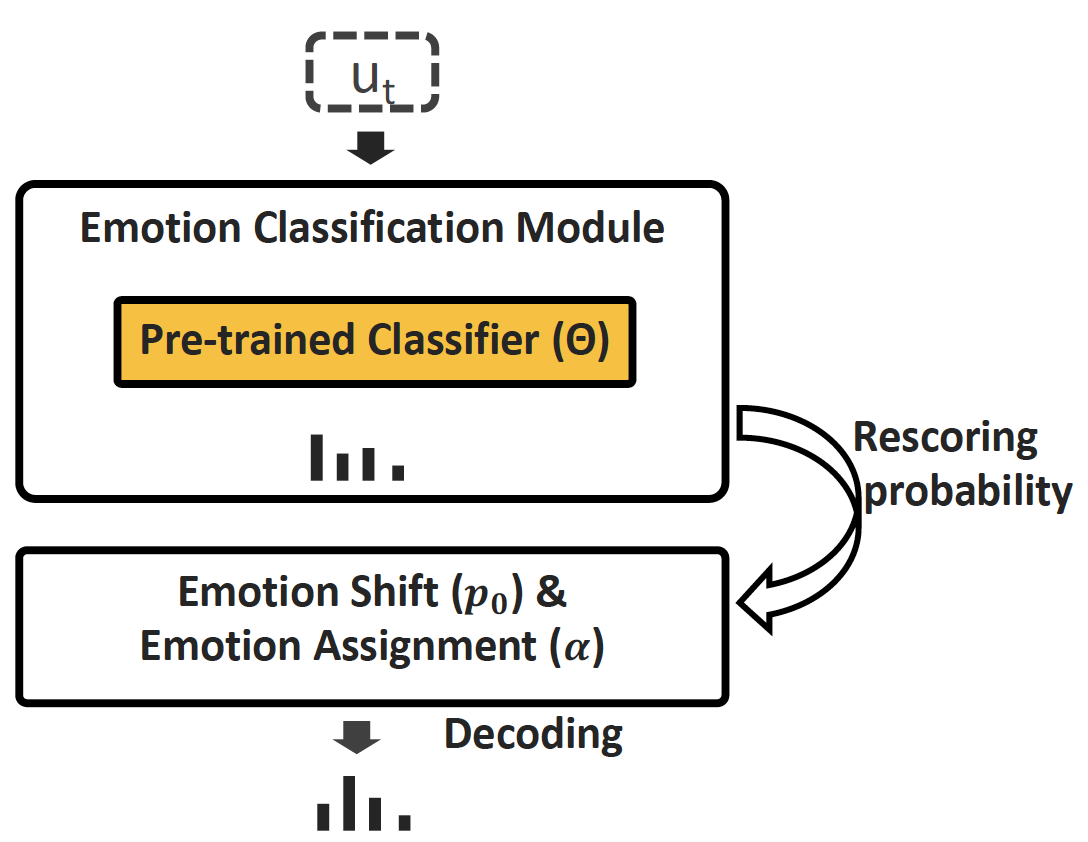

This is an implementation of the Dialogical Emotion Decoder (DED) presented at ICASSP 2020. In this repo, we use IEMOCAP as an example to evaluate the effectiveness of DED.

- The performance is better than reported in the paper because I found and fixed a small bug in the rescoring step.

- To test on your own emotion classifier, replace `data/outputs.pkl` with your own outputs.
  - Format: a dict `{utt_id: logit}`, where `utt_id` is the utterance name in IEMOCAP.
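
The snippet below is a minimal sketch of writing such a file with Python's standard `pickle` module. It assumes each logit is a flat list of per-class scores in one fixed class order; that class order, the value type the repo expects (list vs. NumPy array), and the helper names `save_outputs`/`load_outputs` are illustrative assumptions, so check how the code reads `data/outputs.pkl` before relying on it.

```python
import os
import pickle
import tempfile
from typing import Dict, List

# Hypothetical helpers: only the {utt_id: logit} layout comes from this README;
# the names and the list-of-floats value type are assumptions.
Outputs = Dict[str, List[float]]


def save_outputs(outputs: Outputs, path: str) -> None:
    """Validate and pickle a {utt_id: logit} dict."""
    if not outputs:
        raise ValueError("outputs is empty")
    lengths = {len(logit) for logit in outputs.values()}
    if len(lengths) != 1:
        # Every utterance should be scored over the same set of classes.
        raise ValueError(f"inconsistent logit lengths: {sorted(lengths)}")
    with open(path, "wb") as f:
        pickle.dump(outputs, f)


def load_outputs(path: str) -> Outputs:
    """Load a {utt_id: logit} dict written by save_outputs."""
    with open(path, "rb") as f:
        return pickle.load(f)


if __name__ == "__main__":
    # Keys follow IEMOCAP's utterance naming; the scores are made up.
    example = {
        "Ses01F_impro01_F000": [2.1, -0.3, 0.5, -1.2],
        "Ses01F_impro01_M000": [0.1, 1.7, -0.4, 0.2],
    }
    # Write to a temporary directory so a real data/outputs.pkl is never overwritten.
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "outputs.pkl")
        save_outputs(example, path)
        print(load_outputs(path) == example)  # True
```

Once the round trip works with your classifier's real scores, point `save_outputs` at `data/outputs.pkl`.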

```bash
pip3 install virtualenv
virtualenv --python=python3 venv
source venv/bin/activate
pip3 install -r requirements.txt
```

Currently this repo only supports IEMOCAP.

All arguments are defined in `ded/arguments.py`, where you can also change their default values.

```bash
python3 main.py --verbosity 1 --result_file RESULT_FILE
```

Results of DED with beam size = 5:

| Model | UAR | ACC |
|---|---|---|
| Pretrained Classifier | 0.671 | 0.653 |
| DED | 0.710 | 0.695 |
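
For reference, UAR (unweighted average recall) is the mean of the per-class recalls, so every emotion class counts equally regardless of how many utterances it has; ACC is assumed here to be plain accuracy over all utterances. The sketch below computes both from gold and predicted labels. It is a generic illustration of the two metrics, not this repo's evaluation code, and the function names and label strings are hypothetical.

```python
from collections import Counter
from typing import Sequence


def _check(gold: Sequence[str], pred: Sequence[str]) -> None:
    if not gold or len(gold) != len(pred):
        raise ValueError("gold and pred must be non-empty and of equal length")


def accuracy(gold: Sequence[str], pred: Sequence[str]) -> float:
    """Fraction of utterances whose predicted label matches the gold label."""
    _check(gold, pred)
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def uar(gold: Sequence[str], pred: Sequence[str]) -> float:
    """Unweighted average recall: mean over gold classes of per-class recall."""
    _check(gold, pred)
    support = Counter(gold)  # gold utterances per class
    hits = Counter(g for g, p in zip(gold, pred) if g == p)  # correct per class
    return sum(hits[c] / n for c, n in support.items()) / len(support)


if __name__ == "__main__":
    # A classifier that always predicts the majority class looks fine on ACC
    # but is penalized by UAR, because the minority class has zero recall.
    gold = ["neu", "neu", "neu", "ang"]
    pred = ["neu", "neu", "neu", "neu"]
    print(accuracy(gold, pred))  # 0.75
    print(uar(gold, pred))       # 0.5
```

This is why UAR is usually reported alongside ACC on IEMOCAP, whose emotion classes are not evenly distributed.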