- Get the code base running locally.

  - Figure out how to run through the entire code quickly.

  - Quick test run:

python src/main.py --name quick --contrast_local_inter --contrast_local_intra --interintra_weight 0.5 --max_size 64 --pub_data_num 2 --feature_dim 2 --num_img_clients 2 --num_txt_clients 2 --num_mm_clients 3 --client_num_per_round 2 --local_epochs 2 --comm_rounds 2 --not_bert

--contrast_local_inter --contrast_local_intra --interintra_weight 0.5: CreamFL options.

--max_size: number of training samples per client (added by xiegeo); use 0 or 10000 for the old behavior.

--pub_data_num: public training data size (default 50000); proportional to communication cost (memory cost in a local simulation).

--feature_dim: number of public features (default 256); proportional to communication cost.

--num_img_clients 2 --num_txt_clients 2 --num_mm_clients 3 --client_num_per_round 2: maximum number of clients of each type, and the total number of clients per round.

--local_epochs 2 --comm_rounds 2: number of local epochs and global communication rounds.

--not_bert: use a simpler model.
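Because --pub_data_num and --feature_dim both scale the communication cost, a rough size estimate can help when choosing values. The sketch below assumes each client shares one dense float32 feature vector of length feature_dim per public sample; the function name and the 4-byte element size are assumptions for illustration, not taken from the code base.

```python
def shared_feature_bytes(pub_data_num: int, feature_dim: int, bytes_per_value: int = 4) -> int:
    """Estimate the size of one client's public-feature payload.

    Assumes a dense (pub_data_num x feature_dim) matrix of float32 values;
    the real payload may differ (serialization overhead, other dtypes).
    """
    return pub_data_num * feature_dim * bytes_per_value


if __name__ == "__main__":
    # Defaults mentioned above: 50000 public samples, 256 features.
    print(shared_feature_bytes(50000, 256))  # 51200000 bytes, about 51 MB
    # Quick test run settings: 2 public samples, 2 features.
    print(shared_feature_bytes(2, 2))  # 16 bytes
```

Under these assumptions the quick test run shrinks each payload from roughly 51 MB to 16 bytes, which is why it finishes quickly.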

- Get the code to run in a network.

  - See the "How to run the network" section.

- Learn:

  - Transformers

  - Multimodal

  - Federated Learning

- Implement networking.

  - Try FedML? (Too much rewriting is needed to do it properly with FedML; otherwise it is too hacky.)

  - Try a custom network? (Do a quick demo version.)

  - flags: the same as the local version.

  - fed_config: set up server and client options.

A network requires n + 2 processes: n clients, a command server over HTTP, and a global round computation provider.
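To make the process count concrete, the sketch below builds one command per process for a given list of client names. The script paths and the --name and --client_name flags come from the commands in this README; the helper name and any client name other than txt_0 are hypothetical, and passing the same extra flags to global.py and every client is a simplification.

```python
import sys


def network_commands(run_name, client_names, extra_flags=()):
    """Return one argv list per process: server, global provider, then clients."""
    extra = list(extra_flags)
    commands = [
        # Command server (HTTP).
        [sys.executable, "src/federation/server.py", "--name", run_name],
        # Global round computation provider.
        [sys.executable, "src/federation/global.py", "--name", run_name] + extra,
    ]
    for client in client_names:
        commands.append(
            [sys.executable, "src/federation/client.py", "--name", run_name,
             "--client_name", client] + extra
        )
    return commands


if __name__ == "__main__":
    # "img_0" and "mm_0" are assumed names following the txt_0 pattern.
    clients = ["txt_0", "img_0", "mm_0"]
    cmds = network_commands("test", clients, ["--max_size", "64", "--not_bert"])
    print(len(cmds))  # n + 2 = 5
    for cmd in cmds:
        print(" ".join(cmd))
    # Each entry could be started with subprocess.Popen(cmd) from the repo root.
```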

python src/federation/server.py --name test

python src/federation/global.py --name test --contrast_local_inter --contrast_local_intra --interintra_weight 0.5 --max_size 64 --pub_data_num 2 --feature_dim 2 --not_bert

In the command below, replace txt_0 with the name of the client to start.

python src/federation/client.py --name test --client_name txt_0 --max_size 64 --pub_data_num 2 --feature_dim 2 --not_bert

The network has to share the learned features. This could be done through a file server, a CDN, or shared network storage, for example. Directly accessing the same files is the easiest to implement and extends easily to shared network storage, so it is implemented first for ease of local testing without loss of generality.
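A minimal sketch of the shared-file approach, assuming each client writes its features for a round into a directory that every process can read. The directory layout, file naming, and JSON encoding are hypothetical choices for illustration; the actual implementation may store tensors differently.

```python
import json
import os
import tempfile
from pathlib import Path


def publish_features(shared_dir, client_name, round_idx, features):
    """Write features via a temp file and rename, so readers never see a partial file."""
    shared = Path(shared_dir)
    shared.mkdir(parents=True, exist_ok=True)
    target = shared / f"round{round_idx}_{client_name}.json"
    # Write to a temp file in the same directory, then rename over the target.
    fd, tmp_path = tempfile.mkstemp(dir=str(shared), suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(features, f)
    os.replace(tmp_path, target)
    return target


def collect_features(shared_dir, round_idx):
    """Read every client's features published for the given round."""
    result = {}
    prefix = f"round{round_idx}_"
    for path in sorted(Path(shared_dir).glob(f"{prefix}*.json")):
        client_name = path.stem[len(prefix):]
        result[client_name] = json.loads(path.read_text())
    return result


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        publish_features(d, "txt_0", 0, [[0.1, 0.2], [0.3, 0.4]])
        print(collect_features(d, 0))
```

The rename step is atomic on a local POSIX file system; on shared network storage the guarantees depend on the storage backend, so treat that part as an assumption to verify.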

See report/poc.pdf.

Begin original readme

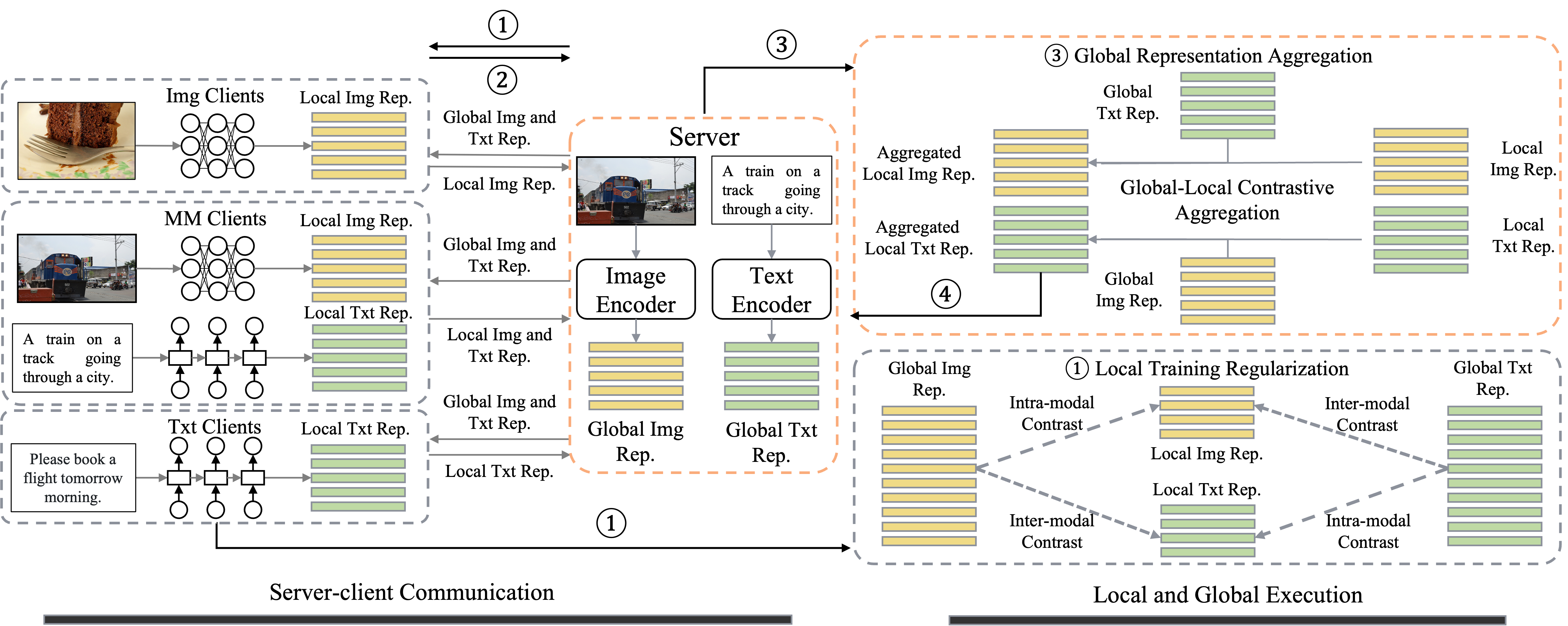

This repo contains a PyTorch implementation of the paper Multimodal Federated Learning via Contrastive Representation Ensemble (ICLR 2023).

Note: This repository will be updated in the next few days for improved readability, easier environment setup, and datasets management. Please stay tuned!

The required packages of the environment we used to conduct experiments are listed in requirements.txt.

Please note that you should install apex by following the instructions from https://github.com/NVIDIA/apex#installation, instead of directly running pip install apex.

For datasets, please download the MSCOCO, Flickr30K, CIFAR-100, and AG_NEWS datasets, and arrange their directories as follows:

    os.environ['HOME'] + 'data/'
    ├── AG_NEWS
    ├── cifar100
    │   └── cifar-100-python
    ├── flickr30k
    │   └── flickr30k-images
    ├── mmdata
    │   ├── MSCOCO
    │   │   └── 2014
    │   │       ├── allimages
    │   │       ├── annotations
    │   │       ├── train2014
    │   │       └── val2014
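Before launching a run, it can help to verify that this layout is in place. The sketch below checks for the listed directories under a data root; the function name is hypothetical, and the default root (a data directory under HOME) is an assumption, so adjust it if your data lives elsewhere.

```python
import os
from pathlib import Path

# Relative paths taken from the directory tree above.
EXPECTED_DIRS = [
    "AG_NEWS",
    "cifar100/cifar-100-python",
    "flickr30k/flickr30k-images",
    "mmdata/MSCOCO/2014/allimages",
    "mmdata/MSCOCO/2014/annotations",
    "mmdata/MSCOCO/2014/train2014",
    "mmdata/MSCOCO/2014/val2014",
]


def missing_dataset_dirs(data_root):
    """Return the expected dataset directories that do not exist under data_root."""
    root = Path(data_root)
    return [rel for rel in EXPECTED_DIRS if not (root / rel).is_dir()]


if __name__ == "__main__":
    # Assumption: the data root is <HOME>/data; change this to match your setup.
    root = Path(os.environ.get("HOME", ".")) / "data"
    for rel in missing_dataset_dirs(root):
        print(f"missing: {root / rel}")
```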

To reproduce CreamFL with BERT and ResNet101 as server models, run the following shell command:

python src/main.py --name CreamFL --server_lr 1e-5 --agg_method con_w --contrast_local_inter --contrast_local_intra --interintra_weight 0.5

If you find that the paper provides some insights into multimodal FL, or find our code useful 🤗, please consider citing:

    @article{yu2023multimodal,
      title={Multimodal Federated Learning via Contrastive Representation Ensemble},
      author={Yu, Qiying and Liu, Yang and Wang, Yimu and Xu, Ke and Liu, Jingjing},
      journal={arXiv preprint arXiv:2302.08888},
      year={2023}
    }

We would like to thank the authors of the PCME and MOON repositories for their code.