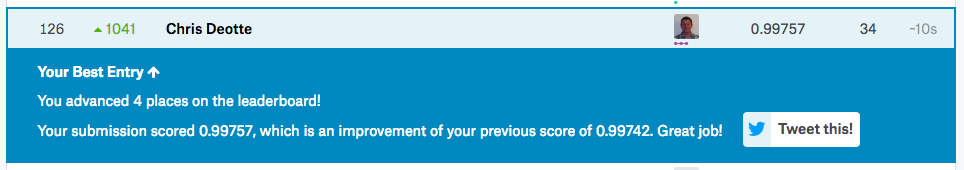

I created this C program to learn about CNNs and to compete in Kaggle's MNIST digit classifier competition. The program loads Kaggle's 42,000 training digit images, displays them, builds and trains a convolutional neural network, plots validation accuracy as training progresses, and classifies Kaggle's 28,000 test digit images. It applies data augmentation to create new training images and achieves 99.5% accuracy, placing in the top 10%. I posted a detailed report here. Next, using TensorFlow (and the power of GPUs!), I created an ensemble of CNNs that scores 99.75% here, placing in the top 5%.

To compile this program, you must download the file `webgui.c` from here. That library creates the graphical user interface (GUI). Compile everything with the single command `gcc CNN.c webgui.c -lm -lpthread -O3`. Also download the Kaggle MNIST images here, and place `train.csv` and `test.csv` in the same directory as your executable.
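For readers curious about what loading `train.csv` involves, here is a minimal sketch, not the code used in `CNN.c`. It relies on the Kaggle file layout (one header line, then rows of `label,pixel0,...,pixel783` with pixel values 0 to 255). The function names `parse_train_row` and `load_train_csv`, and the choice to scale pixels to [0,1], are assumptions made for illustration.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>

#define PIXELS 784  /* 28 x 28 grayscale image */

/* Hypothetical helper: parse one data row "label,p0,...,p783".
   Pixels are scaled to [0,1] (an assumption, not necessarily what CNN.c does).
   Returns 0 on success, -1 on a malformed row. */
int parse_train_row(const char *line, int *label, float pixels[PIXELS])
{
    assert(line && label && pixels);
    char *end;
    long v = strtol(line, &end, 10);
    if (end == line || v < 0 || v > 9) return -1;
    *label = (int)v;
    for (int i = 0; i < PIXELS; i++) {
        if (*end != ',') return -1;            /* row ended too early */
        const char *start = end + 1;
        v = strtol(start, &end, 10);
        if (end == start || v < 0 || v > 255) return -1;
        pixels[i] = (float)v / 255.0f;
    }
    return 0;
}

/* Hypothetical helper: read up to max_rows images, skipping the header line.
   Returns the number of rows read, or -1 if the file cannot be opened. */
int load_train_csv(const char *path, int max_rows,
                   int *labels, float (*images)[PIXELS])
{
    FILE *f = fopen(path, "r");
    if (!f) return -1;
    char line[8192];                           /* longer than any line in the file */
    int n = 0;
    if (!fgets(line, sizeof line, f)) { fclose(f); return 0; }  /* header */
    while (n < max_rows && fgets(line, sizeof line, f)) {
        if (parse_train_row(line, &labels[n], images[n]) == 0) n++;
    }
    fclose(f);
    return n;
}
```

Holding all 42,000 images as floats takes roughly 130 MB, so a caller would allocate `images` on the heap with `malloc` rather than on the stack.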

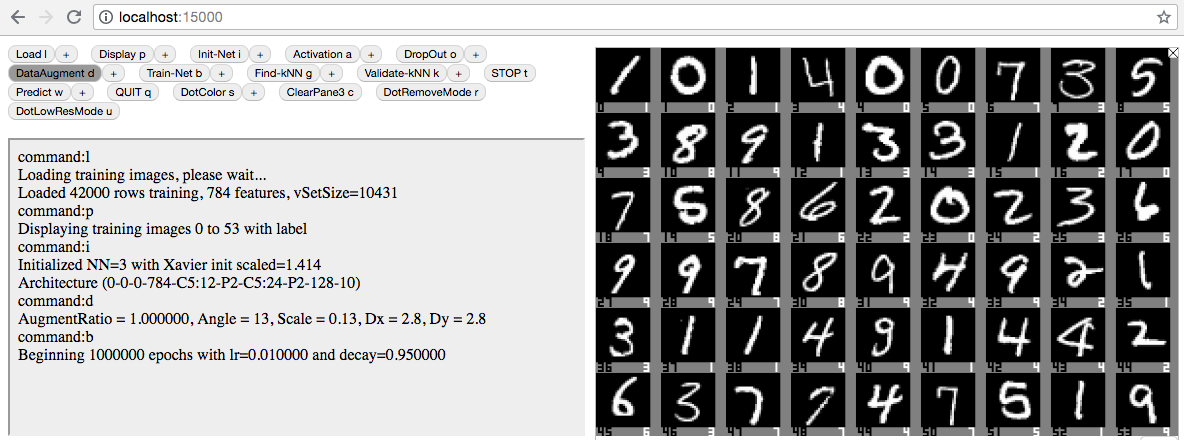

Upon running the compiled program, your terminal window will say `webgui: Listening on port 15000 for web browser...`. Open a web browser and enter the address `localhost:15000`, and you will see the interface. (You can also run this program on a remote server and enter that server's IP address followed by `:15000`.) Next, click the buttons Load, Display, Init-Net, Data Augment, and Train-Net, and training will begin.

Let the CNN train. I haven't implemented GPU support or minibatches yet, so each epoch takes about 4 minutes. Be patient and let it train for 75 epochs (roughly 5 hours), and you will see the net achieve 99.5% accuracy!

To make predictions for Kaggle, click the + sign next to the Load button, set trainSet = 0, and select dataFile = test.csv. Then click Load. Next, click Predict, which writes Submit.csv to your hard drive. Upload that file to Kaggle and celebrate!
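As a rough illustration of what a submission file contains, the sketch below writes predictions in the layout Kaggle's digit competition expects: a header `ImageId,Label` followed by one row per test image, with image IDs starting at 1. The function name `write_submission` is hypothetical and this is not the Predict button's actual code.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical helper: write `count` predicted digits to `path`
   in Kaggle's "ImageId,Label" layout (ImageId is 1-based).
   Returns 0 on success, -1 on an I/O error. */
int write_submission(const char *path, const int *labels, int count)
{
    assert(path && labels && count >= 0);
    FILE *f = fopen(path, "w");
    if (!f) return -1;
    fprintf(f, "ImageId,Label\n");
    for (int i = 0; i < count; i++)
        fprintf(f, "%d,%d\n", i + 1, labels[i]);
    return fclose(f) == 0 ? 0 : -1;
}
```

For the full test set, `count` would be 28000 and `labels[i]` would hold the digit predicted for row `i` of `test.csv`.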

To obtain 99.75%, you must train 15 CNNs, each with the architecture 784-C3:32-C3:32-P2-C3:64-C3:64-P2-128-10 and data augmentation 0.1, for 30 epochs and then ensemble them. (Each CNN achieves 99.65% by itself.) This takes a long time without GPUs, so it is preferable to run the TensorFlow code posted here or here.
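The text above does not say how the 15 networks are combined. One common approach, assumed here purely for illustration, is to sum each network's 10 class probabilities for an image and pick the digit with the largest total. The function name `ensemble_predict` and the flat array layout are hypothetical.

```c
#include <assert.h>

#define CLASSES 10

/* Hypothetical helper: combine n_models probability vectors for one image.
   probs is a flat array where probs[m * CLASSES + c] is model m's
   probability for digit c. Returns the digit with the highest summed
   probability (dividing by n_models would not change the winner). */
int ensemble_predict(const float *probs, int n_models)
{
    assert(probs && n_models > 0);
    float sum[CLASSES] = {0};
    for (int m = 0; m < n_models; m++)
        for (int c = 0; c < CLASSES; c++)
            sum[c] += probs[m * CLASSES + c];
    int best = 0;
    for (int c = 1; c < CLASSES; c++)
        if (sum[c] > sum[best]) best = c;
    return best;
}
```

Summing probabilities lets one confident network outweigh several uncertain ones, which a simple majority vote would not do.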