Code and data for the paper "Joint Activity Recognition and Indoor Localization with WiFi Fingerprints" (IEEE Access, 2019).

- Requirement: PyTorch 1.0.0

- Please download the data and decompress it in the root folder of this repository.

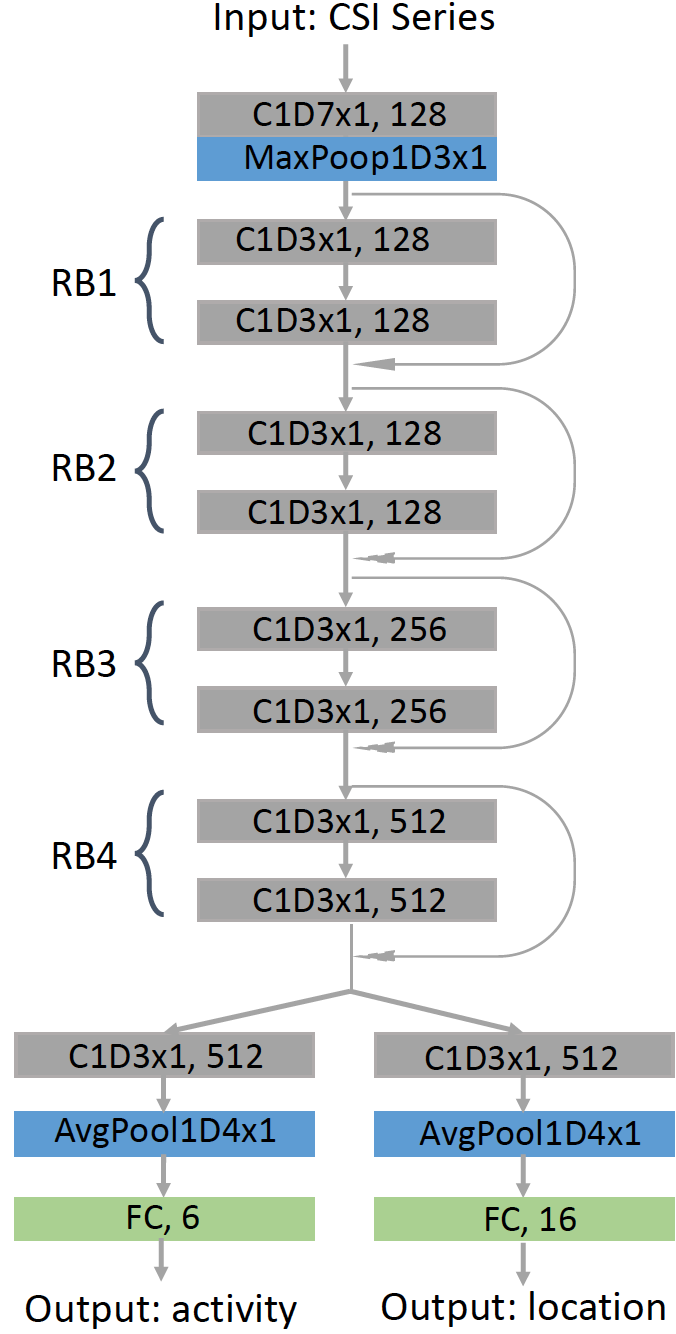

Activity labels: 0 = hand up, 1 = hand down, 2 = hand left, 3 = hand right, 4 = hand circle, 5 = hand cross.

Location labels: 0, 1, 2, ..., 15.
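For scripts that consume these labels, a small lookup table keeps the integer IDs readable. This is a minimal sketch; `ACTIVITY_LABELS`, `LOCATION_LABELS` and `describe` are hypothetical helpers, not part of the repository code.

```python
# Hypothetical helpers: the label meanings come from this README,
# but these names do not exist in the repository.
ACTIVITY_LABELS = {
    0: "hand up",
    1: "hand down",
    2: "hand left",
    3: "hand right",
    4: "hand circle",
    5: "hand cross",
}
LOCATION_LABELS = list(range(16))  # location IDs 0..15


def describe(activity_id: int, location_id: int) -> str:
    """Turn a pair of integer labels into a readable string."""
    if activity_id not in ACTIVITY_LABELS:
        raise ValueError(f"unknown activity label: {activity_id}")
    if location_id not in LOCATION_LABELS:
        raise ValueError(f"unknown location label: {location_id}")
    return f"{ACTIVITY_LABELS[activity_id]} at location {location_id}"


print(describe(4, 12))  # → hand circle at location 12
```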

- Please download the pre-trained weights and decompress them in the root folder of this repository.

- Then run `train.py` or `test.py`.

If your research needs the original data (before segmentation and upsampling), it is available here.

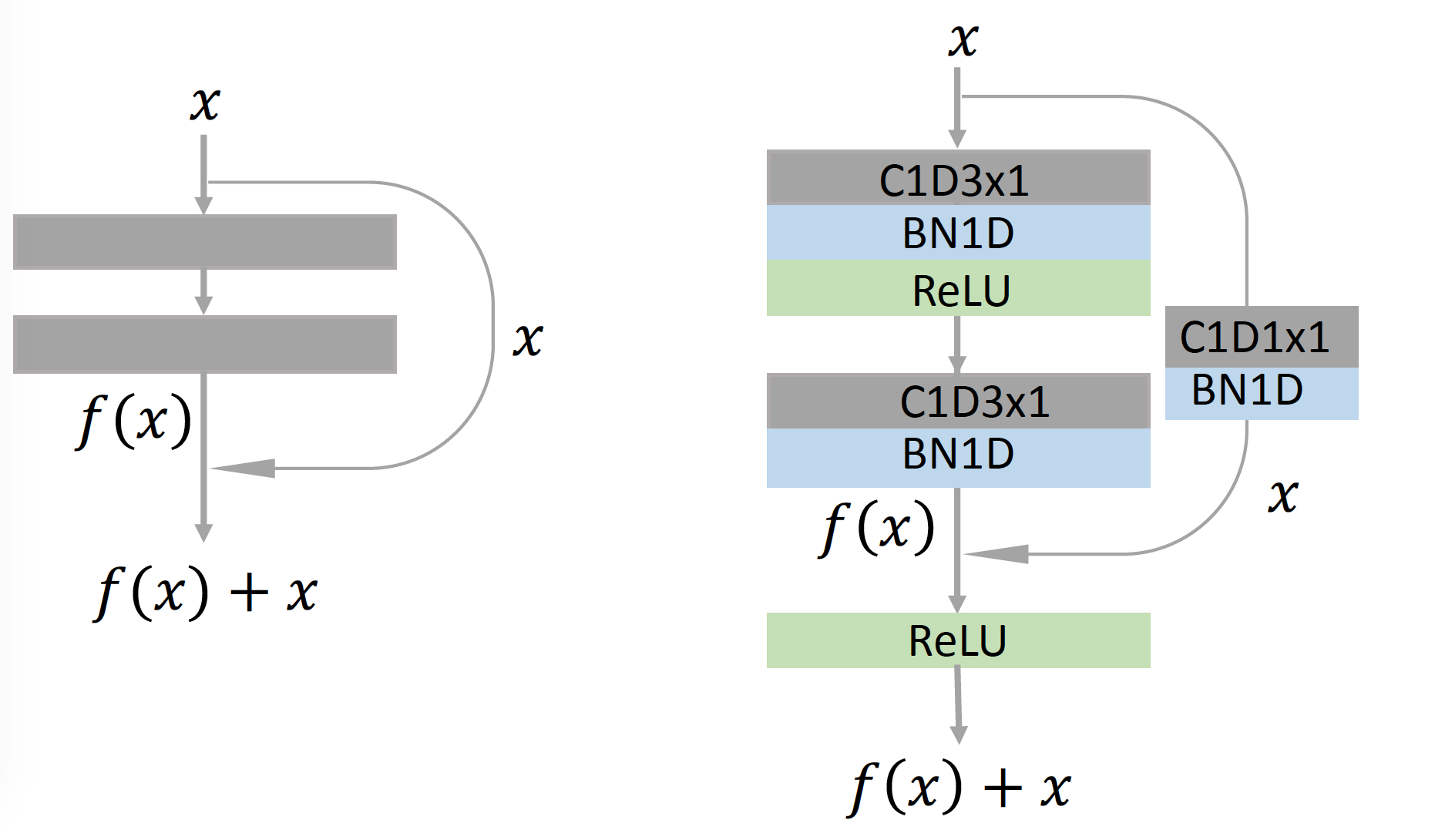

- 1D residual block

- 1D ResNet-[1,1,1,1]

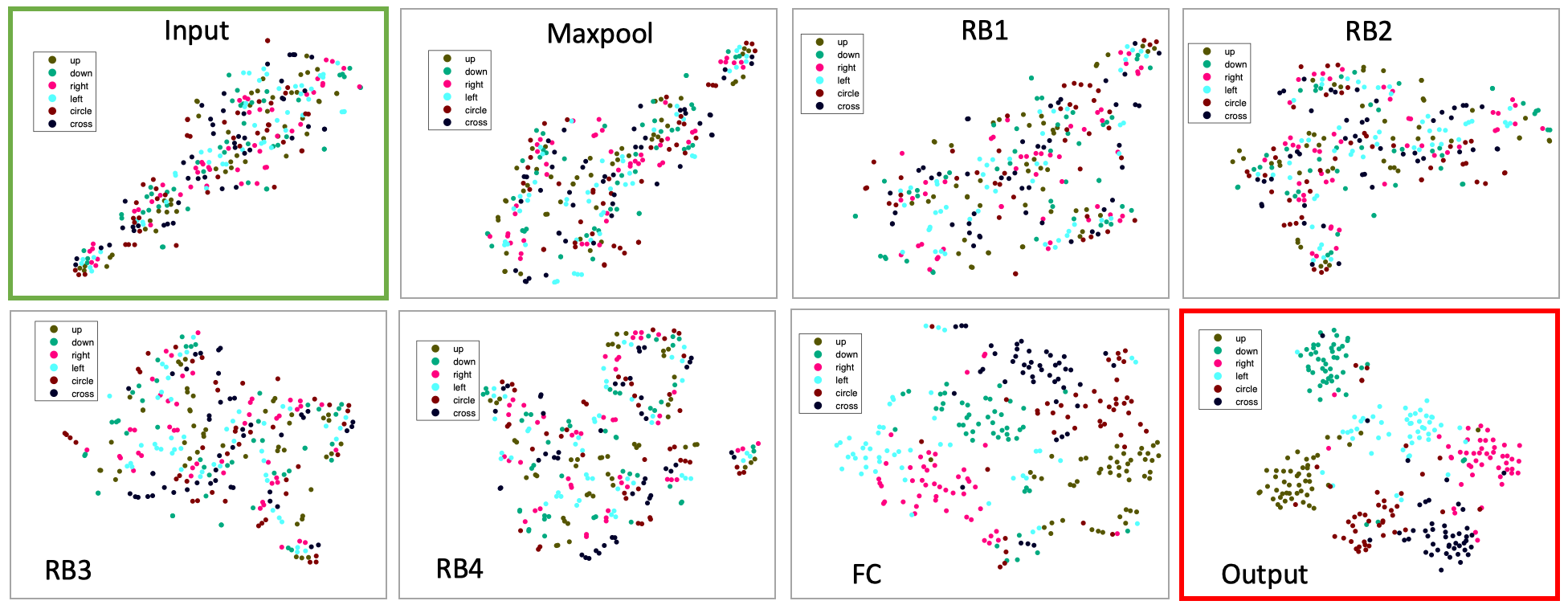

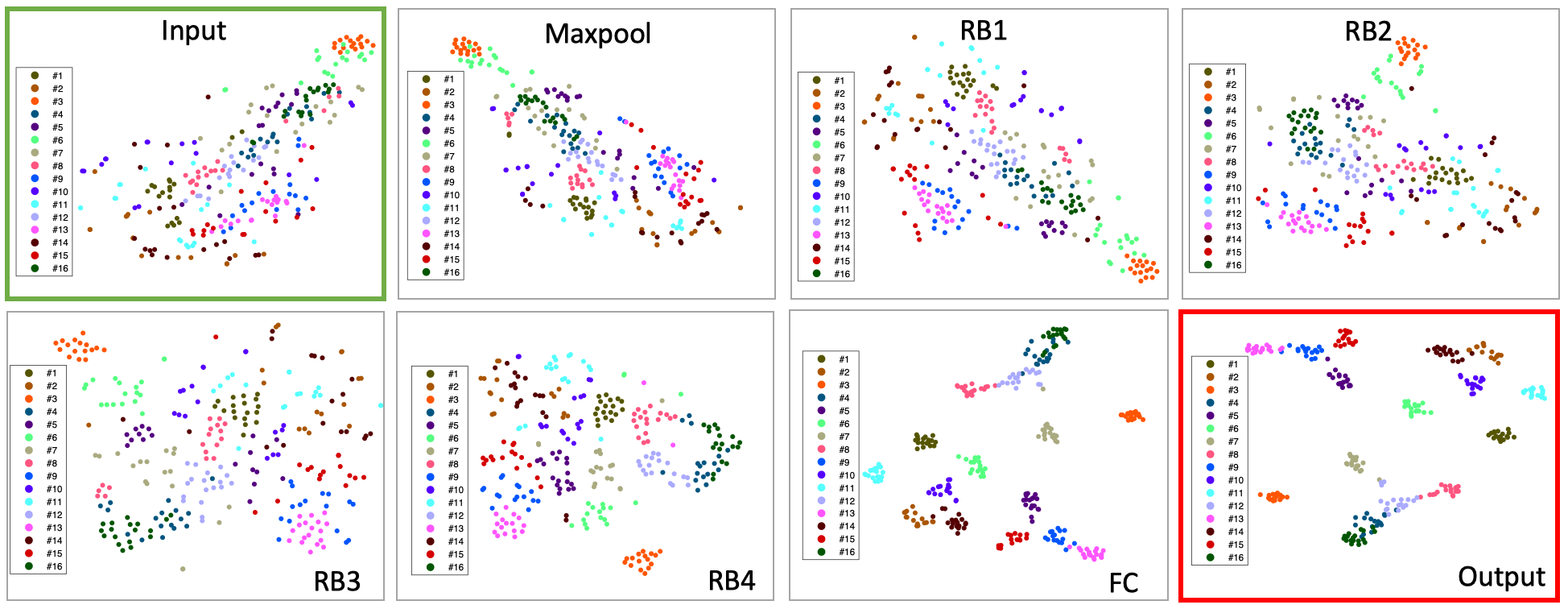
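The model is built from 1D residual blocks stacked as a 1D ResNet with one block per stage ([1,1,1,1]). The PyTorch sketch below is one plausible reading of that design, not the repository's model code. Assumptions, all hypothetical: ResNet-18-style basic blocks (kernel size 3, stage widths 64/128/256/512), a stride-2 stem, `in_channels=52` for the WiFi input, a 192-step time axis in the demo batch, and two linear heads (6 activities, 16 locations) on shared pooled features, trained with the unweighted sum of two cross-entropy losses. Check `train.py` for the actual sizes and loss weighting.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class BasicBlock1D(nn.Module):
    """1D residual block: conv-BN-ReLU, conv-BN, add shortcut, then ReLU."""

    def __init__(self, in_ch, out_ch, stride=1):
        super().__init__()
        self.conv1 = nn.Conv1d(in_ch, out_ch, kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm1d(out_ch)
        self.conv2 = nn.Conv1d(out_ch, out_ch, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm1d(out_ch)
        # Identity shortcut by default; 1x1 conv projection when the shape changes.
        self.shortcut = nn.Sequential()
        if stride != 1 or in_ch != out_ch:
            self.shortcut = nn.Sequential(
                nn.Conv1d(in_ch, out_ch, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm1d(out_ch),
            )

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        return F.relu(out + self.shortcut(x))


class ResNet1D(nn.Module):
    """1D ResNet with `layers` blocks per stage and two heads (activity, location).

    in_channels=52 and the stage widths are assumptions, not values from the repo.
    """

    def __init__(self, in_channels=52, layers=(1, 1, 1, 1), num_activities=6, num_locations=16):
        super().__init__()
        self.stem = nn.Sequential(
            nn.Conv1d(in_channels, 64, kernel_size=7, stride=2, padding=3, bias=False),
            nn.BatchNorm1d(64),
            nn.ReLU(inplace=True),
        )
        blocks, ch = [], 64
        for stage, (width, n_blocks) in enumerate(zip((64, 128, 256, 512), layers)):
            for i in range(n_blocks):
                # Halve the time axis at the first block of every stage after the first.
                stride = 2 if stage > 0 and i == 0 else 1
                blocks.append(BasicBlock1D(ch, width, stride))
                ch = width
        self.blocks = nn.Sequential(*blocks)
        self.pool = nn.AdaptiveAvgPool1d(1)
        # Shared features feed one linear head per task.
        self.activity_head = nn.Linear(ch, num_activities)
        self.location_head = nn.Linear(ch, num_locations)

    def forward(self, x):
        # x: (batch, in_channels, time)
        feat = self.pool(self.blocks(self.stem(x)))
        feat = feat.view(feat.size(0), -1)
        return self.activity_head(feat), self.location_head(feat)


torch.manual_seed(0)
model = ResNet1D()
x = torch.randn(8, 52, 192)  # hypothetical batch: 8 samples, 52 channels, 192 time steps
act_logits, loc_logits = model(x)
# Joint objective assumed here: unweighted sum of the two cross-entropy losses.
loss = F.cross_entropy(act_logits, torch.randint(0, 6, (8,))) + F.cross_entropy(
    loc_logits, torch.randint(0, 16, (8,))
)
loss.backward()
print(tuple(act_logits.shape), tuple(loc_logits.shape))  # → (8, 6) (8, 16)
```

Sharing one backbone between both heads is what makes the recognition and localization "joint" in this sketch; per-task loss weights would be a natural knob to add if one task dominates training.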

For t-SNE visualization, please download vis and run `main_plot_tsne.m`.
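The repository's visualization is a MATLAB script. For readers working in Python, a comparable 2-D t-SNE projection can be sketched with scikit-learn's `TSNE`; this is a swapped-in tool, not the authors' script, and the feature matrix below is random stand-in data, not the contents of vis. The helper name `embed_2d` and the perplexity of 10 are illustrative assumptions.

```python
import numpy as np
from sklearn.manifold import TSNE


def embed_2d(features: np.ndarray, perplexity: float = 10.0, seed: int = 0) -> np.ndarray:
    """Project an (n_samples, n_features) matrix to 2-D with t-SNE (hypothetical helper)."""
    tsne = TSNE(n_components=2, perplexity=perplexity, init="pca", random_state=seed)
    return tsne.fit_transform(features)


# Stand-in for real network features: 6 activity classes, 20 samples each,
# shifted apart so the clusters are separable.
rng = np.random.default_rng(0)
labels = np.repeat(np.arange(6), 20)
features = rng.normal(size=(120, 512)) + labels[:, None] * 3.0
points = embed_2d(features)
print(points.shape)  # → (120, 2)
```

To plot, pass `points` and `labels` to matplotlib, e.g. `plt.scatter(points[:, 0], points[:, 1], c=labels)`. The perplexity must be smaller than the number of samples.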

If this work helps your research, please cite the paper:

    @article{wang2019joint,
      title={Joint Activity Recognition and Indoor Localization With WiFi Fingerprints},
      author={Wang, Fei and Feng, Jianwei and Zhao, Yinliang and Zhang, Xiaobin and Zhang, Shiyuan and Han, Jinsong},
      journal={IEEE Access},
      volume={7},
      pages={80058--80068},
      year={2019},
    }