- About

- Development setup

- Production setup

- Environment variables

- Rest API and Queues board

- Working with scenarios

- Working with scenario schedulers

- Tutorial: Creating the first scenario

- Integrations

- License

Crawler is a standalone application written in Node.js built on top of Express.js, Crawlee, Puppeteer and BullMQ, allowing you to crawl data from web pages by defining scenarios. This is all controlled through the Rest API.

- Docker compose

- Make

$ git clone https://github.com/68publishers/crawler.git crawler

$ cd crawler

$ make init

HTTP Basic authorization is required for API access and administration. Here we need to create a user to access the application.

$ docker exec -it crawler-app npm run user:create

- Docker

- Postgres >=14.6

- Redis >=7

For production use, the following Redis settings must be made:

- Configure persistence with the Append-only-file strategy - https://redis.io/docs/management/persistence/#aof-advantages

- Set Max memory policy to noeviction - https://redis.io/docs/reference/eviction/#eviction-policies
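As a rough illustration of the two settings above, the following sketch writes a minimal Redis configuration fragment. The file name redis-crawler.conf is an assumption made for this example and is not part of Crawler; appendonly and maxmemory-policy are the standard Redis configuration directives for these options.

```shell
# Write a minimal Redis config fragment (the file name is hypothetical).
cat > redis-crawler.conf <<'EOF'
# Enable Append-only-file persistence
appendonly yes
# Never evict keys; writes fail with an error once the memory limit is reached
maxmemory-policy noeviction
EOF

# Not executed here: start Redis with the fragment, for example
# redis-server ./redis-crawler.conf
```

How the file reaches your Redis instance (a mounted volume, a managed-service setting, or command-line options) depends on your deployment.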

Firstly, you need to run the database migrations with the following command:

$ docker run \
    --network <NETWORK> \
    -e DB_URL=postgres://<USER>:<PASSWORD>@<HOSTNAME>:<PORT>/<DB_NAME> \
    --entrypoint '/bin/sh' \
    -it \
    --rm \
    68publishers/crawler:latest \
    -c 'npm run migrations:up'

Then download the seccomp file, which is required to run chrome:

$ curl -C - -O https://raw.githubusercontent.com/68publishers/crawler/main/.docker/chrome/chrome.json

And run the application:

$ docker run \
    --init \
    --network <NETWORK> \
    -e APP_URL=<APPLICATION_URL> \
    -e DB_URL=postgres://<USER>:<PASSWORD>@<HOSTNAME>:<PORT>/<DB_NAME> \
    -e REDIS_HOST=<HOSTNAME> \
    -e REDIS_PORT=<PORT> \
    -e REDIS_AUTH=<PASSWORD> \
    -p 3000:3000 \
    --security-opt seccomp=$(pwd)/chrome.json \
    -d \
    --name 68publishers_crawler \
    68publishers/crawler:latest

HTTP Basic authorization is required for API access and administration. Here we need to create a user to access the application.

$ docker exec -it 68publishers_crawler npm run user:create

| Name | Required | Default | Description |
|---|---|---|---|
| APP_URL | yes | - | Full origin of the application e.g. https://www.example.com. The variable is used to create links to screenshots etc. |
| APP_PORT | no | 3000 | Port on which the application listens |
| DB_URL | yes | - | Connection string to the Postgres database e.g. postgres://root:root@localhost:5432/crawler |
| REDIS_HOST | yes | - | Redis hostname |
| REDIS_PORT | yes | - | Redis port |
| REDIS_AUTH | no | - | Optional Redis password |
| REDIS_DB | no | 0 | Redis database number |
| WORKER_PROCESSES | no | 5 | Number of workers that process the queue of running scenarios |
| CRAWLEE_STORAGE_DIR | no | ./var/crawlee | Directory where the crawler stores runtime data |
| CHROME_PATH | no | /usr/bin/chromium-browser | Path to the Chromium executable file |
| SENTRY_DSN | no | - | Logging to Sentry is enabled if the variable is set |
| SENTRY_SERVER_NAME | no | crawler | Server name that is passed to the Sentry logger |
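To make the "Required" column concrete, here is a small sketch of a pre-flight check that could run before starting the container. The function name missing_required_vars is hypothetical and not part of Crawler; it only covers the four variables marked as required in the table.

```shell
# Print the name of every required variable that is unset or empty.
# The function name is hypothetical; the variable names come from the table.
missing_required_vars() {
  for name in APP_URL DB_URL REDIS_HOST REDIS_PORT; do
    # Indirect lookup of the variable called $name (POSIX sh compatible).
    eval "value=\${$name:-}"
    [ -n "$value" ] || printf '%s\n' "$name"
  done
}

# Possible usage (not executed here):
# missing=$(missing_required_vars)
# [ -z "$missing" ] || { echo "Missing: $missing" >&2; exit 1; }
```

The check only tests for presence. It does not validate that DB_URL is a well-formed connection string or that Redis is reachable.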

The specification of the Rest API (Swagger UI) can be found at the /api-docs endpoint, usually http://localhost:3000/api-docs in a development setup. You can try calling all endpoints there.

Alternatively, the specification can be viewed online.

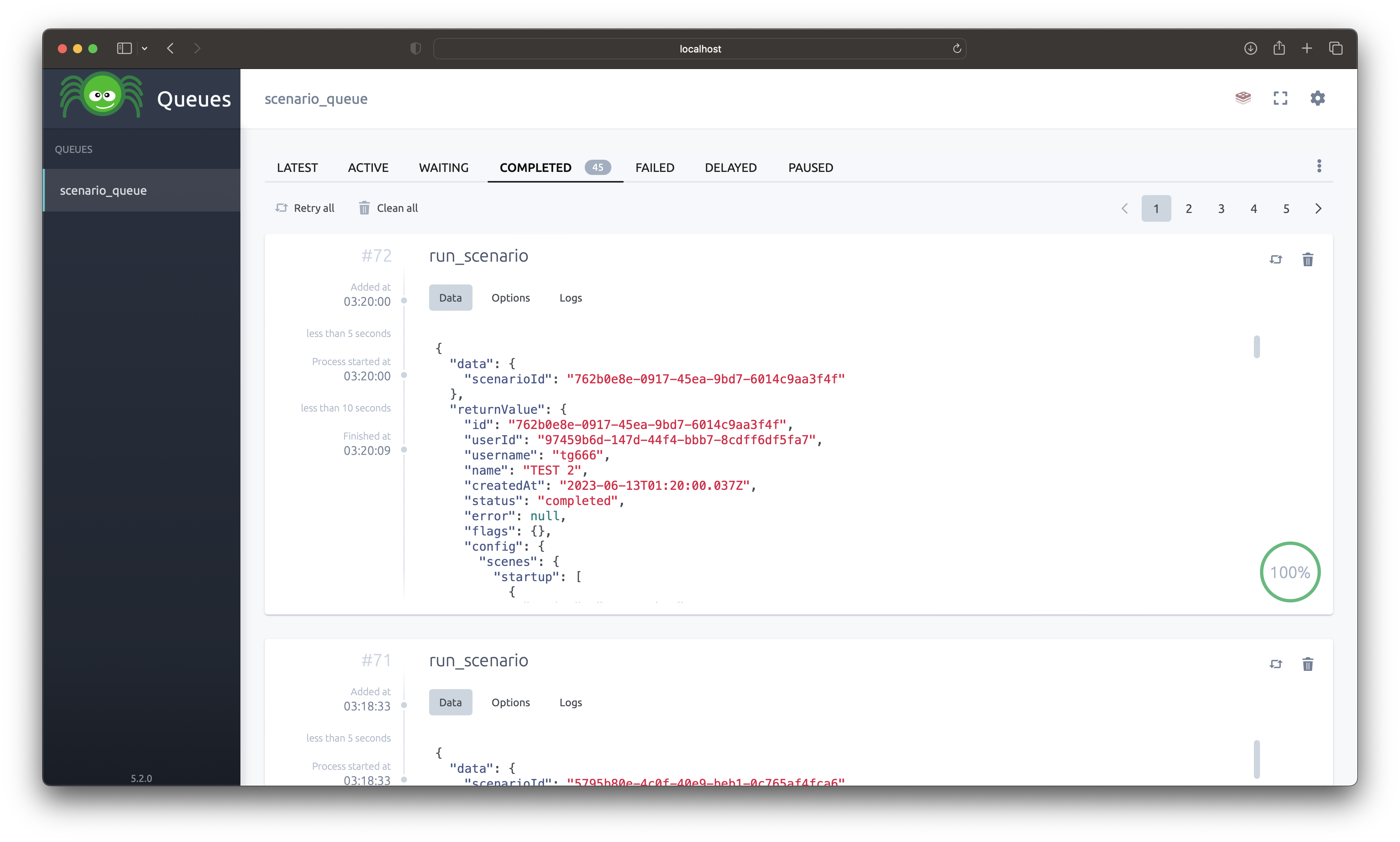

BullBoard is located at /admin/queues. Here you can see all the scenarios that are currently running or have already run.
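Because API access and administration require HTTP Basic authorization, a request from the command line might look roughly like the sketch below. The variable names and default credentials are assumptions for illustration; use the user created with npm run user:create. The helper basic_auth_header is hypothetical and only shows which header value curl -u sends.

```shell
# Hypothetical settings; replace with your own URL and credentials.
CRAWLER_URL="${CRAWLER_URL:-http://localhost:3000}"
CRAWLER_USER="${CRAWLER_USER:-admin}"
CRAWLER_PASSWORD="${CRAWLER_PASSWORD:-secret}"

# Build the Authorization header value for HTTP Basic authentication:
# "Basic " followed by base64("user:password").
basic_auth_header() {
  printf 'Basic %s' "$(printf '%s' "$1:$2" | base64)"
}

# Not executed here: curl builds the same header itself when given -u.
# curl -u "$CRAWLER_USER:$CRAWLER_PASSWORD" "$CRAWLER_URL/api-docs"
# curl -H "Authorization: $(basic_auth_header "$CRAWLER_USER" "$CRAWLER_PASSWORD")" "$CRAWLER_URL/admin/queues"
```

Basic authentication sends credentials in an easily decoded form, so in production the application should be served over HTTPS.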

@todo

@todo

@todo

- PHP Client for Crawler's API - 68publishers/crawler-client-php

The package is distributed under the MIT License. See LICENSE for more information.