The purpose of this project was to build a system that lets a user control the Arlo mobile robot with static hand gestures captured by a camera. The project consists of three parts. The first was to develop a script that recognizes the gestures used to control the robot; for this purpose the author used a convolutional neural network, which had to be trained for the task. The second was to create a robot program that reacts correctly to the application's commands. The last was to handle communication between the two programs, for which the Bluetooth 4.0 Low Energy standard was used. Unfortunately, the Bluetooth module was damaged during the final control test, so the author switched to simple serial communication instead. The main goals of the project were real-time processing and high hand-gesture recognition accuracy.
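
The README does not document the message format used between the application and the robot program. As a minimal sketch, assuming a simple newline-terminated ASCII line protocol, commands could be framed as below; the command names (`FORWARD`, `TURN_LEFT`, etc.) and the helpers `encode_command` / `decode_command` are hypothetical and not taken from the project.

```python
from typing import Tuple

# Hypothetical command vocabulary; the real protocol is not documented in the README.
COMMANDS = frozenset({"FORWARD", "BACKWARD", "TURN_LEFT", "TURN_RIGHT",
                      "TURN_AROUND", "STOP"})


def encode_command(name: str, value: int = 0) -> bytes:
    """Encode one command as a single ASCII line: NAME, a space, VALUE, newline."""
    if name not in COMMANDS:
        raise ValueError(f"unknown command: {name!r}")
    return f"{name} {int(value)}\n".encode("ascii")


def decode_command(line: bytes) -> Tuple[str, int]:
    """Parse one received line back into (name, value); the robot side would mirror this."""
    name, value = line.decode("ascii").strip().split(" ")
    if name not in COMMANDS:
        raise ValueError(f"unknown command: {name!r}")
    return name, int(value)
```

With pySerial, the encoded bytes could be written through `serial.Serial(port, baudrate).write(...)`; the port name and baud rate depend on the actual setup. A line-based text format is easy to inspect in a serial monitor, which helps when debugging the robot side.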

Arlo: https://www.parallax.com/product/arlo-robotic-platform-system

Full video: https://www.youtube.com/watch?v=j6qOpACT1z0

- Arlo mobile robot

- Bluetooth 4.0 LE module

- Laptop with Bluetooth communication enabled

- Clone the repo

- Install Python 3

- Install the Python dependencies, such as PyTorch, Bleak and PyQt

- Run the script "mainAplication.py"
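
Before running the application, a quick check that the dependencies are importable can save a confusing start-up failure. This is a minimal sketch; the import names are assumptions: PyTorch imports as `torch` and Bleak as `bleak`, while `PyQt5` is a guess, since the README says only "PyQt".

```python
import importlib.util

# Assumed import names for the listed dependencies ("PyQt5" is a guess for "PyQt").
REQUIRED_MODULES = ("torch", "bleak", "PyQt5")


def missing_modules(names=REQUIRED_MODULES):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All dependencies found.")
```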

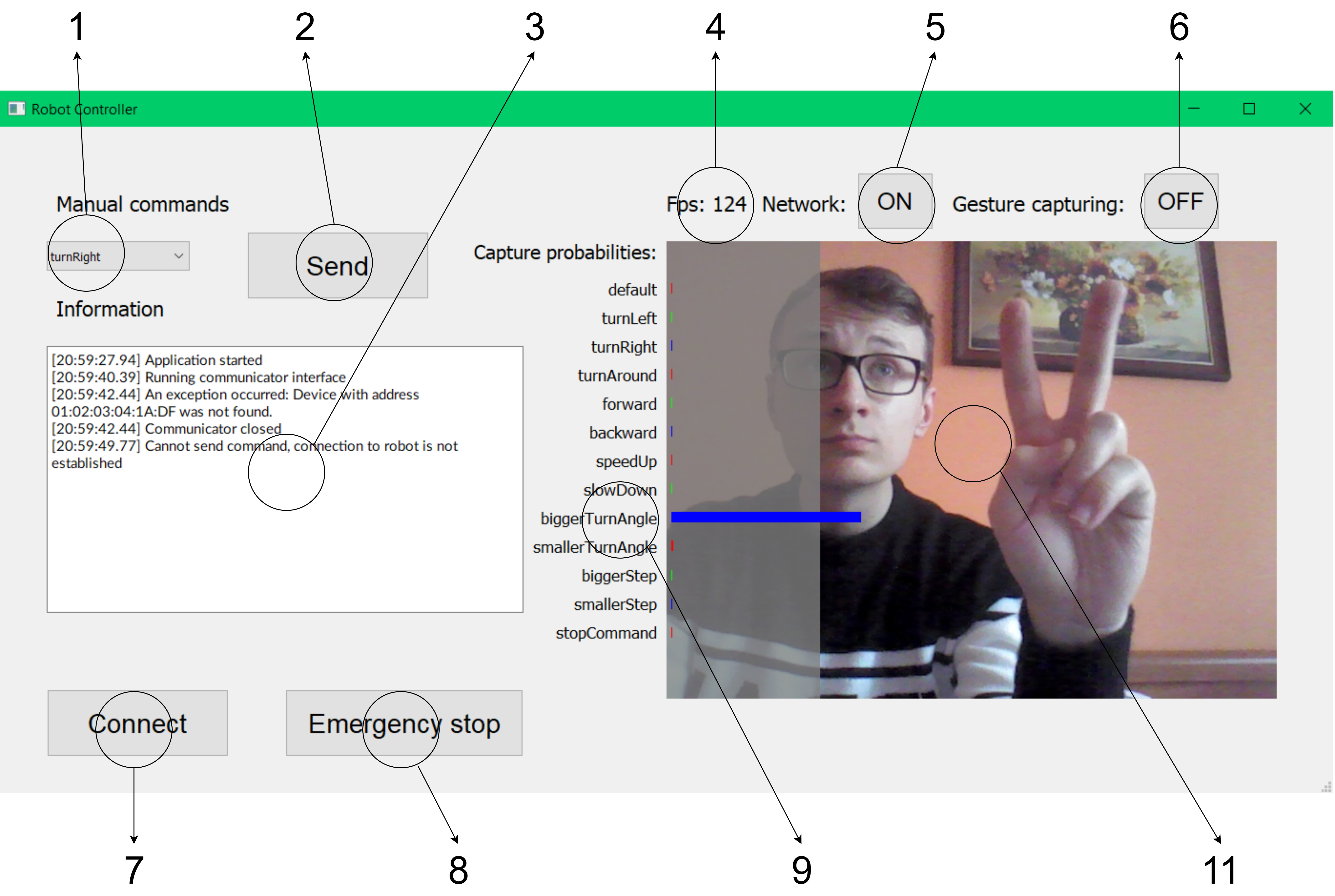

- Manual command list

- Send the manually chosen command

- Command window: shows the captured gestures, command execution status, etc.

- Frames per second

- On/Off switch for neural network processing

- On/Off switch for automatic gesture detection. If a gesture is detected for more than 2 s, the script automatically sends the corresponding command to Arlo. It can also be activated with Ctrl.

- Connect/Disconnect

- Emergency stop: cancels all commands currently being executed. It can also be activated with Shift.

- Commands and their probability of occurrence in the current frame

- Frame preview at 640x480 resolution; the grey area is ignored during gesture detection.
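
The automatic detection rule (send a command once a gesture has been detected for more than 2 s) can be sketched as a small hold timer fed with per-frame class probabilities. This is an illustrative sketch, not the project's code: the class name `HoldToSend`, the 0.8 confidence threshold, and treating "Default" as "no command" are all assumptions.

```python
from typing import Optional, Sequence


class HoldToSend:
    """Emit a label once it has been the top prediction for longer than hold_seconds."""

    def __init__(self, labels: Sequence[str], hold_seconds: float = 2.0,
                 min_prob: float = 0.8, ignore: Sequence[str] = ("Default",)):
        self.labels = list(labels)
        self.hold_seconds = hold_seconds  # 2 s, as stated in the README
        self.min_prob = min_prob          # hypothetical confidence threshold
        self.ignore = set(ignore)         # assumption: "Default" means "no command"
        self._current: Optional[str] = None
        self._since = 0.0
        self._sent = False

    def update(self, probs: Sequence[float], now: float) -> Optional[str]:
        """Feed one frame's class probabilities; return a label when it should be sent."""
        best = max(range(len(probs)), key=lambda i: probs[i])
        label: Optional[str] = self.labels[best]
        if probs[best] < self.min_prob or label in self.ignore:
            label = None
        if label != self._current:
            # A different (or no) gesture: restart the hold timer.
            self._current, self._since, self._sent = label, now, False
            return None
        if label is None or self._sent:
            return None
        if now - self._since > self.hold_seconds:
            self._sent = True  # send only once per continuous hold
            return label
        return None
```

In a capture loop, `now` would typically come from `time.monotonic()`, which is unaffected by system clock changes. Sending only once per continuous hold keeps a gesture held for 10 s from queuing five identical commands.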

- Default

- Turn left

- Turn right

- Turn around

- Forward

- Backward

- Speed up

- Slow down

- Bigger turn angle

- Smaller turn angle

- Bigger step

- Smaller step

- Emergency Stop
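
The gestures above split into motion commands and gestures that tune the robot's speed, turn angle and step length. One way to model this, sketched under assumptions, is a settings object that adjusts its values on tuning gestures and returns a motion for movement gestures. The class `MotionSettings`, the default values, increments, limits, units, and the sign convention for left turns are all hypothetical; the README does not specify them.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical increments and limits; the README gives no real values or units.
SPEED_DELTA, SPEED_RANGE = 10, (10, 100)
ANGLE_DELTA, ANGLE_RANGE = 15, (15, 180)   # degrees
STEP_DELTA, STEP_RANGE = 10, (10, 200)     # e.g. centimetres


def _clamp(value: int, bounds: Tuple[int, int]) -> int:
    lo, hi = bounds
    return max(lo, min(hi, value))


@dataclass
class MotionSettings:
    speed: int = 50
    turn_angle: int = 90
    step: int = 50

    def apply(self, gesture: str) -> Optional[Tuple[str, int]]:
        """Adjust settings for tuning gestures; return (kind, amount) for motion gestures."""
        if gesture == "Speed up":
            self.speed = _clamp(self.speed + SPEED_DELTA, SPEED_RANGE)
        elif gesture == "Slow down":
            self.speed = _clamp(self.speed - SPEED_DELTA, SPEED_RANGE)
        elif gesture == "Bigger turn angle":
            self.turn_angle = _clamp(self.turn_angle + ANGLE_DELTA, ANGLE_RANGE)
        elif gesture == "Smaller turn angle":
            self.turn_angle = _clamp(self.turn_angle - ANGLE_DELTA, ANGLE_RANGE)
        elif gesture == "Bigger step":
            self.step = _clamp(self.step + STEP_DELTA, STEP_RANGE)
        elif gesture == "Smaller step":
            self.step = _clamp(self.step - STEP_DELTA, STEP_RANGE)
        elif gesture == "Forward":
            return ("drive", self.step)
        elif gesture == "Backward":
            return ("drive", -self.step)
        elif gesture == "Turn left":
            return ("turn", -self.turn_angle)  # assumed: negative angle = left
        elif gesture == "Turn right":
            return ("turn", self.turn_angle)
        elif gesture == "Turn around":
            return ("turn", 180)
        elif gesture == "Emergency Stop":
            return ("stop", 0)
        elif gesture != "Default":
            raise ValueError(f"unknown gesture: {gesture!r}")
        return None
```

Clamping keeps repeated "Speed up" or "Bigger step" gestures from pushing the robot past safe limits.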

Distributed under the MIT License. See LICENSE for more information.

Sylwester Dawida

Poland, AGH

2020