Weifeng Lin, Xinyu Wei, Ruichuan An, Peng Gao

Bocheng Zou, Yulin Luo, Siyuan Huang, Shanghang Zhang and Hongsheng Li

[🌐 Project Page] [📖 Paper] [🤗 MDVP-Data] [🤗 MDVP-Bench] [🤖️ Model] [🎮 Demo]

- [2024.03.28] 🔥 We released the MDVP-Data dataset and MDVP-Bench benchmark.

- [2024.03.28] 🔥 We released the SPHINX-V-13B model and online demo.

- [2024.03.28] 🚀 We released the arXiv paper.

- [2024.03.28] 🚀 We released the training and evaluation code.

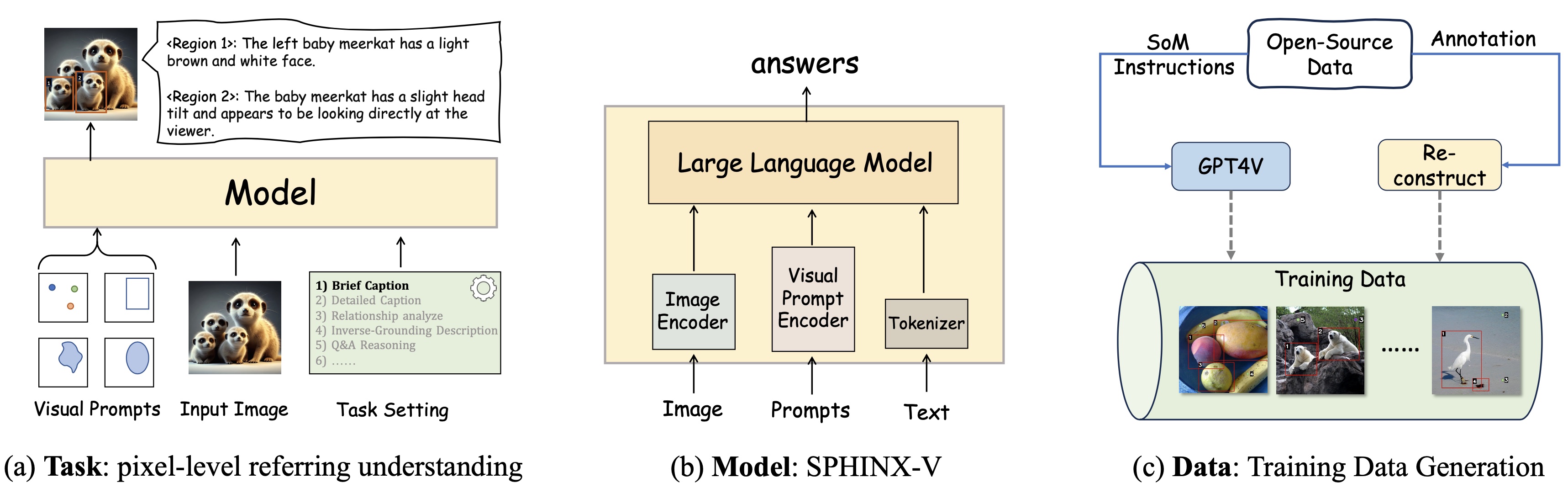

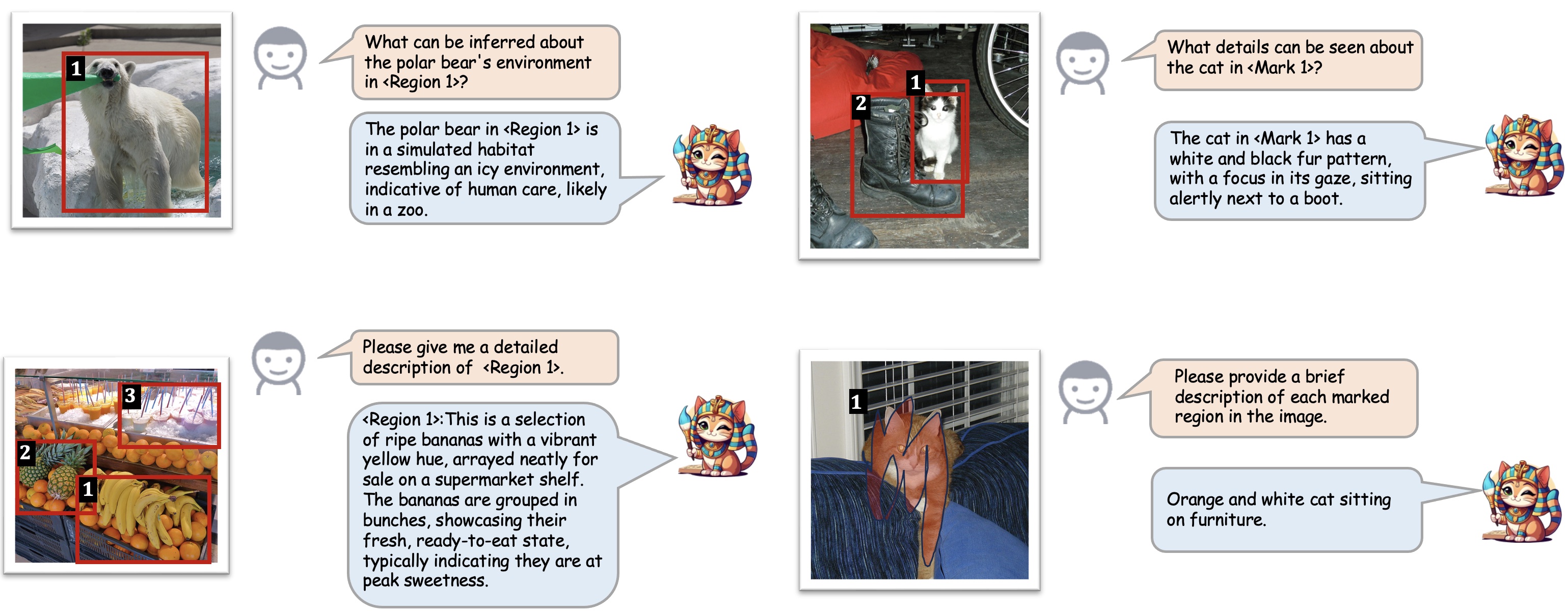

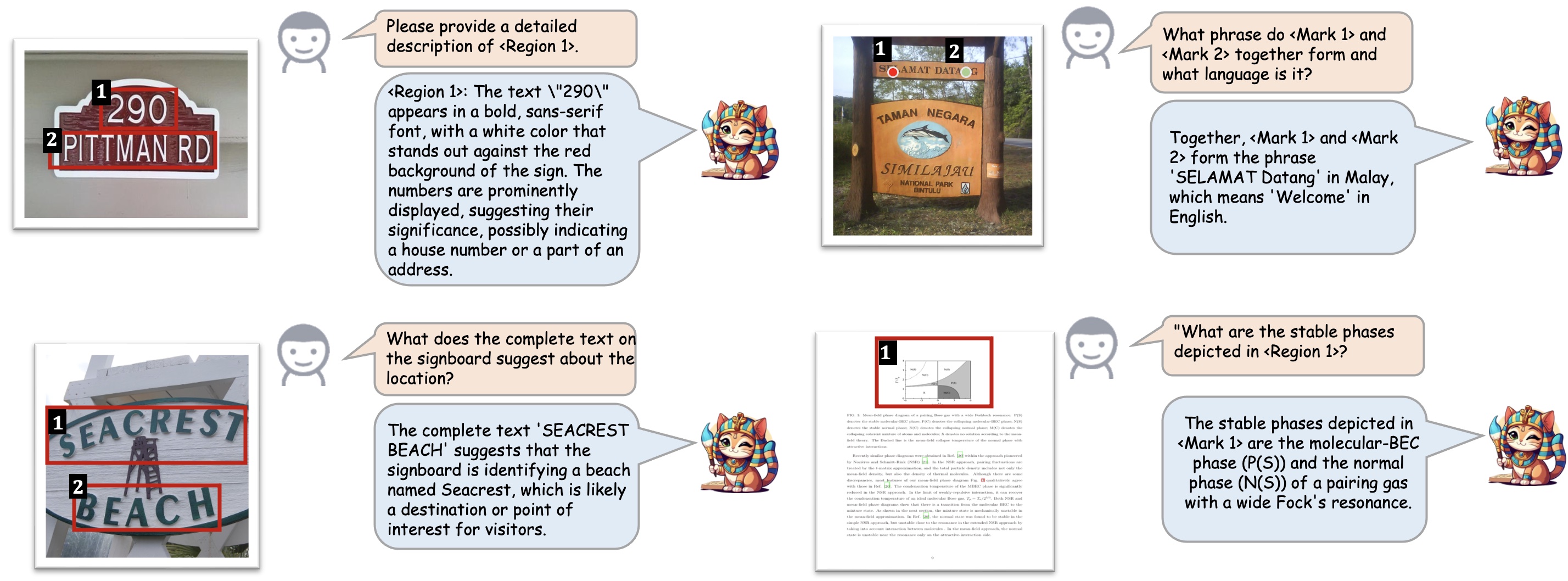

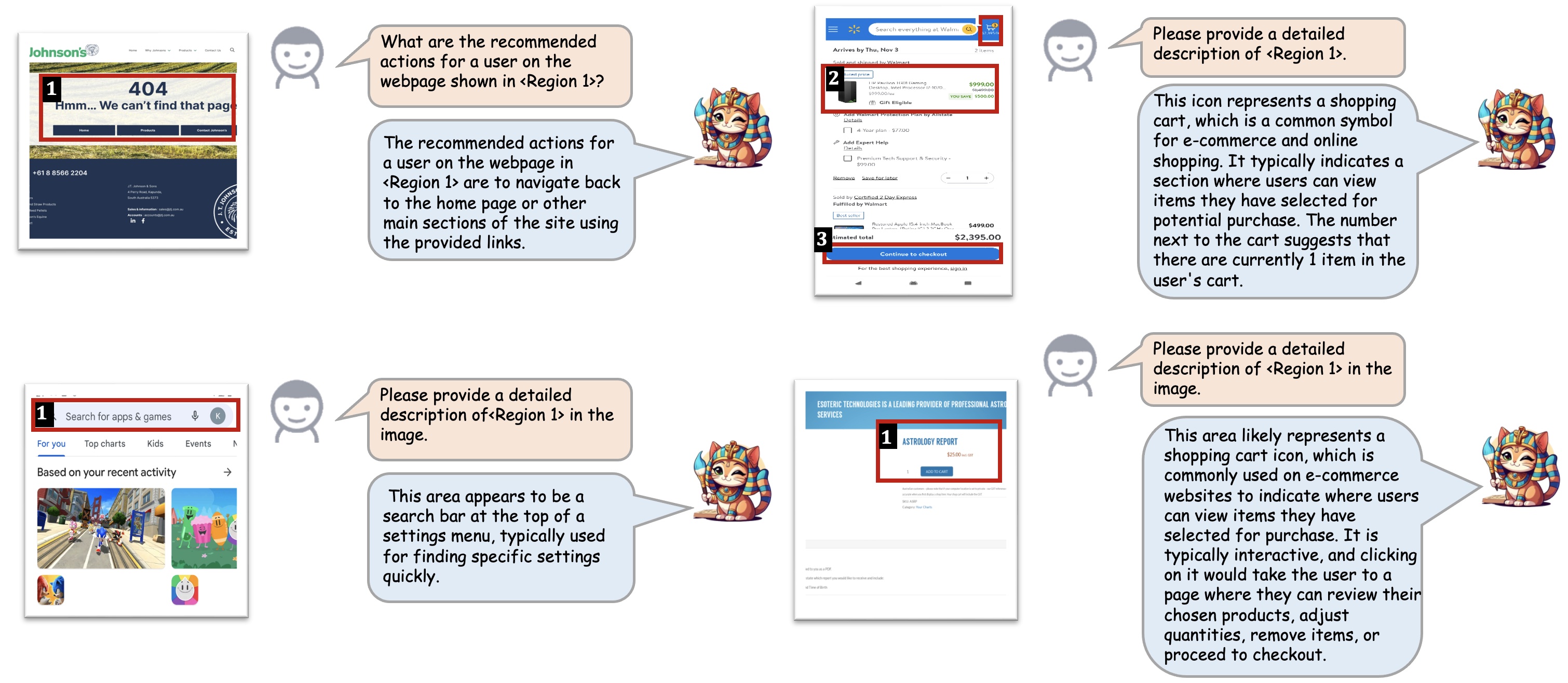

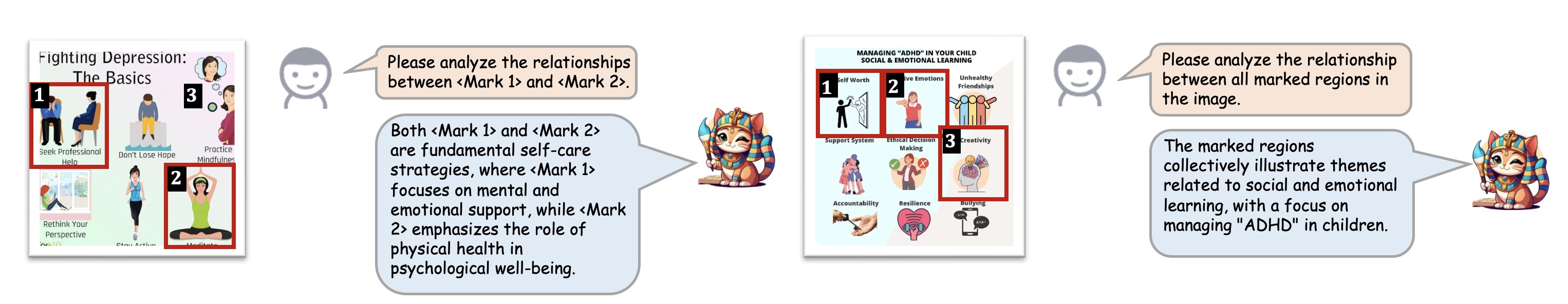

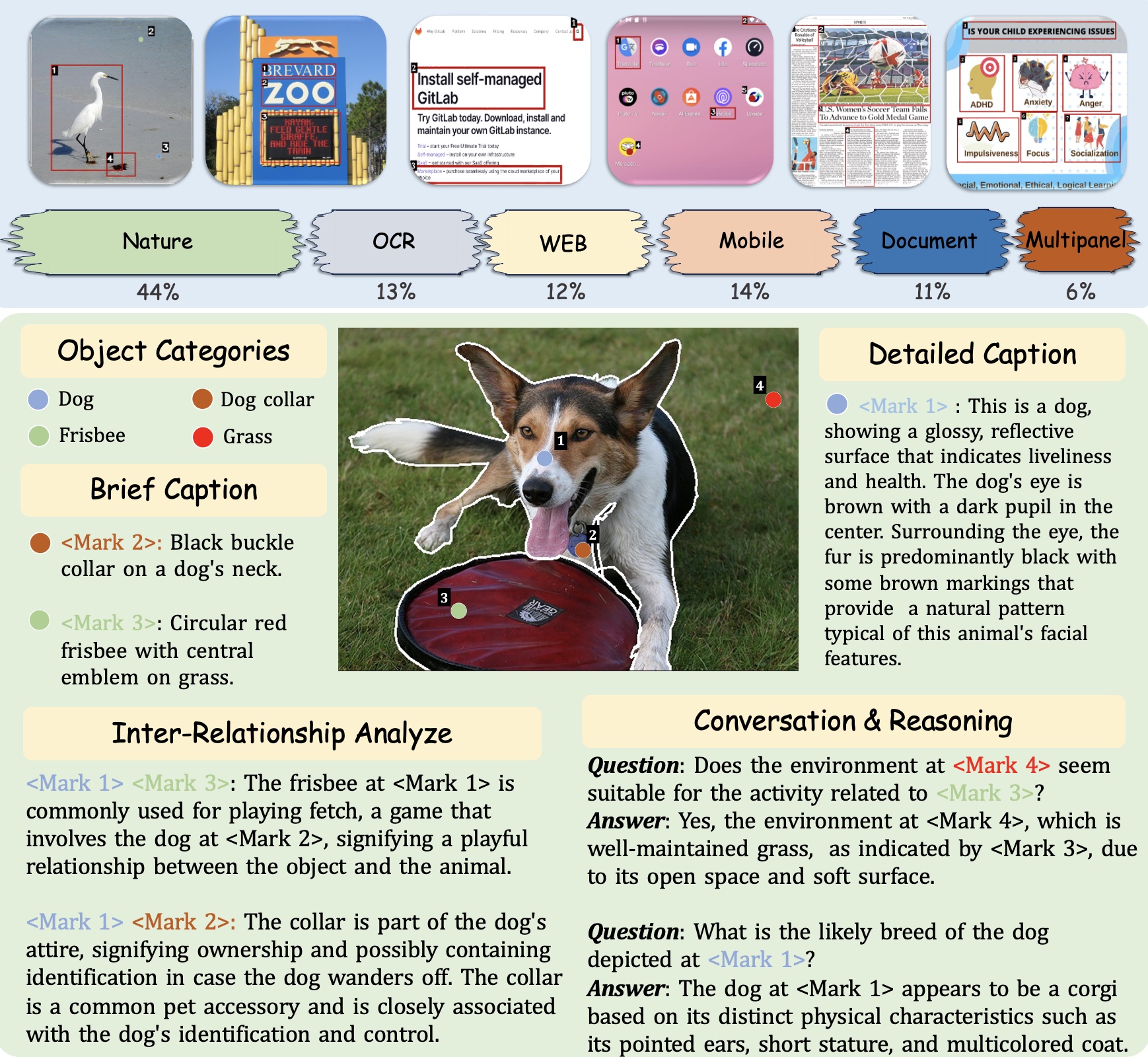

The interaction between humans and artificial intelligence (AI) is a crucial factor that reflects the effectiveness of multimodal large language models (MLLMs). However, current MLLMs primarily focus on image-level comprehension and limit interaction to textual instructions, thereby constraining their flexibility in usage and depth of response. Therefore, we introduce the Draw-and-Understand project: a new model, a multi-domain dataset, and a challenging benchmark for visual prompting.

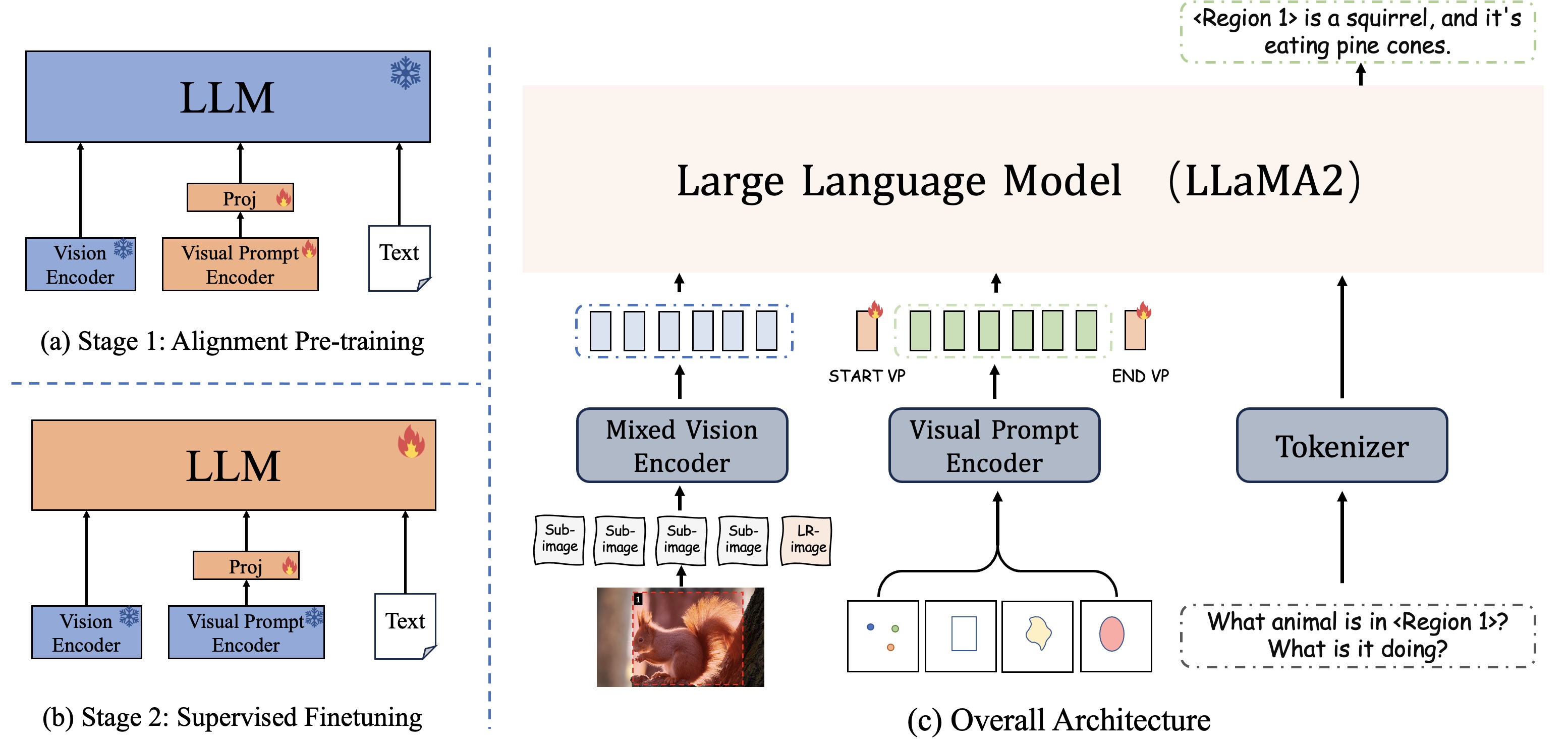

Specifically, the model is named SPHINX-V, a new multimodal large language model designed for visual prompting, equipped with a novel visual prompt encoder and a two-stage training strategy. SPHINX-V supports multiple visual prompts of various types simultaneously, significantly enhancing user flexibility and achieving a fine-grained, open-world understanding of visual prompts.

- Clone this repository and navigate to Draw-and-Understand folder

git clone https://github.com/AFeng-x/Draw-and-Understand.git

cd Draw-and-Understand

- Install packages

# Create a new conda environment named 'sphinx-v' with Python 3.10

conda create -n sphinx-v python=3.10 -y

# Activate the 'sphinx-v' environment

conda activate sphinx-v

# Install required packages from 'requirements.txt'

pip install -r requirements.txt

- Optional: Install Flash-Attention

# Draw-and-Understand is powered by flash-attention for efficient attention computation.

pip install flash-attn --no-build-isolation

- Install Draw-and-Understand as Python Package

# go to the root directory of Draw-and-Understand

cd Draw-and-Understand

# install Draw-and-Understand

pip install -e .

# After this, you will be able to invoke “import SPHINX_V” regardless of the working directory.

- To enable the segmentation ability shown in our official demo, SAM is also needed:

pip install git+https://github.com/facebookresearch/segment-anything.git

SPHINX-V-13b Stage-1 Pre-training Weights: 🤗Hugging Face / Baidu

SPHINX-V-13b Stage-2 Fine-tuning Weights: 🤗Hugging Face / Baidu

Other required weights and configurations: 🤗Hugging Face

Please download them to your own machine. The file structure should appear as follows:

accessory/checkpoints/sphinx-v/stage2

├── consolidated.00-of-02.model.pth

├── consolidated.01-of-02.model.pth

├── tokenizer.model

├── config.json

└── meta.json

accessory/checkpoints/llama-2-13b

└── params.json

accessory/checkpoints/tokenizer

└── tokenizer.model
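Before launching training or the demo, it can help to confirm that the layout above is in place. The following is a minimal sketch and is not part of the repository: the helper name `missing_checkpoint_files` and the `EXPECTED_LAYOUT` table are hypothetical, and the file list simply mirrors the tree shown above.

```python
from pathlib import Path

# Expected files, copied from the tree above (paths relative to the repo root).
# This table is an illustration, not something the repository ships.
EXPECTED_LAYOUT = {
    "accessory/checkpoints/sphinx-v/stage2": [
        "consolidated.00-of-02.model.pth",
        "consolidated.01-of-02.model.pth",
        "tokenizer.model",
        "config.json",
        "meta.json",
    ],
    "accessory/checkpoints/llama-2-13b": ["params.json"],
    "accessory/checkpoints/tokenizer": ["tokenizer.model"],
}


def missing_checkpoint_files(repo_root="."):
    """Return the expected files that are absent under repo_root."""
    root = Path(repo_root)
    missing = []
    for directory, filenames in EXPECTED_LAYOUT.items():
        for name in filenames:
            if not (root / directory / name).is_file():
                missing.append(f"{directory}/{name}")
    return missing


if __name__ == "__main__":
    absent = missing_checkpoint_files(".")
    if absent:
        print("Missing files:")
        for path in absent:
            print("  " + path)
    else:
        print("All expected checkpoint files are present.")
```

Run it from the root of the Draw-and-Understand folder; an empty result means every file listed in the tree was found.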

- MDVP-Data is a comprehensive dataset for multi-domain visual-prompt instruction tuning. This dataset encompasses data for both point-level and region-level understanding, designed to enhance a model’s comprehension ability and robustness.

- Based on MDVP-Data, we also introduce MDVP-Bench, a challenging benchmark designed to evaluate tasks that require a combination of detailed description referrals, inter-relationship analysis, and complex reasoning.

- Prepare data

- Please download the annotations of our pre-training data and images. (Refer to the Dataset Preparation)

- Stage 1: Image-Visual Prompt-Text Alignment Pre-training

- Download the pretrained SPHINX-v2-1k weights from Hugging Face or Baidu (88z0). Place the model in the "accessory/checkpoints/sphinx-v2-1k" directory.

- Download the ViT-H SAM model and place the model in the "accessory/checkpoints/sam" directory.

- Pre-training configuration is vp_pretrain.yaml. Please ensure that all annotations are included and update the image paths in each JSON file to reflect the paths on your machine.

- Update the model paths in the run script.

- Run:

bash scripts/train_sphinx-v_pretrain_stage1.sh
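Updating the image paths in each annotation JSON file by hand is error-prone, so a small script can help. The sketch below is a hypothetical helper, not part of the repository, and it rests on an assumption about the annotation format: each file holds a JSON list of records, and the image path is stored as a string under an `image` key. Check the actual files and adjust the `key` argument if they differ.

```python
import json
from pathlib import Path


def rewrite_image_paths(annotation_file, old_prefix, new_prefix, key="image"):
    """Replace old_prefix with new_prefix in each record's image path.

    Assumption (not confirmed by the README): the file holds a JSON list
    of dicts and the image path is stored under `key`.
    Returns the number of records that were changed.
    """
    path = Path(annotation_file)
    records = json.loads(path.read_text(encoding="utf-8"))
    changed = 0
    for record in records:
        value = record.get(key)
        if isinstance(value, str) and value.startswith(old_prefix):
            record[key] = new_prefix + value[len(old_prefix):]
            changed += 1
    # Write the updated records back to the same file.
    path.write_text(
        json.dumps(records, ensure_ascii=False, indent=2), encoding="utf-8"
    )
    return changed
```

For example, `rewrite_image_paths("annotations.json", "/old/images/", "/data/images/")` would rewrite every matching path in place; both the file name and the prefixes here are placeholders. Back up the annotation files first, since the script overwrites them.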

- Stage 2: Multi-Task End-to-End Supervised Finetuning

- Download SPHINX-V Stage-1 Pre-training Weights from 🤖️Checkpoints. Alternatively, you may use your own model weights trained from Stage 1.

- Place the model in the "accessory/checkpoints/sphinx-v/stage1" directory.

- Fine-tuning configuration is vp_finetune.yaml. Please ensure that all annotations are included and update the image paths in each JSON file to reflect the paths on your machine.

- Update the model paths in the run script.

- Run:

bash scripts/train_sphinx-v_finetune_stage2.sh

See evaluation for details.

We provide a simple inference example in inference.py.

You can launch this script with:

torchrun --master_port=1112 --nproc_per_node=1 inference.py
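If port 1112 is already taken on your machine, torchrun will fail to start. The sketch below shows one way to pick an unused port and build the same launch command. Both helper names (`find_free_port`, `build_launch_command`) are hypothetical and not part of the repository; only the `--master_port` and `--nproc_per_node` flags come from the command above.

```python
import socket


def find_free_port():
    """Ask the OS for a currently unused TCP port on localhost."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.bind(("127.0.0.1", 0))  # port 0 lets the OS choose
        return sock.getsockname()[1]


def build_launch_command(script="inference.py", nproc_per_node=1, master_port=None):
    """Build the torchrun argument list, picking a free port if none is given."""
    port = master_port if master_port is not None else find_free_port()
    return [
        "torchrun",
        f"--master_port={port}",
        f"--nproc_per_node={nproc_per_node}",
        script,
    ]


if __name__ == "__main__":
    # Print the command; pass the list to subprocess.run to execute it.
    print(" ".join(build_launch_command()))
```

Note that the port is released before torchrun binds it, so another process could claim it in between; for a single-user workstation this is usually acceptable.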

💻 Requirements:

- This demo requires the SPHINX-V stage-2 checkpoints and the ViT-H SAM model; place them in the accessory/checkpoints/ directory.

- Make sure you have installed Segment Anything.

- Run:

cd accessory/demos

bash run.sh

- LLaMA-Accessory: the codebase we built upon.

- SAM: the demo also uses the segmentation result from SAM.

If you find our Draw-and-Understand project useful for your research and applications, please kindly cite using this BibTeX:

@misc{lin2024drawandunderstand,

title={Draw-and-Understand: Leveraging Visual Prompts to Enable MLLMs to Comprehend What You Want},

author={Weifeng Lin and Xinyu Wei and Ruichuan An and Peng Gao and Bocheng Zou and Yulin Luo and Siyuan Huang and Shanghang Zhang and Hongsheng Li},

year={2024},

eprint={2403.20271},

archivePrefix={arXiv},

primaryClass={cs.CV}

}