A simple program that can capture an image and quickly determine if it was created by a diffusion model or a GAN using the model presented in the paper "On The Detection of Synthetic Images Generated by Diffusion Models" (https://arxiv.org/abs/2211.00680). By @chiefJang, @KY00KIM, @sunovivid

- clone this repository

- run

$ pip install -r requirements.txt

- download the pretrained weights and put them in the weights folder

- run

$ python main.py

This is the official repository of the paper "On the detection of synthetic images generated by diffusion models" by Riccardo Corvi, Davide Cozzolino, Giada Zingarini, Giovanni Poggi, Koki Nagano, Luisa Verdoliva.

The synthetic images used for testing can be downloaded from the following link, alongside a CSV file stating the processing applied in the paper to each image. The real images can be downloaded from the following freely available datasets: IMAGENET, UCID, COCO - Common Objects in Context. The real images should then be placed in a folder with the same name as the one recorded in the CSV file. The directory containing the test set should have the following structure:

Testset directory

|--biggan_256

|--biggan_512

.

.

.

|--real_coco_valid

|--real_imagenet_valid

|--real_ucid

.

.

.

|--taming-transformers_segm2image_valid
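Before running the scripts, it can help to confirm that the test set folder matches the layout above. The following is a minimal sketch, not part of the repository: the helper name `missing_subdirs` is hypothetical, and the list covers only the folder names shown in the listing, since the elided entries are not known here.

```python
from pathlib import Path

# Folder names copied from the directory listing above. The listing elides
# further entries, so this is a partial check, not the complete test set.
KNOWN_SUBDIRS = [
    "biggan_256",
    "biggan_512",
    "real_coco_valid",
    "real_imagenet_valid",
    "real_ucid",
    "taming-transformers_segm2image_valid",
]


def missing_subdirs(testset_dir, expected=KNOWN_SUBDIRS):
    """Return the expected subfolder names that are absent from testset_dir.

    Hypothetical helper for illustration; the order of `expected` is kept.
    """
    root = Path(testset_dir)
    return [name for name in expected if not (root / name).is_dir()]
```

Calling `missing_subdirs("/path/to/testset/dir")` returns an empty list when all of the folders listed above are present, and the names of the absent ones otherwise.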

The annotations used to generate images with text-to-image models belong to the COCO Consortium and are licensed under a Creative Commons Attribution 4.0 License (https://cocodataset.org/#termsofuse).

If you plan to use the dataset, please cite our paper "On the detection of synthetic images generated by diffusion models".

For training using ProGAN images, we used the training set provided by "CNN-generated images are surprisingly easy to spot...for now".

For training using Latent Diffusion images, we generated 200K fake images, while the 200K real images come from two public datasets, COCO - Common Objects in Context and LSUN - Large-scale Scene Understanding. The fake images and the lists of used real images can be downloaded here. If you plan to use the dataset, please cite our paper "On the detection of synthetic images generated by diffusion models".

This repository also provides a Python script that applies to each image the processing outlined in the CSV file.

The code to test the networks on the provided images is also included.

The network weights can be downloaded from the following link.

To launch the scripts, create a conda environment using the enviroment.yml provided.

The commands can be launched as follows:

To generate the images modified according to the details contained in the csv file, launch the script as follows:

python csv_operations.py --data_dir /path/to/testset/dir --out_dir /path/to/output/dir --csv_file /path/to/csv/file

To calculate the outputs of each model, launch the script as shown below:

python main.py --data_dir /path/to/testset/dir --out_dir /path/to/output/dir --csv_file /path/to/csv/file

The output CSV contains the logit values provided by the networks. An image is classified as fake if its logit value is positive. Finally, to generate the CSV files containing the accuracies and AUCs calculated per detection method and per generator architecture, launch the last script as follows:

python metrics_evaluations.py --data_dir /path/to/testset/dir --out_dir /path/to/output/dir
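To make the logit convention concrete, the sketch below applies the "positive logit means fake" rule and computes an accuracy and an AUC from a list of logits. It is an illustration only and is not taken from metrics_evaluations.py: the function names are hypothetical, the labels are assumed to be 1 for synthetic and 0 for real, and plain (unbalanced) accuracy is an assumption about what is being measured.

```python
def is_fake(logit):
    """Decision rule stated above: a positive logit means 'fake'."""
    return logit > 0.0


def accuracy(logits, labels):
    """Fraction of images whose thresholded logit matches the label.

    labels: 1 for synthetic, 0 for real (assumed encoding).
    """
    correct = sum(int(is_fake(z)) == y for z, y in zip(logits, labels))
    return correct / len(labels)


def auc(logits, labels):
    """Area under the ROC curve by pairwise comparison (ties count 0.5).

    Equals the probability that a random fake image receives a higher
    logit than a random real one. Quadratic in the number of images,
    so suitable only for small illustrative inputs.
    """
    fake = [z for z, y in zip(logits, labels) if y == 1]
    real = [z for z, y in zip(logits, labels) if y == 0]
    if not fake or not real:
        raise ValueError("AUC needs at least one real and one fake image")
    wins = 0.0
    for f in fake:
        for r in real:
            if f > r:
                wins += 1.0
            elif f == r:
                wins += 0.5
    return wins / (len(fake) * len(real))


# Toy values, not results from the paper.
logits = [2.1, -0.3, 0.4, -1.7]
labels = [1, 1, 0, 0]
print(accuracy(logits, labels))  # 0.5
print(auc(logits, labels))       # 0.75
```

Unlike accuracy, the AUC does not depend on the zero threshold, which is why a detector can rank images well (high AUC) and still have low accuracy when its logits are shifted.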

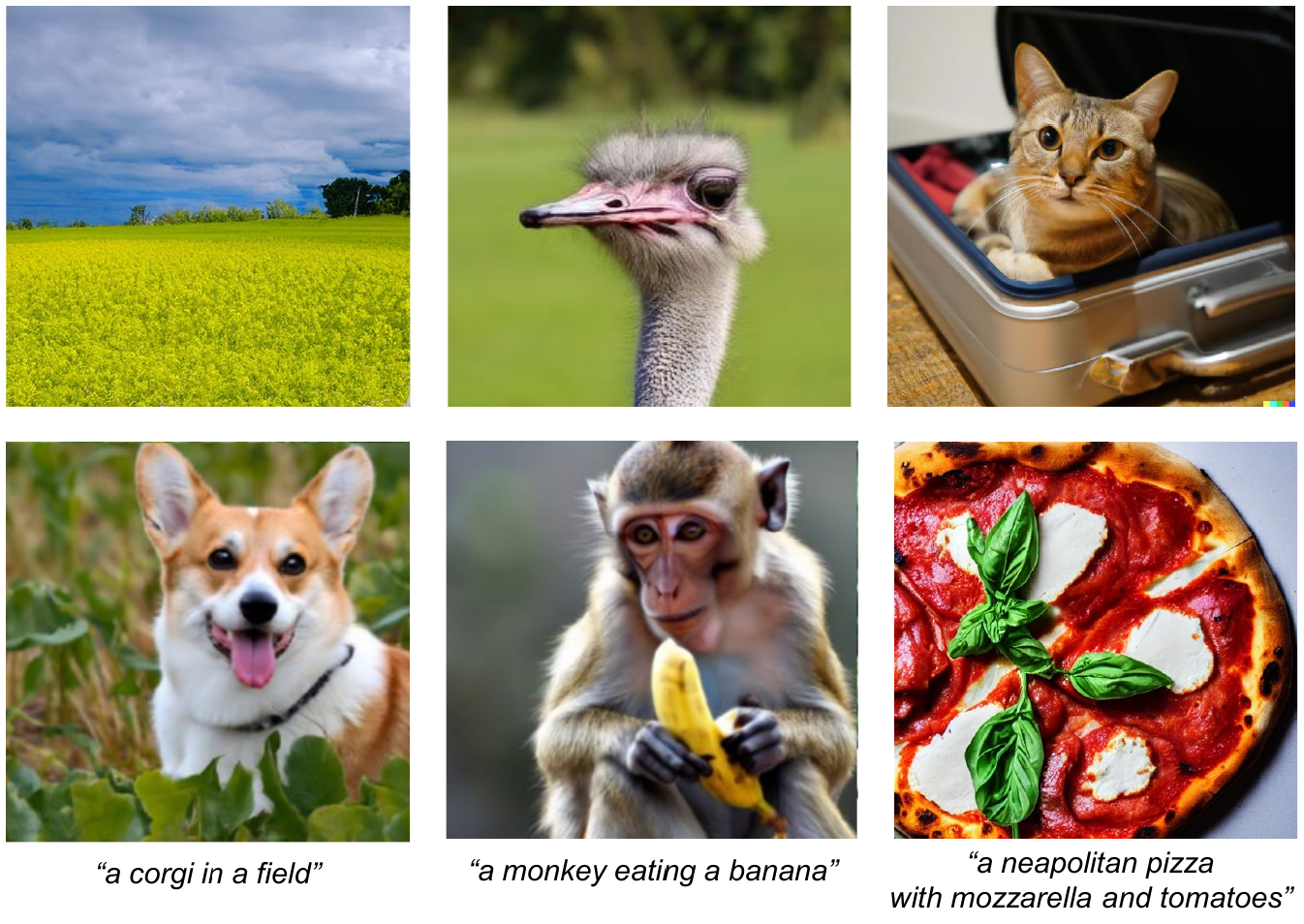

Over the past decade, there has been tremendous progress in creating synthetic media, mainly thanks to the development of powerful methods based on generative adversarial networks (GAN). Very recently, methods based on diffusion models (DM) have been gaining the spotlight. In addition to providing an impressive level of photorealism, they enable the creation of text-based visual content, opening up new and exciting opportunities in many different application fields, from arts to video games. On the other hand, this property is an additional asset in the hands of malicious users, who can generate and distribute fake media perfectly adapted to their attacks, posing new challenges to the media forensic community. With this work, we seek to understand how difficult it is to distinguish synthetic images generated by diffusion models from pristine ones and whether current state-of-the-art detectors are suitable for the task. To this end, first we expose the forensics traces left by diffusion models, then study how current detectors, developed for GAN-generated images, perform on these new synthetic images, especially in challenging social-network scenarios involving image compression and resizing.

The license of the code can be found in the LICENSE.md file.

@InProceedings{Corvi_2023_ICASSP,

author={Corvi, Riccardo and Cozzolino, Davide and Zingarini, Giada and Poggi, Giovanni and Nagano, Koki and Verdoliva, Luisa},

title={On The Detection of Synthetic Images Generated by Diffusion Models},

booktitle={IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

year={2023},

pages={1-5},

doi={10.1109/ICASSP49357.2023.10095167}

}