IFQA - Official PyTorch Implementation [Project Page]

IFQA: Interpretable Face Quality Assessment

Byungho Jo, Donghyeon Cho, In Kyu Park, Sungeun Hong

In IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) 2023

Paper: WACV Proceedings

Video: YouTube

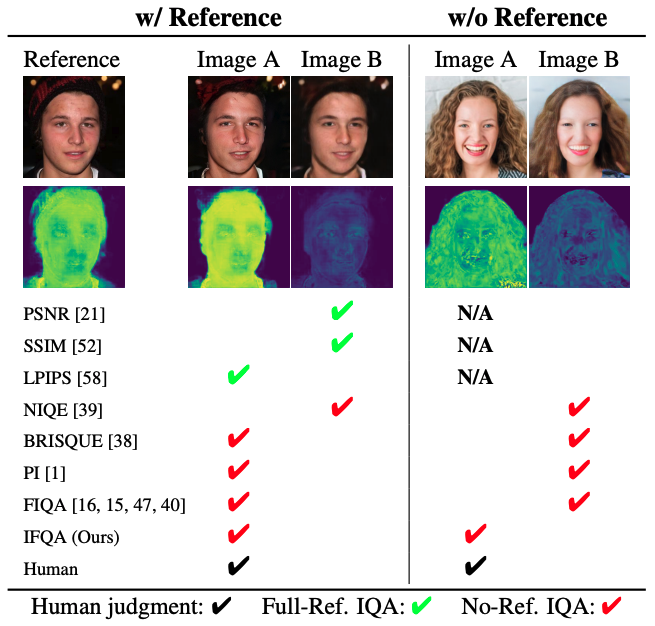

Abstract: Existing face restoration models have relied on general assessment metrics that do not consider the characteristics of facial regions. Recent works have therefore assessed their methods using human studies, which is not scalable and involves significant effort. This paper proposes a novel face-centric metric based on an adversarial framework where a generator simulates face restoration and a discriminator assesses image quality. Specifically, our per-pixel discriminator enables interpretable evaluation that cannot be provided by traditional metrics. Moreover, our metric emphasizes facial primary regions considering that even minor changes to the eyes, nose, and mouth significantly affect human cognition. Our face-oriented metric consistently surpasses existing general or facial image quality assessment metrics by impressive margins. We demonstrate the generalizability of the proposed strategy in various architectural designs and challenging scenarios. Interestingly, we find that our IFQA can lead to performance improvement as an objective function.

- ViT-based Model: Google Drive

- CNN-based Model: Google Drive

Place the downloaded weight files in the following directory:

    IFQA/
    ├── weights/
    │   ├── IFQA_Metric.pth
    │   └── IFQA++_Metric.pth

- OS: Windows/Ubuntu

- 64-bit Python 3.7

- PyTorch 1.7.0 (or later).

- Albumentations.

    pip install -r requirements.txt

IFQA is designed to evaluate the realness of faces. It produces a per-pixel score map, and the final score is the average of that map.
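The averaging step can be sketched as follows. This is a minimal illustration rather than the repository's own code: the function name `score_from_map` and the toy 4x4 score map are hypothetical, and NumPy stands in for the PyTorch tensors the model would actually produce.

```python
import numpy as np


def score_from_map(score_map: np.ndarray) -> float:
    """Reduce a per-pixel score map (H x W) to one scalar by averaging."""
    if score_map.ndim != 2:
        raise ValueError("expected a 2-D (H x W) score map")
    return float(score_map.mean())


# Toy 4x4 score map (illustrative values only, not real model output).
toy_map = np.array([
    [0.9, 0.8, 0.8, 0.9],
    [0.7, 0.2, 0.3, 0.7],
    [0.7, 0.3, 0.2, 0.7],
    [0.9, 0.8, 0.8, 0.9],
])

final_score = score_from_map(toy_map)
print(f"final score: {final_score:.4f}")
```

Keeping the map alongside the scalar preserves the per-pixel information that the averaging discards, which is what allows the result to be inspected region by region.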

You can produce quality scores using test.py. For example:

    # A single face image input.
    python test.py --path=./docs/00021_Blurred.png

    # All images within a directory.
    python test.py --path=./docs

We thank Eunkyung Jo for helpful feedback on human study design and Jaejun Yoo for constructive comments on various experimental protocols.